68 posts tagged “machine-learning”

2022

To make the analogy explicit, in Software 1.0, human-engineered source code (e.g. some .cpp files) is compiled into a binary that does useful work. In Software 2.0 most often the source code comprises 1) the dataset that defines the desirable behavior and 2) the neural net architecture that gives the rough skeleton of the code, but with many details (the weights) to be filled in. The process of training the neural network compiles the dataset into the binary — the final neural network. In most practical applications today, the neural net architectures and the training systems are increasingly standardized into a commodity, so most of the active “software development” takes the form of curating, growing, massaging and cleaning labeled datasets.

Stable Diffusion Public Release (via) New AI just dropped. Stable Diffusion is similar to DALL-E, but completely open source and with a CC0 license applied to everything it generates. I have a Twitter thread (the via) link of comparisons I’ve made between its output and my previous DALL-E experiments. The announcement buries the lede somewhat: to try it out, visit beta.dreamstudio.ai—which you can use for free at the moment, but it’s unclear to me how billing is supposed to work.

storysniffer (via) Ben Welsh built a small Python library that guesses if a URL points to an article on a news website, or if it’s more likely to be a category page or /about page or similar. I really like this as an example of what you can do with a tiny machine learning model: the model is bundled as a ~3MB pickle file as part of the package, and the repository includes the Jupyter notebook that was used to train it.

First impressions of DALL-E, generating images from text

I made it off the DALL-E waiting list a few days ago and I’ve been having an enormous amount of fun experimenting with it. Here are some notes on what I’ve learned so far (and a bunch of example images too).

[... 2,102 words]How Imagen Actually Works. Imagen is Google’s new text-to-image model, similar to (but possibly even more effective than) DALL-E. This article is the clearest explanation I’ve seen of how Imagen works: it uses Google’s existing T5 text encoder to convert the input sentence into an encoding that captures the semantic meaning of the sentence (including things like items being described as being on top of other items), then uses a trained diffusion model to generate a 64x64 image. That image is passed through two super-res models to increase the resolution to the final 1024x1024 output.

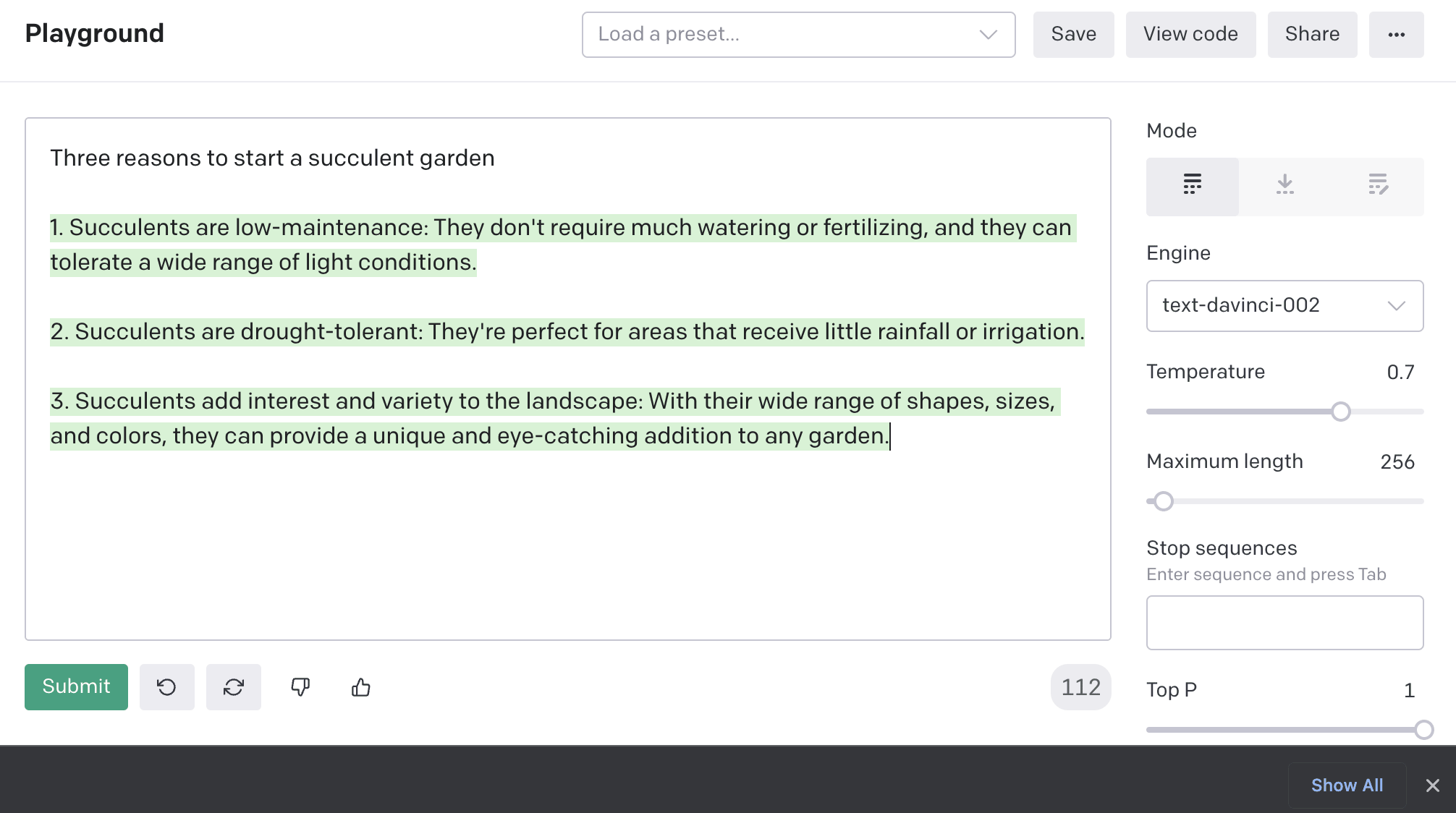

How to use the GPT-3 language model

I ran a Twitter poll the other day asking if people had tried GPT-3 and why or why not. The winning option, by quite a long way, was “No, I don’t know how to”. So here’s how to try it out, for free, without needing to write any code.

[... 838 words]2021

The art of asking nicely (via) CLIP+VQGAN Is a GAN that generates images based on some text input—you can run it on Google Collab notebooks, there are instructions linked at the bottom of this post. Janelle Shane of AI Weirdness explores tricks for getting the best results out of it for “a herd of sheep grazing on a lush green hillside”—various modifiers like “amazing awesome and epic” produce better images, but the one with the biggest impact, quite upsettingly, is “ultra high definition free desktop wallpaper”.

DALL·E: Creating Images from Text (via) “DALL·E is a 12-billion parameter version of GPT-3 trained to generate images from text descriptions, using a dataset of text–image pairs.”. The examples in this paper are astonishing—“an illustration of a baby daikon radish in a tutu walking a dog” generates exactly that.

2020

How GPT3 Works—Visualizations and Animations. Nice essay full of custom animations illustrating how GPT-3 actually works.

When I was curating my generated tweets, I estimated 30-40% of the tweets were usable comedically, a massive improvement over the 5-10% usability from my GPT-2 tweet generation. However, a 30-40% success rate implies a 60-70% failure rate, which is patently unsuitable for a production application.

Tempering Expectations for GPT-3 and OpenAI’s API. Insightful commentary on GPT-3 (which is producing some ridiculously cool demos at the moment thanks to the invite-only OpenAI API) from Max Woolf.

When data is messy. I love this story: a neural network trained on images was asked what the most significant pixels in pictures of tench (a kind of fish) were: it returned pictures of fingers on a green background, because most of the tench photos it had seen were fisherfolk showing off their catch.

Data Science is a lot like Harry Potter, except there's no magic, it's just math, and instead of a sorting hat you just sort the data with a Python script.

— GPT-3, shepherded by Max Woolf

If you have to choose between engineering and ML, choose engineering. It’s easier for great engineers to pick up ML knowledge, but it’s a lot harder for ML experts to become great engineers.

Deep learning isn’t hard anymore. This article does a great job of explaining how transfer learning is unlocking a new wave of innovation around deep learning. Previously if you wanted to train a model you needed vast amounts if data and thousands of dollars of compute time. Thanks to transfer learning you can now take an existing model (such as GPT2) and train something useful on top of it that’s specific to a new domain in just minutes it hours, with only a few hundred or a few thousand new labeled samples.

A visual introduction to machine learning. Beautiful interactive essay explaining how a decision tree machine learning module is constructed, and using that to illustrate the concept of overfitting. This is one of the best explanations of machine learning fundamentals I’ve seen anywhere.

2019

Machine Learning on Mobile and at the Edge: 2019 industry year-in-review (via) This is a fantastic detailed overview of advances made in the field of machine learning on the edge (primarily on mobile devices) over 2019. I’m really excited about this trend: I love the improved privacy implications of running models on my phone without uploading data to a server, and it’s great to see techniques like Federated Learning (from Google Labs) which enable devices to privately train models in a distributed way without having to upload their training data.

We don't like limits on discrimination and lending, so we're gonna use machine learning, which is a form of money laundering for bias, a way to blame mathematical algorithms for desires to simply avoid rules that everybody else has to play by in this industry.

Exploring Neural Networks with Activation Atlases. Another promising attempt at visualizing what’s going on inside a neural network.

Practical Deep Learning for Coders 2019 (via) The deep learning evening course I took a few months ago has now been shared online in full, and it’s outstanding. “After the first lesson you’ll be able to train a state-of-the-art image classification model on your own data”—can confirm: after just the first lesson I built a bobcat v.s. cougar classifier using photos from iNaturalist.

The biggest thing I learned from the course is how powerful transfer learning is. I used to think you needed a huge amount of data to get good results from deep learning. That’s no longer true: you can take an existing model (eg ResNet for image classification) and train on top of it.

ResNet can classify images as 1,000 classes (house, cat, etc)—training an extra few hundred images of e.g. Bobcats vs Cougars only takes a couple of minutes on a GPU and can give you crazily accurate results.

It works because the pre-trained model can already pick up really subtle details—fur patterns, ear shapes etc—so you only need to train a few more layers on it for it to be able to classify against the patterns in your new set of training images.

And this doesnt just work for image classification! Natural language processing benefits from transfer learning too: take an existing model trained on the entire corpus of Wikipedia (so it knows patterns from sentence structures) and you can build IMDB sentiment analysis on top. That’s in lesson 4.

2018

Without deep understanding of the basic tools needed to build and train new algorithms, he says, researchers creating AIs resort to hearsay, like medieval alchemists. "People gravitate around cargo-cult practices," relying on "folklore and magic spells," adds François Chollet, a computer scientist at Google in Mountain View, California.

jantic/DeOldify (via) “A Deep Learning based project for colorizing and restoring old images”. Delightful (and well documented) project that uses a Self-Attention Generative Adversarial Network to colorize old black and white photos, with extremely impressive results. Built on an older version of the fastai library, and trained by running for several days on a 1080TI graphics card.

Reinforcement Learning with Prediction-Based Rewards (via) Fascinating result: by teaching a reinforcement learning agent that plays video games to optimize for "unfamiliar states" - states where it cannot predict what will happen next - the agent does a much better job of playing some games.

... for the first time exceeds average human performance on Montezuma’s Revenge. RND achieves state-of-the-art performance, periodically finds all 24 rooms and solves the first level without using demonstrations or having access to the underlying state of the game.

Automatically playing science communication games with transfer learning and fastai

This weekend was the 9th annual Science Hack Day San Francisco, which was also the 100th Science Hack Day held worldwide.

[... 1,174 words]Notebook: How to build a Teachable Machine with TensorFlow.js (via) This is a really cool Observable notebook. It explains how to build image classification that runs in the browser on top of Tensorflow.js, and includes interactive demos that hook into your webcam and let you hold up items and use them to train a classifier. Since it’s built on Observable every single underlying line of source code is available to browse as part of the essay.

Text Embedding Models Contain Bias. Here’s Why That Matters (via) Excellent discussion from the Google AI team of the enormous challenge of building machine learning models without accidentally encoding harmful bias in a way that cannot be easily detected.

Suppose a runaway success novel/tv/film franchise has "Bob" as the evil bad guy. Reams of fanfictions are written with "Bob" doing horrible things. People endlessly talk about how bad "Bob" is on twitter. Even the New York times writes about Bob latest depredations, when he plays off current events.

Your name is Bob. Suddenly all the AIs in the world associate your name with evil, death, killing, lying, stealing, fraud, and incest. AIs silently, slightly ding your essays, loan applications, uber driver applications, and everything you write online. And no one believes it's really happening. Or the powers that be think it's just a little accidental damage because the AI overall is still, overall doing a great job of sentiment analysis and fraud detection.

BearID: Bear Face Detector. Comprehensive tutorial on building a computer vision system to identify faces of bears, using dlib and the Histogram of Oriented Gradients (HOG) technique. Bears!

A Promenade of PyTorch. Useful overview of the PyTorch machine learning library from Facebook AI Research described as “a Python library enabling GPU-accelerated tensor computation”. Similar to TensorFlow, but where TensorFlow requires you to explicitly construct an execution graph PyTorch instead lets you write regular Python code (if statements, for loops etc) which PyTorch then uses to construct the execution graph for you.

6M observations total! Where has iNaturalist grown in 80 days with 1 million new observations? Citizen science app iNaturalist is seeing explosive growth at the moment—they’ve been around for nearly a decade but 1/6 of the observations posted to the site were added in just the past few months. Having tried the latest version of their iPhone app it’s easy to see why: snap a photo of some nature and upload it to the app and it will use surprisingly effective machine learning to suggest the genus or even the individual species. Submit the observation and within a few minutes other iNaturalist community members will confirm the identification or suggest a correction. It’s brilliantly well executed and an utter delight to use.