14 posts tagged “data-science”

2021

Many of you here today are toolbuilders who help people work with data. Rather than presuming that those using your tools are clear-eyed about their data, how can you build features and methods that ensure people know the limits of their data and work with them responsibly? Your tools are not neutral. Neither is the data that your tools help analyze. How can you build tools that invite responsible data use and make visible when data is being manipulated? How can you help build tools for responsible governance?

Cookiecutter Data Science (via) Some really solid thinking in this documentation for the DrivenData cookiecutter template. They emphasize designing data science projects for repeatability, such that just the src/ and data/ folders can be used to recreate all of the other analysis from scratch. I like the suggestion to give each project a dedicated S3 bucket for keeping immutable copies of the original raw data that might be too large for GitHub.

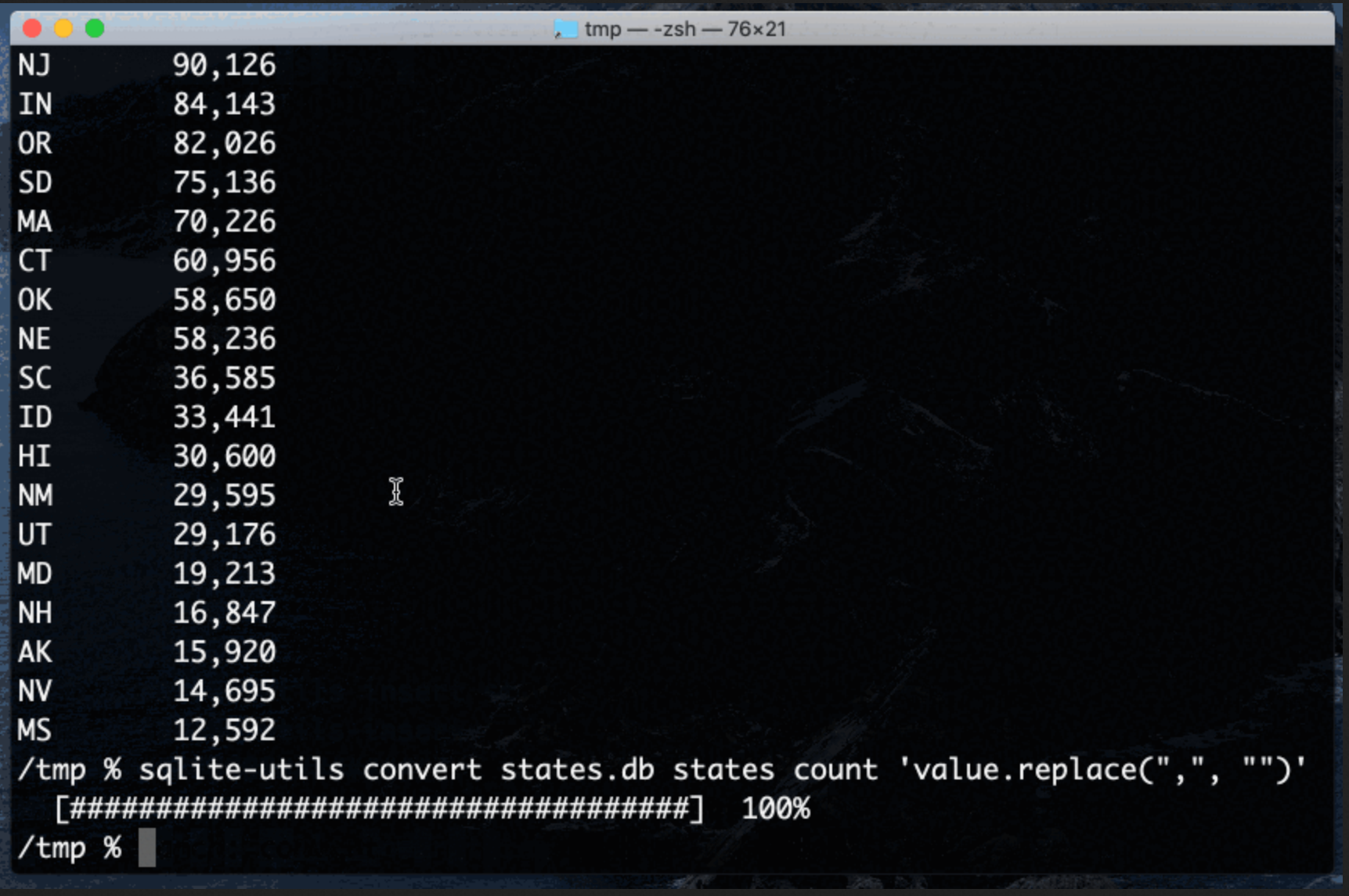

Apply conversion functions to data in SQLite columns with the sqlite-utils CLI tool

Earlier this week I released sqlite-utils 3.14 with a powerful new command-line tool: sqlite-utils convert, which applies a conversion function to data stored in a SQLite column.

The data team: a short story (via) Erik Bernhardsson’s fictional account (“I guess I should really call this a parable”) of a new data team leader successfully growing their team and building a data-first culture in a medium-sized technology company. His depiction of the initial state of the company (data in many different places, frustrated ML researchers who can’t get their research into production, confusion over what the data team is actually for) definitely rings true to me.

Group thousands of similar spreadsheet text cells in seconds (via) Luke Whyte explains how to efficiently group similar text columns in a table (Walmart and Wal-mart for example) using a clever combination of TF/IDF, sparse matrices and cosine similarity. Includes the clearest explanation of cosine similarity for text I’ve seen—and Luke wrote a Python library, textpack, that implements the described pattern.

What I’ve learned about data recently (via) Laurie Voss talks about the structure of data teams, based on his experience at npm and more recently Netlify. He suggests that Airflow and dbt are the data world’s equivalent of frameworks like Rails: opinionated tools that solve core problems and which mean that you can now hire people who understand how your data pipelines work on their first day on the job.

2020

Defining Data Intuition. Ryan T. Harter, Principal Data Scientist at Mozilla defines data intuition as “a resilience to misleading data and analyses”. He also introduces the term “data-stink” as a similar term to “code smell”, where your intuition should lead you to distrust analysis that exhibits certain characteristics without first digging in further. I strongly believe that data reports should include a link the raw methodology and numbers to ensure they can be more easily vetted—so that data-stink can be investigated with the least amount of resistance.

Announcing the Consortium for Python Data API Standards (via) Interesting effort to unify the fragmented DataFrame API ecosystem, where increasing numbers of libraries offer APIs inspired by Pandas that imitate each other but aren’t 100% compatible. The announcement includes some very clever code to support the effort: custom tooling to compare the existing APIs, and an ingenious GitHub Actions setup to run traces (via sys.settrace), derive type signatures and commit those generated signatures back to a repository.

Data Science is a lot like Harry Potter, except there's no magic, it's just math, and instead of a sorting hat you just sort the data with a Python script.

— GPT-3, shepherded by Max Woolf

2019

Data science is different now (via) Detailed examination of the current state of the job market for data science. Boot camps and university courses have produced a growing volume of junior data scientists seeking work, but the job market is much more competitive than many expected—especially for those without prior experience. Meanwhile the job itself is much more about data cleanup and software engineering skills: machine learning models and applied statistics end up being a small portion of the actual work.

2018

Things About Real-World Data Science Not Discussed In MOOCs and Thought Pieces (via) Really good article, pointing out that carefully optimizing machine learning models is only a small part of the day-to-day work of a data scientist: cleaning up data, building dashboards, shipping models to production, deciding on trade-offs between performance and production and considering the product design and ethical implementations of what you are doing make up a much larger portion of the job.

Serverless for data scientists (via) Slides and accompanying notes from a talk by Mike Lee Williams at PyBay, providing an overview of Zappa and diving a bit more deeply into pywren, which makes it trivial to parallelize a function across a set of AWS lambda instances (serverless Python map() execution essentially). I really like this format for sharing presentations—I used something similar for my own PyBay talk.

Computational and Inferential Thinking: The Foundations of Data Science. Free online textbook written for the UC Berkeley Foundations of Data Science class. The examples are all provided as Jupyter notebooks, using the mybinder web application to allow students to launch interactive notebooks for any of the examples without having to install any software on their own machines.

Beginner’s Guide to Jupyter Notebooks for Data Science (with Tips, Tricks!) (via) If you haven’t yet got on the Jupyter notebooks bandwagon this should help. It’s the single biggest productivity improvement I’ve made to my workflow in a very long time.