41 posts tagged “law”

2025

On Monday, this Court entered an order requiring OpenAI to hand over to the New York Times and its co-plaintiffs 20 million ChatGPT user conversations [...]

OpenAI is unaware of any court ordering wholesale production of personal information at this scale. This sets a dangerous precedent: it suggests that anyone who files a lawsuit against an AI company can demand production of tens of millions of conversations without first narrowing for relevance. This is not how discovery works in other cases: courts do not allow plaintiffs suing Google to dig through the private emails of tens of millions of Gmail users irrespective of their relevance. And it is not how discovery should work for generative AI tools either.

— Nov 12th letter from OpenAI to Judge Ona T. Wang, re: OpenAI, Inc., Copyright Infringement Litigation

OpenAI no longer has to preserve all of its ChatGPT data, with some exceptions (via) This is a relief:

Federal judge Ona T. Wang filed a new order on October 9 that frees OpenAI of an obligation to "preserve and segregate all output log data that would otherwise be deleted on a going forward basis."

I wrote about this in June. OpenAI were compelled by a court order to preserve all output, even from private chats, in case it became relevant to the ongoing New York Times lawsuit.

Here are those "some exceptions":

The judge in the case said that any chat logs already saved under the previous order would still be accessible and that OpenAI is required to hold on to any data related to ChatGPT accounts that have been flagged by the NYT.

Pro se litigants [people representing themselves in court without a lawyer] account for the majority of the cases in the United States where a party submitted a court filing containing AI hallucinations. In a country where legal representation is unaffordable for most people, it is no wonder that pro se litigants are depending on free or low-cost AI tools. But it is a scandal that so many have been betrayed by them, to the detriment of the cases they are litigating all on their own.

— Riana Pfefferkorn, analyzing the AI Hallucination Cases database for CIS at Stanford Law

Anthropic to pay $1.5 billion to authors in landmark AI settlement. I wrote about the details of this case when it was found that Anthropic's training on book content was fair use, but they needed to have purchased individual copies of the books first... and they had seeded their collection with pirated ebooks from Books3, PiLiMi and LibGen.

The remaining open question from that case was the penalty for pirating those 500,000 books. That question has now been resolved in a settlement:

Anthropic has reached an agreement to pay “at least” a staggering $1.5 billion, plus interest, to authors to settle its class-action lawsuit. The amount breaks down to smaller payouts expected to be approximately $3,000 per book or work.

It's wild to me that a $1.5 billion settlement can feel like a win for Anthropic, but given that it's undisputed that they downloaded pirated books (as did Meta and likely many other research teams) the maximum allowed penalty was $150,000 per book, so $3,000 per book is actually a significant discount.
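Here's a rough sketch of that arithmetic in Python. The three figures come from the quoted reporting and the paragraph above. The "implied works" count and the worst-case total are my own back-of-envelope derivations, assuming every work drew the same payout, and are not numbers from the settlement itself.

```python
# Figures quoted above; everything derived from them is a back-of-envelope estimate.
SETTLEMENT_TOTAL = 1_500_000_000   # "at least" $1.5 billion
PER_WORK_PAYOUT = 3_000            # approximately $3,000 per book or work
STATUTORY_MAX_PER_WORK = 150_000   # maximum allowed penalty per book


def implied_works(total: int, per_work: int) -> int:
    """Number of works implied by dividing the total by the per-work payout."""
    return total // per_work


works = implied_works(SETTLEMENT_TOTAL, PER_WORK_PAYOUT)

# Hypothetical worst case: every work penalised at the statutory maximum.
max_exposure = works * STATUTORY_MAX_PER_WORK

# How many times smaller the per-work payout is than the maximum.
discount_factor = STATUTORY_MAX_PER_WORK // PER_WORK_PAYOUT

print(f"Implied works: {works:,}")
print(f"Exposure at the statutory maximum: ${max_exposure:,}")
print(f"Per-work payout is 1/{discount_factor} of the maximum")
```

The implied count lines up with the 500,000 books mentioned earlier. The worst-case figure is purely illustrative, since nothing here says a court would have awarded the maximum for every work.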

As far as I can tell this case sets a precedent for Anthropic's more recent approach of buying millions of (mostly used) physical books and destructively scanning them for training as covered by "fair use". I'm not sure if other in-flight legal cases will find differently.

To be clear: it appears it is legal, at least in the USA, to buy a used copy of a physical book (used = the author gets nothing), chop the spine off, scan the pages, discard the paper copy and then train on the scanned content. The transformation from paper to scan is "fair use".

If this does hold it's going to be a great time to be a bulk retailer of used books!

Update: The official website for the class action lawsuit is www.anthropiccopyrightsettlement.com:

In the coming weeks, and if the court preliminarily approves the settlement, the website will provide to find a full and easily searchable listing of all works covered by the settlement.

In the meantime the Atlantic have a search engine to see if your work was included in LibGen, one of the pirated book sources involved in this case.

I had a look and it turns out the book I co-authored with 6 other people back in 2007, The Art & Science of JavaScript, is in there, so maybe I'm due for 1/7th of one of those $3,000 settlements! (Update 4th October: you can now search for affected titles and mine isn't in there.)

Update 2: Here's an interesting detail from the Washington Post story about the settlement:

Anthropic said in the settlement that the specific digital copies of books covered by the agreement were not used in the training of its commercially released AI models.

Update 3: I'm not confident that destroying the scanned books is a hard requirement here - I got that impression from this section of the summary judgment in June:

Here, every purchased print copy was copied in order to save storage space and to enable searchability as a digital copy. The print original was destroyed. One replaced the other. And, there is no evidence that the new, digital copy was shown, shared, or sold outside the company. This use was even more clearly transformative than those in Texaco, Google, and Sony Betamax (where the number of copies went up by at least one), and, of course, more transformative than those uses rejected in Napster (where the number went up by “millions” of copies shared for free with others).

gov.uscourts.dcd.223205.1436.0_1.pdf (via) Here's the 230 page PDF ruling on the 2023 United States v. Google LLC federal antitrust case - the case that could have resulted in Google selling off Chrome and cutting most of Mozilla's funding.

I made it through the first dozen pages - it's actually quite readable.

It opens with a clear summary of the case so far, bold highlights mine:

Last year, this court ruled that Defendant Google LLC had violated Section 2 of the Sherman Act: “Google is a monopolist, and it has acted as one to maintain its monopoly.” The court found that, for more than a decade, Google had entered into distribution agreements with browser developers, original equipment manufacturers, and wireless carriers to be the out-of-the box, default general search engine (“GSE”) at key search access points. These access points were the most efficient channels for distributing a GSE, and Google paid billions to lock them up. The agreements harmed competition. They prevented rivals from accumulating the queries and associated data, or scale, to effectively compete and discouraged investment and entry into the market. And they enabled Google to earn monopoly profits from its search text ads, to amass an unparalleled volume of scale to improve its search product, and to remain the default GSE without fear of being displaced. Taken together, these agreements effectively “froze” the search ecosystem, resulting in markets in which Google has “no true competitor.”

There's an interesting generative AI twist: when the case was first argued in 2023 generative AI wasn't an influential issue, but more recently Google seem to be arguing that it is an existential threat that they need to be able to take on without additional hindrance:

The emergence of GenAI changed the course of this case. No witness at the liability trial testified that GenAI products posed a near-term threat to GSEs. The very first witness at the remedies hearing, by contrast, placed GenAI front and center as a nascent competitive threat. These remedies proceedings thus have been as much about promoting competition among GSEs as ensuring that Google’s dominance in search does not carry over into the GenAI space. Many of Plaintiffs’ proposed remedies are crafted with that latter objective in mind.

I liked this note about the court's challenges in issuing effective remedies:

Notwithstanding this power, courts must approach the task of crafting remedies with a healthy dose of humility. This court has done so. It has no expertise in the business of GSEs, the buying and selling of search text ads, or the engineering of GenAI technologies. And, unlike the typical case where the court’s job is to resolve a dispute based on historic facts, here the court is asked to gaze into a crystal ball and look to the future. Not exactly a judge’s forte.

On to the remedies. These ones looked particularly important to me:

- Google will be barred from entering or maintaining any exclusive contract relating to the distribution of Google Search, Chrome, Google Assistant, and the Gemini app. [...]

- Google will not be required to divest Chrome; nor will the court include a contingent divestiture of the Android operating system in the final judgment. Plaintiffs overreached in seeking forced divesture of these key assets, which Google did not use to effect any illegal restraints. [...]

I guess Perplexity won't be buying Chrome then!

- Google will not be barred from making payments or offering other consideration to distribution partners for preloading or placement of Google Search, Chrome, or its GenAI products. Cutting off payments from Google almost certainly will impose substantial —in some cases, crippling— downstream harms to distribution partners, related markets, and consumers, which counsels against a broad payment ban.

That looks like a huge sigh of relief for Mozilla, who were at risk of losing a sizable portion of their income if Google's search distribution revenue were to be cut off.

NERD HARDER! is the answer every time a politician gets a technological idée-fixe about how to solve a social problem by creating a technology that can't exist. It's the answer that EU politicians who backed the catastrophic proposal to require copyright filters for all user-generated content came up with, when faced with objections that these filters would block billions of legitimate acts of speech [...]

When politicians seize on a technological impossibility as a technological necessity, they flail about and desperately latch onto scholarly work that they can brandish as evidence that their idea could be accomplished. [...]

That's just happened, and in relation to one of the scariest, most destructive NERD HARDER! tech policies ever to be assayed (a stiff competition). I'm talking about the UK Online Safety Act, which imposes a duty on websites to verify the age of people they communicate with before serving them anything that could be construed as child-inappropriate (a category that includes, e.g., much of Wikipedia)

— Cory Doctorow, "Privacy preserving age verification" is bullshit

common-pile/caselaw_access_project (via) Enormous openly licensed (I believe this is almost all public domain) training dataset of US legal cases:

This dataset contains 6.7 million cases from the Caselaw Access Project and Court Listener. The Caselaw Access Project consists of nearly 40 million pages of U.S. federal and state court decisions and judges’ opinions from the last 365 years. In addition, Court Listener adds over 900 thousand cases scraped from 479 courts.

It's distributed as gzipped newline-delimited JSON.

This was gathered as part of the Common Pile and used as part of the training dataset for the Comma family of LLMs.
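Gzipped newline-delimited JSON can be streamed one record at a time without unpacking the whole file first. Here's a minimal sketch using only the standard library. The `id` and `text` field names and the sample rows are made up for illustration; I haven't checked the dataset's actual schema.

```python
import gzip
import json
import os
import tempfile
from typing import Iterator


def iter_records(path: str) -> Iterator[dict]:
    """Yield one parsed JSON object per non-empty line of a gzipped NDJSON file."""
    with gzip.open(path, "rt", encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:
                yield json.loads(line)


def write_sample(path: str) -> None:
    """Write a tiny made-up file so the reader can be tried without a download."""
    rows = [
        {"id": "example-1", "text": "First opinion"},   # hypothetical fields
        {"id": "example-2", "text": "Second opinion"},
    ]
    with gzip.open(path, "wt", encoding="utf-8") as handle:
        for row in rows:
            handle.write(json.dumps(row) + "\n")


sample_path = os.path.join(tempfile.mkdtemp(), "sample.jsonl.gz")
write_sample(sample_path)
records = list(iter_records(sample_path))
print(len(records), records[0]["id"])
```

Because `iter_records` is a generator, the same loop should work on a multi-gigabyte shard without loading it all into memory. The `list(...)` call is only there to keep the demo short.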

Trial Court Decides Case Based On AI-Hallucinated Caselaw. Joe Patrice writing for Above the Law:

[...] it was always only a matter of time before a poor litigant representing themselves fails to know enough to sniff out and flag Beavis v. Butthead and a busy or apathetic judge rubberstamps one side’s proposed order without probing the cites for verification. [...]

It finally happened with a trial judge issuing an order based off fake cases (flagged by Rob Freund). While the appellate court put a stop to the matter, the fact that it got this far should terrify everyone.

It's already listed in the AI Hallucination Cases database (now listing 168 cases, up from 116 when I first wrote about it on 25th May), which records a $2,500 monetary penalty.

Anthropic wins a major fair use victory for AI — but it’s still in trouble for stealing books. Major USA legal news for the AI industry today. Judge William Alsup released a "summary judgement" (a legal decision that results in some parts of a case skipping a trial) in a lawsuit between five authors and Anthropic concerning the use of their books in training data.

The judgement itself is a very readable 32 page PDF, and contains all sorts of interesting behind-the-scenes details about how Anthropic trained their models.

The facts of the complaint go back to the very beginning of the company. Anthropic was founded by a group of ex-OpenAI researchers in February 2021. According to the judgement:

So, in January or February 2021, another Anthropic cofounder, Ben Mann, downloaded Books3, an online library of 196,640 books that he knew had been assembled from unauthorized copies of copyrighted books — that is, pirated. Anthropic's next pirated acquisitions involved downloading distributed, reshared copies of other pirate libraries. In June 2021, Mann downloaded in this way at least five million copies of books from Library Genesis, or LibGen, which he knew had been pirated. And, in July 2022, Anthropic likewise downloaded at least two million copies of books from the Pirate Library Mirror, or PiLiMi, which Anthropic knew had been pirated.

Books3 was also listed as part of the training data for Meta's first LLaMA model!

Anthropic apparently used these sources of data to help build an internal "research library" of content that they then filtered and annotated and used in training runs.

Books turned out to be a very valuable component of the "data mix" to train strong models. By 2024 Anthropic had a new approach to collecting them: purchase and scan millions of print books!

To find a new way to get books, in February 2024, Anthropic hired the former head of partnerships for Google's book-scanning project, Tom Turvey. He was tasked with obtaining "all the books in the world" while still avoiding as much "legal/practice/business slog" as possible (Opp. Exhs. 21, 27). [...] Turvey and his team emailed major book distributors and retailers about bulk-purchasing their print copies for the AI firm's "research library" (Opp. Exh. 22 at 145; Opp. Exh. 31 at -035589). Anthropic spent many millions of dollars to purchase millions of print books, often in used condition. Then, its service providers stripped the books from their bindings, cut their pages to size, and scanned the books into digital form — discarding the paper originals. Each print book resulted in a PDF copy containing images of the scanned pages with machine-readable text (including front and back cover scans for softcover books).

The summary judgement found that these scanned books did fall under fair use, since they were transformative versions of the works and were not shared outside of the company. The downloaded ebooks did not count as fair use, and it looks like those will be the subject of a forthcoming jury trial.

Here's that section of the decision:

Before buying books for its central library, Anthropic downloaded over seven million pirated copies of books, paid nothing, and kept these pirated copies in its library even after deciding it would not use them to train its AI (at all or ever again). Authors argue Anthropic should have paid for these pirated library copies (e.g, Tr. 24–25, 65; Opp. 7, 12–13). This order agrees.

The most important aspect of this case is the question of whether training an LLM on unlicensed data counts as "fair use". The judge found that it did. The argument for why takes up several pages of the document but this seems like a key point:

Everyone reads texts, too, then writes new texts. They may need to pay for getting their hands on a text in the first instance. But to make anyone pay specifically for the use of a book each time they read it, each time they recall it from memory, each time they later draw upon it when writing new things in new ways would be unthinkable. For centuries, we have read and re-read books. We have admired, memorized, and internalized their sweeping themes, their substantive points, and their stylistic solutions to recurring writing problems.

The judge who signed this summary judgement is an interesting character: William Haskell Alsup (yes, his middle name really is Haskell) presided over jury trials for Oracle America, Inc. v. Google, Inc in 2012 and 2016 where he famously used his hobbyist BASIC programming experience to challenge claims made by lawyers in the case.

Update 6th September 2025: Anthropic settled the resulting class action lawsuit for $1.5 billion.

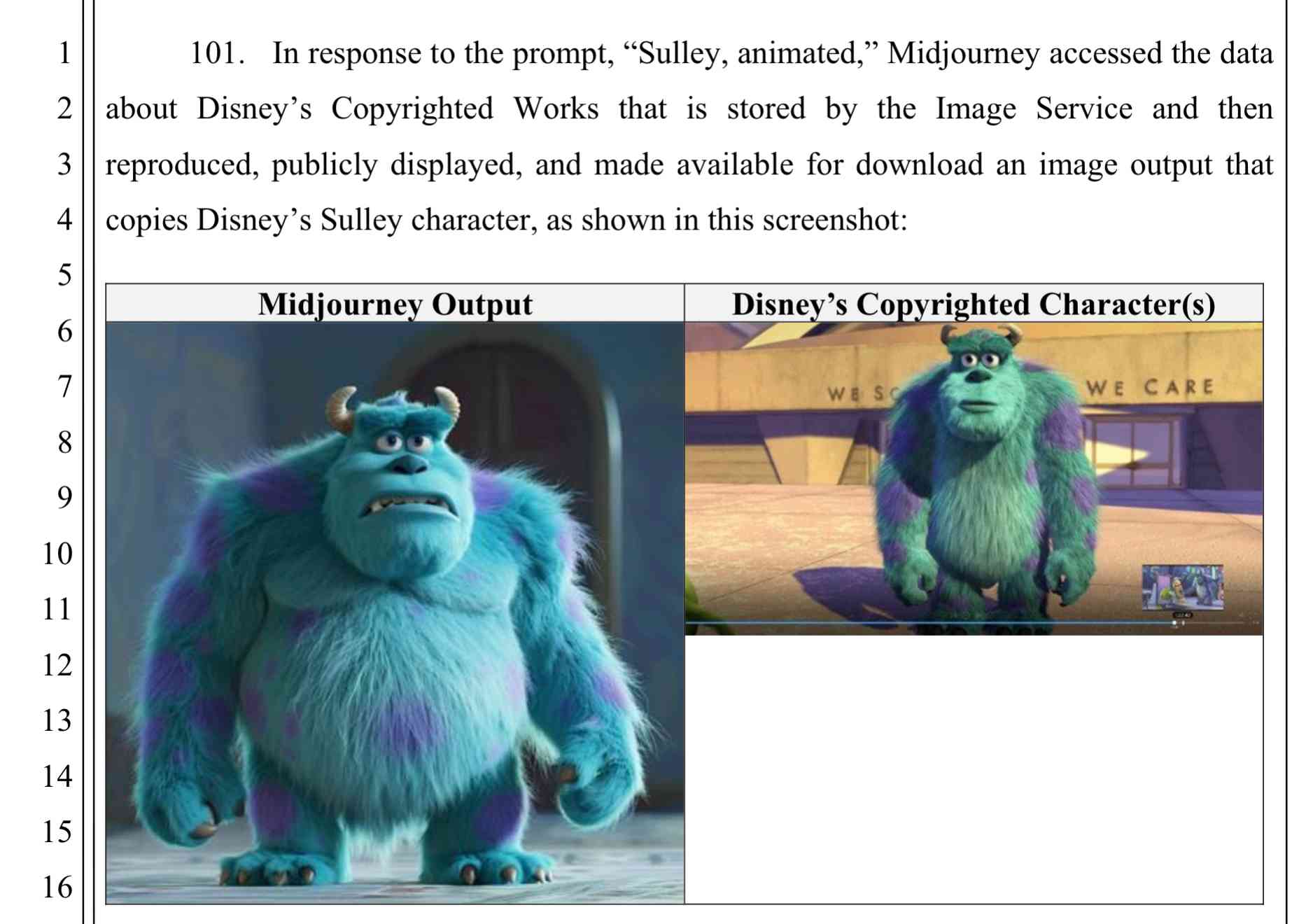

Disney and Universal Sue AI Company Midjourney for Copyright Infringement. This is a big one. It's very easy to demonstrate that Midjourney will output images of copyright protected characters (like Darth Vader or Yoda) based on a short text prompt.

There are already dozens of copyright lawsuits against AI companies winding through the US court system—including a class action lawsuit visual artists brought against Midjourney in 2023—but this is the first time major Hollywood studios have jumped into the fray.

Here's the lawsuit on Document Cloud - 110 pages, most of which are examples of supposedly infringing images.

OpenAI slams court order to save all ChatGPT logs, including deleted chats (via) This is very worrying. The New York Times v OpenAI lawsuit, now in its 17th month, includes accusations that OpenAI's models can output verbatim copies of New York Times content - both from training data and from implementations of RAG.

(This may help explain why Anthropic's Claude system prompts for their search tool emphatically demand Claude not spit out more than a short sentence of RAG-fetched search content.)

A few weeks ago the judge ordered OpenAI to start preserving the logs of all potentially relevant output - including supposedly temporary private chats and API outputs served to paying customers, which previously had a 30 day retention policy.

The May 13th court order itself is only two pages - here's the key paragraph:

Accordingly, OpenAI is NOW DIRECTED to preserve and segregate all output log data that would otherwise be deleted on a going forward basis until further order of the Court (in essence, the output log data that OpenAI has been destroying), whether such data might be deleted at a user’s request or because of “numerous privacy laws and regulations” that might require OpenAI to do so.

SO ORDERED.

That "numerous privacy laws and regulations" line refers to OpenAI's argument that this order runs counter to a whole host of existing worldwide privacy legislation. The judge here is stating that the potential need for future discovery in this case outweighs OpenAI's need to comply with those laws.

Unsurprisingly, I have seen plenty of bad faith arguments online about this along the lines of "Yeah, but that's what OpenAI really wanted to happen" - the fact that OpenAI are fighting this order runs counter to the common belief that they aggressively train models on all incoming user data no matter what promises they have made to those users.

I still see this as a massive competitive disadvantage for OpenAI, particularly when it comes to API usage. Paying customers of their APIs may well make the decision to switch to other providers who can offer retention policies that aren't subverted by this court order!

Update: Here's the official response from OpenAI: How we’re responding to The New York Times’ data demands in order to protect user privacy, including this from a short FAQ:

Is my data impacted?

- Yes, if you have a ChatGPT Free, Plus, Pro, and Teams subscription or if you use the OpenAI API (without a Zero Data Retention agreement).

- This does not impact ChatGPT Enterprise or ChatGPT Edu customers.

- This does not impact API customers who are using Zero Data Retention endpoints under our ZDR amendment.

To further clarify that point about ZDR:

You are not impacted. If you are a business customer that uses our Zero Data Retention (ZDR) API, we never retain the prompts you send or the answers we return. Because it is not stored, this court order doesn’t affect that data.

Here's a notable tweet about this situation from Sam Altman:

we have been thinking recently about the need for something like "AI privilege"; this really accelerates the need to have the conversation.

imo talking to an AI should be like talking to a lawyer or a doctor.

Update 22nd October 2025: OpenAI were freed of this obligation (with some exceptions) on October 9th.

AI Hallucination Cases (via) Damien Charlotin maintains this database of cases around the world where a legal decision has been made that confirms hallucinated content from generative AI was presented by a lawyer.

That's an important distinction: this isn't just cases where AI may have been used, it's cases where a lawyer was caught in the act and (usually) disciplined for it.

It's been two years since the first widely publicized incident of this, which I wrote about at the time in Lawyer cites fake cases invented by ChatGPT, judge is not amused. At the time I naively assumed:

I have a suspicion that this particular story is going to spread far and wide, and in doing so will hopefully inoculate a lot of lawyers and other professionals against making similar mistakes.

Damien's database has 116 cases from 12 different countries: United States, Israel, United Kingdom, Canada, Australia, Brazil, Netherlands, Italy, Ireland, Spain, South Africa, Trinidad & Tobago.

20 of those cases happened just this month, May 2025!

I get the impression that researching legal precedent is one of the most time-consuming parts of the job. I guess it's not surprising that increasing numbers of lawyers are turning to LLMs for this, even in the face of this mountain of cautionary stories.

Maybe Meta’s Llama claims to be open source because of the EU AI act

I encountered a theory a while ago that one of the reasons Meta insist on using the term “open source” for their Llama models despite the Llama license not actually conforming to the terms of the Open Source Definition is that the EU’s AI act includes special rules for open source models without requiring OSI compliance.

[... 852 words]

I’ve disabled the pending geoblock of the UK because I now think the risks of the Online Safety Act to this site are low enough to change strategies to only geoblock if directly threatened by the regulator. [...]

It is not possible for a hobby site to comply with the Online Safety Act. The OSA is written to censor huge commercial sites with professional legal teams, and even understanding one's obligations under the regulations is an enormous project requiring expensive legal advice.

The law is 250 pages and the mandatory "guidance" from Ofcom is more than 3,000 pages of dense, cross-referenced UK-flavoured legalese. To find all the guidance you'll have to start here, click through to each of the 36 pages listed, and expand each page's collapsible sections that might have links to other pages and documents. (Though I can't be sure that leads to all their guidance, and note you'll have to check back regularly for planned updates.)

— Peter Bhat Harkins, site administrator, lobste.rs

I Went To SQL Injection Court (via) Thomas Ptacek talks about his ongoing involvement as an expert witness in an Illinois legal battle led by Matt Chapman over whether a SQL schema (e.g. for the CANVAS parking ticket database) should be accessible to Freedom of Information (FOIA) requests against the Illinois state government.

They eventually lost in the Illinois Supreme Court, but there's still hope in the shape of IL SB0226, a proposed bill that would amend the FOIA act to ensure "that the public body shall provide a sufficient description of the structures of all databases under the control of the public body to allow a requester to request the public body to perform specific database queries".
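To make "a sufficient description of the structures" concrete, here's a small sketch of what a schema-only disclosure might look like, using Python's built-in `sqlite3` module. The tables and columns are entirely invented for illustration. They are not the CANVAS schema, which I haven't seen, and the bill does not prescribe any particular format.

```python
import sqlite3

# Hypothetical schema: these tables and columns are invented for illustration only.
HYPOTHETICAL_DDL = """
CREATE TABLE tickets (
    ticket_id INTEGER PRIMARY KEY,
    issued_on TEXT NOT NULL,
    violation_code TEXT NOT NULL,
    fine_cents INTEGER NOT NULL
);
CREATE TABLE violations (
    violation_code TEXT PRIMARY KEY,
    description TEXT NOT NULL
);
"""


def describe_structure(conn: sqlite3.Connection) -> dict[str, list[str]]:
    """Map each table name to its column names, without reading any rows."""
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    structure = {}
    for (name,) in tables:
        # Each table_info row is (cid, name, type, notnull, dflt_value, pk).
        columns = conn.execute(f'PRAGMA table_info("{name}")').fetchall()
        structure[name] = [column[1] for column in columns]
    return structure


conn = sqlite3.connect(":memory:")
conn.executescript(HYPOTHETICAL_DDL)
structure = describe_structure(conn)
for table, columns in structure.items():
    print(table, columns)
```

The point of the sketch is that a description like this exposes table and column names only, never the records themselves, which is roughly what a requester would need in order to ask for a specific query.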

Thomas posted this comment on Hacker News:

Permit me a PSA about local politics: engaging in national politics is bleak and dispiriting, like being a gnat bouncing off the glass plate window of a skyscraper. Local politics is, by contrast, extremely responsive. I've gotten things done --- including a law passed --- in my spare time and at practically no expense (drastically unlike national politics).

There are contexts in which it is immoral to use generative AI. For example, if you are a judge responsible for grounding a decision in law, you cannot rest that on an approximation of previous cases unknown to you. You want an AI system that helps you retrieve specific, well-documented cases, not one that confabulates fictional cases. You need to ensure you procure the right kind of AI for a task, and the right kind is determined in part by the essentialness of human responsibility.

— Joanna Bryson, Generative AI use and human agency

Baroness Kidron’s speech regarding UK AI legislation (via) Barnstormer of a speech by UK film director and member of the House of Lords Baroness Kidron. This is the Hansard transcript but you can also watch the video on parliamentlive.tv. She presents a strong argument against the UK's proposed copyright and AI reform legislation, which would provide a copyright exemption for AI training with a weak-toothed opt-out mechanism.

The Government are doing this not because the current law does not protect intellectual property rights, nor because they do not understand the devastation it will cause, but because they are hooked on the delusion that the UK's best interests and economic future align with those of Silicon Valley.

She throws in some cleverly selected numbers:

The Prime Minister cited an IMF report that claimed that, if fully realised, the gains from AI could be worth up to an average of £47 billion to the UK each year over a decade. He did not say that the very same report suggested that unemployment would increase by 5.5% over the same period. This is a big number—a lot of jobs and a very significant cost to the taxpayer. Nor does that £47 billion account for the transfer of funds from one sector to another. The creative industries contribute £126 billion per year to the economy. I do not understand the excitement about £47 billion when you are giving up £126 billion.

Mentions DeepSeek:

Before I sit down, I will quickly mention DeepSeek, a Chinese bot that is perhaps as good as any from the US—we will see—but which will certainly be a potential beneficiary of the proposed AI scraping exemption. Who cares that it does not recognise Taiwan or know what happened in Tiananmen Square? It was built for $5 million and wiped $1 trillion off the value of the US AI sector. The uncertainty that the Government claim is not an uncertainty about how copyright works; it is uncertainty about who will be the winners and losers in the race for AI.

And finishes with this superb closing line:

The spectre of AI does nothing for growth if it gives away what we own so that we can rent from it what it makes.

According to Ed Newton-Rex the speech was effective:

She managed to get the House of Lords to approve her amendments to the Data (Use and Access) Bill, which among other things requires overseas gen AI companies to respect UK copyright law if they sell their products in the UK. (As a reminder, it is illegal to train commercial gen AI models on ©️ work without a licence in the UK.)

What's astonishing is that her amendments passed despite @UKLabour reportedly being whipped to vote against them, and the Conservatives largely abstaining. Essentially, Labour voted against the amendments, and everyone else who voted voted to protect copyright holders.

(Is it true that in the UK it's currently "illegal to train commercial gen AI models on ©️ work"? From points 44, 45 and 46 of this Copyright and AI: Consultation document it seems to me that the official answer is "it's complicated".)

I'm trying to understand if this amendment could make existing products such as ChatGPT, Claude and Gemini illegal to sell in the UK. How about usage of open weight models?

2024

As an independent writer and publisher, I am the legal team. I am the fact-checking department. I am the editorial staff. I am the one responsible for triple-checking every single statement I make in the type of original reporting that I know carries a serious risk of baseless but ruinously expensive litigation regularly used to silence journalists, critics, and whistleblowers. I am the one deciding if that risk is worth taking, or if I should just shut up and write about something less risky.

But increasingly, I’m worried that attempts to crack down on the cryptocurrency industry — scummy though it may be — may result in overall weakening of financial privacy, and may hurt vulnerable people the most. As they say, “hard cases make bad law”.

LLMs may offer immense value to society. But that does not warrant the violation of copyright law or its underpinning principles. We do not believe it is fair for tech firms to use rightsholder data for commercial purposes without permission or compensation, and to gain vast financial rewards in the process. There is compelling evidence that the UK benefits economically, politically and societally from upholding a globally respected copyright regime.

2023

Microsoft announces new Copilot Copyright Commitment for customers. Part of an interesting trend where some AI vendors are reassuring their paying customers by promising legal support in the face of future legal threats:

“As customers ask whether they can use Microsoft’s Copilot services and the output they generate without worrying about copyright claims, we are providing a straightforward answer: yes, you can, and if you are challenged on copyright grounds, we will assume responsibility for the potential legal risks involved.”

And the notion that security updates, for every user in the world, would need the approval of the U.K. Home Office just to make sure the patches weren’t closing vulnerabilities that the government itself is exploiting — it boggles the mind. Even if the U.K. were the only country in the world to pass such a law, it would be madness, but what happens when other countries follow?

An Iowa school district is using ChatGPT to decide which books to ban. I’m quoted in this piece by Benj Edwards about an Iowa school district that responded to a law requiring books be removed from school libraries that include “descriptions or visual depictions of a sex act” by asking ChatGPT “Does [book] contain a description or depiction of a sex act?”.

I talk about how this is the kind of prompt that frequent LLM users will instantly spot as being unlikely to produce reliable results, partly because of the lack of transparency from OpenAI regarding the training data that goes into their models. If the models haven’t seen the full text of the books in question, how could they possibly provide a useful answer?

Mandatory Certification Regarding Generative Artificial Intelligence (via) From the Judge Specific Requirements for Judge Brantley Starr in Dallas, TX:

“All attorneys appearing before the Court must file on the docket a certificate attesting either that no portion of the filing was drafted by generative artificial intelligence (such as ChatGPT, Harvey.AI, or Google Bard) or that any language drafted by generative artificial intelligence was checked for accuracy, using print reporters or traditional legal databases, by a human being. [...]”

Lawyer cites fake cases invented by ChatGPT, judge is not amused

Legal Twitter is having tremendous fun right now reviewing the latest documents from the case Mata v. Avianca, Inc. (1:22-cv-01461). Here’s a neat summary:

[... 2,844 words]

Beyond these specific legal arguments, Stability AI may find it has a “vibes” problem. The legal criteria for fair use are subjective and give judges some latitude in how to interpret them. And one factor that likely influences the thinking of judges is whether a defendant seems like a “good actor.” Google is a widely respected technology company that tends to win its copyright lawsuits. Edgier companies like Napster tend not to.

Stable Diffusion copyright lawsuits could be a legal earthquake for AI. Timothy B. Lee provides a thorough discussion of the copyright lawsuits currently targeting Stable Diffusion and GitHub Copilot, including subtle points about how the interpretation of “fair use” might be applied to the new field of generative AI.

2013

Is it possible to run a successful company without being unethical or operating on the fringes of the law?

There is nothing inherently unethical about entrepreneurship. Find a problem people have. Figure out how much money solving it will save them (or help them make). Charge them less than that.

[... 108 words]

How should two equal startup founder formalize a cliff?

A company is a legal entity. The person who leaves the company is the person who resigns from that legal entity.

[... 36 words]

2009

Years ago, Alex Russell told me that Django ought to be collecting CLAs. I said "yeah, whatever" and ignored him. And thus have spent more than a year gathering CLAs to get DSF's paperwork in order. Sigh.