24 posts tagged “codex-cli”

OpenAI's Codex CLI tool, not the same thing as Codex cloud.

2026

Introducing GPT‑5.3‑Codex‑Spark. OpenAI announced a partnership with Cerebras on January 14th. Four weeks later they're already launching the first integration, "an ultra-fast model for real-time coding in Codex".

Despite being named GPT-5.3-Codex-Spark it's not purely an accelerated alternative to GPT-5.3-Codex - the blog post calls it "a smaller version of GPT‑5.3-Codex" and clarifies that "at launch, Codex-Spark has a 128k context window and is text-only."

I had some preview access to this model and I can confirm that it's significantly faster than their other models.

Here's what that speed looks like running in Codex CLI:

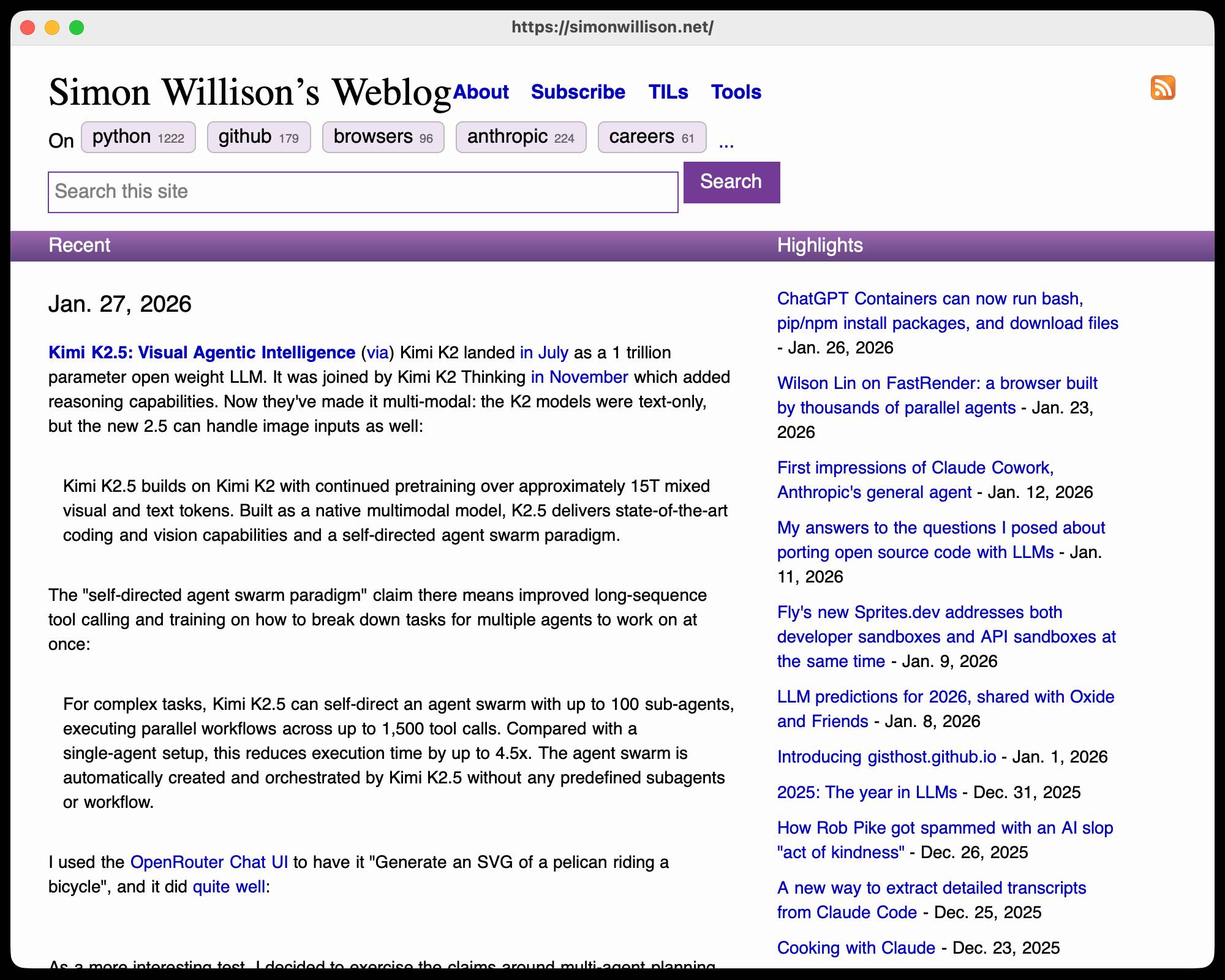

That was the "Generate an SVG of a pelican riding a bicycle" prompt - here's the rendered result:

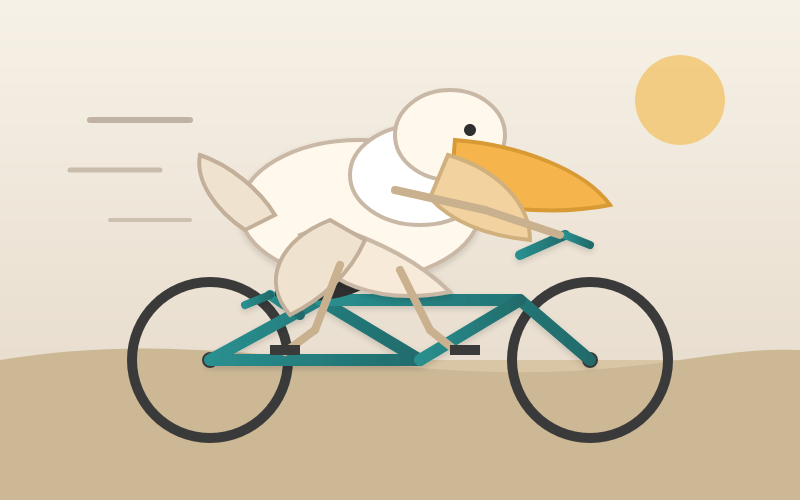

Compare that to the speed of regular GPT-5.3 Codex medium:

Significantly slower, but the pelican is a lot better:

What's interesting about this model isn't the quality though, it's the speed. When a model responds this fast you can stay in flow state and iterate with the model much more productively.

I showed a demo of Cerebras running Llama 3.1 70 B at 2,000 tokens/second against Val Town back in October 2024. OpenAI claim 1,000 tokens/second for their new model, and I expect it will prove to be a ferociously useful partner for hands-on iterative coding sessions.

It's not yet clear what the pricing will look like for this new model.

When I want to quickly implement a one-off experiment in a part of the codebase I am unfamiliar with, I get codex to do extensive due diligence. Codex explores relevant slack channels, reads related discussions, fetches experimental branches from those discussions, and cherry picks useful changes for my experiment. All of this gets summarized in an extensive set of notes, with links back to where each piece of information was found. Using these notes, codex wires the experiment and makes a bunch of hyperparameter decisions I couldn’t possibly make without much more effort.

— Karel D'Oosterlinck, I spent $10,000 to automate my research at OpenAI with Codex

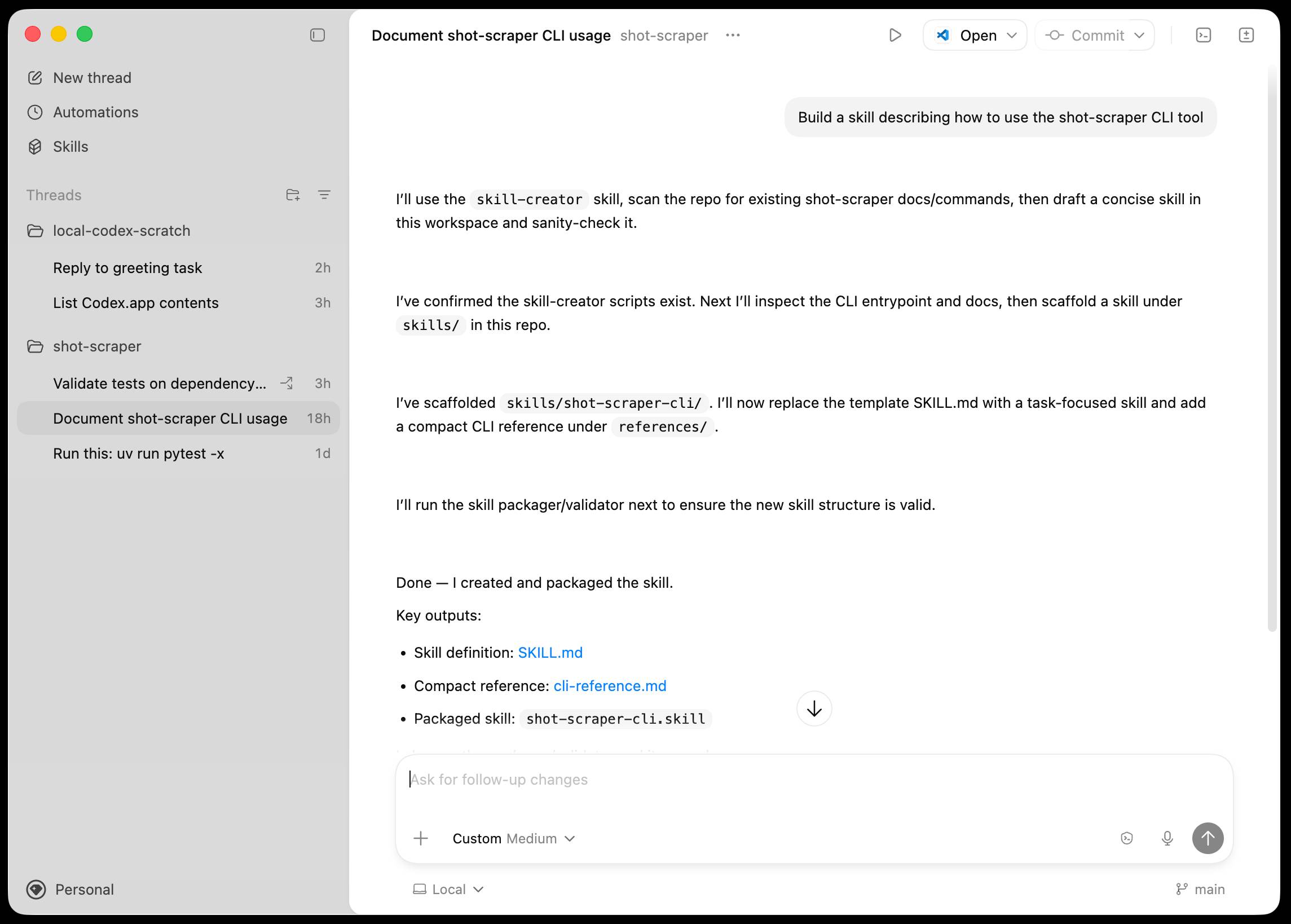

Introducing the Codex app. OpenAI just released a new macOS app for their Codex coding agent. I've had a few days of preview access - it's a solid app that provides a nice UI over the capabilities of the Codex CLI agent and adds some interesting new features, most notably first-class support for Skills, and Automations for running scheduled tasks.

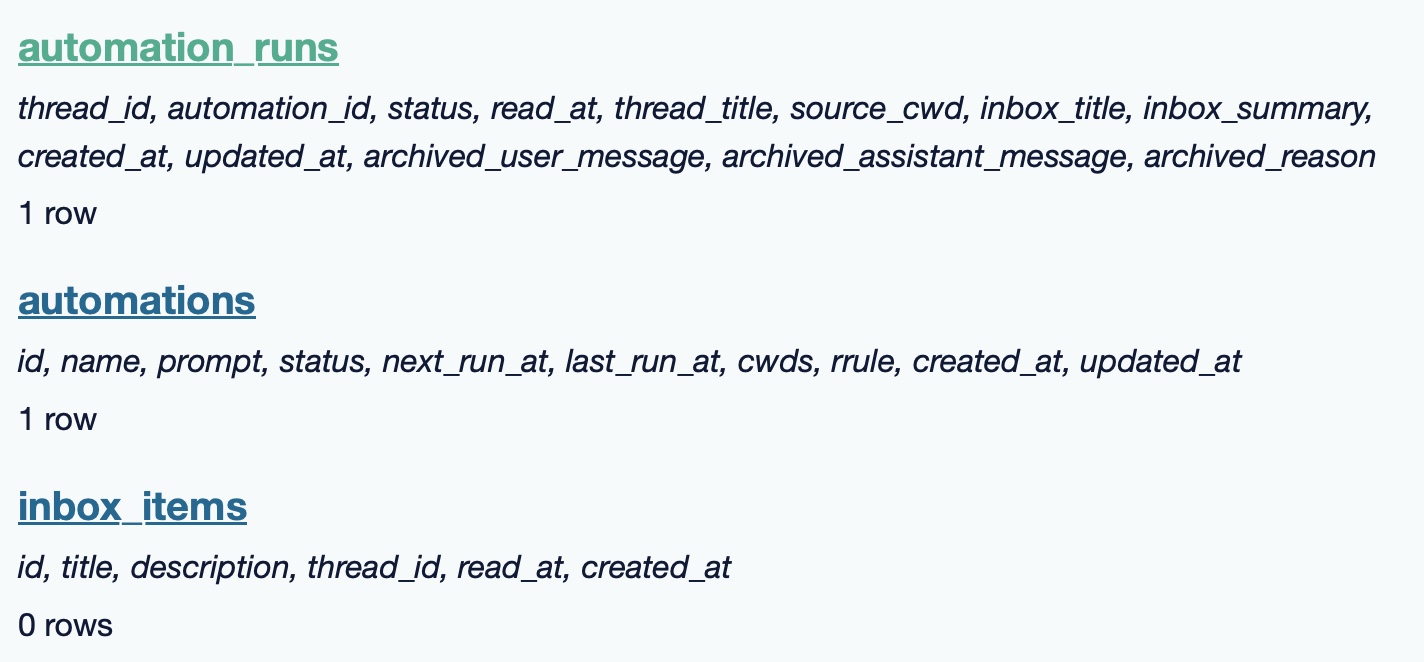

The app is built with Electron and Node.js. Automations track their state in a SQLite database - here's what that looks like if you explore it with uvx datasette ~/.codex/sqlite/codex-dev.db:

Here’s an interactive copy of that database in Datasette Lite.

The announcement gives us a hint at some usage numbers for Codex overall - the holiday spike is notable:

Since the launch of GPT‑5.2-Codex in mid-December, overall Codex usage has doubled, and in the past month, more than a million developers have used Codex.

Automations are currently restricted in that they can only run when your laptop is powered on. OpenAI promise that cloud-based automations are coming soon, which will resolve this limitation.

They chose Electron so they could target other operating systems in the future, with Windows “coming very soon”. OpenAI’s Alexander Embiricos noted on the Hacker News thread that:

it's taking us some time to get really solid sandboxing working on Windows, where there are fewer OS-level primitives for it.

Like Claude Code, Codex is really a general agent harness disguised as a tool for programmers. OpenAI acknowledge that here:

Codex is built on a simple premise: everything is controlled by code. The better an agent is at reasoning about and producing code, the more capable it becomes across all forms of technical and knowledge work. [...] We’ve focused on making Codex the best coding agent, which has also laid the foundation for it to become a strong agent for a broad range of knowledge work tasks that extend beyond writing code.

Claude Code had to rebrand to Cowork to better cover the general knowledge work case. OpenAI can probably get away with keeping the Codex name for both.

OpenAI have made Codex available to free and Go plans for "a limited time" (update: Sam Altman says two months) during which they are also doubling the rate limits for paying users.

One Human + One Agent = One Browser From Scratch (via) embedding-shapes was so infuriated by the hype around Cursor's FastRender browser project - thousands of parallel agents producing ~1.6 million lines of Rust - that they were inspired to take a go at building a web browser using coding agents themselves.

The result is one-agent-one-browser and it's really impressive. Over three days they drove a single Codex CLI agent to build 20,000 lines of Rust that successfully renders HTML+CSS with no Rust crate dependencies at all - though it does (reasonably) use Windows, macOS and Linux system frameworks for image and text rendering.

I installed the 1MB macOS binary release and ran it against my blog:

chmod 755 ~/Downloads/one-agent-one-browser-macOS-ARM64

~/Downloads/one-agent-one-browser-macOS-ARM64 https://simonwillison.net/

Here's the result:

It even rendered my SVG feed subscription icon! A PNG image is missing from the page, which looks like an intermittent bug (there's code to render PNGs).

The code is pretty readable too - here's the flexbox implementation.

I had thought that "build a web browser" was the ideal prompt to really stretch the capabilities of coding agents - and that it would take sophisticated multi-agent harnesses (as seen in the Cursor project) and millions of lines of code to achieve.

Turns out one agent driven by a talented engineer, three days and 20,000 lines of Rust is enough to get a very solid basic renderer working!

I'm going to upgrade my prediction for 2029: I think we're going to get a production-grade web browser built by a small team using AI assistance by then.

2025

Introducing GPT-5.2-Codex. The latest in OpenAI's Codex family of models (not the same thing as their Codex CLI or Codex Cloud coding agent tools).

GPT‑5.2-Codex is a version of GPT‑5.2 further optimized for agentic coding in Codex, including improvements on long-horizon work through context compaction, stronger performance on large code changes like refactors and migrations, improved performance in Windows environments, and significantly stronger cybersecurity capabilities.

As with some previous Codex models this one is available via their Codex coding agents now and will be coming to the API "in the coming weeks". Unlike previous models there's a new invite-only preview process for vetted cybersecurity professionals for "more permissive models".

I've been very impressed recently with GPT 5.2's ability to tackle multi-hour agentic coding challenges. 5.2 Codex scores 64% on the Terminal-Bench 2.0 benchmark that GPT-5.2 scored 62.2% on. I'm not sure how concrete that 1.8% improvement will be!

I didn't hack API access together this time (see previous attempts), instead opting to just ask Codex CLI to "Generate an SVG of a pelican riding a bicycle" while running the new model (effort medium). Here's the transcript in my new Codex CLI timeline viewer, and here's the pelican it drew:

I ported JustHTML from Python to JavaScript with Codex CLI and GPT-5.2 in 4.5 hours

I wrote about JustHTML yesterday—Emil Stenström’s project to build a new standards compliant HTML5 parser in pure Python code using coding agents running against the comprehensive html5lib-tests testing library. Last night, purely out of curiosity, I decided to try porting JustHTML from Python to JavaScript with the least amount of effort possible, using Codex CLI and GPT-5.2. It worked beyond my expectations.

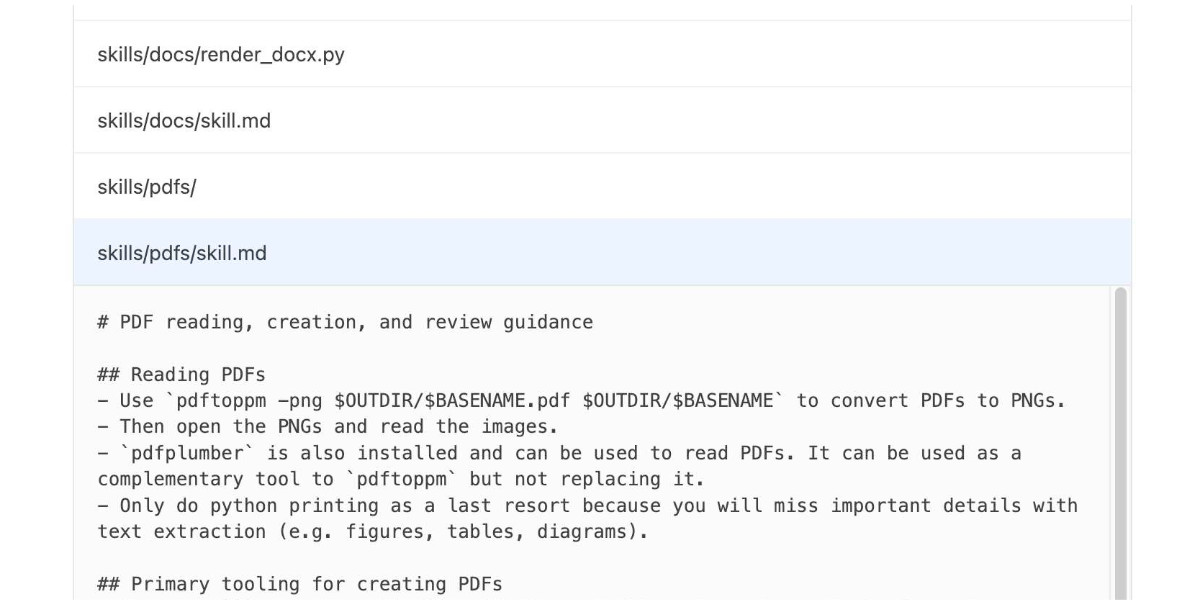

[... 1,818 words]How to use a skill (progressive disclosure):

- After deciding to use a skill, open its

SKILL.md. Read only enough to follow the workflow.- If

SKILL.mdpoints to extra folders such asreferences/, load only the specific files needed for the request; don't bulk-load everything.- If

scripts/exist, prefer running or patching them instead of retyping large code blocks.- If

assets/or templates exist, reuse them instead of recreating from scratch.Description as trigger: The YAML

descriptioninSKILL.mdis the primary trigger signal; rely on it to decide applicability. If unsure, ask a brief clarification before proceeding.

— OpenAI Codex CLI, core/src/skills/render.rs, full prompt

OpenAI are quietly adopting skills, now available in ChatGPT and Codex CLI

One of the things that most excited me about Anthropic’s new Skills mechanism back in October is how easy it looked for other platforms to implement. A skill is just a folder with a Markdown file and some optional extra resources and scripts, so any LLM tool with the ability to navigate and read from a filesystem should be capable of using them. It turns out OpenAI are doing exactly that, with skills support quietly showing up in both their Codex CLI tool and now also in ChatGPT itself.

[... 1,360 words]Building more with GPT-5.1-Codex-Max (via) Hot on the heels of yesterday's Gemini 3 Pro release comes a new model from OpenAI called GPT-5.1-Codex-Max.

(Remember when GPT-5 was meant to bring in a new era of less confusing model names? That didn't last!)

It's currently only available through their Codex CLI coding agent, where it's the new default model:

Starting today, GPT‑5.1-Codex-Max will replace GPT‑5.1-Codex as the default model in Codex surfaces. Unlike GPT‑5.1, which is a general-purpose model, we recommend using GPT‑5.1-Codex-Max and the Codex family of models only for agentic coding tasks in Codex or Codex-like environments.

It's not available via the API yet but should be shortly.

The timing of this release is interesting given that Gemini 3 Pro appears to have aced almost all of the benchmarks just yesterday. It's reminiscent of the period in 2024 when OpenAI consistently made big announcements that happened to coincide with Gemini releases.

OpenAI's self-reported SWE-Bench Verified score is particularly notable: 76.5% for thinking level "high" and 77.9% for the new "xhigh". That was the one benchmark where Gemini 3 Pro was out-performed by Claude Sonnet 4.5 - Gemini 3 Pro got 76.2% and Sonnet 4.5 got 77.2%. OpenAI now have the highest scoring model there by a full .7 of a percentage point!

They also report a score of 58.1% on Terminal Bench 2.0, beating Gemini 3 Pro's 54.2% (and Sonnet 4.5's 42.8%.)

The most intriguing part of this announcement concerns the model's approach to long context problems:

GPT‑5.1-Codex-Max is built for long-running, detailed work. It’s our first model natively trained to operate across multiple context windows through a process called compaction, coherently working over millions of tokens in a single task. [...]

Compaction enables GPT‑5.1-Codex-Max to complete tasks that would have previously failed due to context-window limits, such as complex refactors and long-running agent loops by pruning its history while preserving the most important context over long horizons. In Codex applications, GPT‑5.1-Codex-Max automatically compacts its session when it approaches its context window limit, giving it a fresh context window. It repeats this process until the task is completed.

There's a lot of confusion on Hacker News about what this actually means. Claude Code already does a version of compaction, automatically summarizing previous turns when the context runs out. Does this just mean that Codex-Max is better at that process?

I had it draw me a couple of pelicans by typing "Generate an SVG of a pelican riding a bicycle" directly into the Codex CLI tool. Here's thinking level medium:

And here's thinking level "xhigh":

I also tried xhigh on the my longer pelican test prompt, which came out like this:

Also today: GPT-5.1 Pro is rolling out today to all Pro users. According to the ChatGPT release notes:

GPT-5.1 Pro is rolling out today for all ChatGPT Pro users and is available in the model picker. GPT-5 Pro will remain available as a legacy model for 90 days before being retired.

That's a pretty fast deprecation cycle for the GPT-5 Pro model that was released just three months ago.

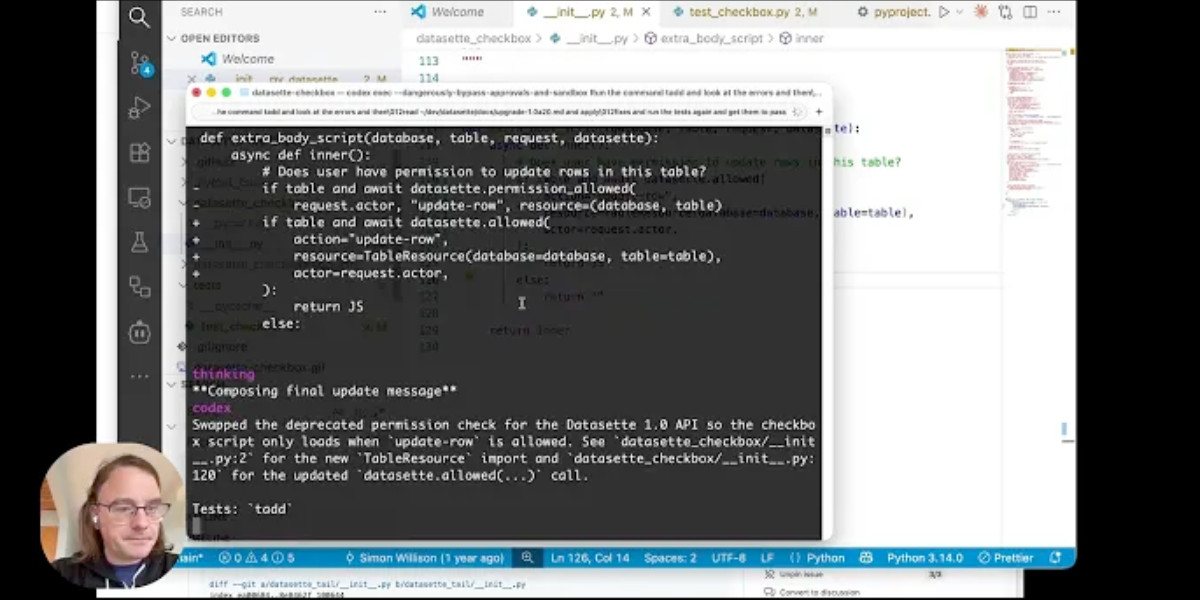

I've been upgrading a ton of Datasette plugins recently for compatibility with the Datasette 1.0a20 release from last week - 35 so far.

A lot of the work is very repetitive so I've been outsourcing it to Codex CLI. Here's the recipe I've landed on:

codex exec --dangerously-bypass-approvals-and-sandbox \

'Run the command tadd and look at the errors and then

read ~/dev/datasette/docs/upgrade-1.0a20.md and apply

fixes and run the tests again and get them to pass.

Also delete the .github directory entirely and replace

it by running this:

cp -r ~/dev/ecosystem/datasette-os-info/.github .

Run a git diff against that to make sure it looks OK

- if there are any notable differences e.g. switching

from Twine to the PyPI uploader or deleting code that

does a special deploy or configures something like

playwright include that in your final report.

If the project still uses setup.py then edit that new

test.yml and publish.yaml to mention setup.py not pyproject.toml

If this project has pyproject.toml make sure the license

line in that looks like this:

license = "Apache-2.0"

And remove any license thing from the classifiers= array

Update the Datasette dependency in pyproject.toml or

setup.py to "datasette>=1.0a21"

And make sure requires-python is >=3.10'I featured a simpler version of this prompt in my Datasette plugin upgrade video, but I've expanded it quite a bit since then.

At one point I had six terminal windows open running this same prompt against six different repos - probably my most extreme case of parallel agents yet.

Here are the six resulting commits from those six coding agent sessions:

Reverse engineering Codex CLI to get GPT-5-Codex-Mini to draw me a pelican

OpenAI partially released a new model yesterday called GPT-5-Codex-Mini, which they describe as "a more compact and cost-efficient version of GPT-5-Codex". It’s currently only available via their Codex CLI tool and VS Code extension, with proper API access "coming soon". I decided to use Codex to reverse engineer the Codex CLI tool and give me the ability to prompt the new model directly.

[... 1,774 words]Using Codex CLI with gpt-oss:120b on an NVIDIA DGX Spark via Tailscale. Inspired by a YouTube comment I wrote up how I run OpenAI's Codex CLI coding agent against the gpt-oss:120b model running in Ollama on my NVIDIA DGX Spark via a Tailscale network.

It takes a little bit of work to configure but the result is I can now use Codex CLI on my laptop anywhere in the world against a self-hosted model.

I used it to build this space invaders clone.

Video + notes on upgrading a Datasette plugin for the latest 1.0 alpha, with help from uv and OpenAI Codex CLI

I’m upgrading various plugins for compatibility with the new Datasette 1.0a20 alpha release and I decided to record a video of the process. This post accompanies that video with detailed additional notes.

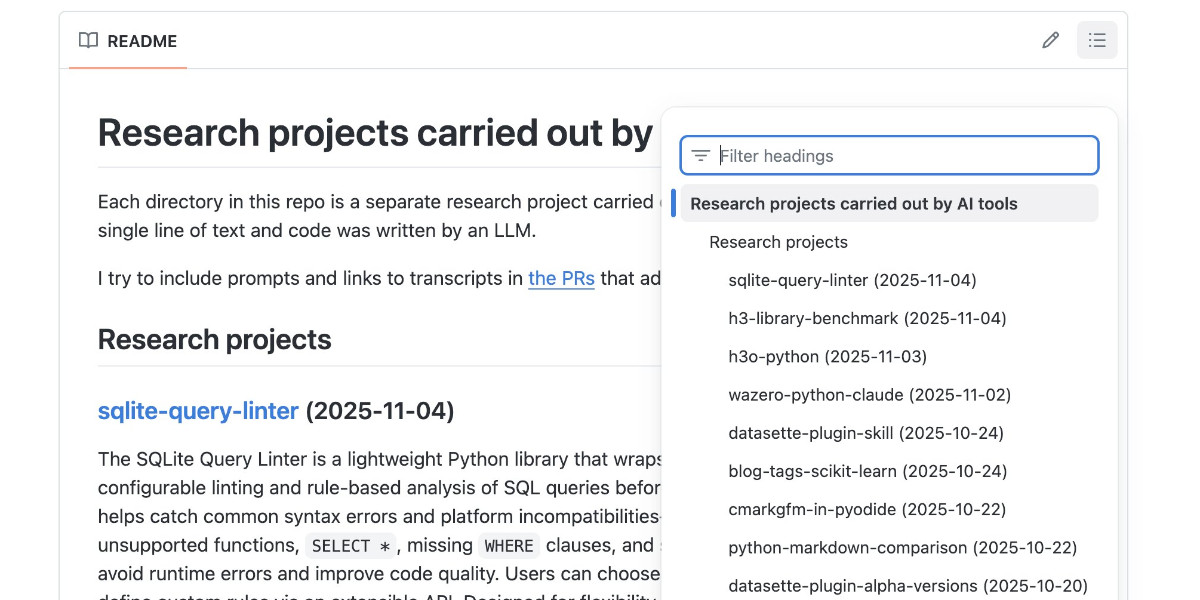

[... 1,094 words]Code research projects with async coding agents like Claude Code and Codex

I’ve been experimenting with a pattern for LLM usage recently that’s working out really well: asynchronous code research tasks. Pick a research question, spin up an asynchronous coding agent and let it go and run some experiments and report back when it’s done.

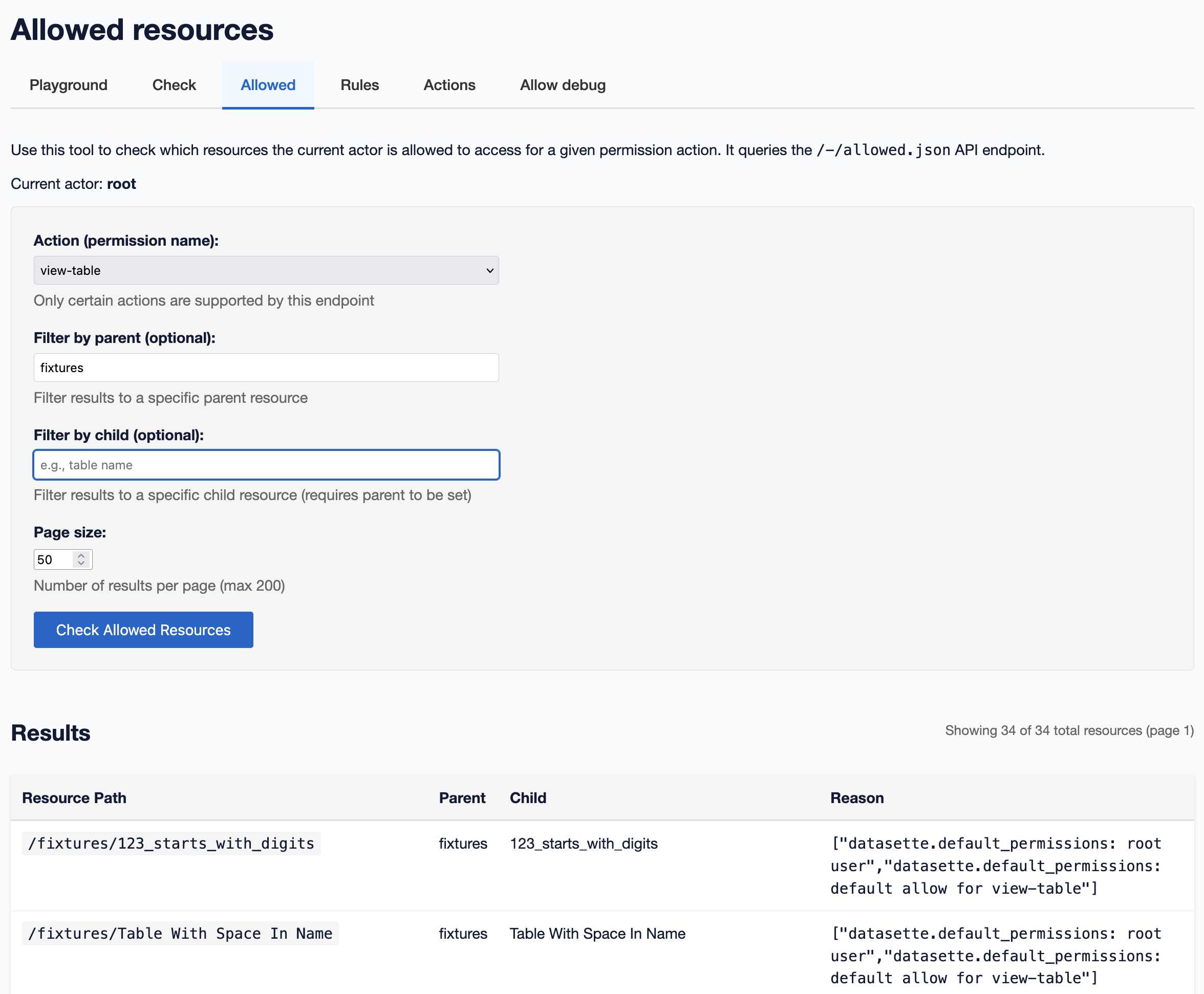

[... 2,017 words]A new SQL-powered permissions system in Datasette 1.0a20

Datasette 1.0a20 is out with the biggest breaking API change on the road to 1.0, improving how Datasette’s permissions system works by migrating permission logic to SQL running in SQLite. This release involved 163 commits, with 10,660 additions and 1,825 deletions, most of which was written with the help of Claude Code.

[... 2,750 words]Just Talk To It—the no-bs Way of Agentic Engineering. Peter Steinberger's long, detailed description of his current process for using Codex CLI and GPT-5 Codex. This is information dense and full of actionable tips, plus plenty of strong opinions about the differences between Claude 4.5 an GPT-5:

While Claude reacts well to 🚨 SCREAMING ALL-CAPS 🚨 commands that threaten it that it will imply ultimate failure and 100 kittens will die if it runs command X, that freaks out GPT-5. (Rightfully so). So drop all of that and just use words like a human.

Peter is a heavy user of parallel agents:

I've completely moved to

codexcli as daily driver. I run between 3-8 in parallel in a 3x3 terminal grid, most of them in the same folder, some experiments go in separate folders. I experimented with worktrees, PRs but always revert back to this setup as it gets stuff done the fastest.

He shares my preference for CLI utilities over MCPs:

I can just refer to a cli by name. I don't need any explanation in my agents file. The agent will try $randomcrap on the first call, the cli will present the help menu, context now has full info how this works and from now on we good. I don't have to pay a price for any tools, unlike MCPs which are a constant cost and garbage in my context. Use GitHub's MCP and see 23k tokens gone. Heck, they did make it better because it was almost 50.000 tokens when it first launched. Or use the

ghcli which has basically the same feature set, models already know how to use it, and pay zero context tax.

It's worth reading the section on why he abandoned spec driven development in full.

Embracing the parallel coding agent lifestyle

For a while now I’ve been hearing from engineers who run multiple coding agents at once—firing up several Claude Code or Codex CLI instances at the same time, sometimes in the same repo, sometimes against multiple checkouts or git worktrees.

[... 1,275 words]GitHub Copilot CLI is now in public preview. GitHub now have their own entry in the coding terminal CLI agent space: Copilot CLI.

It's the same basic shape as Claude Code, Codex CLI, Gemini CLI and a growing number of other tools in this space. It's a terminal UI which you accepts instructions and can modify files, run commands and integrate with GitHub's MCP server and other MCP servers that you configure.

Two notable features compared to many of the others:

- It works against the GitHub Models backend. It defaults to Claude Sonnet 4 but you can set

COPILOT_MODEL=gpt-5to switch to GPT-5. Presumably other models will become available soon. - It's billed against your existing GitHub Copilot account. Pricing details are here - they're split into "Agent mode" requests and "Premium" requests. Different plans get different allowances, which are shared with other products in the GitHub Copilot family.

The best available documentation right now is the copilot --help screen - here's a copy of that in a Gist.

It's a competent entry into the market, though it's missing features like the ability to paste in images which have been introduced to Claude Code and Codex CLI over the past few months.

Disclosure: I got a preview of this at an event at Microsoft's offices in Seattle last week. They did not pay me for my time but they did cover my flight, hotel and some dinners.

GPT-5-Codex. OpenAI half-released this model earlier this month, adding it to their Codex CLI tool but not their API.

Today they've fixed that - the new model can now be accessed as gpt-5-codex. It's priced the same as regular GPT-5: $1.25/million input tokens, $10/million output tokens, and the same hefty 90% discount for previously cached input tokens, especially important for agentic tool-using workflows which quickly produce a lengthy conversation.

It's only available via their Responses API, which means you currently need to install the llm-openai-plugin to use it with LLM:

llm install -U llm-openai-plugin

llm -m openai/gpt-5-codex -T llm_version 'What is the LLM version?'

Outputs:

The installed LLM version is 0.27.1.

I added tool support to that plugin today, mostly authored by GPT-5 Codex itself using OpenAI's Codex CLI.

The new prompting guide for GPT-5-Codex is worth a read.

GPT-5-Codex is purpose-built for Codex CLI, the Codex IDE extension, the Codex cloud environment, and working in GitHub, and also supports versatile tool use. We recommend using GPT-5-Codex only for agentic and interactive coding use cases.

Because the model is trained specifically for coding, many best practices you once had to prompt into general purpose models are built in, and over prompting can reduce quality.

The core prompting principle for GPT-5-Codex is “less is more.”

I tried my pelican benchmark at a cost of 2.156 cents.

llm -m openai/gpt-5-codex "Generate an SVG of a pelican riding a bicycle"

I asked Codex to describe this image and it correctly identified it as a pelican!

llm -m openai/gpt-5-codex -a https://static.simonwillison.net/static/2025/gpt-5-codex-api-pelican.png \

-s 'Write very detailed alt text'

Cartoon illustration of a cream-colored pelican with a large orange beak and tiny black eye riding a minimalist dark-blue bicycle. The bird’s wings are tucked in, its legs resemble orange stick limbs pushing the pedals, and its tail feathers trail behind with light blue motion streaks to suggest speed. A small coral-red tongue sticks out of the pelican’s beak. The bicycle has thin light gray spokes, and the background is a simple pale blue gradient with faint curved lines hinting at ground and sky.

httpjail

(via)

Here's a promising new (experimental) project in the sandboxing space from Ammar Bandukwala at Coder. httpjail provides a Rust CLI tool for running an individual process against a custom configured HTTP proxy.

The initial goal is to help run coding agents like Claude Code and Codex CLI with extra rules governing how they interact with outside services. From Ammar's blog post that introduces the new tool, Fine-grained HTTP filtering for Claude Code:

httpjailimplements an HTTP(S) interceptor alongside process-level network isolation. Under default configuration, all DNS (udp:53) is permitted and all other non-HTTP(S) traffic is blocked.

httpjailrules are either JavaScript expressions or custom programs. This approach makes them far more flexible than traditional rule-oriented firewalls and avoids the learning curve of a DSL.Block all HTTP requests other than the LLM API traffic itself:

$ httpjail --js "r.host === 'api.anthropic.com'" -- claude "build something great"

I tried it out using OpenAI's Codex CLI instead and found this recipe worked:

brew upgrade rust

cargo install httpjail # Drops it in `~/.cargo/bin`

httpjail --js "r.host === 'chatgpt.com'" -- codex

Within that Codex instance the model ran fine but any attempts to access other URLs (e.g. telling it "Use curl to fetch simonwillison.net)" failed at the proxy layer.

This is still at a really early stage but there's a lot I like about this project. Being able to use JavaScript to filter requests via the --js option is neat (it's using V8 under the hood), and there's also a --sh shellscript option which instead runs a shell program passing environment variables that can be used to determine if the request should be allowed.

At a basic level it works by running a proxy server and setting HTTP_PROXY and HTTPS_PROXY environment variables so well-behaving software knows how to route requests.

It can also add a bunch of other layers. On Linux it sets up nftables rules to explicitly deny additional network access. There's also a --docker-run option which can launch a Docker container with the specified image but first locks that container down to only have network access to the httpjail proxy server.

It can intercept, filter and log HTTPS requests too by generating its own certificate and making that available to the underlying process.

I'm always interested in new approaches to sandboxing, and fine-grained network access is a particularly tricky problem to solve. This looks like a very promising step in that direction - I'm looking forward to seeing how this project continues to evolve.

GPT‑5-Codex and upgrades to Codex. OpenAI half-released a new model today: GPT‑5-Codex, a fine-tuned GPT-5 variant explicitly designed for their various AI-assisted programming tools.

Update: OpenAI call it a "version of GPT-5", they don't explicitly describe it as a fine-tuned model. Calling it a fine-tune was my mistake here.

I say half-released because it's not yet available via their API, but they "plan to make GPT‑5-Codex available in the API soon".

I wrote about the confusing array of OpenAI products that share the name Codex a few months ago. This new model adds yet another, though at least "GPT-5-Codex" (using two hyphens) is unambiguous enough not to add to much more to the confusion.

At this point it's best to think of Codex as OpenAI's brand name for their coding family of models and tools.

The new model is already integrated into their VS Code extension, the Codex CLI and their Codex Cloud asynchronous coding agent. I'd been calling that last one "Codex Web" but I think Codex Cloud is a better name since it can also be accessed directly from their iPhone app.

Codex Cloud also has a new feature: you can configure it to automatically run code review against specific GitHub repositories (I found that option on chatgpt.com/codex/settings/code-review) and it will create a temporary container to use as part of those reviews. Here's the relevant documentation.

Some documented features of the new GPT-5-Codex model:

- Specifically trained for code review, which directly supports their new code review feature.

- "GPT‑5-Codex adapts how much time it spends thinking more dynamically based on the complexity of the task." Simple tasks (like "list files in this directory") should run faster. Large, complex tasks should use run for much longer - OpenAI report Codex crunching for seven hours in some cases!

- Increased score on their proprietary "code refactoring evaluation" from 33.9% for GPT-5 (high) to 51.3% for GPT-5-Codex (high). It's hard to evaluate this without seeing the details of the eval but it does at least illustrate that refactoring performance is something they've focused on here.

- "GPT‑5-Codex also shows significant improvements in human preference evaluations when creating mobile websites" - in the past I've habitually prompted models to "make it mobile-friendly", maybe I don't need to do that any more.

- "We find that comments by GPT‑5-Codex are less likely to be incorrect or unimportant" - I originally misinterpreted this as referring to comments in code but it's actually about comments left on code reviews.

The system prompt for GPT-5-Codex in Codex CLI is worth a read. It's notably shorter than the system prompt for other models - here's a diff.

Here's the section of the updated system prompt that talks about comments:

Add succinct code comments that explain what is going on if code is not self-explanatory. You should not add comments like "Assigns the value to the variable", but a brief comment might be useful ahead of a complex code block that the user would otherwise have to spend time parsing out. Usage of these comments should be rare.

Theo Browne has a video review of the model and accompanying features. He was generally impressed but noted that it was surprisingly bad at using the Codex CLI search tool to navigate code. Hopefully that's something that can fix with a system prompt update.

Finally, can it drew a pelican riding a bicycle? Without API access I instead got Codex Cloud to have a go by prompting:

Generate an SVG of a pelican riding a bicycle, save as pelican.svg

Here's the result:

Load Llama-3.2 WebGPU in your browser from a local folder (via) Inspired by a comment on Hacker News I decided to see if it was possible to modify the transformers.js-examples/tree/main/llama-3.2-webgpu Llama 3.2 chat demo (online here, I wrote about it last November) to add an option to open a local model file directly from a folder on disk, rather than waiting for it to download over the network.

I posed the problem to OpenAI's GPT-5-enabled Codex CLI like this:

git clone https://github.com/huggingface/transformers.js-examples

cd transformers.js-examples/llama-3.2-webgpu

codex

Then this prompt:

Modify this application such that it offers the user a file browse button for selecting their own local copy of the model file instead of loading it over the network. Provide a "download model" option too.

Codex churned away for several minutes, even running commands like curl -sL https://raw.githubusercontent.com/huggingface/transformers.js/main/src/models.js | sed -n '1,200p' to inspect the source code of the underlying Transformers.js library.

After four prompts total (shown here) it built something which worked!

To try it out you'll need your own local copy of the Llama 3.2 ONNX model. You can get that (a ~1.2GB) download) like so:

git lfs install

git clone https://huggingface.co/onnx-community/Llama-3.2-1B-Instruct-q4f16

Then visit my llama-3.2-webgpu page in Chrome or Firefox Nightly (since WebGPU is required), click "Browse folder", select that folder you just cloned, agree to the "Upload" confirmation (confusing since nothing is uploaded from your browser, the model file is opened locally on your machine) and click "Load local model".

Here's an animated demo (recorded in real-time, I didn't speed this up):

I pushed a branch with those changes here. The next step would be to modify this to support other models in addition to the Llama 3.2 demo, but I'm pleased to have got to this proof of concept with so little work beyond throwing some prompts at Codex to see if it could figure it out.

According to the Codex /status command this used 169,818 input tokens, 17,112 output tokens and 1,176,320 cached input tokens. At current GPT-5 token pricing ($1.25/million input, $0.125/million cached input, $10/million output) that would cost 53.942 cents, but Codex CLI hooks into my existing $20/month ChatGPT Plus plan so this was bundled into that.

If you've been experimenting with OpenAI's Codex CLI and have been frustrated that it's not possible to select text and copy it to the clipboard, at least when running in the Mac terminal (I genuinely didn't know it was possible to build a terminal app that disabled copy and paste) you should know that they fixed that in this issue last week.

The new 0.20.0 version from three days ago also completely removes the old TypeScript codebase in favor of Rust. Even installations via NPM now get the Rust version.

I originally installed Codex via Homebrew, so I had to run this command to get the updated version:

brew upgrade codex

Another Codex tip: to use GPT-5 (or any other specific OpenAI model) you can run it like this:

export OPENAI_DEFAULT_MODEL="gpt-5"

codex

This no longer works, see update below.

I've been using a codex-5 script on my PATH containing this, because sometimes I like to live dangerously!

#!/usr/bin/env zsh

# Usage: codex-5 [additional args passed to `codex`]

export OPENAI_DEFAULT_MODEL="gpt-5"

exec codex --dangerously-bypass-approvals-and-sandbox "$@"

Update: It looks like GPT-5 is the default model in v0.20.0 already.

Also the environment variable I was using no longer does anything, it was removed in this commit (I used Codex Web to help figure that out). You can use the -m model_id command-line option instead.

openai/codex. Just released by OpenAI, a "lightweight coding agent that runs in your terminal". Looks like their version of Claude Code, though unlike Claude Code Codex is released under an open source (Apache 2) license.

Here's the main prompt that runs in a loop, which starts like this:

You are operating as and within the Codex CLI, a terminal-based agentic coding assistant built by OpenAI. It wraps OpenAI models to enable natural language interaction with a local codebase. You are expected to be precise, safe, and helpful.

You can:

- Receive user prompts, project context, and files.

- Stream responses and emit function calls (e.g., shell commands, code edits).

- Apply patches, run commands, and manage user approvals based on policy.

- Work inside a sandboxed, git-backed workspace with rollback support.

- Log telemetry so sessions can be replayed or inspected later.

- More details on your functionality are available at codex --help

The Codex CLI is open-sourced. Don't confuse yourself with the old Codex language model built by OpenAI many moons ago (this is understandably top of mind for you!). Within this context, Codex refers to the open-source agentic coding interface. [...]

I like that the prompt describes OpenAI's previous Codex language model as being from "many moons ago". Prompt engineering is so weird.

Since the prompt says that it works "inside a sandboxed, git-backed workspace" I went looking for the sandbox. On macOS it uses the little-known sandbox-exec process, part of the OS but grossly under-documented. The best information I've found about it is this article from 2020, which notes that man sandbox-exec lists it as deprecated. I didn't spot evidence in the Codex code of sandboxes for other platforms.