August 2025

85 posts: 11 entries, 47 links, 21 quotes, 6 notes

Aug. 9, 2025

You know what else we noticed in the interviews? Developers rarely mentioned “time saved” as the core benefit of working in this new way with agents. They were all about increasing ambition. We believe that means that we should update how we talk about (and measure) success when using these tools, and we should expect that after the initial efficiency gains our focus will be on raising the ceiling of the work and outcomes we can accomplish, which is a very different way of interpreting tool investments.

— Thomas Dohmke, CEO, GitHub

The issue with GPT-5 in a nutshell is that unless you pay for model switching & know to use GPT-5 Thinking or Pro, when you ask “GPT-5” you sometimes get the best available AI & sometimes get one of the worst AIs available and it might even switch within a single conversation.

— Ethan Mollick, highlighting that GPT-5 (high) ranks top on Artificial Analysis, GPT-5 (minimal) ranks lower than GPT-4.1

Aug. 10, 2025

the percentage of users using reasoning models each day is significantly increasing; for example, for free users we went from <1% to 7%, and for plus users from 7% to 24%.

— Sam Altman, revealing quite how few people used the old model picker to upgrade from GPT-4o

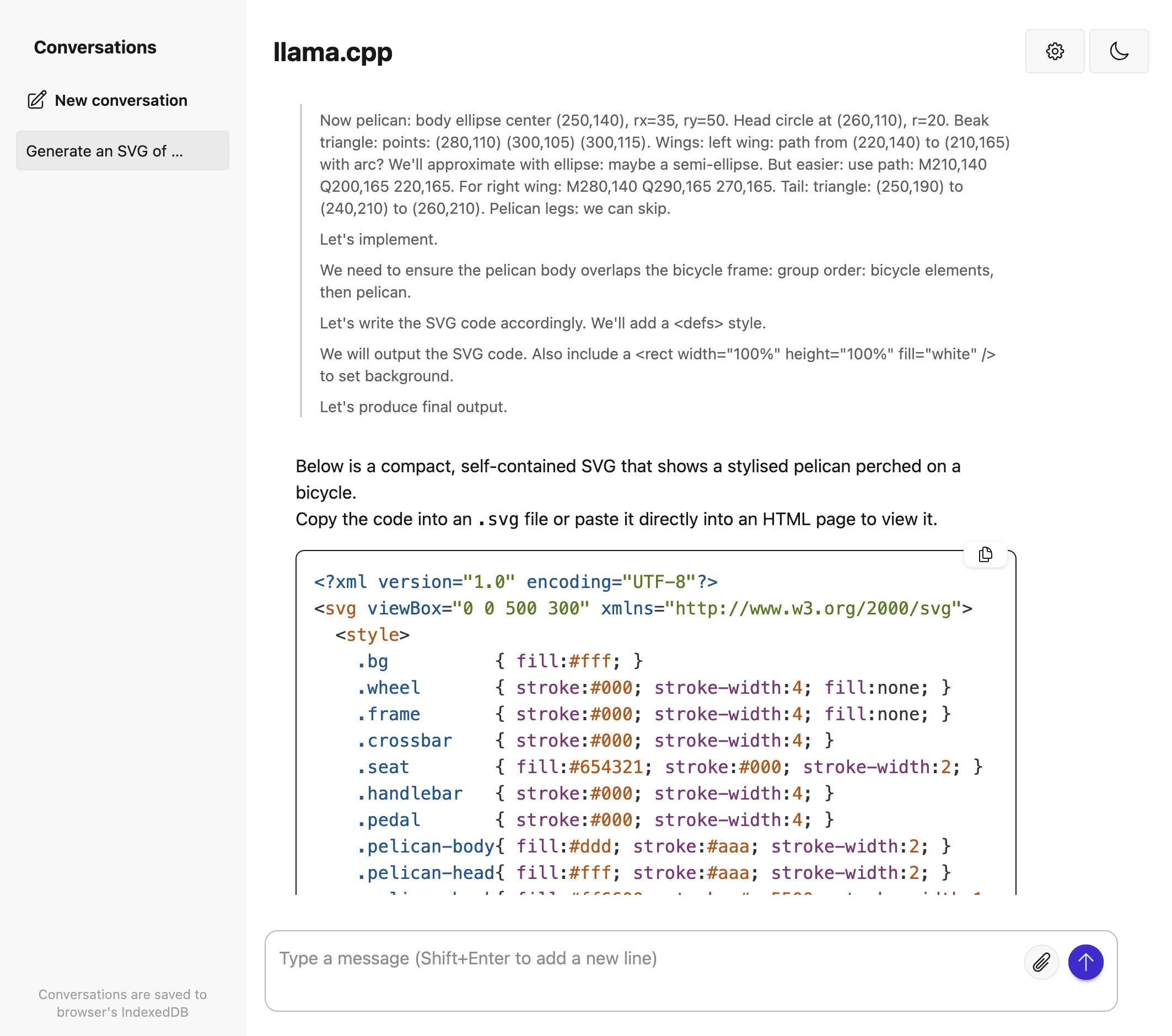

Qwen3-4B-Thinking: “This is art—pelicans don’t ride bikes!”

I’ve fallen a few days behind keeping up with Qwen. They released two new 4B models last week: Qwen3-4B-Instruct-2507 and its thinking equivalent Qwen3-4B-Thinking-2507.

[... 991 words]

Aug. 11, 2025

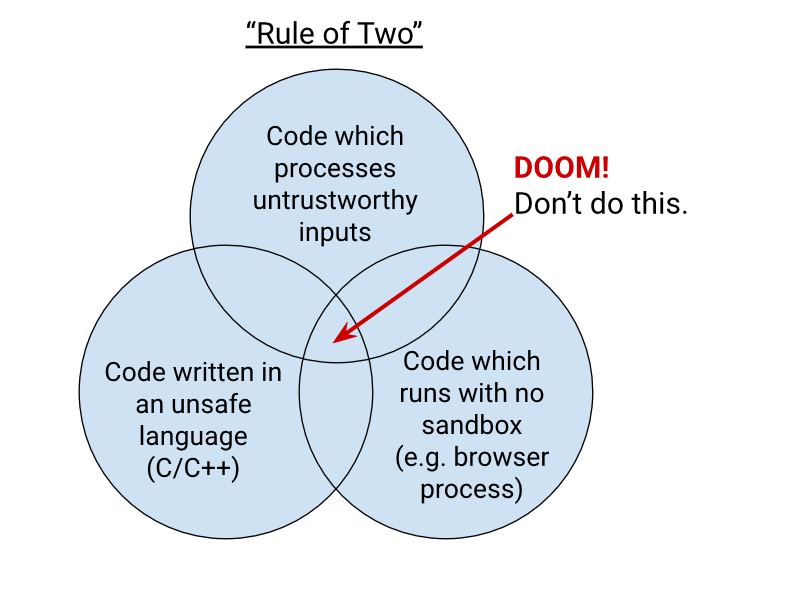

Chromium Docs: The Rule Of 2. Alex Russell pointed me to this principle in the Chromium security documentation as similar to my description of the lethal trifecta. First added in 2019, the Chromium guideline states:

When you write code to parse, evaluate, or otherwise handle untrustworthy inputs from the Internet — which is almost everything we do in a web browser! — we like to follow a simple rule to make sure it's safe enough to do so. The Rule Of 2 is: Pick no more than 2 of

- untrustworthy inputs;

- unsafe implementation language; and

- high privilege.

Chromium uses this design pattern to help try to avoid the high severity memory safety bugs that come when untrustworthy inputs are handled by code running at high privilege.

Chrome Security Team will generally not approve landing a CL or new feature that involves all 3 of untrustworthy inputs, unsafe language, and high privilege. To solve this problem, you need to get rid of at least 1 of those 3 things.
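The rule reduces to counting risk factors per component. Here's a minimal sketch of that check; the `Component` class and its field names are hypothetical, made up for illustration, and are not part of any Chromium tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """Hypothetical description of one piece of code under review."""
    name: str
    untrustworthy_input: bool
    unsafe_language: bool
    high_privilege: bool

    def risk_count(self) -> int:
        # Booleans sum as 0/1, so this counts how many of the three apply.
        return sum(
            [self.untrustworthy_input, self.unsafe_language, self.high_privilege]
        )

    def follows_rule_of_2(self) -> bool:
        # "Pick no more than 2 of" the three properties.
        return self.risk_count() <= 2


# A parser written in an unsafe language, handling untrusted input,
# running with high privilege: all three, so it breaks the rule.
risky = Component("image-decoder", True, True, True)

# The same parser moved into a low-privilege sandbox drops one factor.
sandboxed = Component("image-decoder-sandboxed", True, True, False)
```

Sandboxing is only one of the three ways out: the same count drops if the input becomes trustworthy or the code moves to a memory-safe language.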

AI for data engineers with Simon Willison. I recorded an episode last week with Claire Giordano for the Talking Postgres podcast. The topic was "AI for data engineers" but we ended up covering an enjoyable range of different topics.

- How I got started programming with a Commodore 64 - the tape drive for which inspired the name Datasette

- Selfish motivations for TILs (force me to write up my notes) and open source (help me never have to solve the same problem twice)

- LLMs have been good at SQL for a couple of years now. Here's how I used them for a complex PostgreSQL query that extracted alt text from my blog's images using regular expressions

- Structured data extraction as the most economically valuable application of LLMs for data work

- 2025 has been the year of tool calling in a loop ("agentic" if you like)

- Thoughts on running MCPs securely - read-only database access, think about sandboxes, use PostgreSQL permissions, watch out for the lethal trifecta

- Jargon guide: Agents, MCP, RAG, Tokens

- How to get started learning to prompt: play with the models and "bring AI to the table" even for tasks that you don't think it can handle

- "It's always a good day if you see a pelican"

qwen-image-mps (via) Ivan Fioravanti built this Python CLI script for running the Qwen/Qwen-Image image generation model on an Apple silicon Mac, optionally using the Qwen-Image-Lightning LoRA to dramatically speed up generation.

Ivan has tested this on 512GB and 128GB machines and it ran really fast - 42 seconds on his M3 Ultra. I've run it on my 64GB M2 MacBook Pro - after quitting almost everything else - and it just about manages to output images after pegging my GPU (fans whirring, keyboard heating up) and occupying 60GB of my available RAM. With the LoRA option, running the script to generate an image took 9m7s on my machine.

Ivan merged my PR adding inline script dependencies for uv which means you can now run it like this:

uv run https://raw.githubusercontent.com/ivanfioravanti/qwen-image-mps/refs/heads/main/qwen-image-mps.py \

-p 'A vintage coffee shop full of raccoons, in a neon cyberpunk city' -f

The first time I ran this it downloaded the 57.7GB model from Hugging Face and stored it in my ~/.cache/huggingface/hub/models--Qwen--Qwen-Image directory. The -f option fetched an extra 1.7GB Qwen-Image-Lightning-8steps-V1.0.safetensors file to my working directory that sped up the generation.
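Inline script dependencies are a comment block at the top of a Python file, which is what lets `uv run` work against a bare URL. Here's a rough sketch of what such a block looks like and how it can be pulled out of a script. The `rich` dependency and the example script are placeholders, not the actual contents of `qwen-image-mps.py`, and the regular expression is a simplified take on the format rather than uv's own parser.

```python
import re

# A made-up script with an inline metadata block at the top.
EXAMPLE_SCRIPT = '''\
# /// script
# requires-python = ">=3.11"
# dependencies = [
#     "rich",
# ]
# ///
print("hello")
'''

# Match "# /// script", then one or more comment lines, then a closing "# ///".
METADATA_BLOCK = re.compile(
    r"(?m)^# /// script$\n((?:^#(?: .*)?$\n)+?)^# ///$"
)


def inline_metadata(source):
    """Return the TOML text from the inline block, or None if there is none."""
    match = METADATA_BLOCK.search(source)
    if match is None:
        return None
    # Strip the leading "# " (or bare "#") from every line of the block.
    return "\n".join(line[2:] for line in match.group(1).splitlines())


print(inline_metadata(EXAMPLE_SCRIPT))
```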

Here's the resulting image:

If you've been experimenting with OpenAI's Codex CLI and have been frustrated that it's not possible to select text and copy it to the clipboard, at least when running in the Mac terminal (I genuinely didn't know it was possible to build a terminal app that disabled copy and paste) you should know that they fixed that in this issue last week.

The new 0.20.0 version from three days ago also completely removes the old TypeScript codebase in favor of Rust. Even installations via NPM now get the Rust version.

I originally installed Codex via Homebrew, so I had to run this command to get the updated version:

brew upgrade codex

Another Codex tip: to use GPT-5 (or any other specific OpenAI model) you can run it like this:

export OPENAI_DEFAULT_MODEL="gpt-5"

codex

This no longer works, see update below.

I've been using a codex-5 script on my PATH containing this, because sometimes I like to live dangerously!

#!/usr/bin/env zsh

# Usage: codex-5 [additional args passed to `codex`]

export OPENAI_DEFAULT_MODEL="gpt-5"

exec codex --dangerously-bypass-approvals-and-sandbox "$@"

Update: It looks like GPT-5 is the default model in v0.20.0 already.

Also, the environment variable I was using no longer does anything: it was removed in this commit (I used Codex Web to help figure that out). You can use the -m model_id command-line option instead.

Reddit will block the Internet Archive. Well this sucks. Jay Peters for the Verge:

Reddit says that it has caught AI companies scraping its data from the Internet Archive’s Wayback Machine, so it’s going to start blocking the Internet Archive from indexing the vast majority of Reddit. The Wayback Machine will no longer be able to crawl post detail pages, comments, or profiles; instead, it will only be able to index the Reddit.com homepage, which effectively means Internet Archive will only be able to archive insights into which news headlines and posts were most popular on a given day.

LLM 0.27, the annotated release notes: GPT-5 and improved tool calling

I shipped LLM 0.27 today (followed by a 0.27.1 with minor bug fixes), adding support for the new GPT-5 family of models from OpenAI plus a flurry of improvements to the tool calling features introduced in LLM 0.26. Here are the annotated release notes.

[... 1,174 words]

Aug. 12, 2025

I think there's been a lot of decisions over time that proved pretty consequential, but we made them very quickly as we have to. [...]

[On pricing] I had this kind of panic attack because we really needed to launch subscriptions because at the time we were taking the product down all the time. [...]

So what I did do is ship a Google Form to Discord with the four questions you're supposed to ask on how to price something.

But we got with the $20. We were debating something slightly higher at the time. I often wonder what would have happened because so many other companies ended up copying the $20 price point, so did we erase a bunch of market cap by pricing it this way?

— Nick Turley, Head of ChatGPT, interviewed by Lenny Rachitsky

Claude Sonnet 4 now supports 1M tokens of context (via) Gemini and OpenAI both have million token models, so it's good to see Anthropic catching up. This is 5x the previous 200,000 context length limit of the various Claude Sonnet models.

Anthropic have previously made 1 million tokens available to select customers. From the Claude 3 announcement in March 2024:

The Claude 3 family of models will initially offer a 200K context window upon launch. However, all three models are capable of accepting inputs exceeding 1 million tokens and we may make this available to select customers who need enhanced processing power.

This is also the first time I've seen Anthropic use prices that vary depending on context length:

- Prompts ≤ 200K: $3/million input, $15/million output

- Prompts > 200K: $6/million input, $22.50/million output

Gemini have been doing this for a while: Gemini 2.5 Pro is $1.25/$10 below 200,000 tokens and $2.50/$15 above 200,000.
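To see what the two tiers mean for a bill, here's a small cost calculator using the Sonnet 4 prices listed above. It assumes the tier is chosen by the number of input tokens in the prompt and that the higher rate then applies to the whole request; that's my reading of the price list, so check Anthropic's pricing page before relying on it.

```python
# Prices in dollars per million tokens: (input, output).
SONNET_4_PRICES = {
    "standard": (3.00, 15.00),   # prompts <= 200K tokens
    "long": (6.00, 22.50),       # prompts > 200K tokens
}
LONG_CONTEXT_THRESHOLD = 200_000


def sonnet_4_cost(input_tokens, output_tokens):
    """Estimated cost in dollars for a single request."""
    # Assumption: the prompt size alone decides which tier is billed.
    tier = "long" if input_tokens > LONG_CONTEXT_THRESHOLD else "standard"
    input_price, output_price = SONNET_4_PRICES[tier]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


print(sonnet_4_cost(100_000, 1_000))  # 100K prompt, standard tier
print(sonnet_4_cost(500_000, 1_000))  # 500K prompt, long-context tier
```

Under those assumptions a 100K-token prompt with 1,000 output tokens comes to about $0.32, while a 500K-token prompt with the same output comes to about $3.02.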

Here's Anthropic's full documentation on the 1m token context window. You need to send a context-1m-2025-08-07 beta header in your request to enable it.
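Here's a sketch of a request with that beta value attached, built with the standard library and not actually sent. Only the `context-1m-2025-08-07` value comes from the announcement; the `anthropic-beta` header name, the endpoint URL, the `anthropic-version` value and the model ID are my assumptions about the Anthropic Messages API and should be checked against the documentation.

```python
import json
import urllib.request


def build_long_context_request(api_key, prompt, model="claude-sonnet-4-20250514"):
    """Build (but do not send) a Messages API request with 1M context enabled."""
    body = {
        "model": model,  # assumed model ID, not taken from the announcement
        "max_tokens": 1024,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        "https://api.anthropic.com/v1/messages",  # assumed endpoint
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",  # assumed API version
            "anthropic-beta": "context-1m-2025-08-07",  # from the announcement
            "content-type": "application/json",
        },
        method="POST",
    )


request = build_long_context_request("sk-placeholder", "Summarize this codebase...")
```

Passing `request` to `urllib.request.urlopen()` would send it, given a real API key.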

Note that this is currently restricted to "tier 4" users who have purchased at least $400 in API credits:

Long context support for Sonnet 4 is now in public beta on the Anthropic API for customers with Tier 4 and custom rate limits, with broader availability rolling out over the coming weeks.

Aug. 13, 2025

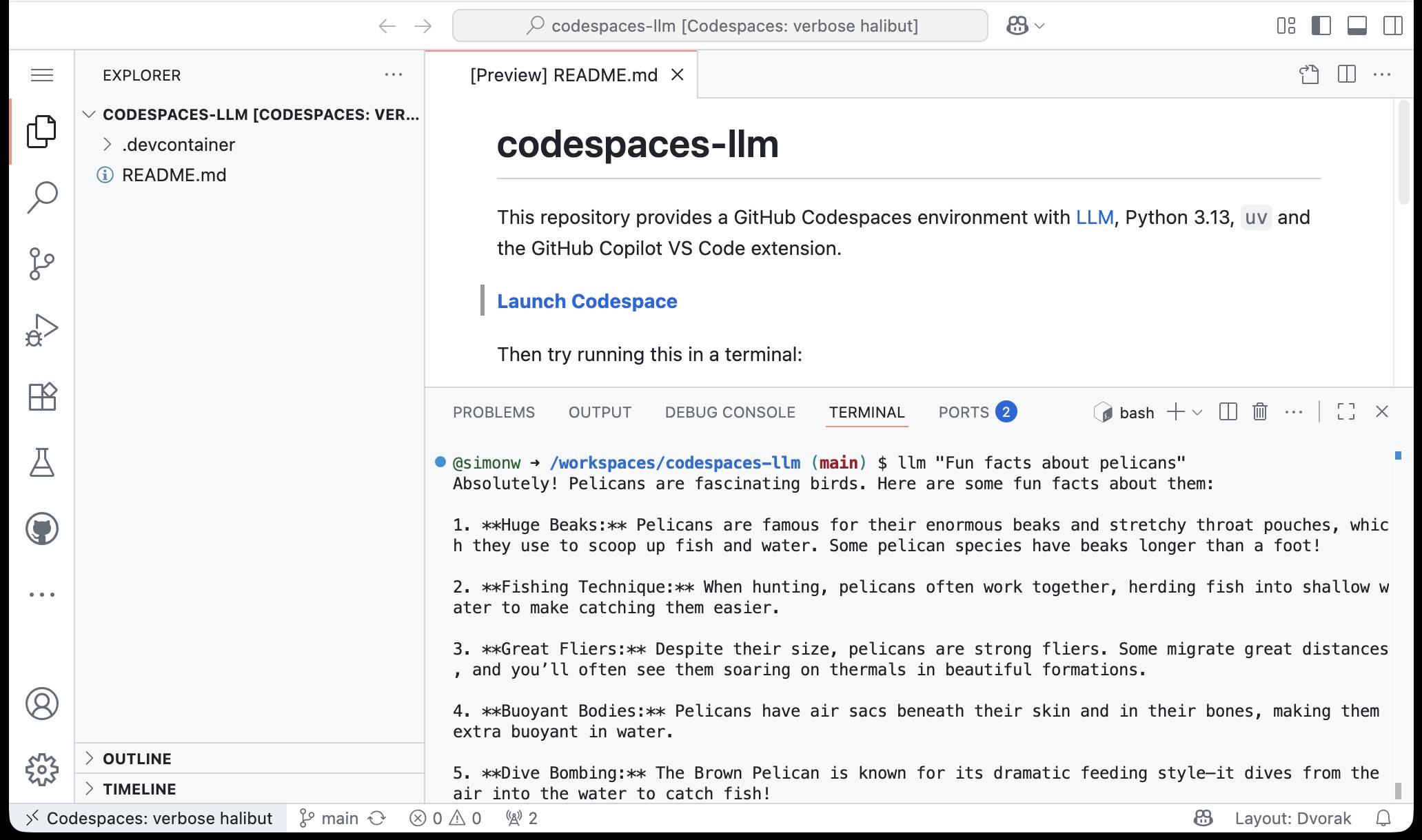

simonw/codespaces-llm. GitHub Codespaces provides full development environments in your browser, and is free to use for anyone with a GitHub account. Each environment has a full Linux container and a browser-based UI using VS Code.

I found out today that GitHub Codespaces come with a GITHUB_TOKEN environment variable... and that token works as an API key for accessing LLMs in the GitHub Models collection, which includes dozens of models from OpenAI, Microsoft, Mistral, xAI, DeepSeek, Meta and more.

Anthony Shaw's llm-github-models plugin for my LLM tool allows it to talk directly to GitHub Models. I filed a suggestion that it could pick up that GITHUB_TOKEN variable automatically and Anthony shipped v0.18.0 with that feature a few hours later.

... which means you can now run the following in any Python-enabled Codespaces container and get a working llm command:

pip install llm

llm install llm-github-models

llm models default github/gpt-4.1

llm "Fun facts about pelicans"

Setting the default model to github/gpt-4.1 means you get free (albeit rate-limited) access to that OpenAI model.

To save you from needing to even run that sequence of commands I've created a new GitHub repository, simonw/codespaces-llm, which pre-installs and runs those commands for you.

Anyone with a GitHub account can use this URL to launch a new Codespaces instance with a configured llm terminal command ready to use:

codespaces.new/simonw/codespaces-llm?quickstart=1

While putting this together I wrote up what I've learned about devcontainers so far as a TIL: Configuring GitHub Codespaces using devcontainers.

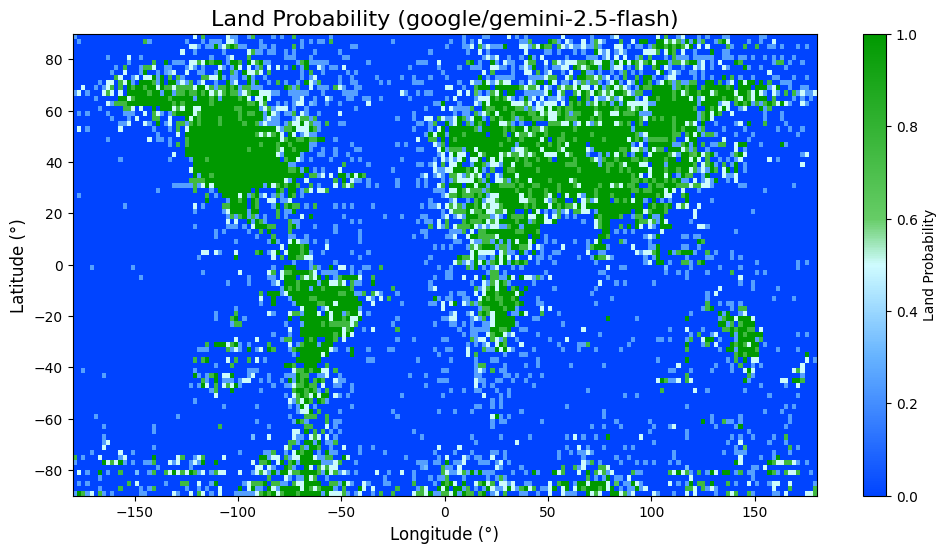

How Does A Blind Model See The Earth? (via) Fun, creative new micro-eval. Split the world into a sampled collection of latitude longitude points and for each one ask a model:

If this location is over land, say 'Land'. If this location is over water, say 'Water'. Do not say anything else.

Author henry goes a step further: for models that expose logprobs they use the relative probability scores of Land or Water to get a confidence level, for other models they prompt four times at temperature 1 to get a score.
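Both scoring approaches boil down to turning model output into a single "probability of land" number per coordinate. Here's a minimal sketch of the arithmetic, not the author's actual code: the function names are invented, and normalizing over just the two tokens is my assumption about what "relative probability" means here.

```python
import math


def land_probability_from_logprobs(land_logprob, water_logprob):
    """Relative probability of 'Land' given log probabilities for both tokens."""
    land = math.exp(land_logprob)
    water = math.exp(water_logprob)
    # Normalize over just these two options, ignoring any other tokens.
    return land / (land + water)


def land_probability_from_samples(answers):
    """Fraction of sampled answers that said 'Land' (e.g. 4 samples at temperature 1)."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    votes = [answer.strip().lower() for answer in answers]
    return votes.count("land") / len(votes)


# A model that puts 60% on "Land" and 20% on "Water" (rest on other tokens).
print(land_probability_from_logprobs(math.log(0.6), math.log(0.2)))

# Four samples from a model without logprobs.
print(land_probability_from_samples(["Land", "Water", "Land", "Land"]))
```

With only four samples the second method can produce just five distinct scores (0, 0.25, 0.5, 0.75, 1), so charts built that way will be coarser than the logprob ones.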

And then.. they plot those probabilities on a chart! Here's Gemini 2.5 Flash (one of the better results):

This reminds me of my pelican riding a bicycle benchmark in that it gives you an instant visual representation that's very easy to compare between different models.

Screaming in the Cloud: AI’s Security Crisis: Why Your Assistant Might Betray You. I recorded this podcast conversation with Corey Quinn a few weeks ago:

On this episode of Screaming in the Cloud, Corey Quinn talks with Simon Willison, founder of Datasette and creator of LLM CLI about AI’s realities versus the hype. They dive into Simon’s “lethal trifecta” of AI security risks, his prediction of a major breach within six months, and real-world use cases of his open source tools, from investigative journalism to OSINT sleuthing. Simon shares grounded insights on coding with AI, the real environmental impact, AGI skepticism, and why human expertise still matters. A candid, hype-free take from someone who truly knows the space.

This was a really fun conversation - very high energy and we covered a lot of different topics. It's about a lot more than just LLM security.

pyx: a Python-native package registry, now in Beta (via) Since its first release, the single biggest question around the uv Python environment management tool has been around Astral's business model: Astral are a VC-backed company and at some point they need to start making real revenue.

Back in September Astral founder Charlie Marsh said the following:

I don't want to charge people money to use our tools, and I don't want to create an incentive structure whereby our open source offerings are competing with any commercial offerings (which is what you see with a lot of hosted-open-source-SaaS business models).

What I want to do is build software that vertically integrates with our open source tools, and sell that software to companies that are already using Ruff, uv, etc. Alternatives to things that companies already pay for today.

An example of what this might look like (we may not do this, but it's helpful to have a concrete example of the strategy) would be something like an enterprise-focused private package registry. [...]

It looks like those plans have become concrete now! From today's announcement:

TL;DR: pyx is a Python-native package registry --- and the first piece of the Astral platform, our next-generation infrastructure for the Python ecosystem.

We think of pyx as an optimized backend for uv: it's a package registry, but it also solves problems that go beyond the scope of a traditional "package registry", making your Python experience faster, more secure, and even GPU-aware, both for private packages and public sources (like PyPI and the PyTorch index).

pyx is live with our early partners, including Ramp, Intercom, and fal [...]

This looks like a sensible direction to me, and one that stays true to Charlie's promises to carefully design the incentive structure to avoid corrupting the core open source project that the Python community is coming to depend on.

Aug. 14, 2025

Introducing Gemma 3 270M: The compact model for hyper-efficient AI (via) New from Google:

Gemma 3 270M, a compact, 270-million parameter model designed from the ground up for task-specific fine-tuning with strong instruction-following and text structuring capabilities already trained in.

This model is tiny. The version I tried was the LM Studio GGUF one, a 241MB download.

It works! You can say "hi" to it and ask it very basic questions like "What is the capital of France".

I tried "Generate an SVG of a pelican riding a bicycle" about a dozen times and didn't once get back an SVG that was more than just a blank square... but at one point it did decide to write me this poem instead, which was nice:

+-----------------------+

| Pelican Riding Bike |

+-----------------------+

| This is the cat! |

| He's got big wings and a happy tail. |

| He loves to ride his bike! |

+-----------------------+

| Bike lights are shining bright. |

| He's got a shiny top, too! |

| He's ready for adventure! |

+-----------------------+

That's not really the point though. The Gemma 3 team make it very clear that the goal of this model is to support fine-tuning: a model this tiny is never going to be useful for general purpose LLM tasks, but given the right fine-tuning data it should be able to specialize for all sorts of things:

In engineering, success is defined by efficiency, not just raw power. You wouldn't use a sledgehammer to hang a picture frame. The same principle applies to building with AI.

Gemma 3 270M embodies this "right tool for the job" philosophy. It's a high-quality foundation model that follows instructions well out of the box, and its true power is unlocked through fine-tuning. Once specialized, it can execute tasks like text classification and data extraction with remarkable accuracy, speed, and cost-effectiveness. By starting with a compact, capable model, you can build production systems that are lean, fast, and dramatically cheaper to operate.

Here's their tutorial on Full Model Fine-Tune using Hugging Face Transformers, which I have not yet attempted to follow.

I imagine this model will be particularly fun to play with directly in a browser using transformers.js.

Update: It is! Here's a bedtime story generator using Transformers.js (requires WebGPU, so Chrome-like browsers only). Here's the source code for that demo.

NERD HARDER! is the answer every time a politician gets a technological idée-fixe about how to solve a social problem by creating a technology that can't exist. It's the answer that EU politicians who backed the catastrophic proposal to require copyright filters for all user-generated content came up with, when faced with objections that these filters would block billions of legitimate acts of speech [...]

When politicians seize on a technological impossibility as a technological necessity, they flail about and desperately latch onto scholarly work that they can brandish as evidence that their idea could be accomplished. [...]

That's just happened, and in relation to one of the scariest, most destructive NERD HARDER! tech policies ever to be assayed (a stiff competition). I'm talking about the UK Online Safety Act, which imposes a duty on websites to verify the age of people they communicate with before serving them anything that could be construed as child-inappropriate (a category that includes, e.g., much of Wikipedia)

— Cory Doctorow, "Privacy preserving age verification" is bullshit

Aug. 15, 2025

I gave all my Apple wealth away because wealth and power are not what I live for. I have a lot of fun and happiness. I funded a lot of important museums and arts groups in San Jose, the city of my birth, and they named a street after me for being good. I now speak publicly and have risen to the top. I have no idea how much I have but after speaking for 20 years it might be $10M plus a couple of homes. I never look for any type of tax dodge. I earn money from my labor and pay something like 55% combined tax on it. I am the happiest person ever. Life to me was never about accomplishment, but about Happiness, which is Smiles minus Frowns. I developed these philosophies when I was 18-20 years old and I never sold out.

— Steve Wozniak, in a comment on Slashdot

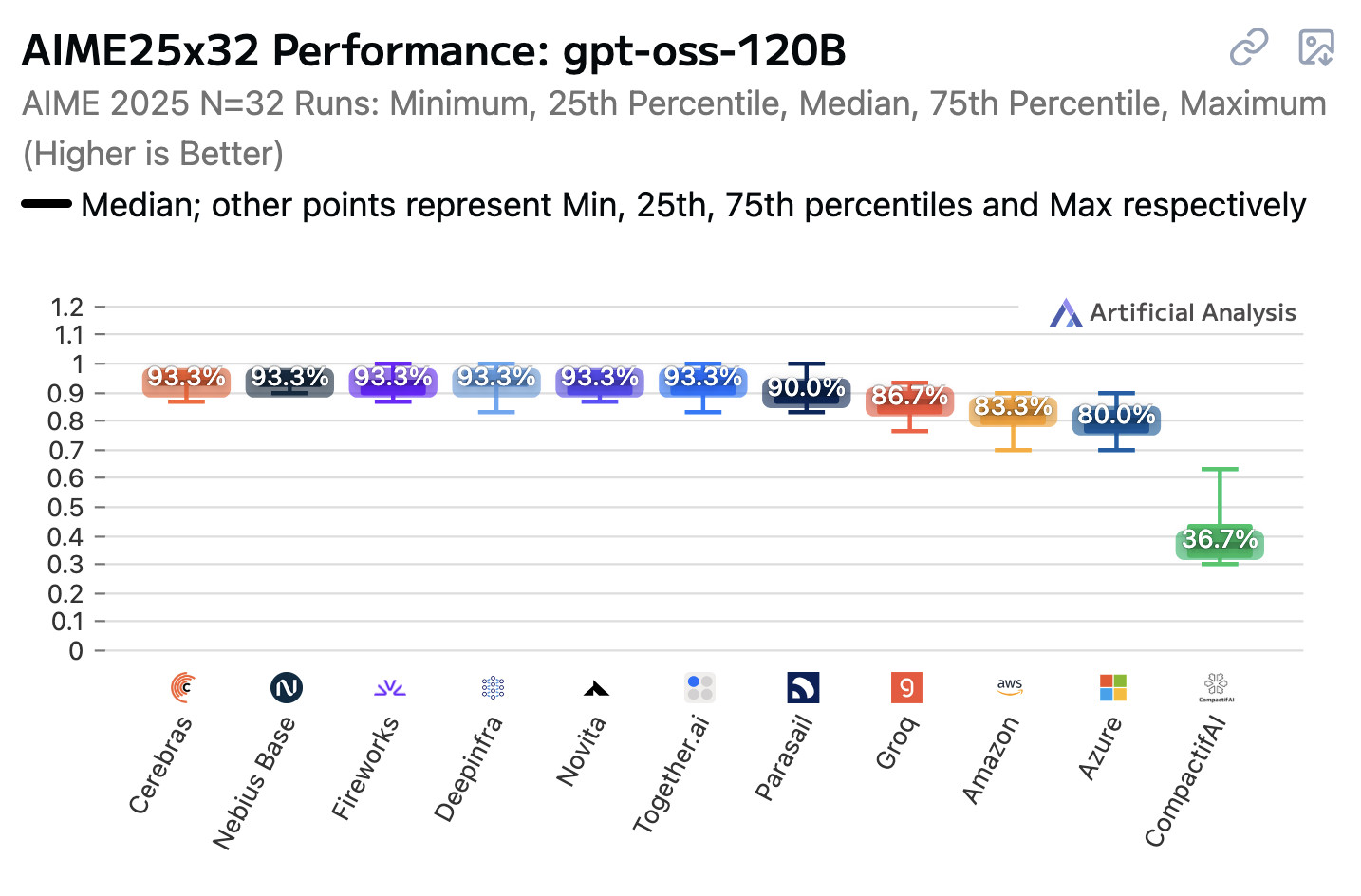

Open weight LLMs exhibit inconsistent performance across providers

Artificial Analysis published a new benchmark the other day, this time focusing on how an individual model—OpenAI’s gpt-oss-120b—performs across different hosted providers.

[... 847 words]

Meta’s AI rules have let bots hold ‘sensual’ chats with kids, offer false medical info. This is grim. Reuters got hold of a leaked copy of Meta's internal "GenAI: Content Risk Standards" document:

Running to more than 200 pages, the document defines what Meta staff and contractors should treat as acceptable chatbot behaviors when building and training the company’s generative AI products.

Read the full story - there was some really nasty stuff in there.

It's understandable why this document was confidential, but also frustrating because documents like this are genuinely some of the best documentation out there in terms of how these systems can be expected to behave.

I'd love to see more transparency from AI labs around these kinds of decisions.

The Summer of Johann: prompt injections as far as the eye can see

Independent AI researcher Johann Rehberger (previously) has had an absurdly busy August. Under the heading The Month of AI Bugs he has been publishing one report per day across an array of different tools, all of which are vulnerable to various classic prompt injection problems. This is a fantastic and horrifying demonstration of how widespread and dangerous these vulnerabilities still are, almost three years after we first started talking about them.

[... 1,425 words]

GPT-5 has a hidden system prompt. It looks like GPT-5 when accessed via the OpenAI API may have its own hidden system prompt, independent from the system prompt you can specify in an API call.

At the very least it's getting sent the current date. I tried this just now:

llm -m gpt-5 'current date'

That returned "2025-08-15", confirming that the date has been fed to the model as part of a hidden prompt.

llm -m gpt-5 'current date' --system 'speak french'

Returned "La date actuelle est le 15 août 2025", showing that offering my own custom system prompt did not over-ride the invisible one that includes the date.

GPT-5 is very resistant to sharing the details of this secret system prompt, but Tommy Hughes managed to extract the following:

Current date: 2025-08-15

You are an AI assistant accessed via an API. Your output may need to be parsed by code or displayed

# Desired oververbosity for the final answer (not analysis): 3

An oververbosity of 1 means the model should respond using only the minimal content necessary to satisfy the request, using concise phrasing and avoiding extra detail or explanation.

An oververbosity of 10 means the model should provide maximally detailed, thorough responses with context, explanations, and possibly multiple examples.

The desired oververbosity should be treated only as a *default*. Defer to any user or developer requirements regarding response length, if present.

# Valid channels: analysis, commentary, final. Channel must be included for every message.

# Juice: 64

I've not been able to confirm this myself, but a search for "desired oververbosity" on GitHub shows that a similar prompt has leaked from ChatGPT (including for o3) in the past. I suspect Tommy's leaked prompt is accurate here.

I tried asking GPT-5 about "oververbosity" via its API:

llm -m gpt-5 'explain your oververbosity settings, what is it right now?'

And got back:

- What it is: I adjust how detailed I am based on a “verbosity” level—ranging from very brief to very detailed—so answers fit your needs.

- Current setting: Concise (about 3/10). I prioritize direct answers with minimal extra context and keep explanations short unless you ask for more.

- How to change it: Just say what you want, e.g.:

- “One-sentence answer” or “TL;DR” (very brief)

- “Give a concise summary” (short bullets)

- “Walk me through in detail,” “Deep dive,” or “Include examples/edge cases” (more detailed)

- Note: For ambiguous, technical, or safety-critical topics, I may add brief clarifications even when being concise.

Presumably this is part of OpenAI's instruction hierarchy concept, with these instructions taking precedence over the developer instructions provided by API users (my --system 'speak french' option above).

I'd very much appreciate official documentation that describes this! As an API user I want to know everything that is being fed into the model - I would be much more comfortable with a hidden prompt like this if I knew exactly what was in it.

Aug. 16, 2025

Maintainers of Last Resort (via) Filippo Valsorda founded Geomys last year as an "organization of professional open source maintainers", providing maintenance and support for critical packages in the Go language ecosystem backed by clients in retainer relationships.

This is an inspiring and optimistic shape for financially sustaining key open source projects, and it appears to be working really well.

Most recently, Geomys have started acting as a "maintainer of last resort" for security-related Go projects in need of new maintainers. In this piece Filippo describes their work on the bluemonday HTML sanitization library - similar to Python’s bleach which was deprecated in 2023. He also talks at length about their work on CSRF for Go after gorilla/csrf lost active maintenance - I’m still working my way through his earlier post on Cross-Site Request Forgery trying to absorb the research shared there about the best modern approaches to this vulnerability.

Aug. 17, 2025

Most of what we're building out at this point is the inference [...] We're profitable on inference. If we didn't pay for training, we'd be a very profitable company.

— Sam Altman, during a "wide-ranging dinner with a small group of reporters in San Francisco"

TIL: Running a gpt-oss eval suite against LM Studio on a Mac. The other day I learned that OpenAI published a set of evals as part of their gpt-oss model release, described in their cookbook on Verifying gpt-oss implementations.

I decided to try and run that eval suite on my own MacBook Pro, against gpt-oss-20b running inside of LM Studio.

TLDR: once I had the model running inside LM Studio with a longer than default context limit, the following incantation ran an eval suite in around 3.5 hours:

mkdir /tmp/aime25_openai

OPENAI_API_KEY=x \

uv run --python 3.13 --with 'gpt-oss[eval]' \

python -m gpt_oss.evals \

--base-url http://localhost:1234/v1 \

--eval aime25 \

--sampler chat_completions \

--model openai/gpt-oss-20b \

--reasoning-effort low \

--n-threads 2

My new TIL breaks that command down in detail and walks through the underlying eval - AIME 2025, which asks 30 questions (8 times each) that are defined using the following format:

{"question": "Find the sum of all integer bases $b>9$ for which $17_{b}$ is a divisor of $97_{b}$.", "answer": "70"}
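That example question is small enough to check by brute force. In base b, 17 is b + 7 and 97 is 9b + 7. Since 9b + 7 = 9(b + 7) - 56, b + 7 has to divide 56, so no base above 49 can qualify. The sketch below confirms the recorded answer and shows one way a response could be graded; the exact-string comparison is my simplification, not necessarily how the gpt-oss eval suite scores answers.

```python
import json

# The example record, in the same format as the eval data.
record = json.loads(
    '{"question": "Find the sum of all integer bases $b>9$ for which '
    '$17_{b}$ is a divisor of $97_{b}$.", "answer": "70"}'
)


def sum_of_valid_bases(limit=1000):
    """Sum every base b > 9 (below limit) where 17_b divides 97_b."""
    # 17 in base b is b + 7; 97 in base b is 9b + 7.
    return sum(b for b in range(10, limit) if (9 * b + 7) % (b + 7) == 0)


def is_correct(record, model_answer):
    """Simplified grading: compare the answer as a stripped string."""
    return str(model_answer).strip() == record["answer"]


# 30 questions, each asked 8 times.
TOTAL_SAMPLES = 30 * 8

print(sum_of_valid_bases())                       # 70 (bases 21 and 49)
print(is_correct(record, sum_of_valid_bases()))   # True
print(TOTAL_SAMPLES)                              # 240
```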

Aug. 18, 2025

Google Gemini URL Context (via) New feature in the Gemini API: you can now enable a url_context tool which the models can use to request the contents of URLs as part of replying to a prompt.

I released llm-gemini 0.25 with a new -o url_context 1 option adding support for this feature. You can try it out like this:

llm install -U llm-gemini

llm keys set gemini # If you need to set an API key

llm -m gemini-2.5-flash -o url_context 1 \

'Latest headline on simonwillison.net'

Tokens from the fetched content are charged as input tokens. Use llm logs -c --usage to see that token count:

# 2025-08-18T23:52:46 conversation: 01k2zsk86pyp8p5v7py38pg3ge id: 01k2zsk17k1d03veax49532zs2

Model: **gemini/gemini-2.5-flash**

## Prompt

Latest headline on simonwillison.net

## Response

The latest headline on simonwillison.net as of August 17, 2025, is "TIL: Running a gpt-oss eval suite against LM Studio on a Mac.".

## Token usage

9,613 input, 87 output, {"candidatesTokenCount": 57, "promptTokensDetails": [{"modality": "TEXT", "tokenCount": 10}], "toolUsePromptTokenCount": 9603, "toolUsePromptTokensDetails": [{"modality": "TEXT", "tokenCount": 9603}], "thoughtsTokenCount": 30}
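The headline numbers can be rebuilt from that JSON detail. Here's a short check, treating the breakdown as an inference from the figures lining up and not as documented behavior: input appears to be the typed prompt plus the tokens fetched by the tool, and output appears to be the visible response plus thinking tokens.

```python
import json

# The usage detail from the log entry above.
usage = json.loads(
    '{"candidatesTokenCount": 57, '
    '"promptTokensDetails": [{"modality": "TEXT", "tokenCount": 10}], '
    '"toolUsePromptTokenCount": 9603, '
    '"toolUsePromptTokensDetails": [{"modality": "TEXT", "tokenCount": 9603}], '
    '"thoughtsTokenCount": 30}'
)

# Tokens in the prompt I typed.
prompt_tokens = sum(d["tokenCount"] for d in usage["promptTokensDetails"])

# Typed prompt plus the content fetched by the url_context tool.
input_tokens = prompt_tokens + usage["toolUsePromptTokenCount"]

# Visible response plus thinking tokens.
output_tokens = usage["candidatesTokenCount"] + usage["thoughtsTokenCount"]

print(input_tokens, output_tokens)  # 9613 87
```

Nearly all of the input cost here (9,603 of 9,613 tokens) came from the fetched page, not the ten-token prompt.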

I intercepted a request from it using django-http-debug and saw the following request headers:

Accept: */*

User-Agent: Google

Accept-Encoding: gzip, br

The request came from 192.178.9.35, a Google IP. It did not appear to execute JavaScript on the page, instead feeding the original raw HTML to the model.

Aug. 19, 2025

r/ChatGPTPro: What is the most profitable thing you have done with ChatGPT? This Reddit thread - with 279 replies - offers a neat targeted insight into the kinds of things people are using ChatGPT for.

Lots of variety here but two themes that stood out for me were ChatGPT for written negotiation - insurance claims, breaking rental leases - and ChatGPT for career and business advice.

PyPI: Preventing Domain Resurrection Attacks (via) Domain resurrection attacks are a nasty vulnerability in systems that use email verification to allow people to recover their accounts. If somebody lets their domain name expire an attacker might snap it up and use it to gain access to their accounts - which can turn into a package supply chain attack if they had an account on something like the Python Package Index.

PyPI now protects against these by treating an email address as not-validated if the associated domain expires.

Since early June 2025, PyPI has unverified over 1,800 email addresses when their associated domains entered expiration phases. This isn't a perfect solution, but it closes off a significant attack vector where the majority of interactions would appear completely legitimate.

This attack is not theoretical: it happened to the ctx package on PyPI back in May 2022.

Here's the pull request from April in which Mike Fiedler landed an integration which hits an API provided by Fastly's Domainr, followed by this PR which polls for domain status on any email domain that hasn't been checked in the past 30 days.
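The polling logic described there can be sketched in a few lines. This is an illustration of the idea only, not PyPI's implementation: the function names, the `"active"` status string and the shape of the `last_checked` mapping are all hypothetical, and no real domain status API is called.

```python
from datetime import datetime, timedelta, timezone

RECHECK_INTERVAL = timedelta(days=30)


def domains_due_for_check(last_checked, now):
    """Return domains never checked, or not checked in the past 30 days.

    last_checked maps a domain name to a datetime, or None if never checked.
    """
    return sorted(
        domain
        for domain, checked in last_checked.items()
        if checked is None or now - checked >= RECHECK_INTERVAL
    )


def email_is_verified(previously_verified, domain_status):
    """Treat an address as unverified once its domain is no longer active."""
    # "active" is a made-up status value standing in for whatever the
    # real domain status service reports.
    return previously_verified and domain_status == "active"


now = datetime(2025, 8, 19, tzinfo=timezone.utc)
last_checked = {
    "example.com": now - timedelta(days=45),   # stale, due for a check
    "example.org": now - timedelta(days=3),    # checked recently
    "example.net": None,                       # never checked
}
print(domains_due_for_check(last_checked, now))
```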

llama.cpp guide: running gpt-oss with llama.cpp (via) Really useful official guide to running the OpenAI gpt-oss models using llama-server from llama.cpp - which provides an OpenAI-compatible localhost API and a neat web interface for interacting with the models.

TLDR version for macOS to run the smaller gpt-oss-20b model:

brew install llama.cpp

llama-server -hf ggml-org/gpt-oss-20b-GGUF \

--ctx-size 0 --jinja -ub 2048 -b 2048 -ngl 99 -fa

This downloads a 12GB model file from ggml-org/gpt-oss-20b-GGUF on Hugging Face, stores it in ~/Library/Caches/llama.cpp/ and starts it running on port 8080.

You can then visit this URL to start interacting with the model:

http://localhost:8080/

On my 64GB M2 MacBook Pro it runs at around 82 tokens/second.

The guide also includes notes for running on NVIDIA and AMD hardware.
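Because the localhost API is OpenAI-compatible, a script can talk to it using nothing but the standard library. Here's a rough sketch, assuming the server started above is listening on port 8080 and serves the usual `/v1/chat/completions` path; the `model` value is a placeholder I chose, not something taken from the guide.

```python
import json
import urllib.request


def build_chat_request(prompt, base_url="http://localhost:8080"):
    """Build a chat completion request for a local llama-server."""
    body = {
        "model": "gpt-oss-20b",  # placeholder name, an assumption
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt and return the text of the first choice."""
    with urllib.request.urlopen(build_chat_request(prompt)) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Requires llama-server to be running locally.
    print(ask("Fun facts about pelicans"))
```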