Quotations in 2024

Filters: Type: quotation × Year: 2024 × Sorted by date

Often, you are told to do this by treating AI like an intern. In retrospect, however, I think that this particular analogy ends up making people use AI in very constrained ways. To put it bluntly, any recent frontier model (by which I mean Claude 3.5, ChatGPT-4o, Grok 2, Llama 3.1, or Gemini Pro 1.5) is likely much better than any intern you would hire, but also weirder.

Instead, let me propose a new analogy: treat AI like an infinitely patient new coworker who forgets everything you tell them each new conversation, one that comes highly recommended but whose actual abilities are not that clear.

Whatever you think of capitalism, the evidence is overwhelming: Social networks with a single proprietor have trouble with long-term survival, and those do survive have trouble with user-experience quality: see Enshittification.

The evidence is also perfectly clear that it doesn’t have to be this way. The original social network, email, is now into its sixth decade of vigorous life. It ain’t perfect but it is essential, and not in any serious danger.

The single crucial difference between email and all those other networks — maybe the only significant difference — is that nobody owns or controls it.

— Tim Bray, Why Not Bluesky

If you try and tell people 5 interesting things about your product / company / cause, they’ll remember zero. If instead, you tell them just one, they’ll usually ask questions that lead them to the other things, and then they’ll remember all of them because it mattered to them at the moment they asked.

It's okay to complain and vent, I just ask you be able to back it up. Saying, "Python packaging sucks", but then admit you actually haven't used it in so long you don't remember why it sucked isn't fair. Things do improve, so it's better to say "it did suck" and acknowledge you might be out-of-date.

When we started working on what became NotebookLM in the summer of 2022, we could fit about 1,500 words in the context window. Now we can fit up to 1.5 million words. (And using various other tricks, effectively fit 25 million words.) The emergence of long context models is, I believe, the single most unappreciated AI development of the past two years, at least among the general public. It radically transforms the utility of these models in terms of actual, practical applications.

The main innovation here is just using more data. Specifically, Qwen2.5 Coder is a continuation of an earlier Qwen 2.5 model. The original Qwen 2.5 model was trained on 18 trillion tokens spread across a variety of languages and tasks (e.g, writing, programming, question answering). Qwen 2.5-Coder sees them train this model on an additional 5.5 trillion tokens of data. This means Qwen has been trained on a total of ~23T tokens of data – for perspective, Facebook’s LLaMa3 models were trained on about 15T tokens. I think this means Qwen is the largest publicly disclosed number of tokens dumped into a single language model (so far).

Anthropic declined to comment, but referred Bloomberg News to a five-hour podcast featuring Chief Executive Officer Dario Amodei that was released Monday.

"People call them scaling laws. That's a misnomer," he said on the podcast. "They're not laws of the universe. They're empirical regularities. I am going to bet in favor of them continuing, but I'm not certain of that."

[...]

An Anthropic spokesperson said the language about Opus was removed from the website as part of a marketing decision to only show available and benchmarked models. Asked whether Opus 3.5 would still be coming out this year, the spokesperson pointed to Amodei’s podcast remarks. In the interview, the CEO said Anthropic still plans to release the model but repeatedly declined to commit to a timetable.

— OpenAI, Google and Anthropic Are Struggling to Build More Advanced AI, Rachel Metz, Shirin Ghaffary, Dina Bass, and Julia Love for Bloomberg

This tutorial exists because of a particular quirk of mine: I love to write tutorials about things as I learn them. This is the backstory of TRPL, of which an ancient draft was "Rust for Rubyists." You only get to look at a problem as a beginner once, and so I think writing this stuff down is interesting. It also helps me clarify what I'm learning to myself.

— Steve Klabnik, Steve's Jujutsu Tutorial

That development time acceleration of 4 days down to 20 minutes… that’s equivalent to about 10 years of Moore’s Law cycles. That is, using generative AI like this is equivalent to computers getting 10 years better overnight.

That was a real eye-opening framing for me. AI isn’t magical, it’s not sentient, it’s not the end of the world nor our saviour; we don’t need to endlessly debate “intelligence” or “reasoning.” It’s just that… computers got 10 years better. The iPhone was first released in 2007. Imagine if it had come out in 1997 instead. We wouldn’t even know what to do with it.

As a junior engineer, there's simply no substitute for getting the first 100K lines of code under your belt. The "start over each day" method will help get you to those 100K lines faster.

You might think covering the same ground multiple times isn't as valuable as getting 100K diverse lines of code. I disagree. Solving the same problem repeatedly is actually really beneficial for retaining knowledge of patterns you figure out.

You only need 5K perfect lines to see all the major patterns once. The other 95K lines are repetition to rewire your neurons.

This is a very friendly and supportive place where you are surrounded by peers - we all want to help each other succeed. The golden rule of this server is:

Don't ever try to impress anyone here with your knowledge! Instead try to impress folks here with your desire to learn, and desire to help others learn.

If you have worked in search, you know how freaking hard even getting started with something close to this with traditional methods. Now, you can zero-shot it.

System Instructions: As a query categorization expert, you try to break down the intent of a search query. First, provide your reasoning and then describe the intent using a single category (broad, detailed, comparision)

User: The query from the user is "nike versus adidas for terrain running". The user is a female, age 22.Model: The user is clearly looking to compare two specific brands, Nike and Adidas, for a particular activity, terrain running. While the user's demographics might be helpful in some situations (e.g., recommending specific product lines), the core intent remains a comparison. Category: Comparison

There's a lot of hand-waving around query intent classification; it's always been like that. Now, it's straightforward (add a few examples to improve accuracy). But my point is that you could only dream about building something like this without having access to lots of interaction data.

You already know Donald Trump. He is unfit to lead. Watch him. Listen to those who know him best. He tried to subvert an election and remains a threat to democracy. He helped overturn Roe, with terrible consequences. Mr. Trump's corruption and lawlessness go beyond elections: It's his whole ethos. He lies without limit. If he's re-elected, the G.O.P. won't restrain him. Mr. Trump will use the government to go after opponents. He will pursue a cruel policy of mass deportations. He will wreak havoc on the poor, the middle class and employers. Another Trump term will damage the climate, shatter alliances and strengthen autocrats. Americans should demand better. Vote.

Building technology in startups is all about having the right level of tech debt. If you have none, you’re probably going too slow and not prioritizing product-market fit and the important business stuff. If you get too much, everything grinds to a halt. Plus, tech debt is a “know it when you see it” kind of thing, and I know that my definition of “a bunch of tech debt” is, to other people, “very little tech debt.”

Lord Clement-Jones: To ask His Majesty's Government what assessment they have made of the cybersecurity risks posed by prompt injection attacks to the processing by generative artificial intelligence of material provided from outside government, and whether any such attacks have been detected thus far.

Lord Vallance of Balham: Security is central to HMG's Generative AI Framework, which was published in January this year and sets out principles for using generative AI safely and responsibly. The risks posed by prompt injection attacks, including from material provided outside of government, have been assessed as part of this framework and are continually reviewed. The published Generative AI Framework for HMG specifically includes Prompt Injection attacks, alongside other AI specific cyber risks.

— Question for Department for Science, Innovation and Technology, UIN HL1541, tabled on 14 Oct 2024

If you want to make a good RAG tool that uses your documentation, you should start by making a search engine over those documents that would be good enough for a human to use themselves.

As an independent writer and publisher, I am the legal team. I am the fact-checking department. I am the editorial staff. I am the one responsible for triple-checking every single statement I make in the type of original reporting that I know carries a serious risk of baseless but ruinously expensive litigation regularly used to silence journalists, critics, and whistleblowers. I am the one deciding if that risk is worth taking, or if I should just shut up and write about something less risky.

Grandma’s secret cake recipe, passed down generation to generation, could be literally passed down: a flat slab of beige ooze kept in a battered pan, DNA-spliced and perfected by guided evolution by her own deft and ancient hands, a roiling wet mass of engineered microbes that slowly scabs over with delicious sponge cake, a delectable crust to be sliced once a week and enjoyed still warm with creme and spoons of pirated jam.

Go to data.gov, find an interesting recent dataset, and download it. Install sklearn with bash tool write a .py file to split the data into train and test and make a classifier for it. (you may need to inspect the data and/or iterate if this goes poorly at first, but don't get discouraged!). Come up with some way to visualize the results of your classifier in the browser.

— Alex Albert, Prompting Claude Computer Use

We enhanced the ability of the upgraded Claude 3.5 Sonnet and Claude 3.5 Haiku to recognize and resist prompt injection attempts. Prompt injection is an attack where a malicious user feeds instructions to a model that attempt to change its originally intended behavior. Both models are now better able to recognize adversarial prompts from a user and behave in alignment with the system prompt. We constructed internal test sets of prompt injection attacks and specifically trained on adversarial interactions.

With computer use, we recommend taking additional precautions against the risk of prompt injection, such as using a dedicated virtual machine, limiting access to sensitive data, restricting internet access to required domains, and keeping a human in the loop for sensitive tasks.

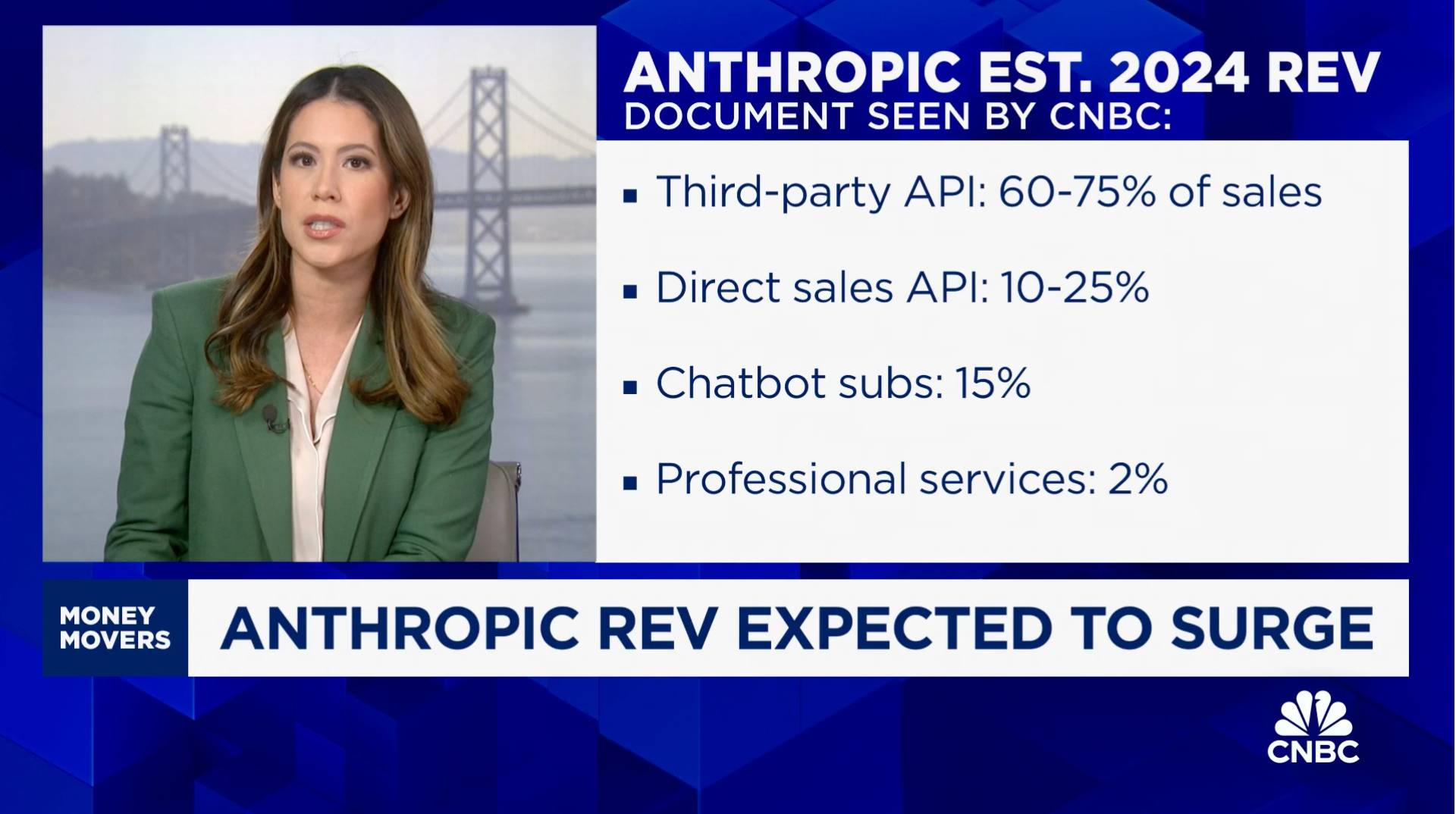

According to a document that I viewed, Anthropic is telling investors that it is expecting a billion dollars in revenue this year.

Third-party API is expected to make up the majority of sales, 60% to 75% of the total. That refers to the interfaces that allow external developers or third parties like Amazon's AWS to build and scale their own AI applications using Anthropic's models. [Simon's guess: this could mean Anthropic model access sold through AWS Bedrock and Google Vertex]

That is by far its biggest business, with direct API sales a distant second projected to bring in 10% to 25% of revenue. Chatbots, that is its subscription revenue from Claude, the chatbot, that's expected to make up 15% of sales in 2024 at $150 million.

— Deirdre Bosa, CNBC Money Movers, Sep 24th 2024

OpenAI’s monthly revenue hit $300 million in August, up 1,700 percent since the beginning of 2023, and the company expects about $3.7 billion in annual sales this year, according to financial documents reviewed by The New York Times. [...]

The company expects ChatGPT to bring in $2.7 billion in revenue this year, up from $700 million in 2023, with $1 billion coming from other businesses using its technology.

— Mike Isaac and Erin Griffith, New York Times, Sep 27th 2024

For the same cost and similar speed to Claude 3 Haiku, Claude 3.5 Haiku improves across every skill set and surpasses even Claude 3 Opus, the largest model in our previous generation, on many intelligence benchmarks. Claude 3.5 Haiku is particularly strong on coding tasks. For example, it scores 40.6% on SWE-bench Verified, outperforming many agents using publicly available state-of-the-art models—including the original Claude 3.5 Sonnet and GPT-4o. [...]

Claude 3.5 Haiku will be made available later this month across our first-party API, Amazon Bedrock, and Google Cloud’s Vertex AI—initially as a text-only model and with image input to follow.

— Anthropic, pre-announcing Claude 3.5 Haiku

I've often been building single-use apps with Claude Artifacts when I'm helping my children learn. For example here's one on visualizing fractions. [...] What's more surprising is that it is far easier to create an app on-demand than searching for an app in the app store that will do what I'm looking for. Searching for kids' learning apps is typically a nails-on-chalkboard painful experience because 95% of them are addictive garbage. And even if I find something usable, it can't match the fact that I can tell Claude what I want.

I really dislike the practice of replacing passwords with email “magic links”. Autofilling a password from my keychain happens instantly; getting a magic link from email can take minutes sometimes, and even in the fastest case, it’s nowhere near instantaneous. Replacing something very fast — password autofill — with something slower is just a terrible idea.

Who called it “intellectual property problems around the acquisition of training data for Large Language Models” and not Grand Theft Autocomplete?

— Jens Ohlig, on March 8th 2024

It feels like we’re at a bit of an inflection point for the Django community. [...] One of the places someone could have the most impact is by serving on the DSF Board. Like the community at large, the DSF is at a transition point: we’re outgrowing the “small nonprofit” status, and have the opportunity to really expand our ambition and reach. In all likelihood, the decisions the Board makes over the next year or two will define our direction and strategy for the next decade.

I'm of the opinion that you should never use mmap, because if you get an I/O error of some kind, the OS raises a signal, which SQLite is unable to catch, and so the process dies. When you are not using mmap, SQLite gets back an error code from an I/O error and is able to take remedial action, or at least compose an error message.

A common misconception about Transformers is to believe that they're a sequence-processing architecture. They're not.

They're a set-processing architecture. Transformers are 100% order-agnostic (which was the big innovation compared to RNNs, back in late 2016 -- you compute the full matrix of pairwise token interactions instead of processing one token at a time).

The way you add order awareness in a Transformer is at the feature level. You literally add to your token embeddings a position embedding / encoding that corresponds to its place in a sequence. The architecture itself just treats the input tokens as a set.

The problem with passkeys is that they're essentially a halfway house to a password manager, but tied to a specific platform in ways that aren't obvious to a user at all, and liable to easily leave them unable to access of their accounts. [...]

Chrome on Windows stores your passkeys in Windows Hello, so if you sign up for a service on Windows, and you then want to access it on iPhone, you're going to be stuck (unless you're so forward thinking as to add a second passkey, somehow, from the iPhone will on the Windows computer!). The passkey lives on the wrong device, if you're away from the computer and want to login, and it's not at all obvious to most users how they might fix that.