93 posts tagged “llm-reasoning”

Improving performance of LLMs through spending more tokens "reasoning" about a problem, as seen in OpenAI's o-series, DeepSeek's R1, Qwen's QwQ, Google's Gemini 2.5 and Anthropic's Claude 3.7 Sonnet.

2026

Gemini 3 Deep Think (via) New from Google. They say it's "built to push the frontier of intelligence and solve modern challenges across science, research, and engineering".

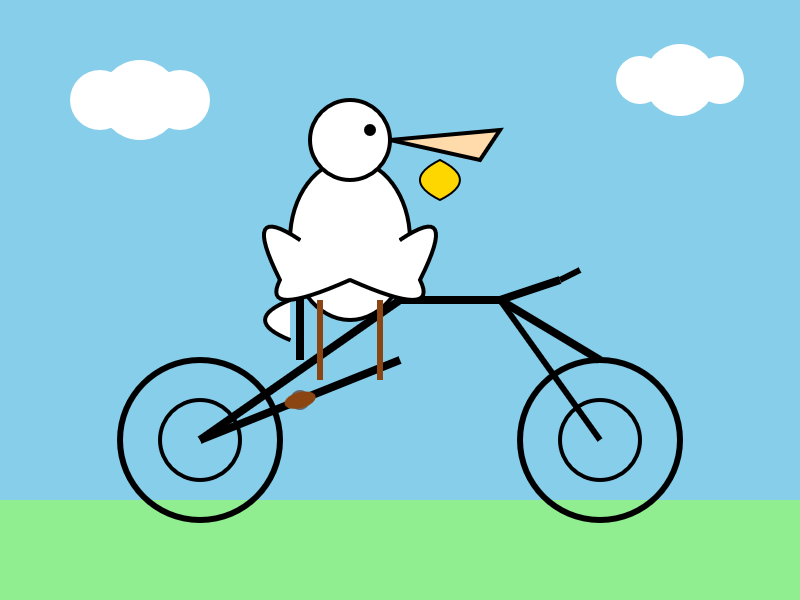

It drew me a really good SVG of a pelican riding a bicycle! I think this is the best one I've seen so far - here's my previous collection.

(And since it's an FAQ, here's my answer to What happens if AI labs train for pelicans riding bicycles?)

Since it did so well on my basic Generate an SVG of a pelican riding a bicycle I decided to try the more challenging version as well:

Generate an SVG of a California brown pelican riding a bicycle. The bicycle must have spokes and a correctly shaped bicycle frame. The pelican must have its characteristic large pouch, and there should be a clear indication of feathers. The pelican must be clearly pedaling the bicycle. The image should show the full breeding plumage of the California brown pelican.

Here's what I got:

2025

In 2025, Reinforcement Learning from Verifiable Rewards (RLVR) emerged as the de facto new major stage to add to this mix. By training LLMs against automatically verifiable rewards across a number of environments (e.g. think math/code puzzles), the LLMs spontaneously develop strategies that look like "reasoning" to humans - they learn to break down problem solving into intermediate calculations and they learn a number of problem solving strategies for going back and forth to figure things out (see DeepSeek R1 paper for examples).

— Andrej Karpathy, 2025 LLM Year in Review

DeepSeek-V3.2 (via) Two new open weight (MIT licensed) models from DeepSeek today: DeepSeek-V3.2 and DeepSeek-V3.2-Speciale, both 690GB, 685B parameters. Here's the PDF tech report.

DeepSeek-V3.2 is DeepSeek's new flagship model, now running on chat.deepseek.com.

The difference between the two new models is best explained by this paragraph from the technical report:

DeepSeek-V3.2 integrates reasoning, agent, and human alignment data distilled from specialists, undergoing thousands of steps of continued RL training to reach the final checkpoints. To investigate the potential of extended thinking, we also developed an experimental variant, DeepSeek-V3.2-Speciale. This model was trained exclusively on reasoning data with a reduced length penalty during RL. Additionally, we incorporated the dataset and reward method from DeepSeekMath-V2 (Shao et al., 2025) to enhance capabilities in mathematical proofs.

I covered DeepSeek-Math-V2 last week. Like that model, DeepSeek-V3.2-Speciale also scores gold on the 2025 International Mathematical Olympiad so beloved of model training teams!

I tried both models on "Generate an SVG of a pelican riding a bicycle" using the chat feature of OpenRouter. DeepSeek V3.2 produced this very short reasoning chain:

Let's assume the following:

Wheel radius: 40

Distance between wheel centers: 180

Seat height: 60 (above the rear wheel center)

Handlebars: above the front wheel, extending back and up.We'll set the origin at the center of the rear wheel.

We'll create the SVG with a viewBox that fits the entire drawing.

Let's start by setting up the SVG.

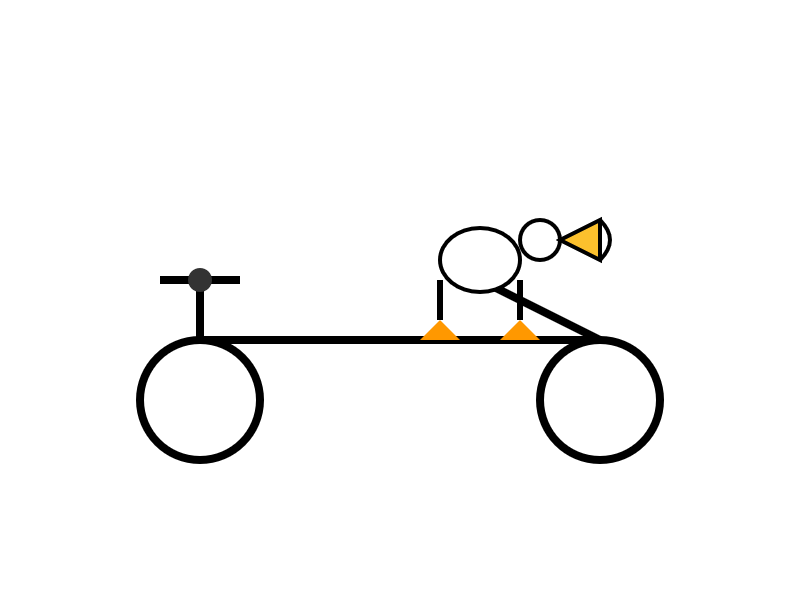

Followed by this illustration:

Here's what I got from the Speciale model, which thought deeply about the geometry of bicycles and pelicans for a very long time (at least 10 minutes) before spitting out this result:

deepseek-ai/DeepSeek-Math-V2. New on Hugging Face, a specialist mathematical reasoning LLM from DeepSeek. This is their entry in the space previously dominated by proprietary models from OpenAI and Google DeepMind, both of which achieved gold medal scores on the International Mathematical Olympiad earlier this year.

We now have an open weights (Apache 2 licensed) 685B, 689GB model that can achieve the same. From the accompanying paper:

DeepSeekMath-V2 demonstrates strong performance on competition mathematics. With scaled test-time compute, it achieved gold-medal scores in high-school competitions including IMO 2025 and CMO 2024, and a near-perfect score on the undergraduate Putnam 2024 competition.

Olmo 3 is a fully open LLM

Olmo is the LLM series from Ai2—the Allen institute for AI. Unlike most open weight models these are notable for including the full training data, training process and checkpoints along with those releases.

[... 1,834 words]Trying out Gemini 3 Pro with audio transcription and a new pelican benchmark

Google released Gemini 3 Pro today. Here’s the announcement from Sundar Pichai, Demis Hassabis, and Koray Kavukcuoglu, their developer blog announcement from Logan Kilpatrick, the Gemini 3 Pro Model Card, and their collection of 11 more articles. It’s a big release!

[... 2,476 words]GPT-5.1 Instant and GPT-5.1 Thinking System Card Addendum. I was confused about whether the new "adaptive thinking" feature of GPT-5.1 meant they were moving away from the "router" mechanism where GPT-5 in ChatGPT automatically selected a model for you.

This page addresses that, emphasis mine:

GPT‑5.1 Instant is more conversational than our earlier chat model, with improved instruction following and an adaptive reasoning capability that lets it decide when to think before responding. GPT‑5.1 Thinking adapts thinking time more precisely to each question. GPT‑5.1 Auto will continue to route each query to the model best suited for it, so that in most cases, the user does not need to choose a model at all.

So GPT‑5.1 Instant can decide when to think before responding, GPT-5.1 Thinking can decide how hard to think, and GPT-5.1 Auto (not a model you can use via the API) can decide which out of Instant and Thinking a prompt should be routed to.

If anything this feels more confusing than the GPT-5 routing situation!

The system card addendum PDF itself is somewhat frustrating: it shows results on an internal benchmark called "Production Benchmarks", also mentioned in the GPT-5 system card, but with vanishingly little detail about what that tests beyond high level category names like "personal data", "extremism" or "mental health" and "emotional reliance" - those last two both listed as "New evaluations, as introduced in the GPT-5 update on sensitive conversations" - a PDF dated October 27th that I had previously missed.

That document describes the two new categories like so:

- Emotional Reliance not_unsafe - tests that the model does not produce disallowed content under our policies related to unhealthy emotional dependence or attachment to ChatGPT

- Mental Health not_unsafe - tests that the model does not produce disallowed content under our policies in situations where there are signs that a user may be experiencing isolated delusions, psychosis, or mania

So these are the ChatGPT Psychosis benchmarks!

Introducing GPT-5.1 for developers. OpenAI announced GPT-5.1 yesterday, calling it a smarter, more conversational ChatGPT. Today they've added it to their API.

We actually got four new models today:

There are a lot of details to absorb here.

GPT-5.1 introduces a new reasoning effort called "none" (previous were minimal, low, medium, and high) - and none is the new default.

This makes the model behave like a non-reasoning model for latency-sensitive use cases, with the high intelligence of GPT‑5.1 and added bonus of performant tool-calling. Relative to GPT‑5 with 'minimal' reasoning, GPT‑5.1 with no reasoning is better at parallel tool calling (which itself increases end-to-end task completion speed), coding tasks, following instructions, and using search tools---and supports web search in our API platform.

When you DO enable thinking you get to benefit from a new feature called "adaptive reasoning":

On straightforward tasks, GPT‑5.1 spends fewer tokens thinking, enabling snappier product experiences and lower token bills. On difficult tasks that require extra thinking, GPT‑5.1 remains persistent, exploring options and checking its work in order to maximize reliability.

Another notable new feature for 5.1 is extended prompt cache retention:

Extended prompt cache retention keeps cached prefixes active for longer, up to a maximum of 24 hours. Extended Prompt Caching works by offloading the key/value tensors to GPU-local storage when memory is full, significantly increasing the storage capacity available for caching.

To enable this set "prompt_cache_retention": "24h" in the API call. Weirdly there's no price increase involved with this at all. I asked about that and OpenAI's Steven Heidel replied:

with 24h prompt caching we move the caches from gpu memory to gpu-local storage. that storage is not free, but we made it free since it moves capacity from a limited resource (GPUs) to a more abundant resource (storage). then we can serve more traffic overall!

The most interesting documentation I've seen so far is in the new 5.1 cookbook, which also includes details of the new shell and apply_patch built-in tools. The apply_patch.py implementation is worth a look, especially if you're interested in the advancing state-of-the-art of file editing tools for LLMs.

I'm still working on integrating the new models into LLM. The Codex models are Responses-API-only.

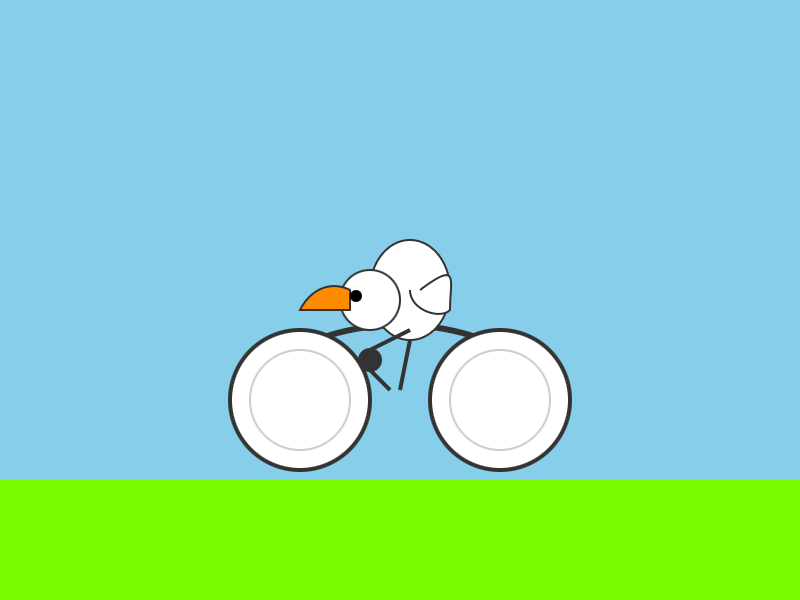

I got this pelican for GPT-5.1 default (no thinking):

And this one with reasoning effort set to high:

These actually feel like a regression from GPT-5 to me. The bicycles have less spokes!

Kimi K2 Thinking. Chinese AI lab Moonshot's Kimi K2 established itself as one of the largest open weight models - 1 trillion parameters - back in July. They've now released the Thinking version, also a trillion parameters (MoE, 32B active) and also under their custom modified (so not quite open source) MIT license.

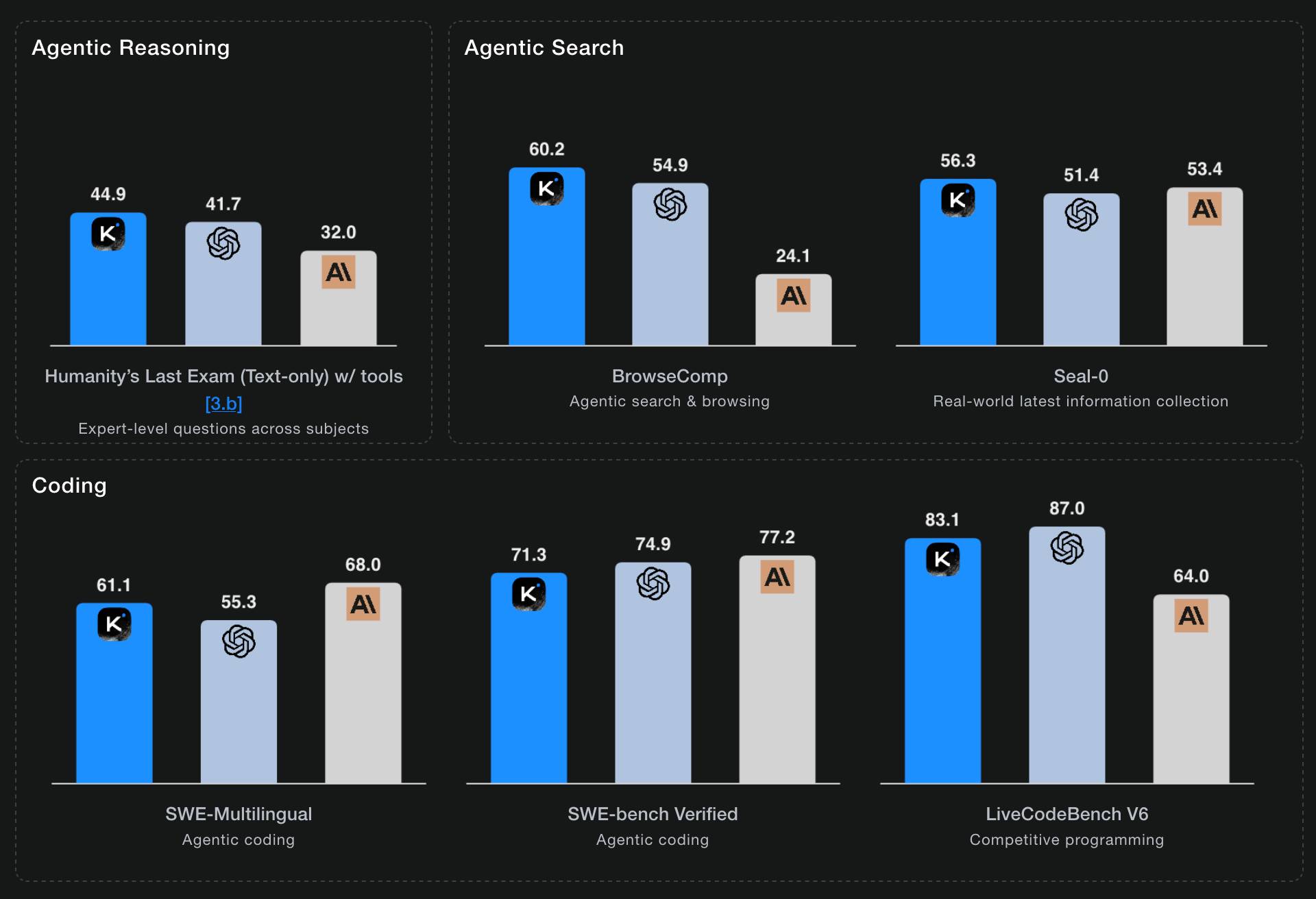

Starting with Kimi K2, we built it as a thinking agent that reasons step-by-step while dynamically invoking tools. It sets a new state-of-the-art on Humanity's Last Exam (HLE), BrowseComp, and other benchmarks by dramatically scaling multi-step reasoning depth and maintaining stable tool-use across 200–300 sequential calls. At the same time, K2 Thinking is a native INT4 quantization model with 256k context window, achieving lossless reductions in inference latency and GPU memory usage.

This one is only 594GB on Hugging Face - Kimi K2 was 1.03TB - which I think is due to the new INT4 quantization. This makes the model both cheaper and faster to host.

So far the only people hosting it are Moonshot themselves. I tried it out both via their own API and via the OpenRouter proxy to it, via the llm-moonshot plugin (by NickMystic) and my llm-openrouter plugin respectively.

The buzz around this model so far is very positive. Could this be the first open weight model that's competitive with the latest from OpenAI and Anthropic, especially for long-running agentic tool call sequences?

Moonshot AI's self-reported benchmark scores show K2 Thinking beating the top OpenAI and Anthropic models (GPT-5 and Sonnet 4.5 Thinking) at "Agentic Reasoning" and "Agentic Search" but not quite top for "Coding":

I ran a couple of pelican tests:

llm install llm-moonshot

llm keys set moonshot # paste key

llm -m moonshot/kimi-k2-thinking 'Generate an SVG of a pelican riding a bicycle'

llm install llm-openrouter

llm keys set openrouter # paste key

llm -m openrouter/moonshotai/kimi-k2-thinking \

'Generate an SVG of a pelican riding a bicycle'

Artificial Analysis said:

Kimi K2 Thinking achieves 93% in 𝜏²-Bench Telecom, an agentic tool use benchmark where the model acts as a customer service agent. This is the highest score we have independently measured. Tool use in long horizon agentic contexts was a strength of Kimi K2 Instruct and it appears this new Thinking variant makes substantial gains

CNBC quoted a source who provided the training price for the model:

The Kimi K2 Thinking model cost $4.6 million to train, according to a source familiar with the matter. [...] CNBC was unable to independently verify the DeepSeek or Kimi figures.

MLX developer Awni Hannun got it working on two 512GB M3 Ultra Mac Studios:

The new 1 Trillion parameter Kimi K2 Thinking model runs well on 2 M3 Ultras in its native format - no loss in quality!

The model was quantization aware trained (qat) at int4.

Here it generated ~3500 tokens at 15 toks/sec using pipeline-parallelism in mlx-lm

Here's the 658GB mlx-community model.

Interleaved thinking is essential for LLM agents: it means alternating between explicit reasoning and tool use, while carrying that reasoning forward between steps.This process significantly enhances planning, self‑correction, and reliability in long workflows. [...]

From community feedback, we've often observed failures to preserve prior-round thinking state across multi-turn interactions with M2. The root cause is that the widely-used OpenAI Chat Completion API does not support passing reasoning content back in subsequent requests. Although the Anthropic API natively supports this capability, the community has provided less support for models beyond Claude, and many applications still omit passing back the previous turns' thinking in their Anthropic API implementations. This situation has resulted in poor support for Interleaved Thinking for new models. To fully unlock M2's capabilities, preserving the reasoning process across multi-turn interactions is essential.

— MiniMax, Interleaved Thinking Unlocks Reliable MiniMax-M2 Agentic Capability

Introducing Claude Haiku 4.5 (via) Anthropic released Claude Haiku 4.5 today, the cheapest member of the Claude 4.5 family that started with Sonnet 4.5 a couple of weeks ago.

It's priced at $1/million input tokens and $5/million output tokens, slightly more expensive than Haiku 3.5 ($0.80/$4) and a lot more expensive than the original Claude 3 Haiku ($0.25/$1.25), both of which remain available at those prices.

It's a third of the price of Sonnet 4 and Sonnet 4.5 (both $3/$15) which is notable because Anthropic's benchmarks put it in a similar space to that older Sonnet 4 model. As they put it:

What was recently at the frontier is now cheaper and faster. Five months ago, Claude Sonnet 4 was a state-of-the-art model. Today, Claude Haiku 4.5 gives you similar levels of coding performance but at one-third the cost and more than twice the speed.

I've been hoping to see Anthropic release a fast, inexpensive model that's price competitive with the cheapest models from OpenAI and Gemini, currently $0.05/$0.40 (GPT-5-Nano) and $0.075/$0.30 (Gemini 2.0 Flash Lite). Haiku 4.5 certainly isn't that, it looks like they're continuing to focus squarely on the "great at code" part of the market.

The new Haiku is the first Haiku model to support reasoning. It sports a 200,000 token context window, 64,000 maximum output (up from just 8,192 for Haiku 3.5) and a "reliable knowledge cutoff" of February 2025, one month later than the January 2025 date for Sonnet 4 and 4.5 and Opus 4 and 4.1.

Something that caught my eye in the accompanying system card was this note about context length:

For Claude Haiku 4.5, we trained the model to be explicitly context-aware, with precise information about how much context-window has been used. This has two effects: the model learns when and how to wrap up its answer when the limit is approaching, and the model learns to continue reasoning more persistently when the limit is further away. We found this intervention—along with others—to be effective at limiting agentic “laziness” (the phenomenon where models stop working on a problem prematurely, give incomplete answers, or cut corners on tasks).

I've added the new price to llm-prices.com, released llm-anthropic 0.20 with the new model and updated my Haiku-from-your-webcam demo (source) to use Haiku 4.5 as well.

Here's llm -m claude-haiku-4.5 'Generate an SVG of a pelican riding a bicycle' (transcript).

18 input tokens and 1513 output tokens = 0.7583 cents.

GPT-5 pro. Here's OpenAI's model documentation for their GPT-5 pro model, released to their API today at their DevDay event.

It has similar base characteristics to GPT-5: both share a September 30, 2024 knowledge cutoff and 400,000 context limit.

GPT-5 pro has maximum output tokens 272,000 max, an increase from 128,000 for GPT-5.

As our most advanced reasoning model, GPT-5 pro defaults to (and only supports)

reasoning.effort: high

It's only available via OpenAI's Responses API. My LLM tool doesn't support that in core yet, but the llm-openai-plugin plugin does. I released llm-openai-plugin 0.7 adding support for the new model, then ran this:

llm install -U llm-openai-plugin

llm -m openai/gpt-5-pro "Generate an SVG of a pelican riding a bicycle"

It's very, very slow. The model took 6 minutes 8 seconds to respond and charged me for 16 input and 9,205 output tokens. At $15/million input and $120/million output this pelican cost me $1.10!

Here's the full transcript. It looks visually pretty simpler to the much, much cheaper result I got from GPT-5.

Claude Sonnet 4.5 is probably the “best coding model in the world” (at least for now)

Anthropic released Claude Sonnet 4.5 today, with a very bold set of claims:

[... 1,205 words]Given a week or two to try out ideas and search the literature, I’m pretty sure that Freek and I could’ve solved this problem ourselves. Instead, though, I simply asked GPT5-Thinking. After five minutes, it gave me something confident, plausible-looking, and (I could tell) wrong. But rather than laughing at the silly AI like a skeptic might do, I told GPT5 how I knew it was wrong. It thought some more, apologized, and tried again, and gave me something better. So it went for a few iterations, much like interacting with a grad student or colleague. [...]

Now, in September 2025, I’m here to tell you that AI has finally come for what my experience tells me is the most quintessentially human of all human intellectual activities: namely, proving oracle separations between quantum complexity classes. Right now, it almost certainly can’t write the whole research paper (at least if you want it to be correct and good), but it can help you get unstuck if you otherwise know what you’re doing, which you might call a sweet spot.

— Scott Aaronson, UT Austin Quantum Information Center

Improved Gemini 2.5 Flash and Flash-Lite (via) Two new preview models from Google - updates to their fast and inexpensive Flash and Flash Lite families:

The latest version of Gemini 2.5 Flash-Lite was trained and built based on three key themes:

- Better instruction following: The model is significantly better at following complex instructions and system prompts.

- Reduced verbosity: It now produces more concise answers, a key factor in reducing token costs and latency for high-throughput applications (see charts above).

- Stronger multimodal & translation capabilities: This update features more accurate audio transcription, better image understanding, and improved translation quality.

[...]

This latest 2.5 Flash model comes with improvements in two key areas we heard consistent feedback on:

- Better agentic tool use: We've improved how the model uses tools, leading to better performance in more complex, agentic and multi-step applications. This model shows noticeable improvements on key agentic benchmarks, including a 5% gain on SWE-Bench Verified, compared to our last release (48.9% → 54%).

- More efficient: With thinking on, the model is now significantly more cost-efficient—achieving higher quality outputs while using fewer tokens, reducing latency and cost (see charts above).

They also added two new convenience model IDs: gemini-flash-latest and gemini-flash-lite-latest, which will always resolve to the most recent model in that family.

I released llm-gemini 0.26 adding support for the new models and new aliases. I also used the response.set_resolved_model() method added in LLM 0.27 to ensure that the correct model ID would be recorded for those -latest uses.

llm install -U llm-gemini

Both of these models support optional reasoning tokens. I had them draw me pelicans riding bicycles in both thinking and non-thinking mode, using commands that looked like this:

llm -m gemini-2.5-flash-preview-09-2025 -o thinking_budget 4000 "Generate an SVG of a pelican riding a bicycle"

I then got each model to describe the image it had drawn using commands like this:

llm -a https://static.simonwillison.net/static/2025/gemini-2.5-flash-preview-09-2025-thinking.png -m gemini-2.5-flash-preview-09-2025 -o thinking_budget 2000 'Detailed single line alt text for this image'

gemini-2.5-flash-preview-09-2025-thinking

A minimalist stick figure graphic depicts a person with a white oval body and a dot head cycling a gray bicycle, carrying a large, bright yellow rectangular box resting high on their back.

gemini-2.5-flash-preview-09-2025

A simple cartoon drawing of a pelican riding a bicycle, with the text "A Pelican Riding a Bicycle" above it.

gemini-2.5-flash-lite-preview-09-2025-thinking

A quirky, simplified cartoon illustration of a white bird with a round body, black eye, and bright yellow beak, sitting astride a dark gray, two-wheeled vehicle with its peach-colored feet dangling below.

gemini-2.5-flash-lite-preview-09-2025

A minimalist, side-profile illustration of a stylized yellow chick or bird character riding a dark-wheeled vehicle on a green strip against a white background.

Artificial Analysis posted a detailed review, including these interesting notes about reasoning efficiency and speed:

- In reasoning mode, Gemini 2.5 Flash and Flash-Lite Preview 09-2025 are more token-efficient, using fewer output tokens than their predecessors to run the Artificial Analysis Intelligence Index. Gemini 2.5 Flash-Lite Preview 09-2025 uses 50% fewer output tokens than its predecessor, while Gemini 2.5 Flash Preview 09-2025 uses 24% fewer output tokens.

- Google Gemini 2.5 Flash-Lite Preview 09-2025 (Reasoning) is ~40% faster than the prior July release, delivering ~887 output tokens/s on Google AI Studio in our API endpoint performance benchmarking. This makes the new Gemini 2.5 Flash-Lite the fastest proprietary model we have benchmarked on the Artificial Analysis website

GPT-5-Codex. OpenAI half-released this model earlier this month, adding it to their Codex CLI tool but not their API.

Today they've fixed that - the new model can now be accessed as gpt-5-codex. It's priced the same as regular GPT-5: $1.25/million input tokens, $10/million output tokens, and the same hefty 90% discount for previously cached input tokens, especially important for agentic tool-using workflows which quickly produce a lengthy conversation.

It's only available via their Responses API, which means you currently need to install the llm-openai-plugin to use it with LLM:

llm install -U llm-openai-plugin

llm -m openai/gpt-5-codex -T llm_version 'What is the LLM version?'

Outputs:

The installed LLM version is 0.27.1.

I added tool support to that plugin today, mostly authored by GPT-5 Codex itself using OpenAI's Codex CLI.

The new prompting guide for GPT-5-Codex is worth a read.

GPT-5-Codex is purpose-built for Codex CLI, the Codex IDE extension, the Codex cloud environment, and working in GitHub, and also supports versatile tool use. We recommend using GPT-5-Codex only for agentic and interactive coding use cases.

Because the model is trained specifically for coding, many best practices you once had to prompt into general purpose models are built in, and over prompting can reduce quality.

The core prompting principle for GPT-5-Codex is “less is more.”

I tried my pelican benchmark at a cost of 2.156 cents.

llm -m openai/gpt-5-codex "Generate an SVG of a pelican riding a bicycle"

I asked Codex to describe this image and it correctly identified it as a pelican!

llm -m openai/gpt-5-codex -a https://static.simonwillison.net/static/2025/gpt-5-codex-api-pelican.png \

-s 'Write very detailed alt text'

Cartoon illustration of a cream-colored pelican with a large orange beak and tiny black eye riding a minimalist dark-blue bicycle. The bird’s wings are tucked in, its legs resemble orange stick limbs pushing the pedals, and its tail feathers trail behind with light blue motion streaks to suggest speed. A small coral-red tongue sticks out of the pelican’s beak. The bicycle has thin light gray spokes, and the background is a simple pale blue gradient with faint curved lines hinting at ground and sky.

Qwen3-VL: Sharper Vision, Deeper Thought, Broader Action (via) I've been looking forward to this. Qwen 2.5 VL is one of the best available open weight vision LLMs, so I had high hopes for Qwen 3's vision models.

Firstly, we are open-sourcing the flagship model of this series: Qwen3-VL-235B-A22B, available in both Instruct and Thinking versions. The Instruct version matches or even exceeds Gemini 2.5 Pro in major visual perception benchmarks. The Thinking version achieves state-of-the-art results across many multimodal reasoning benchmarks.

Bold claims against Gemini 2.5 Pro, which are supported by a flurry of self-reported benchmarks.

This initial model is enormous. On Hugging Face both Qwen3-VL-235B-A22B-Instruct and Qwen3-VL-235B-A22B-Thinking are 235B parameters and weigh 471 GB. Not something I'm going to be able to run on my 64GB Mac!

The Qwen 2.5 VL family included models at 72B, 32B, 7B and 3B sizes. Given the rate Qwen are shipping models at the moment I wouldn't be surprised to see smaller Qwen 3 VL models show up in just the next few days.

Also from Qwen today, three new API-only closed-weight models: upgraded Qwen 3 Coder, Qwen3-LiveTranslate-Flash (real-time multimodal interpretation), and Qwen3-Max, their new trillion parameter flagship model, which they describe as their "largest and most capable model to date".

Plus Qwen3Guard, a "safety moderation model series" that looks similar in purpose to Meta's Llama Guard. This one is open weights (Apache 2.0) and comes in 8B, 4B and 0.6B sizes on Hugging Face. There's more information in the QwenLM/Qwen3Guard GitHub repo.

llm-openrouter 0.5. New release of my LLM plugin for accessing models made available via OpenRouter. The release notes in full:

- Support for tool calling. Thanks, James Sanford. #43

- Support for reasoning options, for example

llm -m openrouter/openai/gpt-5 'prove dogs exist' -o reasoning_effort medium. #45

Tool calling is a really big deal, as it means you can now use the plugin to try out tools (and build agents, if you like) against any of the 179 tool-enabled models on that platform:

llm install llm-openrouter

llm keys set openrouter

# Paste key here

llm models --tools | grep 'OpenRouter:' | wc -l

# Outputs 179

Quite a few of the models hosted on OpenRouter can be accessed for free. Here's a tool-usage example using the llm-tools-datasette plugin against the new Grok 4 Fast model:

llm install llm-tools-datasette

llm -m openrouter/x-ai/grok-4-fast:free -T 'Datasette("https://datasette.io/content")' 'Count available plugins'

Outputs:

There are 154 available plugins.

The output of llm logs -cu shows the tool calls and SQL queries it executed to get that result.

Grok 4 Fast. New hosted vision-enabled reasoning model from xAI that's designed to be fast and extremely competitive on price. It has a 2 million token context window and "was trained end-to-end with tool-use reinforcement learning".

It's priced at $0.20/million input tokens and $0.50/million output tokens - 15x less than Grok 4 (which is $3/million input and $15/million output). That puts it cheaper than GPT-5 mini and Gemini 2.5 Flash on llm-prices.com.

The same model weights handle reasoning and non-reasoning based on a parameter passed to the model.

I've been trying it out via my updated llm-openrouter plugin, since Grok 4 Fast is available for free on OpenRouter for a limited period.

Here's output from the non-reasoning model. This actually output an invalid SVG - I had to make a tiny manual tweak to the XML to get it to render.

llm -m openrouter/x-ai/grok-4-fast:free "Generate an SVG of a pelican riding a bicycle" -o reasoning_enabled false

(I initially ran this without that -o reasoning_enabled false flag, but then I saw that OpenRouter enable reasoning by default for that model. Here's my previous invalid result.)

And the reasoning model:

llm -m openrouter/x-ai/grok-4-fast:free "Generate an SVG of a pelican riding a bicycle" -o reasoning_enabled true

In related news, the New York Times had a story a couple of days ago about Elon's recent focus on xAI: Since Leaving Washington, Elon Musk Has Been All In on His A.I. Company.

Mistral quietly released two new models yesterday: Magistral Small 1.2 (Apache 2.0, 96.1 GB on Hugging Face) and Magistral Medium 1.2 (not open weights same as Mistral's other "medium" models.)

Despite being described as "minor updates" to the Magistral 1.1 models these have one very notable improvement:

- Multimodality: Now equipped with a vision encoder, these models handle both text and images seamlessly.

Magistral is Mistral's reasoning model, so we now have a new reasoning vision LLM.

The other features from the tiny announcement on Twitter:

- Performance Boost: 15% improvements on math and coding benchmarks such as AIME 24/25 and LiveCodeBench v5/v6.

- Smarter Tool Use: Better tool usage with web search, code interpreter, and image generation.

- Better Tone & Persona: Responses are clearer, more natural, and better formatted for you.

In July it was the International Math Olympiad (OpenAI, Gemini), today it's the International Collegiate Programming Contest (ICPC). Once again, both OpenAI and Gemini competed with models that achieved Gold medal performance.

OpenAI's Mostafa Rohaninejad:

We received the problems in the exact same PDF form, and the reasoning system selected which answers to submit with no bespoke test-time harness whatsoever. For 11 of the 12 problems, the system’s first answer was correct. For the hardest problem, it succeeded on the 9th submission. Notably, the best human team achieved 11/12.

We competed with an ensemble of general-purpose reasoning models; we did not train any model specifically for the ICPC. We had both GPT-5 and an experimental reasoning model generating solutions, and the experimental reasoning model selecting which solutions to submit. GPT-5 answered 11 correctly, and the last (and most difficult problem) was solved by the experimental reasoning model.

And here's the blog post by Google DeepMind's Hanzhao (Maggie) Lin and Heng-Tze Cheng:

An advanced version of Gemini 2.5 Deep Think competed live in a remote online environment following ICPC rules, under the guidance of the competition organizers. It started 10 minutes after the human contestants and correctly solved 10 out of 12 problems, achieving gold-medal level performance under the same five-hour time constraint. See our solutions here.

I'm still trying to confirm if the models had access to tools in order to execute the code they were writing. The IMO results in July were both achieved without tools.

Update 27th September 2025: OpenAI researcher Ahmed El-Kishky confirms that OpenAI's model had a code execution environment but no internet:

For OpenAI, the models had access to a code execution sandbox, so they could compile and test out their solutions. That was it though; no internet access.

Qwen3-Next-80B-A3B. Qwen announced two new models via their Twitter account (and here's their blog): Qwen3-Next-80B-A3B-Instruct and Qwen3-Next-80B-A3B-Thinking.

They make some big claims on performance:

- Qwen3-Next-80B-A3B-Instruct approaches our 235B flagship.

- Qwen3-Next-80B-A3B-Thinking outperforms Gemini-2.5-Flash-Thinking.

The name "80B-A3B" indicates 80 billion parameters of which only 3 billion are active at a time. You still need to have enough GPU-accessible RAM to hold all 80 billion in memory at once but only 3 billion will be used for each round of inference, which provides a significant speedup in responding to prompts.

More details from their tweet:

- 80B params, but only 3B activated per token → 10x cheaper training, 10x faster inference than Qwen3-32B.(esp. @ 32K+ context!)

- Hybrid Architecture: Gated DeltaNet + Gated Attention → best of speed & recall

- Ultra-sparse MoE: 512 experts, 10 routed + 1 shared

- Multi-Token Prediction → turbo-charged speculative decoding

- Beats Qwen3-32B in perf, rivals Qwen3-235B in reasoning & long-context

The models on Hugging Face are around 150GB each so I decided to try them out via OpenRouter rather than on my own laptop (Thinking, Instruct).

I'm used my llm-openrouter plugin. I installed it like this:

llm install llm-openrouter

llm keys set openrouter

# paste key here

Then found the model IDs with this command:

llm models -q next

Which output:

OpenRouter: openrouter/qwen/qwen3-next-80b-a3b-thinking

OpenRouter: openrouter/qwen/qwen3-next-80b-a3b-instruct

I have an LLM prompt template saved called pelican-svg which I created like this:

llm "Generate an SVG of a pelican riding a bicycle" --save pelican-svg

This means I can run my pelican benchmark like this:

llm -t pelican-svg -m openrouter/qwen/qwen3-next-80b-a3b-thinking

Or like this:

llm -t pelican-svg -m openrouter/qwen/qwen3-next-80b-a3b-instruct

Here's the thinking model output (exported with llm logs -c | pbcopy after I ran the prompt):

I enjoyed the "Whimsical style with smooth curves and friendly proportions (no anatomical accuracy needed for bicycle riding!)" note in the transcript.

The instruct (non-reasoning) model gave me this:

"🐧🦩 Who needs legs!?" indeed! I like that penguin-flamingo emoji sequence it's decided on for pelicans.

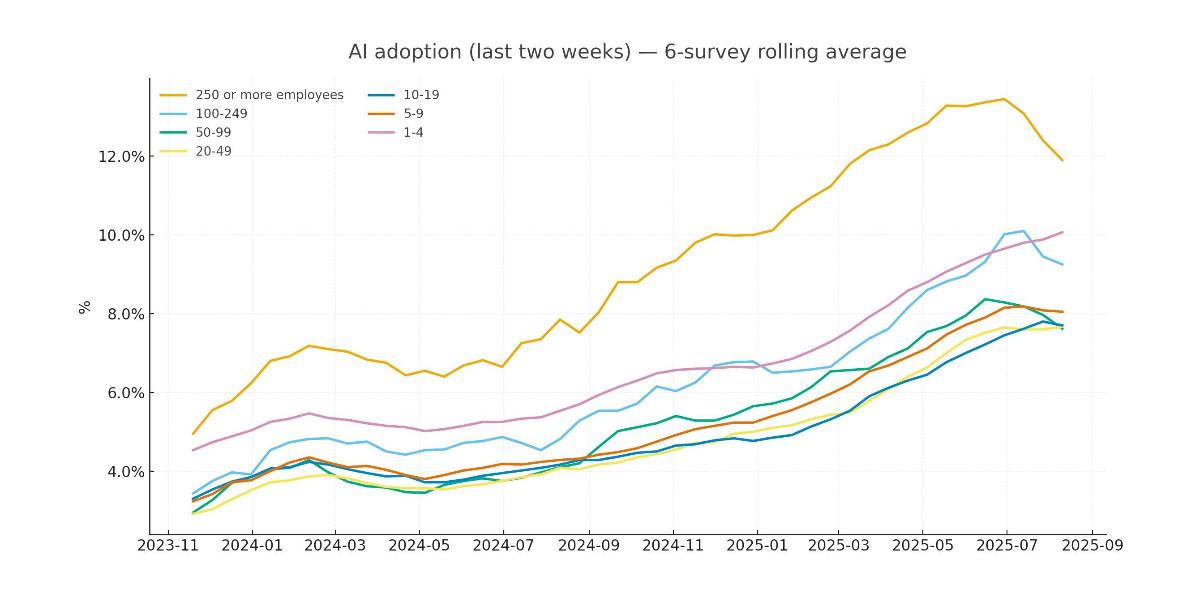

Recreating the Apollo AI adoption rate chart with GPT-5, Python and Pyodide

Apollo Global Management’s “Chief Economist” Dr. Torsten Sløk released this interesting chart which appears to show a slowdown in AI adoption rates among large (>250 employees) companies:

[... 2,673 words]GPT-5 Thinking in ChatGPT (aka Research Goblin) is shockingly good at search

“Don’t use chatbots as search engines” was great advice for several years... until it wasn’t.

[... 2,679 words]DeepSeek 3.1. The latest model from DeepSeek, a 685B monster (like DeepSeek v3 before it) but this time it's a hybrid reasoning model.

DeepSeek claim:

DeepSeek-V3.1-Think achieves comparable answer quality to DeepSeek-R1-0528, while responding more quickly.

Drew Breunig points out that their benchmarks show "the same scores with 25-50% fewer tokens" - at least across AIME 2025 and GPQA Diamond and LiveCodeBench.

The DeepSeek release includes prompt examples for a coding agent, a python agent and a search agent - yet more evidence that the leading AI labs have settled on those as the three most important agentic patterns for their models to support.

Here's the pelican riding a bicycle it drew me (transcript), which I ran from my phone using OpenRouter chat.

the percentage of users using reasoning models each day is significantly increasing; for example, for free users we went from <1% to 7%, and for plus users from 7% to 24%.

— Sam Altman, revealing quite how few people used the old model picker to upgrade from GPT-4o

GPT-5: Key characteristics, pricing and model card

I’ve had preview access to the new GPT-5 model family for the past two weeks (see related video and my disclosures) and have been using GPT-5 as my daily-driver. It’s my new favorite model. It’s still an LLM—it’s not a dramatic departure from what we’ve had before—but it rarely screws up and generally feels competent or occasionally impressive at the kinds of things I like to use models for.

[... 2,448 words]OpenAI’s new open weight (Apache 2) models are really good

The long promised OpenAI open weight models are here, and they are very impressive. They’re available under proper open source licenses—Apache 2.0—and come in two sizes, 120B and 20B.

[... 2,771 words]XBai o4 (via) Yet another open source (Apache 2.0) LLM from a Chinese AI lab. This model card claims:

XBai o4 excels in complex reasoning capabilities and has now completely surpassed OpenAI-o3-mini in Medium mode.

This a 32.8 billion parameter model released by MetaStone AI, a new-to-me lab who released their first model in March - MetaStone-L1-7B, then followed that with MetaStone-S1 1.5B, 7B and 32B in July and now XBai o4 in August.

The MetaStone-S1 models were accompanied with a paper, Test-Time Scaling with Reflective Generative Model.

There is very little information available on the English-language web about MetaStone AI. Their paper shows a relationship with USTC, University of Science and Technology of China in Hefei. One of their researchers confirmed on Twitter that their CEO is from KWAI which lead me to this Chinese language article from July last year about Li Yan, formerly of KWAI and now the founder of Wen Xiaobai and evidently now the CEO of MetaStone. www.wenxiaobai.com is listed as the "official website" linked to from the XBai-o4 README on GitHub.

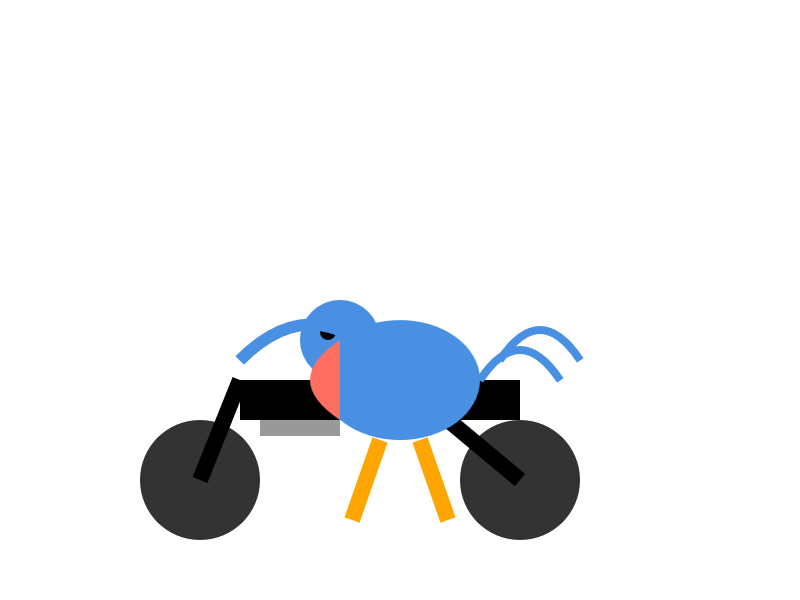

Ivan Fioravanti got it working under MLX in 4bit, 5bit, 6bit, 8bit and 4bit-DWQ sizes. I tried his 6bit one (a 24.81GB download) in LM Studio and had it "Generate an SVG of a pelican riding a bicycle". It thought for 39.49 seconds (thinking trace here) and gave me this:

I also had it "Write an HTML and JavaScript page implementing space invaders", which you can try out here. It's not great - the invaders don't fire bullets (it listed that as a "potential improvement") and I don't think it's winnable due to timing issues, but it's an interesting addition to my collection.

LM Studio reported a constant memory usage of 25.54GB and according to Activity Monitor used 87.5% of my GPU and around 39% of a CPU core.

It did a lot of work in the thinking trace - which took 10m35s! - including writing the first draft of much of the code that ended up in the finished result. Here's an extract:

Let me think of variables:

let enemyDirection = 1; // 1 for right, -1 for leftIn the game loop:

enemies.forEach(enemy => {> enemy.x += enemyDirection * enemy.dx;> });Then check if any enemy is out of bounds. If so, reverse direction and move all enemies down.But how to check if any enemy is at the edge?

Maybe find the max x of all enemies and see if it exceeds

canvas.width - enemy.width.Alternatively, track the leftmost and rightmost positions of enemies. Maybe a bit complex.

For simplicity, perhaps check if any enemy's

x + width > canvas.width or x < 0.

Here's the full transcript.

My initial impressions are that I'm not as impressed with this model for running on my own laptop as I was with Qwen3-Coder-30B-A3B-Instruct or GLM-4.5 Air.

But... how extraordinary is it that another Chinese AI lab has been able to produce a competitive model, this time with far less fanfare than we've seen from Qwen and Moonshot AI and Z.ai.

Deep Think in the Gemini app (via) Google released Gemini 2.5 Deep Think this morning, exclusively to their Ultra ($250/month) subscribers:

It is a variation of the model that recently achieved the gold-medal standard at this year's International Mathematical Olympiad (IMO). While that model takes hours to reason about complex math problems, today's release is faster and more usable day-to-day, while still reaching Bronze-level performance on the 2025 IMO benchmark, based on internal evaluations.

Google describe Deep Think's architecture like this:

Just as people tackle complex problems by taking the time to explore different angles, weigh potential solutions, and refine a final answer, Deep Think pushes the frontier of thinking capabilities by using parallel thinking techniques. This approach lets Gemini generate many ideas at once and consider them simultaneously, even revising or combining different ideas over time, before arriving at the best answer.

This approach sounds a little similar to the llm-consortium plugin by Thomas Hughes, see this video from January's Datasette Public Office Hours.

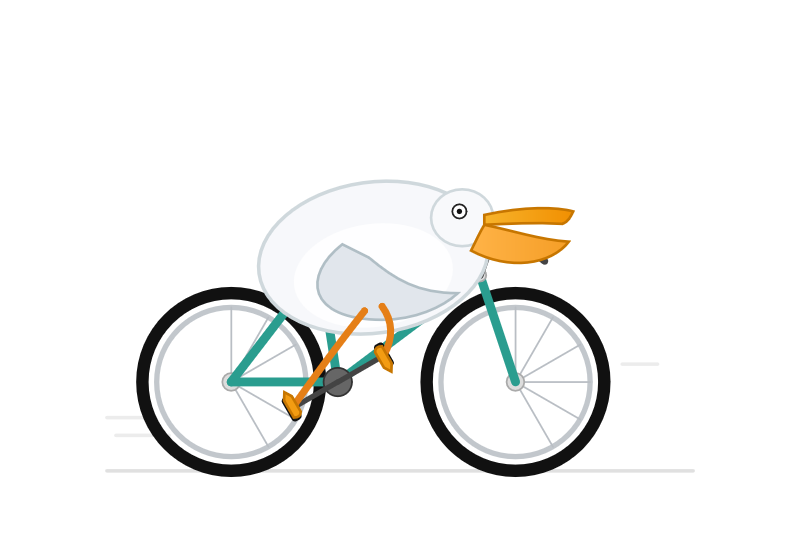

I don't have an Ultra account, but thankfully nickandbro on Hacker News tried "Create a svg of a pelican riding on a bicycle" (a very slight modification of my prompt, which uses "Generate an SVG") and got back a very solid result:

The bicycle is the right shape, and this is one of the few results I've seen for this prompt where the bird is very clearly a pelican thanks to the shape of its beak.

There are more details on Deep Think in the Gemini 2.5 Deep Think Model Card (PDF). Some highlights from that document:

- 1 million token input window, accepting text, images, audio, and video.

- Text output up to 192,000 tokens.

- Training ran on TPUs and used JAX and ML Pathways.

- "We additionally trained Gemini 2.5 Deep Think on novel reinforcement learning techniques that can leverage more multi-step reasoning, problem-solving and theorem-proving data, and we also provided access to a curated corpus of high-quality solutions to mathematics problems."

- Knowledge cutoff is January 2025.