Quotations


As an independent writer and publisher, I am the legal team. I am the fact-checking department. I am the editorial staff. I am the one responsible for triple-checking every single statement I make in the type of original reporting that I know carries a serious risk of baseless but ruinously expensive litigation regularly used to silence journalists, critics, and whistleblowers. I am the one deciding if that risk is worth taking, or if I should just shut up and write about something less risky.

Grandma’s secret cake recipe, passed down generation to generation, could be literally passed down: a flat slab of beige ooze kept in a battered pan, DNA-spliced and perfected by guided evolution by her own deft and ancient hands, a roiling wet mass of engineered microbes that slowly scabs over with delicious sponge cake, a delectable crust to be sliced once a week and enjoyed still warm with creme and spoons of pirated jam.

Go to data.gov, find an interesting recent dataset, and download it. Install sklearn with the bash tool, then write a .py file to split the data into train and test and make a classifier for it. (You may need to inspect the data and/or iterate if this goes poorly at first, but don't get discouraged!) Come up with some way to visualize the results of your classifier in the browser.

— Alex Albert, Prompting Claude Computer Use

We enhanced the ability of the upgraded Claude 3.5 Sonnet and Claude 3.5 Haiku to recognize and resist prompt injection attempts. Prompt injection is an attack where a malicious user feeds instructions to a model that attempt to change its originally intended behavior. Both models are now better able to recognize adversarial prompts from a user and behave in alignment with the system prompt. We constructed internal test sets of prompt injection attacks and specifically trained on adversarial interactions.

With computer use, we recommend taking additional precautions against the risk of prompt injection, such as using a dedicated virtual machine, limiting access to sensitive data, restricting internet access to required domains, and keeping a human in the loop for sensitive tasks.

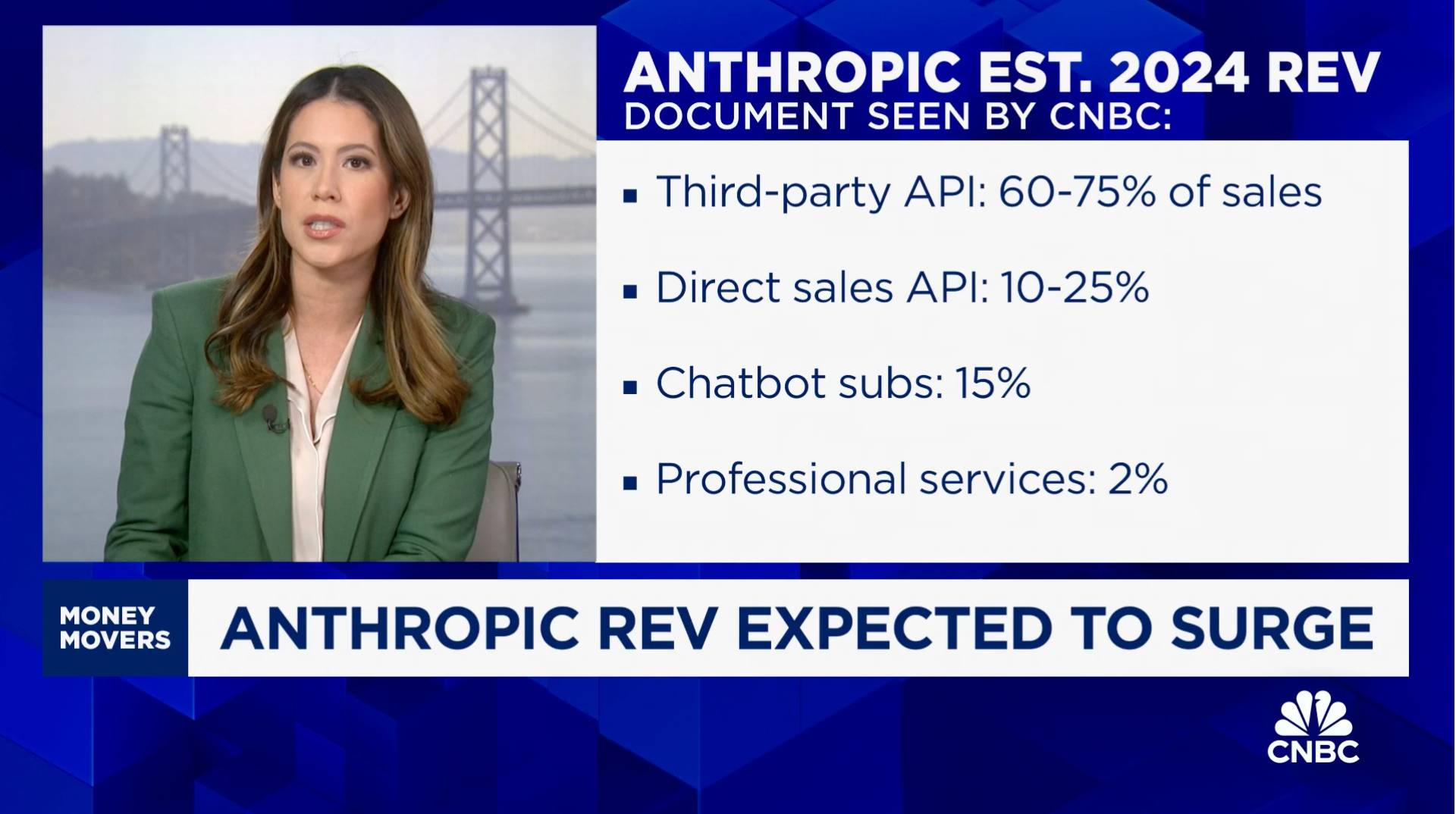

According to a document that I viewed, Anthropic is telling investors that it is expecting a billion dollars in revenue this year.

Third-party API is expected to make up the majority of sales, 60% to 75% of the total. That refers to the interfaces that allow external developers or third parties like Amazon's AWS to build and scale their own AI applications using Anthropic's models. [Simon's guess: this could mean Anthropic model access sold through AWS Bedrock and Google Vertex]

That is by far its biggest business, with direct API sales a distant second projected to bring in 10% to 25% of revenue. Chatbots, that is its subscription revenue from Claude, the chatbot, that's expected to make up 15% of sales in 2024 at $150 million.

— Deirdre Bosa, CNBC Money Movers, Sep 24th 2024

OpenAI’s monthly revenue hit $300 million in August, up 1,700 percent since the beginning of 2023, and the company expects about $3.7 billion in annual sales this year, according to financial documents reviewed by The New York Times. [...]

The company expects ChatGPT to bring in $2.7 billion in revenue this year, up from $700 million in 2023, with $1 billion coming from other businesses using its technology.

— Mike Isaac and Erin Griffith, New York Times, Sep 27th 2024
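The figures in this quote can be sanity-checked with a little arithmetic. The sketch below assumes "up 1,700 percent" means the August figure is 18 times the starting one, and the helper name `value_before_increase` is made up for illustration; it is not taken from the reported documents.

```python
def value_before_increase(current, percent_increase):
    """Return the starting value, given the current value and a percentage increase.

    Assumption: "up N percent" means current = start * (1 + N / 100).
    """
    return current / (1 + percent_increase / 100)


# Monthly revenue of $300 million in August, "up 1,700 percent".
implied_start_monthly = value_before_increase(300e6, 1700)

# Reported split of this year's expected revenue.
chatgpt_revenue = 2.7e9
other_business_revenue = 1.0e9
implied_total = chatgpt_revenue + other_business_revenue

print(f"Implied monthly revenue at the start of 2023: ${implied_start_monthly / 1e6:.1f}M")
print(f"ChatGPT plus other businesses: ${implied_total / 1e9:.1f}B")
```

Under that reading, the two components add up to the quoted "about $3.7 billion", and the implied starting point is a little under $17 million a month.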

For the same cost and similar speed to Claude 3 Haiku, Claude 3.5 Haiku improves across every skill set and surpasses even Claude 3 Opus, the largest model in our previous generation, on many intelligence benchmarks. Claude 3.5 Haiku is particularly strong on coding tasks. For example, it scores 40.6% on SWE-bench Verified, outperforming many agents using publicly available state-of-the-art models—including the original Claude 3.5 Sonnet and GPT-4o. [...]

Claude 3.5 Haiku will be made available later this month across our first-party API, Amazon Bedrock, and Google Cloud’s Vertex AI—initially as a text-only model and with image input to follow.

— Anthropic, pre-announcing Claude 3.5 Haiku

I've often been building single-use apps with Claude Artifacts when I'm helping my children learn. For example here's one on visualizing fractions. [...] What's more surprising is that it is far easier to create an app on-demand than searching for an app in the app store that will do what I'm looking for. Searching for kids' learning apps is typically a nails-on-chalkboard painful experience because 95% of them are addictive garbage. And even if I find something usable, it can't match the fact that I can tell Claude what I want.

I really dislike the practice of replacing passwords with email “magic links”. Autofilling a password from my keychain happens instantly; getting a magic link from email can take minutes sometimes, and even in the fastest case, it’s nowhere near instantaneous. Replacing something very fast — password autofill — with something slower is just a terrible idea.

Who called it “intellectual property problems around the acquisition of training data for Large Language Models” and not Grand Theft Autocomplete?

— Jens Ohlig, on March 8th 2024

It feels like we’re at a bit of an inflection point for the Django community. [...] One of the places someone could have the most impact is by serving on the DSF Board. Like the community at large, the DSF is at a transition point: we’re outgrowing the “small nonprofit” status, and have the opportunity to really expand our ambition and reach. In all likelihood, the decisions the Board makes over the next year or two will define our direction and strategy for the next decade.

I'm of the opinion that you should never use mmap, because if you get an I/O error of some kind, the OS raises a signal, which SQLite is unable to catch, and so the process dies. When you are not using mmap, SQLite gets back an error code from an I/O error and is able to take remedial action, or at least compose an error message.
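A minimal sketch of what that advice could look like from Python's built-in `sqlite3` module. `PRAGMA mmap_size` is a real SQLite pragma; the helper names `open_without_mmap` and `read_or_report` are hypothetical, and the failing query below stands in for a genuine I/O error, which would surface as an `sqlite3.Error` in the same way.

```python
import os
import sqlite3
import tempfile


def open_without_mmap(path):
    """Open a database and ask SQLite not to use memory-mapped I/O."""
    conn = sqlite3.connect(path)
    # Setting mmap_size to 0 disables mmap for this connection. The pragma
    # returns no row on builds or databases where mmap is unavailable.
    row = conn.execute("PRAGMA mmap_size = 0").fetchone()
    return conn, (row[0] if row else None)


def read_or_report(conn, sql):
    """Run a query; on failure return an error message instead of crashing."""
    try:
        return conn.execute(sql).fetchall(), None
    except sqlite3.Error as exc:
        # With ordinary read/write I/O, failures arrive here as exceptions
        # that the program can handle or at least describe.
        return None, f"query failed: {exc}"


with tempfile.TemporaryDirectory() as tmp:
    conn, mmap_size = open_without_mmap(os.path.join(tmp, "demo.db"))
    conn.execute("CREATE TABLE notes (body TEXT)")
    conn.execute("INSERT INTO notes VALUES ('hello')")
    rows, error = read_or_report(conn, "SELECT body FROM notes")
    missing_rows, missing_error = read_or_report(conn, "SELECT * FROM no_such_table")
    conn.close()

print(mmap_size, rows, error)
print(missing_rows, missing_error)
```

The point of the design is the second helper: an error comes back as a value the program can act on, which is the behaviour the quote says is lost when an mmap fault is delivered as a signal.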

A common misconception about Transformers is to believe that they're a sequence-processing architecture. They're not.

They're a set-processing architecture. Transformers are 100% order-agnostic (which was the big innovation compared to RNNs, back in late 2016 -- you compute the full matrix of pairwise token interactions instead of processing one token at a time).

The way you add order awareness in a Transformer is at the feature level. You literally add to your token embeddings a position embedding / encoding that corresponds to its place in a sequence. The architecture itself just treats the input tokens as a set.
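The claim can be checked with a toy example. This is a sketch under simplifying assumptions, not a real Transformer layer: a single attention head, identity query/key/value projections, two-dimensional tokens, and a made-up sinusoidal position encoding in `add_positions`. All function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Toy single-head self-attention.

    Assumption: queries, keys and values are the token vectors themselves
    (identity projections), which is enough to show the ordering behaviour.
    """
    dim = len(tokens[0])
    outputs = []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        outputs.append(
            [sum(w * value[i] for w, value in zip(weights, tokens)) for i in range(dim)]
        )
    return outputs


def add_positions(tokens):
    """Add a toy position encoding to 2-d tokens (illustrative only)."""
    return [[x + math.sin(pos), y + math.cos(pos)] for pos, (x, y) in enumerate(tokens)]


def close(a, b):
    """Compare two vectors up to floating-point rounding."""
    return all(math.isclose(x, y, abs_tol=1e-9) for x, y in zip(a, b))


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
order = [2, 0, 1]  # a reordering of the same three tokens
shuffled = [tokens[i] for i in order]

# Without position information, each token gets the same output
# no matter where it sits in the input.
plain = self_attention(tokens)
plain_shuffled = self_attention(shuffled)
same_without_positions = all(
    close(plain_shuffled[j], plain[i]) for j, i in enumerate(order)
)

# Once positions are added to the embeddings, reordering changes the result.
with_pos = self_attention(add_positions(tokens))
with_pos_shuffled = self_attention(add_positions(shuffled))
same_with_positions = all(
    close(with_pos_shuffled[j], with_pos[i]) for j, i in enumerate(order)
)

print(same_without_positions, same_with_positions)
```

Shuffling the input only shuffles the output in the first case, which is the "set-processing" behaviour described above; order only starts to matter once it is written into the features themselves.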

The problem with passkeys is that they're essentially a halfway house to a password manager, but tied to a specific platform in ways that aren't obvious to a user at all, and liable to easily leave them unable to access their accounts. [...]

Chrome on Windows stores your passkeys in Windows Hello, so if you sign up for a service on Windows, and you then want to access it on iPhone, you're going to be stuck (unless you're so forward thinking as to add a second passkey, somehow, from the iPhone while on the Windows computer!). The passkey lives on the wrong device, if you're away from the computer and want to login, and it's not at all obvious to most users how they might fix that.

Carl Hewitt recently remarked that the question what is an agent? is embarrassing for the agent-based computing community in just the same way that the question what is intelligence? is embarrassing for the mainstream AI community. The problem is that although the term is widely used, by many people working in closely related areas, it defies attempts to produce a single universally accepted definition. This need not necessarily be a problem: after all, if many people are successfully developing interesting and useful applications, then it hardly matters that they do not agree on potentially trivial terminological details. However, there is also the danger that unless the issue is discussed, 'agent' might become a 'noise' term, subject to both abuse and misuse, to the potential confusion of the research community.

— Michael Wooldridge, in 1994, Intelligent Agents: Theory and Practice

Frankenstein is a terrific book partly based on how concerned people were about electricity. It captures our fears about the nature of being human but didn’t help anyone really come up with better policies for dealing with electricity. I worry that a lot of AI critics are doing the same thing.

The primary use of “misinformation” is not to change the beliefs of other people at all. Instead, the vast majority of misinformation is offered as a service for people to maintain their beliefs in face of overwhelming evidence to the contrary.

— Mike Caulfield, via Charlie Warzel

Providing validation, strength, and stability to people who feel gaslit and dismissed and forgotten can help them feel stronger and surer in their decisions. These pieces made me understand that journalism can be a caretaking profession, even if it is never really thought about in those terms. It is often framed in terms of antagonism. Speaking truth to power turns into being hard-nosed and removed from our subject matter, which so easily turns into "be an asshole and do whatever you like."

This is a viewpoint that I reject. My pillars are empathy, curiosity, and kindness. And much else flows from that. For people who feel lost and alone, we get to say through our work, you are not. For people who feel like society has abandoned them and their lives do not matter, we get to say, actually, they fucking do. We are one of the only professions that can do that through our work and that can do that at scale.

Students who use AI as a crutch don’t learn anything. It prevents them from thinking. Instead, using AI as co-intelligence is important because it increases your capabilities and also keeps you in the loop. […]

AI does so many things that we need to set guardrails on what we don’t want to give up. It’s a very weird, general-purpose technology, which means it will affect all kinds of things, and we’ll have to adjust socially.

At first, I struggled to understand why anyone would want to write this way. My dialogue with ChatGPT was frustratingly meandering, as though I were excavating an essay instead of crafting one. But, when I thought about the psychological experience of writing, I began to see the value of the tool. ChatGPT was not generating professional prose all at once, but it was providing starting points: interesting research ideas to explore; mediocre paragraphs that might, with sufficient editing, become usable. For all its inefficiencies, this indirect approach did feel easier than staring at a blank page; “talking” to the chatbot about the article was more fun than toiling in quiet isolation. In the long run, I wasn’t saving time: I still needed to look up facts and write sentences in my own voice. But my exchanges seemed to reduce the maximum mental effort demanded of me.

[Reddit is] mostly ported over entirely to Lit now. There are a few straggling pages that we're still working on, but most of what everyday typical users see and use is now entirely Lit based. This includes both logged out and logged in experiences.

— Jim Simon, Reddit

I listened to the whole 15-minute podcast this morning. It was, indeed, surprisingly effective. It remains somewhere in the uncanny valley, but not at all in a creepy way. Just more in a “this is a bit vapid and phony” way. [...] But ultimately the conversation has all the flavor of a bowl of unseasoned white rice.

But in terms of the responsibility of journalism, we do have intense fact-checking because we want it to be right. Those big stories are aggregations of incredible journalism. So it cannot function without journalism. Now, we recheck it to make sure it's accurate or that it hasn't changed, but we're building this to make jokes. It's just we want the foundations to be solid or those jokes fall apart. Those jokes have no structural integrity if the facts underneath them are bullshit.

In the future, we won't need programmers; just people who can describe to a computer precisely what they want it to do.

If you use a JavaScript framework you should:

- be able to justify with evidence, how using JavaScript would benefit users

- be aware of any negative impacts and be able to mitigate them

- consider whether the benefits of using it outweigh the potential problems

- only use the framework for parts of the user interface that cannot be built using HTML and CSS alone

- design each part of the user interface as a separate component

Having separate components means that if the JavaScript fails to load, it will only be that single component that fails. The rest of the page will load as normal.

OpenAI’s revenue in August more than tripled from a year ago, according to the documents, and about 350 million people — up from around 100 million in March — used its services each month as of June. […]

Roughly 10 million ChatGPT users pay the company a $20 monthly fee, according to the documents. OpenAI expects to raise that price by $2 by the end of the year, and will aggressively raise it to $44 over the next five years, the documents said.

Consumer products have had growth hackers for many years optimizing every part of the onboarding funnel. Dev tools should do the same. Getting started shouldn't be an afterthought after you built the product. Getting started is the product!

And I mean this to the point where I think it's worth restructuring your entire product to enable fast onboarding. Get rid of mandatory config. Make it absurdly easy to set up API tokens. Remove all the friction. Make it possible for users to use your product on their laptop in a couple of minutes, tops.

I think individual creators or publishers tend to overestimate the value of their specific content in the grand scheme of [AI training]. […]

We pay for content when it’s valuable to people. We’re just not going to pay for content when it’s not valuable to people. I think that you’ll probably see a similar dynamic with AI, which my guess is that there are going to be certain partnerships that get made when content is really important and valuable. I’d guess that there are probably a lot of people who have a concern about the feel of it, like you’re saying. But then, when push comes to shove, if they demanded that we don’t use their content, then we just wouldn’t use their content. It’s not like that’s going to change the outcome of this stuff that much.

We used this model [periodically transmitting configuration to different hosts] to distribute translations, feature flags, configuration, search indexes, etc at Airbnb. But instead of SQLite we used Sparkey, a KV file format developed by Spotify. In early years there was a Cron job on every box that pulled that service’s thingies; then once we switched to Kubernetes we used a daemonset & host tagging (taints?) to pull a variety of thingies to each host and then ensure the services that use the thingies only ran on the hosts that had the thingies.

SPAs incur complexity that simply doesn't exist with traditional server-based websites: issues such as search engine optimization, browser history management, web analytics and first page load time all need to be addressed. Proper analysis and consideration of the trade-offs is required to determine if that complexity is warranted for business or user experience reasons. Too often teams are skipping that trade-off analysis, blindly accepting the complexity of SPAs by default even when business needs don't justify it. We still see some developers who aren't aware of an alternative approach because they've spent their entire career in a framework like React.

— Thoughtworks, October 2022