Posts tagged webassembly in 2023

Filters: Year: 2023 × webassembly × Sorted by date

The WebAssembly Go Playground (via) Jeff Lindsay has a full Go 1.21.1 compiler running entirely in the browser.

Perplexity: interactive LLM visualization (via) I linked to a video of Linus Lee’s GPT visualization tool the other day. Today he’s released a new version of it that people can actually play with: it runs entirely in a browser, powered by a 120MB version of the GPT-2 ONNX model loaded using the brilliant Transformers.js JavaScript library.

Wikipedia search-by-vibes through millions of pages offline (via) Really cool demo by Lee Butterman, who built embeddings of 2 million Wikipedia pages and figured out how to serve them directly to the browser, where they are used to implement “vibes based” similarity search returning results in 250ms. Lots of interesting details about how he pulled this off, using Arrow as the file format and ONNX to run the model in the browser.

WebLLM supports Llama 2 70B now. The WebLLM project from MLC uses WebGPU to run large language models entirely in the browser. They recently added support for Llama 2, including Llama 2 70B, the largest and most powerful model in that family.

To my astonishment, this worked! I used a M2 Mac with 64GB of RAM and Chrome Canary and it downloaded many GBs of data... but it worked, and spat out tokens at a slow but respectable rate of 3.25 tokens/second.

Building a Signal Analyzer with Modern Web Tech (via) Casey Primozic’s detailed write-up of his project to build a spectrogram and oscilloscope using cutting-edge modern web technology: Web Workers, Web Audio, SharedArrayBuffer, Atomics.waitAsync, OffscreenCanvas, WebAssembly SIMD and more. His conclusion: “Web developers now have all the tools they need to build native-or-better quality apps on the web.”

Web LLM runs the vicuna-7b Large Language Model entirely in your browser, and it’s very impressive

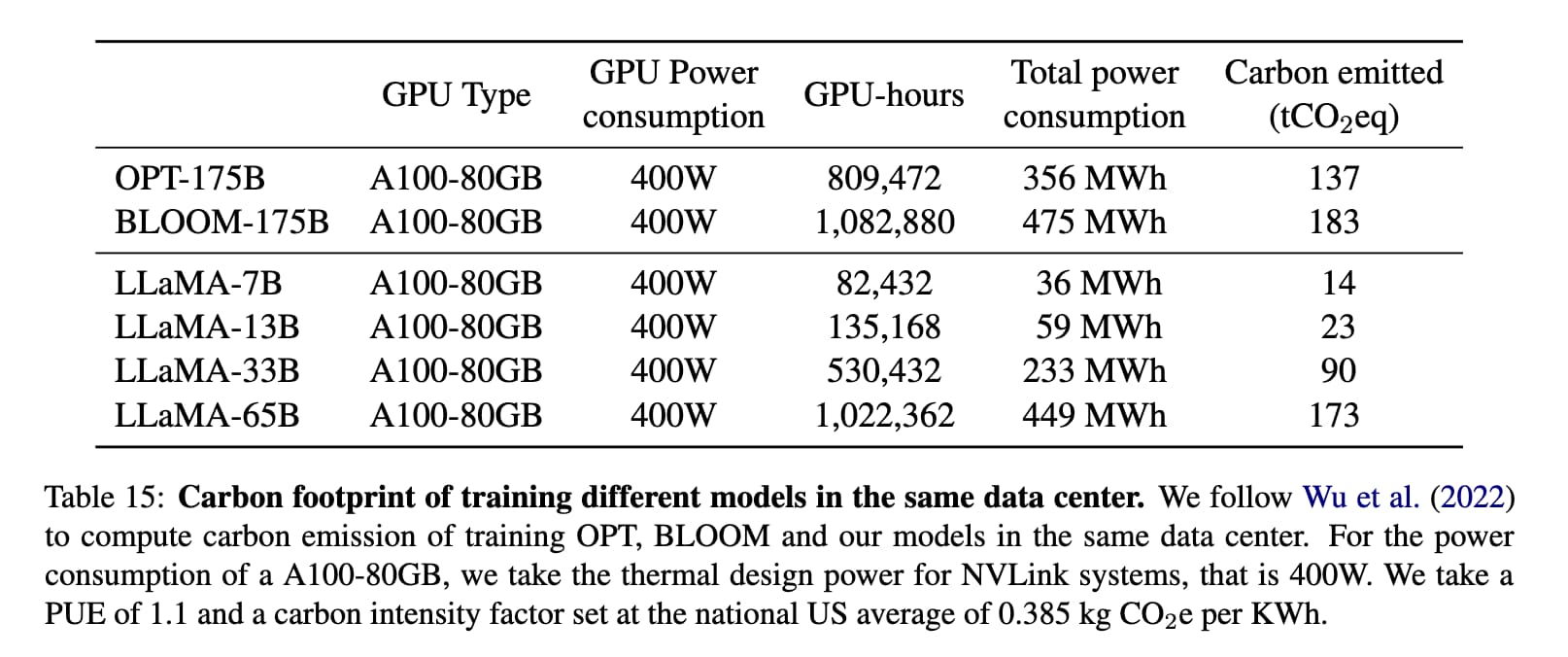

A month ago I asked Could you train a ChatGPT-beating model for $85,000 and run it in a browser?. $85,000 was a hypothetical training cost for LLaMA 7B plus Stanford Alpaca. “Run it in a browser” was based on the fact that Web Stable Diffusion runs a 1.9GB Stable Diffusion model in a browser, so maybe it’s not such a big leap to run a small Large Language Model there as well.

[... 2,276 words]AI photo sorter (via) Really interesting implementation of machine learning photo classification by Alexander Visheratin. This tool lets you select as many photos as you like from your own machine, then provides a web interface for classifying them into labels that you provide. It loads a 102MB quantized CLIP model and executes it in the browser using WebAssembly. Once classified, a “Generate script” button produces a copyable list of shell commands for moving your images into corresponding folders on your own machine. Your photos never get uploaded to a server—everything happens directly in your browser.

Could you train a ChatGPT-beating model for $85,000 and run it in a browser?

I think it’s now possible to train a large language model with similar functionality to GPT-3 for $85,000. And I think we might soon be able to run the resulting model entirely in the browser, and give it capabilities that leapfrog it ahead of ChatGPT.

[... 1,751 words]Web Stable Diffusion (via) I just ran the full Stable Diffusion image generation model entirely in my browser, and used it to generate an image (of two raccoons eating pie in the woods, see “via” link). I had to use Google Chrome Canary since this depends on WebGPU which still isn’t fully rolled out, but it worked perfectly.

Weeknotes: A bunch of things I learned this week, plus datasette-explain

The Datasette table view refactor, JSON redesign and ?_extra= continues this week, mainly in this ongoing pull request and this tracking issue.

PocketPy. PocketPy is “a lightweight(~5000 LOC) Python interpreter for game engines”. It’s implemented as a single C++ header which provides an impressive subset of the Python language: functions, dictionaries, lists, strings and basic classes too. There’s also a browser demo that loads a 766.66 KB pypocket.wasm file (240.72 KB compressed) and uses it to power a basic terminal interface. I tried and failed to get that pypocket.wasm file working from wasmer/wasmtime/wasm3—it should make a really neat lightweight language to run in a WebAssembly sandbox.

Python Sandbox in Web Assembly (via) Jim Kring responded to my questions on Mastodon about running Python in a WASM sandbox by building this repo, which demonstrates using wasmer-python to run a build of Python 3.6 compiled to WebAssembly, complete with protected access to a sandbox directory.