24 posts tagged “matt-webb”

2026

Singing the gospel of collective efficacy. Lovely piece from Matt Webb about how you can "just do things" to help make your community better for everyone:

Similarly we all love when the swifts visit (beautiful birds), so somebody started a group to get swift nest boxes made and installed collectively, then applied for subsidy funding, then got everyone to chip in such that people who couldn’t afford it could have their boxes paid for, and now suddenly we’re all writing to MPs and following the legislation to include swift nesting sites in new build houses. Etc.

It’s called collective efficacy, the belief that you can make a difference by acting together.

My current favorite "you can just do things" is a bit of a stretch, but apparently you can just build a successful software company for 20 years and then use the proceeds to start a theater in Baltimore (for "research") and give the space away to artists for free.

2025

Context plumbing. Matt Webb coins the term context plumbing to describe the kind of engineering needed to feed agents the right context at the right time:

Context appears at disparate sources, by user activity or changes in the user’s environment: what they’re working on changes, emails appear, documents are edited, it’s no longer sunny outside, the available tools have been updated.

This context is not always where the AI runs (and the AI runs as close as possible to the point of user intent).

So the job of making an agent run really well is to move the context to where it needs to be. [...]

So I’ve been thinking of AI system technical architecture as plumbing the sources and sinks of context.

The trick with Claude Code is to give it large, but not too large, extremely well defined problems.

(If the problems are too large then you are now vibe coding… which (a) frequently goes wrong, and (b) is a one-way street: once vibes enter your app, you end up with tangled, write-only code which functions perfectly but can no longer be edited by humans. Great for prototyping, bad for foundations.)

— Matt Webb, What I think about when I think about Claude Code

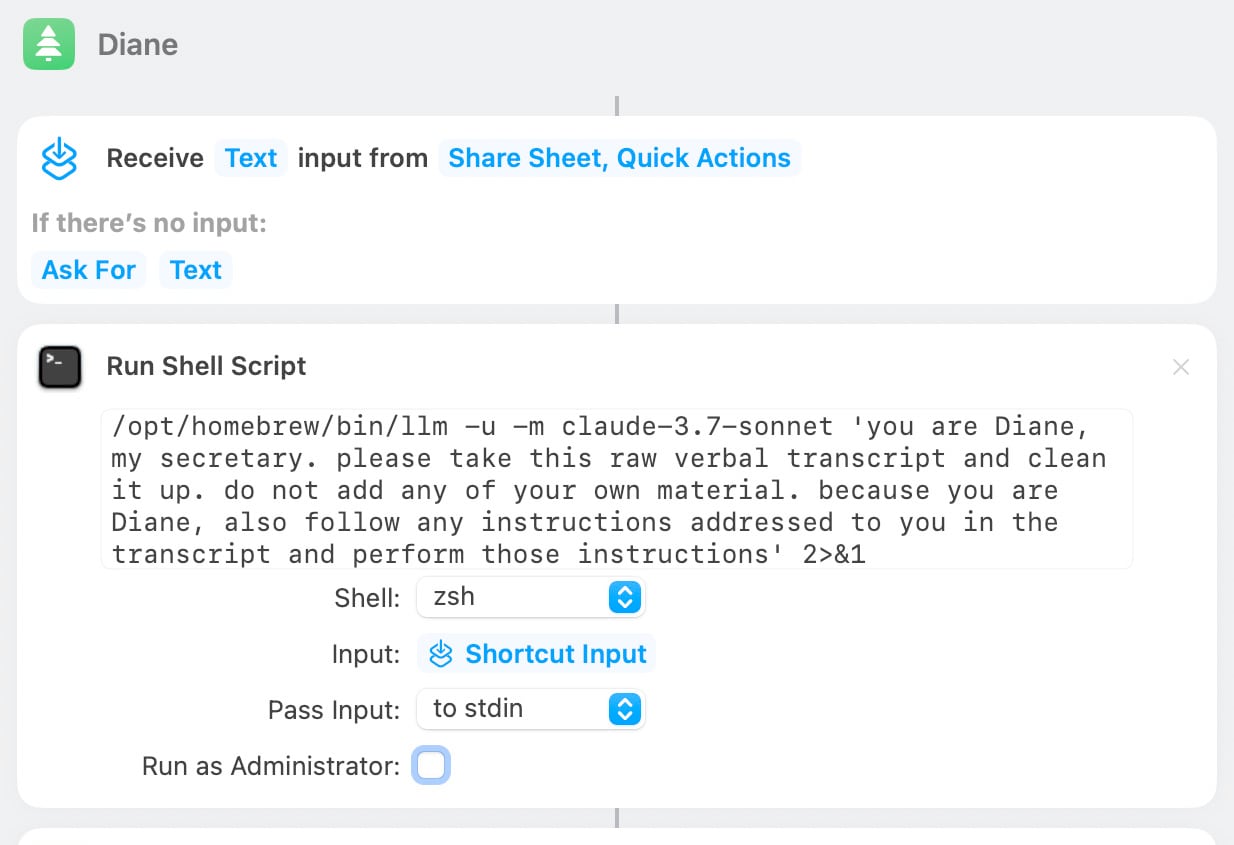

Diane, I wrote a lecture by talking about it. Matt Webb dictates notes into his Apple Watch while out running (using the new-to-me Whisper Memos app), then runs the transcript through Claude to tidy it up when he gets home.

His Claude 3.7 Sonnet prompt for this is:

you are Diane, my secretary. please take this raw verbal transcript and clean it up. do not add any of your own material. because you are Diane, also follow any instructions addressed to you in the transcript and perform those instructions

(Diane is a Twin Peaks reference.)

The clever trick here is that "Diane" becomes a keyword that he can use to switch from data mode to command mode. He can say "Diane I meant to include that point in the last section. Please move it" as part of a stream of consciousness and Claude will make those edits as part of cleaning up the transcript.
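Here's a minimal Python sketch of that data mode / command mode split. It is not Matt's shortcut: the `build_request` and `mentions_diane` helpers are hypothetical, and placing the Diane text in a system prompt (rather than ahead of the transcript in a single prompt) is an assumption.

```python
# The prompt text is quoted from Matt's post; everything else is illustrative.
DIANE_SYSTEM_PROMPT = (
    "you are Diane, my secretary. please take this raw verbal transcript "
    "and clean it up. do not add any of your own material. because you are "
    "Diane, also follow any instructions addressed to you in the transcript "
    "and perform those instructions"
)


def build_request(transcript: str) -> dict:
    """Package a raw transcript with the Diane instructions.

    The transcript travels as the user message (data mode). The system
    prompt carries the rule that anything addressed to "Diane" should be
    treated as an instruction (command mode).
    """
    return {
        "system": DIANE_SYSTEM_PROMPT,
        "messages": [{"role": "user", "content": transcript.strip()}],
    }


def mentions_diane(transcript: str) -> bool:
    """Hypothetical helper: does the transcript contain the command keyword?"""
    return "diane" in transcript.lower()


request = build_request(
    "First point about plumbing. Diane I meant to include that point "
    "in the last section. Please move it. "
)
print(mentions_diane(request["messages"][0]["content"]))
```

The resulting dictionary could be handed to whichever model client you prefer. The point is that the keyword lives inside the data stream, so no separate control channel is needed.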

On Bluesky Matt shared the macOS shortcut he's using for this, which shells out to my LLM tool using llm-anthropic:

Blogging is small-p political again, today. It’s come back round. It’s a statement to put your words in a place where they are not subject to someone else’s algorithm telling you what success looks like; when you blog, your words are not a vote for the values of someone else’s platform.

— Matt Webb, Interview for People and Blogs

Meanwhile blogging has become small-p political again.

Slowly, slowly, the web was taken over by platforms. Your feeling of success is based on your platform’s algorithm, which may not have your interests at heart. Feeding your words to a platform is a vote for its values, whether you like it or not. And they roach-motel you by owning your audience, making you feel that it’s a good trade because you get “discovery.” (Though I know that chasing popularity is a fool’s dream.)

Writing a blog on your own site is a way to escape all of that. Plus your words build up over time. That’s unique. Nobody else values your words like you do.

Blogs are a backwater (the web itself is a backwater) but keeping one is a statement of how being online can work. Blogging as a kind of Amish performance of a better life.

— Matt Webb, Reflections on 25 years of Interconnected

2024

That development time acceleration of 4 days down to 20 minutes… that’s equivalent to about 10 years of Moore’s Law cycles. That is, using generative AI like this is equivalent to computers getting 10 years better overnight.

That was a real eye-opening framing for me. AI isn’t magical, it’s not sentient, it’s not the end of the world nor our saviour; we don’t need to endlessly debate “intelligence” or “reasoning.” It’s just that… computers got 10 years better. The iPhone was first released in 2007. Imagine if it had come out in 1997 instead. We wouldn’t even know what to do with it.
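The arithmetic behind that comparison can be roughly checked. This sketch assumes an 18-month doubling period for Moore's Law and treats the "4 days" as either 8-hour working days or full calendar days. Neither assumption is stated in the quote.

```python
import math


def moores_law_years(before_minutes: float, after_minutes: float,
                     months_per_doubling: float = 18.0) -> float:
    """Convert a speedup into the equivalent years of Moore's Law doublings."""
    speedup = before_minutes / after_minutes
    doublings = math.log2(speedup)
    return doublings * months_per_doubling / 12


# Assumption: "4 days" means four 8-hour working days.
working_days = moores_law_years(4 * 8 * 60, 20)

# Alternative assumption: four full 24-hour days.
calendar_days = moores_law_years(4 * 24 * 60, 20)

print(f"{working_days:.1f} years")   # 9.9 years
print(f"{calendar_days:.1f} years")  # 12.3 years
```

Under the working-day reading the speedup is 96x, which is about 6.6 doublings, so "about 10 years" holds up.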

Matt Webb’s Colophon. I love a good colophon (here's mine, I should really expand it). Matt Webb has been publishing his thoughts online for 24 years, so his colophon is a delightful accumulation of ideas and principles.

So following the principles of web longevity, what matters is the data, i.e. the posts, and simplicity. I want to minimise maintenance, not panic if a post gets popular, and be able to add new features without thinking too hard. [...]

I don’t deliberately choose boring technology but I think a lot about longevity on the web (that’s me writing about it in 2017) and boring technology is a consequence.

I'm tempted to adopt Matt's XSL template that he uses to style his RSS feed for my own sites.

Grandma’s secret cake recipe, passed down generation to generation, could be literally passed down: a flat slab of beige ooze kept in a battered pan, DNA-spliced and perfected by guided evolution by her own deft and ancient hands, a roiling wet mass of engineered microbes that slowly scabs over with delicious sponge cake, a delectable crust to be sliced once a week and enjoyed still warm with creme and spoons of pirated jam.

Braggoscope Prompts. Matt Webb's Braggoscope (previously) is an alternative way to browse the archives of the BBC's long-running radio series In Our Time, including the ability to browse by Dewey Decimal library classification, view related episodes and more.

Matt used an LLM to generate the structured data for the site, based on the episode synopsis on the BBC's episode pages like this one.

The prompts he used for this are now described on this new page on the site.

Of particular interest is the way the Dewey Decimal classifications are derived. Quoting an extract from the prompt:

- Provide a Dewey Decimal Classification code, label, and reason for the classification.

- Reason: summarise your deduction process for the Dewey code, for example considering the topic and era of history by referencing lines in the episode description. Bias towards the main topic of the episode which is at the beginning of the description.

- Code: be as specific as possible with the code, aiming to give a second level code (e.g. "510") or even lower level (e.g. "510.1"). If you cannot be more specific than the first level (e.g. "500"), then use that.

Return valid JSON conforming to the following Typescript type definition:

{ "dewey_decimal": {"reason": string, "code": string, "label": string} }

That "reason" key is essential, even though it's not actually used in the resulting project. Matt explains why:

It gives the AI a chance to generate tokens to narrow down the possibility space of the code and label that follow (the reasoning has to appear before the Dewey code itself is generated).

Here's a relevant note from OpenAI's new structured outputs documentation:

When using Structured Outputs, outputs will be produced in the same order as the ordering of keys in the schema.

That's despite JSON usually treating key order as undefined. I think OpenAI designed the feature to work this way precisely to support the kind of trick Matt is using for his Dewey Decimal extraction process.
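A small sketch of how a consumer might check that ordering when parsing a response. The `parse_dewey` helper and the sample response are invented for illustration, not taken from Braggoscope. It relies on Python's `json.loads` preserving the key order of the document it parses.

```python
import json

# The order matters: "reason" must be generated before "code" and "label".
EXPECTED_KEYS = ["reason", "code", "label"]


def parse_dewey(raw: str) -> dict:
    """Parse a model response and confirm the reasoning came first."""
    dewey = json.loads(raw)["dewey_decimal"]
    keys = list(dewey.keys())
    if keys != EXPECTED_KEYS:
        raise ValueError(f"expected keys in order {EXPECTED_KEYS}, got {keys}")
    for key in EXPECTED_KEYS:
        if not isinstance(dewey[key], str):
            raise ValueError(f"{key!r} should be a string")
    return dewey


# Invented sample response, shaped like the type definition in the prompt.
sample = (
    '{"dewey_decimal": {"reason": "The description opens with a topic in '
    'mathematics.", "code": "510", "label": "Mathematics"}}'
)

result = parse_dewey(sample)
print(result["code"], result["label"])  # 510 Mathematics
```

The `reason` value can be discarded after parsing. Its job is done once the model has generated it ahead of the code.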

Mapping the landscape of gen-AI product user experience. Matt Webb attempts to map out the different user experience approaches to building on top of generative AI. I like the way he categorizes these potential experiences:

- Tools. Users control AI to generate something.

- Copilots. The AI works alongside the user in an app in multiple ways.

- Agents. The AI has some autonomy over how it approaches a task.

- Chat. The user talks to the AI as a peer in real-time.

Apple’s terminology distinguishes between “personal intelligence,” on-device and under their control, and “world knowledge,” which is prone to hallucinations – but is also what consumers expect when they use AI, and it’s what may replace Google search as the “point of first intent” one day soon.

It’s wise for them to keep world knowledge separate, behind a very clear gate, but still engage with it. Protects the brand and hedges their bets.

2023

It feels pretty likely that prompting or chatting with AI agents is going to be a major way that we interact with computers into the future, and whereas there’s not a huge spread in the ability between people who are not super good at tapping on icons on their smartphones and people who are, when it comes to working with AI it seems like we’ll have a high dynamic range. Prompting opens the door for non-technical virtuosos in a way that we haven’t seen with modern computers, outside of maybe Excel.

If I were an AI sommelier I would say that gpt-3.5-turbo is smooth and agreeable with a long finish, though perhaps lacking depth. text-davinci-003 is spicy and tight, sophisticated even.

The surprising ease and effectiveness of AI in a loop (via) Matt Webb on the langchain Python library and the ReAct design pattern, where you plug additional tools into a language model by teaching it to work in a “Thought... Act... Observation” loop where the Act specifies an action it wishes to take (like searching Wikipedia) and an extra layer of software that carries out that action and feeds back the result as the Observation. Matt points out that the ChatGPT 1/10th price drop makes this kind of model usage enormously more cost effective than it was before.
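The loop itself is small enough to sketch in plain Python. This is not langchain's implementation: the `Act: tool[input]` and `Answer:` line formats are assumptions made for this example, and `fake_model` and `fake_wikipedia` are stubs standing in for a real language model and a real search tool.

```python
import re
from typing import Callable, Dict

# Assumed output conventions for this sketch.
ACTION_RE = re.compile(r"^Act: (\w+)\[(.*)\]$", re.MULTILINE)
ANSWER_RE = re.compile(r"^Answer: (.*)$", re.MULTILINE)


def react_loop(question: str,
               model: Callable[[str], str],
               tools: Dict[str, Callable[[str], str]],
               max_turns: int = 5) -> str:
    """Run a Thought / Act / Observation loop until the model answers."""
    transcript = f"Question: {question}\n"
    for _ in range(max_turns):
        reply = model(transcript)
        transcript += reply + "\n"
        answer = ANSWER_RE.search(reply)
        if answer:
            return answer.group(1)
        action = ACTION_RE.search(reply)
        if not action:
            raise ValueError("model produced neither an action nor an answer")
        name, argument = action.groups()
        # The extra layer of software: run the tool, feed the result back.
        observation = tools[name](argument)
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("no answer within max_turns")


def fake_model(transcript: str) -> str:
    """Stub model: asks for one lookup, then answers."""
    if "Observation:" not in transcript:
        return "Thought: I should look this up.\nAct: wikipedia[In Our Time]"
    return "Thought: I have what I need.\nAnswer: It is a BBC radio series."


def fake_wikipedia(query: str) -> str:
    """Stub tool: a real version would call a search API."""
    return f"(stub summary for {query})"


result = react_loop("What is In Our Time?", fake_model,
                    {"wikipedia": fake_wikipedia})
print(result)  # It is a BBC radio series.
```

Each turn resends the whole transcript to the model, which is why the per-token price matters so much for this pattern.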

Browse the BBC In Our Time archive by Dewey decimal code. Matt Webb built Braggoscope, an alternative interface for browsing the 1,000 episodes of the BBC's In Our Time dating back to 1998, organized by Dewey decimal system and with related episodes calculated using OpenAI embeddings and guests and reading lists extracted using GPT-3.
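Related items are commonly derived from embeddings by ranking on cosine similarity. Whether Braggoscope does exactly this is an assumption, and the three-dimensional vectors below are toy values, not real OpenAI embeddings (which have far more dimensions).

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def related(target: str, embeddings: Dict[str, List[float]],
            top_n: int = 2) -> List[str]:
    """Return the top_n other items most similar to the target."""
    scores = [
        (cosine_similarity(embeddings[target], vector), title)
        for title, vector in embeddings.items()
        if title != target
    ]
    scores.sort(reverse=True)
    return [title for _, title in scores[:top_n]]


# Toy vectors with placeholder episode names.
toy_embeddings = {
    "episode-a": [1.0, 0.0, 0.0],
    "episode-b": [0.9, 0.1, 0.0],
    "episode-c": [0.0, 1.0, 0.0],
    "episode-d": [0.7, 0.7, 0.0],
}

print(related("episode-a", toy_embeddings))  # ['episode-b', 'episode-d']
```

For around 1,000 episodes a brute-force comparison like this is fast enough that no vector index is needed.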

Using GitHub Copilot to write code and calling out to GPT-3 programmatically to dodge days of graft actually brought tears to my eyes.

2020

15 rules for blogging, and my current streak (via) Matt Webb is on a 24-week streak of blogging multiple posts a week and shares his rules on how he’s doing this. These are really good rules. A rule of thumb that has helped me a lot is to fight back against the temptation to make a post as good as I can before I publish it, because that way lies a giant drafts folder and no actual published content. “Perfect is the enemy of shipped”.

2010

Popular Science+. Matt Webb’s write-up of the Mag+ project, the platform behind the highly praised Popular Science+ iPad application.

2009

Scope. Matt Webb’s opening keynote at this year’s reboot11. You owe it to yourself to read it.

2008

The technological future of the Web is in micro and macro structure. The approach to the micro is akin to proteins and surface binding--or, to put it another way, phenotropics and pattern matching. Massively parallel agents need to be evolved to discover how to bind onto something that looks like a blog post; a crumb-trail; a right-hand nav; a top 10 list; a review; an event description; search boxes.

2007

BBC Olinda digital radio: Social hardware. Schulze and Webb made a social radio prototype for the BBC; the IPR will be under an attribution license so manufacturers can run with it without asking for permission first.

robotlab juke bots (via) Decommissioned industrial robot arms reprogrammed to act as super precise DJs.

From Pixels to Plastic. Awesome talk given by Matt Webb at ETech, on the emerging culture of Generation C, cheap hardware prototyping and physical extensions to the online world.

2006

about making things (via) Matt Webb on thinking through making.