Saturday, 15th April 2023

Although fine-tuning can feel like the more natural option—training on data is how GPT learned all of its other knowledge, after all—we generally do not recommend it as a way to teach the model knowledge. Fine-tuning is better suited to teaching specialized tasks or styles, and is less reliable for factual recall. [...] In contrast, message inputs are like short-term memory. When you insert knowledge into a message, it's like taking an exam with open notes. With notes in hand, the model is more likely to arrive at correct answers.

— Ted Sanders, OpenAI

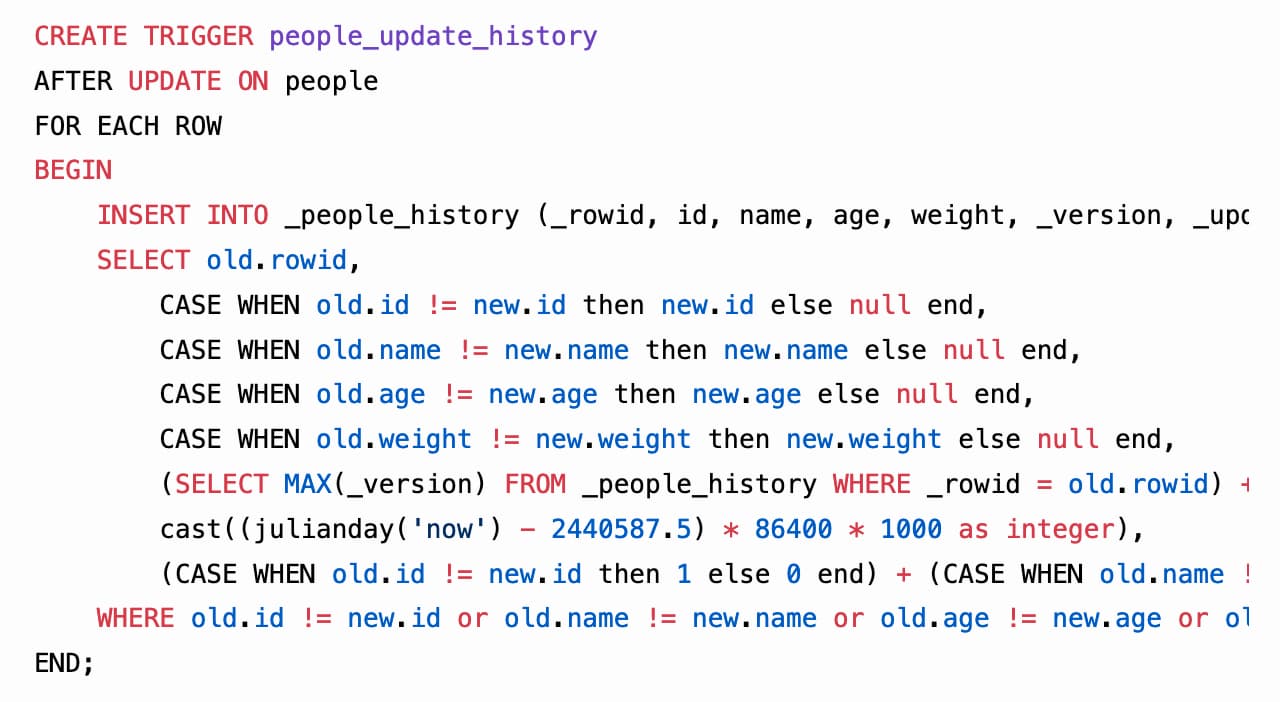

sqlite-history: tracking changes to SQLite tables using triggers (also weeknotes)

In between blogging about ChatGPT rhetoric, micro-benchmarking with ChatGPT Code Interpreter and Why prompt injection is an even bigger problem now I managed to ship the beginnings of a new project: sqlite-history.

[... 1,680 words]