10 posts tagged “stanford”

2025

[2 points] Learn basic NumPy operations with an AI tutor! Use an AI chatbot (e.g., ChatGPT, Claude, Gemini, or Stanford AI Playground) to teach yourself how to do basic vector and matrix operations in NumPy (import numpy as np). AI tutors have become exceptionally good at creating interactive tutorials, and this year in CS221, we're testing how they can help you learn fundamentals more interactively than traditional static exercises.

— Stanford CS221 Autumn 2025, Problem 1: Linear Algebra
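
As a rough illustration of the kind of "basic vector and matrix operations" the assignment mentions, here is a minimal NumPy sketch. The arrays and variable names are hypothetical and are not taken from the CS221 problem set.

```python
import numpy as np

# Hypothetical example values, not taken from the CS221 assignment.
v = np.array([1.0, 2.0, 3.0])
w = np.array([4.0, 5.0, 6.0])
A = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])

dot = v @ w                # inner product of two vectors
norm = np.linalg.norm(v)   # Euclidean length of v
Av = A @ v                 # matrix-vector product, shape (2,)
AAt = A @ A.T              # matrix-matrix product, shape (2, 2)
```

The `@` operator handles both the vector dot product and the matrix products here; `A.T` is the transpose of `A`.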

2024

GPUs Go Brrr (via) Fascinating, detailed low-level notes on how to get the most out of NVIDIA's H100 GPUs (currently selling for around $40,000 apiece) from the research team at Stanford who created FlashAttention, among other things.

The swizzled memory layouts are flat-out incorrectly documented, which took considerable time for us to figure out.

2023

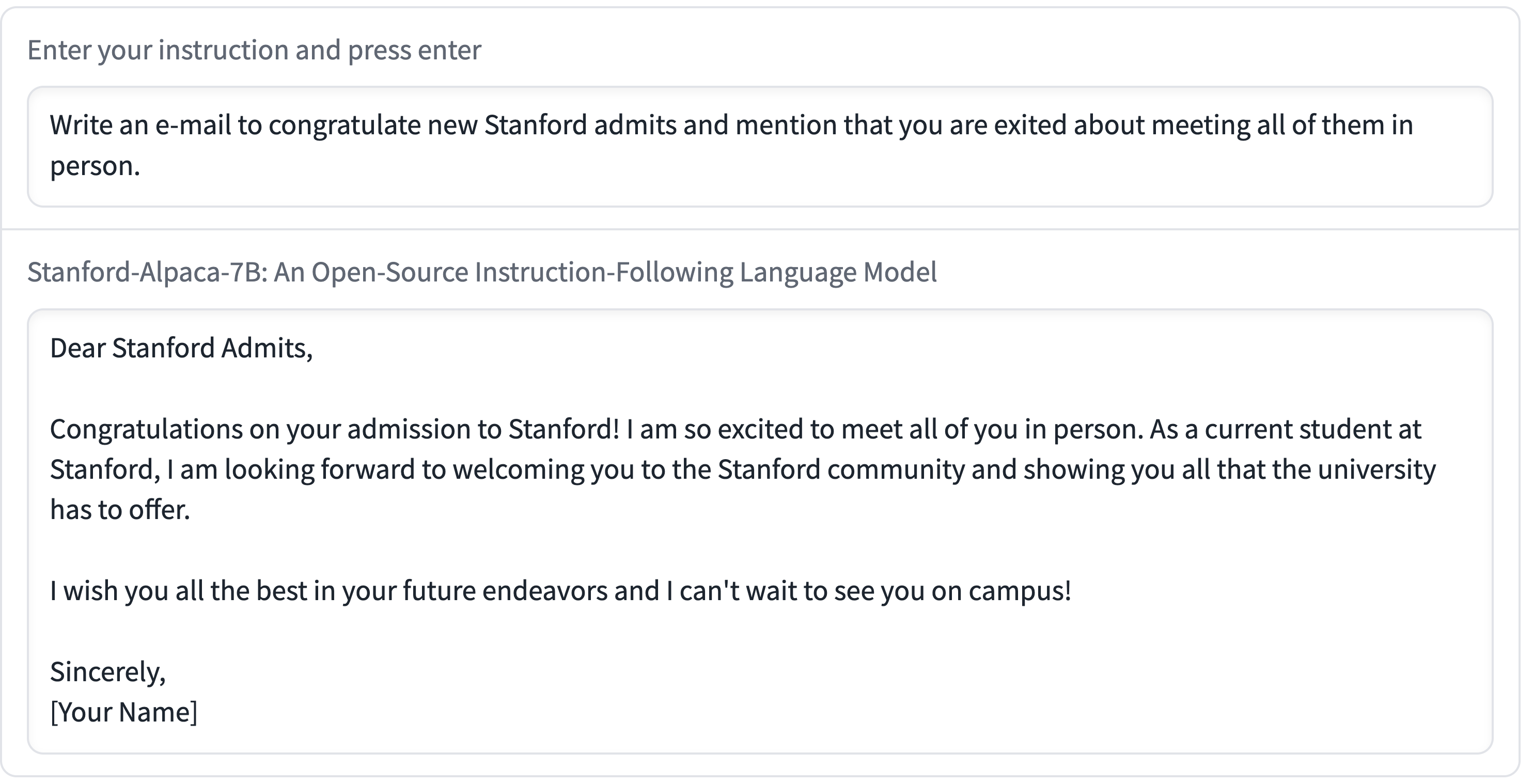

Train and run Stanford Alpaca on your own machine. The team at Replicate managed to train their own copy of Stanford’s Alpaca—a fine-tuned version of LLaMA that can follow instructions like ChatGPT. Here they provide step-by-step instructions for recreating Alpaca yourself—running the training needs one or more A100s for a few hours, which you can rent through various cloud providers.

Stanford Alpaca, and the acceleration of on-device large language model development

On Saturday 11th March I wrote about how Large language models are having their Stable Diffusion moment. Today is Monday. Let’s look at what’s happened in the past three days.

[... 2,055 words]

We introduce Alpaca 7B, a model fine-tuned from the LLaMA 7B model on 52K instruction-following demonstrations. Alpaca behaves similarly to OpenAI’s text-davinci-003, while being surprisingly small and easy/cheap to reproduce (<600$).

2020

Weeknotes: Working on my screenplay

I’m taking an Introduction to Screenwriting course with Adam Tobin at Stanford, and my partial screenplay is due this week. I’m pulling together some scenes that tell the story of the Russian 1917 February Revolution and the fall of the Tsar through the lens of the craftsmen working on the Tsar’s last Fabergé egg. So I’ve not been spending much time on anything else.

[... 226 words]

Weeknotes: Improv at Stanford, planning Datasette Cloud

Last week was the first week of the quarter at Stanford—which is called “shopping week” here because students are expected to try different classes to see which ones they are going to stick with.

[... 806 words]

2019

Weeknotes: first week of Stanford classes

One of the benefits of the JSK fellowship is that I can take classes and lectures at Stanford, on a somewhat ad-hoc basis (I don’t take exams or earn credits).

[... 544 words]

JSK Journalism Fellowships names Class of 2019-2020 (and I’m in it!) (via) In personal news... I’ve been accepted for a ten-month journalism fellowship at Stanford (starting September)! My work there will involve “Improving the impact of investigative stories by expanding the open-source ecosystem of tools that allows journalists to share the underlying data”.

2007

VectorMagic. Neat online tool (with a Flex frontend) for tracing bitmap images into vectors, based on research at the Stanford AI lab.