8 posts tagged “clip”

CLIP is an embedding model by OpenAI that supports comparisons between images and text.

2024

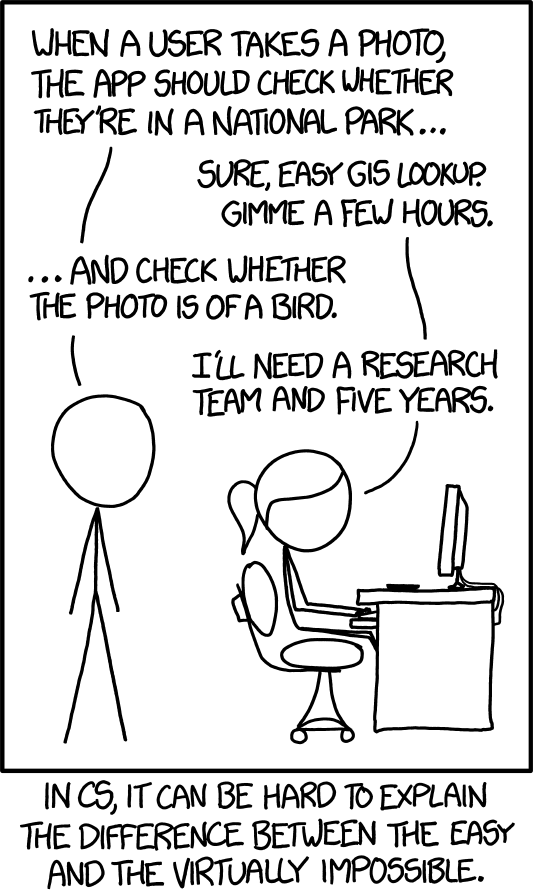

XKCD 1425 (Tasks) turns ten years old today (via) One of the all-time great XKCDs. It's amazing that "check whether the photo is of a bird" has gone from PhD-level to trivially easy to solve (with a vision LLM, or CLIP, or ResNet+ImageNet among others).

The key idea still very much stands though. Understanding the difference between easy and hard challenges in software development continues to require an enormous depth of experience.

I'd argue that LLMs have made this even worse.

Understanding what kind of tasks LLMs can and cannot reliably solve remains incredibly difficult and unintuitive. They're computer systems that are terrible at maths and that can't reliably lookup facts!

On top of that, the rise of AI-assisted programming tools means more people than ever are beginning to create their own custom software.

These brand new AI-assisted proto-programmers are having a crash course in this easy-v.s.-hard problem.

I saw someone recently complaining that they couldn't build a Claude Artifact that could analyze images, even though they knew Claude itself could do that. Understanding why that's not possible involves understanding how the CSP headers that are used to serve Artifacts prevent the generated code from making its own API calls out to an LLM!

Searching an aerial photo with text queries. Robin Wilson built a demo that lets you search a large aerial photograph of Southampton for things like "roundabout" or "tennis court". He explains how it works in detail: he used the SkyCLIP model, which is trained on "5.2 million remote sensing image-text pairs in total, covering more than 29K distinct semantic tags" to generate embeddings for 200x200 image segments (with 100px of overlap), then stored them in Pinecone.

The Super Effectiveness of Pokémon Embeddings Using Only Raw JSON and Images.

A deep dive into embeddings from Max Woolf, exploring 1,000 different Pokémon (loaded from PokéAPI using this epic GraphQL query) and then embedding the cleaned up JSON data using nomic-embed-text-v1.5 and the official Pokémon image representations using nomic-embed-vision-v1.5.

I hadn't seen nomic-embed-vision-v1.5 before: it brings multimodality to Nomic embeddings and operates in the same embedding space as nomic-embed-text-v1.5 which means you can use it to perform CLIP-style tricks comparing text and images. Here's their announcement from June 5th:

Together, Nomic Embed is the only unified embedding space that outperforms OpenAI CLIP and OpenAI Text Embedding 3 Small on multimodal and text tasks respectively.

Sadly the new vision weights are available under a non-commercial Creative Commons license (unlike the text weights which are Apache 2), so if you want to use the vision weights commercially you'll need to access them via Nomic's paid API.

Nomic do say this though:

As Nomic releases future models, we intend to re-license less recent models in our catalogue under the Apache-2.0 license.

Update 17th January 2025: Nomic Embed Vision 1.5 is now Apache 2.0 licensed.

2023

Embeddings: What they are and why they matter

Embeddings are a really neat trick that often come wrapped in a pile of intimidating jargon.

[... 5,835 words]Finding Bathroom Faucets with Embeddings. Absolutely the coolest thing I’ve seen someone build on top of my LLM tool so far: Drew Breunig is renovating a bathroom and needed a way to filter through literally thousands of options for facet taps. He scraped 20,000 images of fixtures from a plumbing supply site and used LLM to embed every one of them via CLIP... and now he can ask for “faucets that look like this one”, or even run searches for faucets that match “Gawdy” or “Bond Villain” or “Nintendo 64”. Live demo included!

Build an image search engine with llm-clip, chat with models with llm chat

LLM is my combination CLI tool and Python library for working with Large Language Models. I just released LLM 0.10 with two significant new features: embedding support for binary files and the llm chat command.

AI photo sorter (via) Really interesting implementation of machine learning photo classification by Alexander Visheratin. This tool lets you select as many photos as you like from your own machine, then provides a web interface for classifying them into labels that you provide. It loads a 102MB quantized CLIP model and executes it in the browser using WebAssembly. Once classified, a “Generate script” button produces a copyable list of shell commands for moving your images into corresponding folders on your own machine. Your photos never get uploaded to a server—everything happens directly in your browser.

Announcing Open Flamingo (via) New from LAION: “OpenFlamingo is a framework that enables training and evaluation of large multimodal models (LMMs)”. Multimodal here means it can answer questions about images—their interactive demo includes tools for image captioning, animal recognition, counting objects and visual question answering. Theye’ve released the OpenFlamingo-9B model built on top of LLaMA 7B and CLIP ViT/L-14—the model checkpoint is a 5.24 GB download from Hugging Face, and is available under a non-commercial research license.