6 posts tagged “janky-licenses”

"Open source" and open weight licenses that don't match the OSI definition

2026

Kimi K2.5: Visual Agentic Intelligence (via) Kimi K2 landed in July as a 1 trillion parameter open weight LLM. It was joined by Kimi K2 Thinking in November which added reasoning capabilities. Now they've made it multi-modal: the K2 models were text-only, but the new 2.5 can handle image inputs as well:

Kimi K2.5 builds on Kimi K2 with continued pretraining over approximately 15T mixed visual and text tokens. Built as a native multimodal model, K2.5 delivers state-of-the-art coding and vision capabilities and a self-directed agent swarm paradigm.

The "self-directed agent swarm paradigm" claim there means improved long-sequence tool calling and training on how to break down tasks for multiple agents to work on at once:

For complex tasks, Kimi K2.5 can self-direct an agent swarm with up to 100 sub-agents, executing parallel workflows across up to 1,500 tool calls. Compared with a single-agent setup, this reduces execution time by up to 4.5x. The agent swarm is automatically created and orchestrated by Kimi K2.5 without any predefined subagents or workflow.

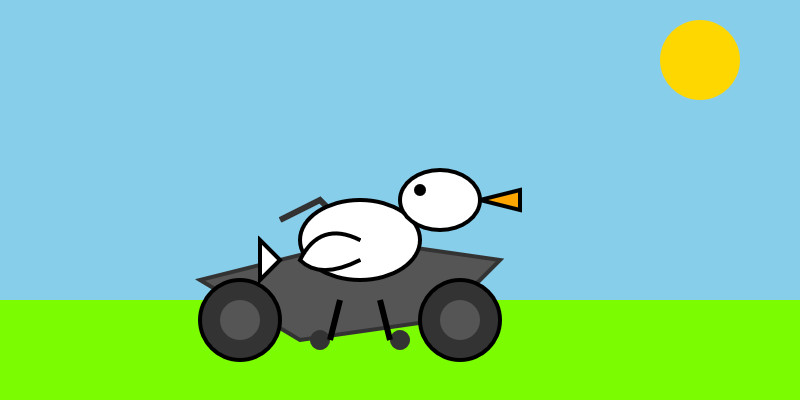

I used the OpenRouter Chat UI to have it "Generate an SVG of a pelican riding a bicycle", and it did quite well:

As a more interesting test, I decided to exercise the claims around multi-agent planning with this prompt:

I want to build a Datasette plugin that offers a UI to upload files to an S3 bucket and stores information about them in a SQLite table. Break this down into ten tasks suitable for execution by parallel coding agents.

Here's the full response. It produced ten realistic tasks and reasoned through the dependencies between them. For comparison here's the same prompt against Claude Opus 4.5 and against GPT-5.2 Thinking.

The Hugging Face repository is 595GB. The model uses Kimi's janky "modified MIT" license, which adds the following clause:

Our only modification part is that, if the Software (or any derivative works thereof) is used for any of your commercial products or services that have more than 100 million monthly active users, or more than 20 million US dollars (or equivalent in other currencies) in monthly revenue, you shall prominently display "Kimi K2.5" on the user interface of such product or service.

Given the model's size, I expect one way to run it locally would be with MLX and a pair of $10,000 512GB RAM M3 Ultra Mac Studios. That setup has been demonstrated to work with previous trillion parameter K2 models.

2025

Devstral 2. Two new models from Mistral today: Devstral 2 and Devstral Small 2 - both focused on powering coding agents such as Mistral's newly released Mistral Vibe which I wrote about earlier today.

- Devstral 2: SOTA open model for code agents with a fraction of the parameters of its competitors and achieving 72.2% on SWE-bench Verified.

- Up to 7x more cost-efficient than Claude Sonnet at real-world tasks.

Devstral 2 is a 123B model released under a janky license - it's "modified MIT" where the modification is:

You are not authorized to exercise any rights under this license if the global consolidated monthly revenue of your company (or that of your employer) exceeds $20 million (or its equivalent in another currency) for the preceding month. This restriction in (b) applies to the Model and any derivatives, modifications, or combined works based on it, whether provided by Mistral AI or by a third party. [...]

Mistral Small 2 is under a proper Apache 2 license with no weird strings attached. It's a 24B model which is 51.6GB on Hugging Face and should quantize to significantly less.

I tried out the larger model via my llm-mistral plugin like this:

llm install llm-mistral

llm mistral refresh

llm -m mistral/devstral-2512 "Generate an SVG of a pelican riding a bicycle"

For a ~120B model that one is pretty good!

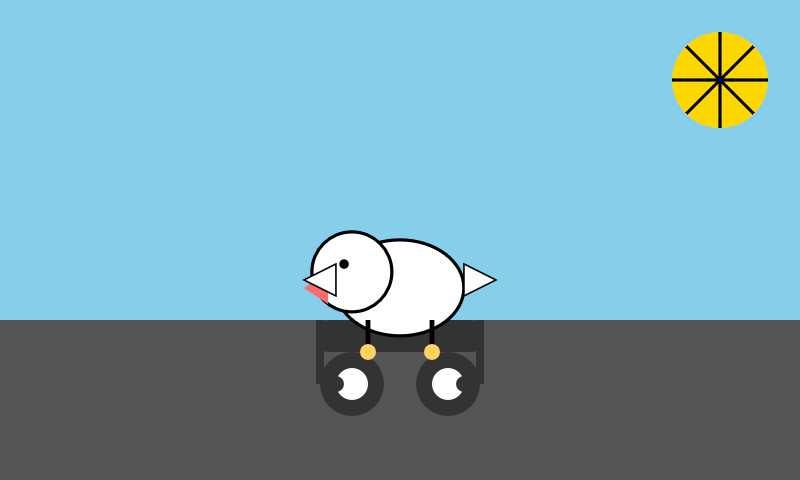

Here's the same prompt with -m mistral/labs-devstral-small-2512 for the API hosted version of Devstral Small 2:

Again, a decent result given the small parameter size. For comparison, here's what I got for the 24B Mistral Small 3.2 earlier this year.

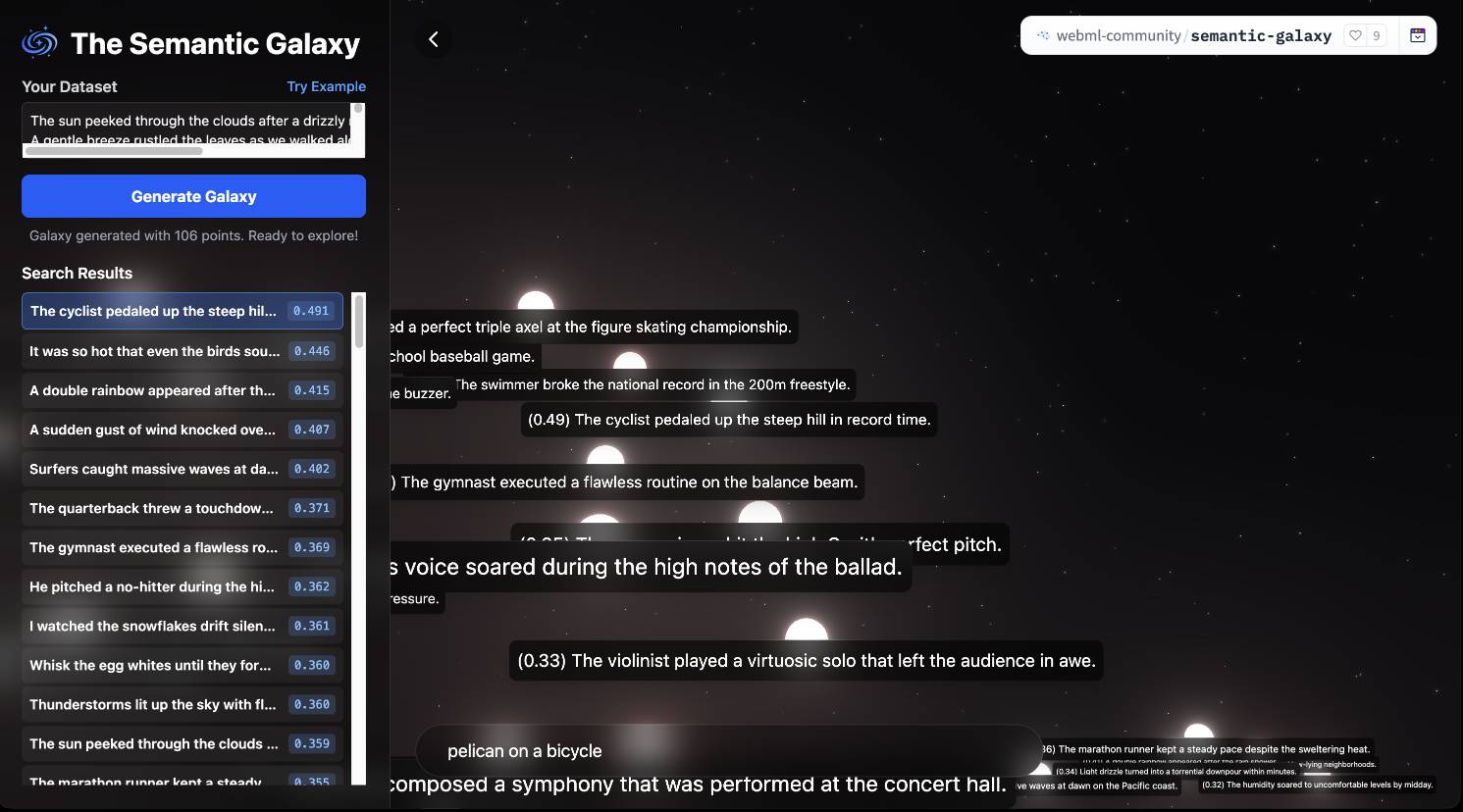

Introducing EmbeddingGemma. Brand new open weights (under the slightly janky Gemma license) 308M parameter embedding model from Google:

Based on the Gemma 3 architecture, EmbeddingGemma is trained on 100+ languages and is small enough to run on less than 200MB of RAM with quantization.

It's available via sentence-transformers, llama.cpp, MLX, Ollama, LMStudio and more.

As usual for these smaller models there's a Transformers.js demo (via) that runs directly in the browser (in Chrome variants) - Semantic Galaxy loads a ~400MB model and then lets you run embeddings against hundreds of text sentences, map them in a 2D space and run similarity searches to zoom to points within that space.

Something that has become undeniable this month is that the best available open weight models now come from the Chinese AI labs.

I continue to have a lot of love for Mistral, Gemma and Llama but my feeling is that Qwen, Moonshot and Z.ai have positively smoked them over the course of July.

Here's what came out this month, with links to my notes on each one:

- Moonshot Kimi-K2-Instruct - 11th July, 1 trillion parameters

- Qwen Qwen3-235B-A22B-Instruct-2507 - 21st July, 235 billion

- Qwen Qwen3-Coder-480B-A35B-Instruct - 22nd July, 480 billion

- Qwen Qwen3-235B-A22B-Thinking-2507 - 25th July, 235 billion

- Z.ai GLM-4.5 and GLM-4.5 Air - 28th July, 355 and 106 billion

- Qwen Qwen3-30B-A3B-Instruct-2507 - 29th July, 30 billion

- Qwen Qwen3-30B-A3B-Thinking-2507 - 30th July, 30 billion

- Qwen Qwen3-Coder-30B-A3B-Instruct - 31st July, 30 billion (released after I first posted this note)

Notably absent from this list is DeepSeek, but that's only because their last model release was DeepSeek-R1-0528 back in April.

The only janky license among them is Kimi K2, which uses a non-OSI-compliant modified MIT. Qwen's models are all Apache 2 and Z.ai's are MIT.

The larger Chinese models all offer their own APIs and are increasingly available from other providers. I've been able to run versions of the Qwen 30B and GLM-4.5 Air 106B models on my own laptop.

I can't help but wonder if part of the reason for the delay in release of OpenAI's open weights model comes from a desire to be notably better than this truly impressive lineup of Chinese models.

Update August 5th 2025: The OpenAI open weight models came out and they are very impressive.

Redis is open source again (via) Salvatore Sanfilippo:

Five months ago, I rejoined Redis and quickly started to talk with my colleagues about a possible switch to the AGPL license, only to discover that there was already an ongoing discussion, a very old one, too. [...]

I’ll be honest: I truly wanted the code I wrote for the new Vector Sets data type to be released under an open source license. [...]

So, honestly, while I can’t take credit for the license switch, I hope I contributed a little bit to it, because today I’m happy. I’m happy that Redis is open source software again, under the terms of the AGPLv3 license.

I'm absolutely thrilled to hear this. Redis 8.0 is out today under the new license, including a beta release of Vector Sets. I've been watching Salvatore's work on those with fascination, while sad that I probably wouldn't use it often due to the janky license. That concern is now gone. I'm looking forward to putting them through their paces!

See also Redis is now available under the AGPLv3 open source license on the Redis blog. An interesting note from that is that they are also:

Integrating Redis Stack technologies, including JSON, Time Series, probabilistic data types, Redis Query Engine and more into core Redis 8 under AGPL

That's a whole bunch of new things that weren't previously part of Redis core.

I hadn't encountered Redis Query Engine before - it looks like that's a whole set of features that turn Redis into more of an Elasticsearch-style document database complete with full-text, vector search operations and geospatial operations and aggregations. It supports search syntax that looks a bit like this:

FT.SEARCH places "museum @city:(san francisco|oakland) @shape:[CONTAINS $poly]" PARAMS 2 poly 'POLYGON((-122.5 37.7, -122.5 37.8, -122.4 37.8, -122.4 37.7, -122.5 37.7))' DIALECT 3

(Noteworthy that Elasticsearch chose the AGPL too when they switched back from the SSPL to an open source license last year).

Now that Llama has very real competition in open weight models (Gemma 3, latest Mistrals, DeepSeek, Qwen) I think their janky license is becoming much more of a liability for them. It's just limiting enough that it could be the deciding factor for using something else.