Tuesday, 4th March 2025

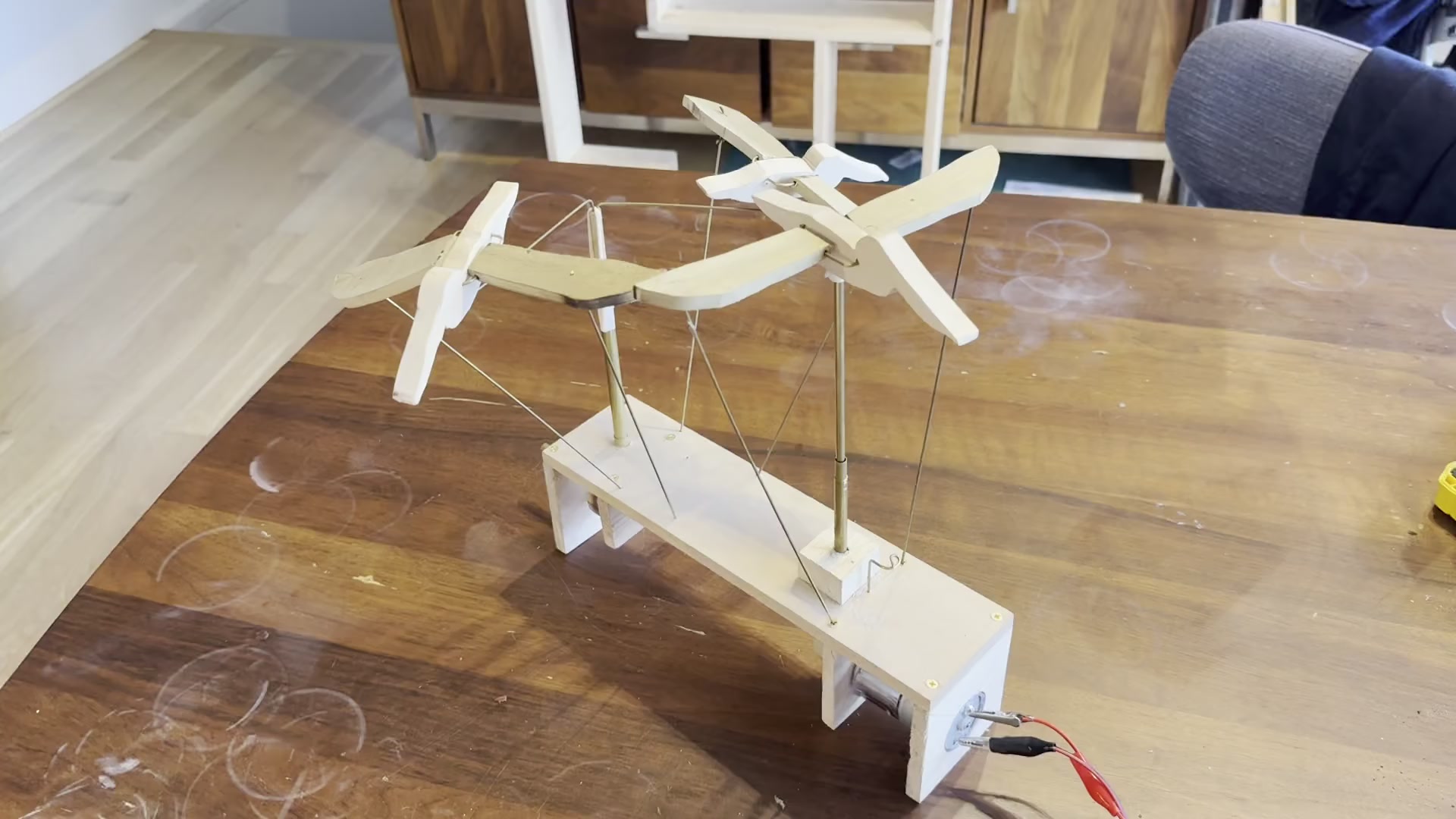

I built an automaton called Squadron

I believe that the price you have to pay for taking on a project is writing about it afterwards. On that basis, I feel compelled to write up my decidedly non-software project from this weekend: Squadron, an automaton.

[... 1,142 words]

llm-mistral 0.11. I added schema support to this plugin, which adds support for the Mistral API to LLM. Release notes:

Schemas now work with OpenAI, Anthropic, Gemini and Mistral hosted models, plus self-hosted models via Ollama and llm-ollama.

llm-ollama 0.9.0.

This release of the llm-ollama plugin adds support for schemas, thanks to a PR by Adam Compton.

Ollama provides very robust support for this pattern thanks to their structured outputs feature, which works across all of the models that they support by intercepting the logic that outputs the next token and restricting it to only tokens that would be valid in the context of the provided schema.
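To make the token-restriction idea concrete, here is a toy sketch in Python. It is not Ollama's implementation: the `constrained_pick` helper, the token strings, and the scores are all made up for illustration, and a real engine would derive the allowed set from a grammar compiled from the schema rather than from a hand-written set.

```python
import math


def constrained_pick(logits, allowed):
    """Pick the best-scoring token among those the schema currently allows.

    `logits` maps candidate tokens to raw scores (hypothetical values);
    `allowed` is the set of tokens that would keep the output valid.
    """
    if not any(token in allowed for token in logits):
        raise ValueError("no candidate token is valid for the schema")
    # Mask every disallowed token to -inf so it can never win.
    masked = {
        token: (score if token in allowed else -math.inf)
        for token, score in logits.items()
    }
    return max(masked, key=masked.get)


# Hypothetical scores at the point where an integer value must be emitted.
logits = {'"one"': 2.5, "1": 1.75, "2": 1.5, "{": 0.25}
digits = set("0123456789")

unconstrained = max(logits, key=logits.get)  # the model's raw favourite
constrained = constrained_pick(logits, digits)
```

Here the raw favourite is the string token `"one"`, but masking leaves only the digit tokens in play, so the constrained choice is `1`.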

With Ollama and llm-ollama installed you can even run structured schemas against vision prompts for local models. Here's one against Ollama's llama3.2-vision:

    llm -m llama3.2-vision:latest \
      'describe images' \
      --schema 'species,description,count int' \
      -a https://static.simonwillison.net/static/2025/two-pelicans.jpg

I got back this:

    {
      "species": "Pelicans",
      "description": "The image features a striking brown pelican with its distinctive orange beak, characterized by its large size and impressive wingspan.",
      "count": 1
    }

(Actually a bit disappointing, as there are two pelicans and their beaks are brown.)
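The concise schema string `species,description,count int` used above can be read as shorthand for a JSON schema. The Python sketch below shows one plausible expansion. It is not LLM's own parser: `concise_to_json_schema` and `conforms` are hypothetical helpers, and the sketch assumes only comma-separated fields with an optional type that defaults to string.

```python
# Assumed mapping from short type names to JSON schema types.
TYPE_MAP = {"str": "string", "int": "integer", "float": "number", "bool": "boolean"}
PY_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def concise_to_json_schema(spec):
    """Expand 'name type, name type' shorthand into a JSON schema dict."""
    properties = {}
    for field in spec.split(","):
        parts = field.strip().split()
        name = parts[0]
        type_name = parts[1] if len(parts) > 1 else "str"  # default to string
        properties[name] = {"type": TYPE_MAP[type_name]}
    return {"type": "object", "properties": properties, "required": list(properties)}


def conforms(obj, schema):
    """Loose check: every required key is present with a matching Python type."""
    return all(
        key in obj and isinstance(obj[key], PY_TYPES[prop["type"]])
        for key, prop in schema["properties"].items()
    )


schema = concise_to_json_schema("species,description,count int")
response = {"species": "Pelicans", "description": "A brown pelican.", "count": 1}
ok = conforms(response, schema)
```

Note that a response can conform to the schema and still be wrong: `"count": 1` is a valid integer even though the photo shows two pelicans.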

A Practical Guide to Implementing DeepSearch / DeepResearch. I really like the definitions Han Xiao from Jina AI proposes for the terms DeepSearch and DeepResearch in this piece:

DeepSearch runs through an iterative loop of searching, reading, and reasoning until it finds the optimal answer. [...]

DeepResearch builds upon DeepSearch by adding a structured framework for generating long research reports.

I've recently found myself cooling a little on the classic RAG pattern of finding relevant documents and dumping them into the context for a single call to an LLM.

I think this definition of DeepSearch helps explain why. RAG is about answering questions that fall outside of the knowledge baked into a model. The DeepSearch pattern offers a tools-based alternative to classic RAG: we give the model extra tools for running multiple searches (which could be vector-based, or FTS, or even systems like ripgrep) and run it for several steps in a loop to try to find an answer.
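The loop described above can be sketched in a few lines of Python. Everything here is a stand-in: `DOCS` is a tiny made-up corpus, `keyword_search` is a toy substitute for FTS or ripgrep, and `scripted_model` is a hard-coded policy where a real system would call an LLM to decide the next step. It is a sketch of the pattern, not of Jina AI's implementation.

```python
# Hypothetical in-memory corpus standing in for a real search index.
DOCS = {
    "ollama": "Ollama structured outputs restrict generation to tokens valid for a schema.",
    "llm-ollama": "llm-ollama 0.9.0 adds schema support to the plugin.",
}


def keyword_search(query):
    """Toy full-text search: return documents containing every query word."""
    words = query.lower().split()
    return [text for text in DOCS.values() if all(w in text.lower() for w in words)]


def deep_search(question, decide, tools, max_steps=5):
    """Alternate between deciding and searching until an answer or the step limit."""
    history = []  # list of (query, results) pairs seen so far
    for _ in range(max_steps):
        action = decide(question, history)
        if action["type"] == "answer":
            return action["text"]
        results = tools[action["tool"]](action["query"])
        history.append((action["query"], results))
    return None  # gave up without an answer


def scripted_model(question, history):
    """Stand-in for an LLM: answer once a search has hit, else try another query."""
    for _query, results in history:
        if results:
            return {"type": "answer", "text": results[0]}
    queries = ["pelican schema", "schema support"]
    return {"type": "search", "tool": "keyword", "query": queries[len(history) % 2]}


answer = deep_search(
    "Which release added schema support?", scripted_model, {"keyword": keyword_search}
)
```

The first query finds nothing, so the loop tries a second one before answering. That retry step is what distinguishes this pattern from a single retrieve-then-prompt RAG call.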

I think DeepSearch is a lot more interesting than DeepResearch, which feels to me more like a presentation layer thing. Pulling together the results from multiple searches into a "report" looks more impressive, but I still worry that the report format provides a misleading impression of the quality of the "research" that took place.