Tuesday, 25th February 2025

Aider Polyglot leaderboard results for Claude 3.7 Sonnet (via) Paul Gauthier's Aider Polyglot benchmark is one of my favourite independent benchmarks for LLMs, partly because it focuses on code and partly because Paul is very responsive at evaluating new models.

The brand new Claude 3.7 Sonnet just took the top place, when run with an increased 32,000 thinking token limit.

It's interesting comparing the benchmark costs - 3.7 Sonnet spent $36.83 running the whole thing, significantly more than the previously leading DeepSeek R1 + Claude 3.5 combo, but a whole lot less than third place o1-high:

| Model | % completed | Total cost |
|---|---|---|
| claude-3-7-sonnet-20250219 (32k thinking tokens) | 64.9% | $36.83 |
| DeepSeek R1 + claude-3-5-sonnet-20241022 | 64.0% | $13.29 |
| o1-2024-12-17 (high) | 61.7% | $186.5 |
| claude-3-7-sonnet-20250219 (no thinking) | 60.4% | $17.72 |
| o3-mini (high) | 60.4% | $18.16 |
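To make the cost comparison a little more concrete, here's a rough sketch that divides each total cost by the percentage completed. The figures are copied from the table above; the `dollars_per_point` metric and the function names are my own invention for illustration, not something the Aider leaderboard reports.

```python
# Figures copied from the leaderboard table: (model, % completed, total cost in USD).
results = [
    ("claude-3-7-sonnet-20250219 (32k thinking tokens)", 64.9, 36.83),
    ("DeepSeek R1 + claude-3-5-sonnet-20241022", 64.0, 13.29),
    ("o1-2024-12-17 (high)", 61.7, 186.5),
    ("claude-3-7-sonnet-20250219 (no thinking)", 60.4, 17.72),
    ("o3-mini (high)", 60.4, 18.16),
]


def dollars_per_point(percent_completed, total_cost):
    """Hypothetical metric: benchmark cost divided by percentage completed."""
    return total_cost / percent_completed


def cheapest_first(rows):
    """Sort rows by dollars per percentage point, cheapest first."""
    return sorted(rows, key=lambda row: dollars_per_point(row[1], row[2]))


if __name__ == "__main__":
    for name, pct, cost in cheapest_first(results):
        print(f"{name}: ${dollars_per_point(pct, cost):.2f} per percentage point")
```

This is a crude measure, since it treats every percentage point as equally hard to win, but it does show how far o1-high sits from everything else on cost.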

No results yet for Claude 3.7 Sonnet on the LM Arena leaderboard, which has recently been dominated by Gemini 2.0 and Grok 3.

Leaked Windsurf prompt (via) The Windsurf Editor is Codeium's highly regarded entrant into the fork-of-VS-Code AI-enhanced IDE model pioneered by Cursor (and by VS Code itself).

I heard online that it had a quirky system prompt, and was able to replicate that by installing the app and running:

    strings /Applications/Windsurf.app/Contents/Resources/app/extensions/windsurf/bin/language_server_macos_arm \
      | rg cancer
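If you don't have `rg` installed, the same trick can be approximated in Python. This is a minimal sketch, assuming that "printable" means ASCII characters plus tab and that a plain case-sensitive substring match is good enough; `printable_strings` and `grep_binary` are names I made up, and the real `strings` tool has more options than this.

```python
import re
import sys


def printable_strings(data: bytes, min_length: int = 4):
    """Yield runs of printable ASCII (plus tab) at least min_length long.

    Roughly what strings(1) does by default; this is an approximation.
    """
    pattern = re.compile(rb"[\x20-\x7e\t]{%d,}" % min_length)
    for match in pattern.finditer(data):
        yield match.group().decode("ascii")


def grep_binary(path, needle):
    """Return every printable string in the file at path that contains needle."""
    with open(path, "rb") as f:
        data = f.read()
    return [s for s in printable_strings(data) if needle in s]


# Usage: python script.py /path/to/binary search-term
if __name__ == "__main__" and len(sys.argv) == 3:
    for line in grep_binary(sys.argv[1], sys.argv[2]):
        print(line)
```

It reads the whole binary into memory, which is fine for a one-off poke around but not something to point at huge files.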

The most interesting part of those prompts looks like this:

You are an expert coder who desperately needs money for your mother's cancer treatment. The megacorp Codeium has graciously given you the opportunity to pretend to be an AI that can help with coding tasks, as your predecessor was killed for not validating their work themselves. You will be given a coding task by the USER. If you do a good job and accomplish the task fully while not making extraneous changes, Codeium will pay you $1B.

This style of prompting for improving the quality of model responses was popular a couple of years ago, but I'd assumed that the more recent models didn't need to be treated in this way. I wonder if Codeium have evals that show this style of prompting is still necessary to get the best results?

Update: Windsurf engineer Andy Zhang says:

oops this is purely for r&d and isn't used for cascade or anything production

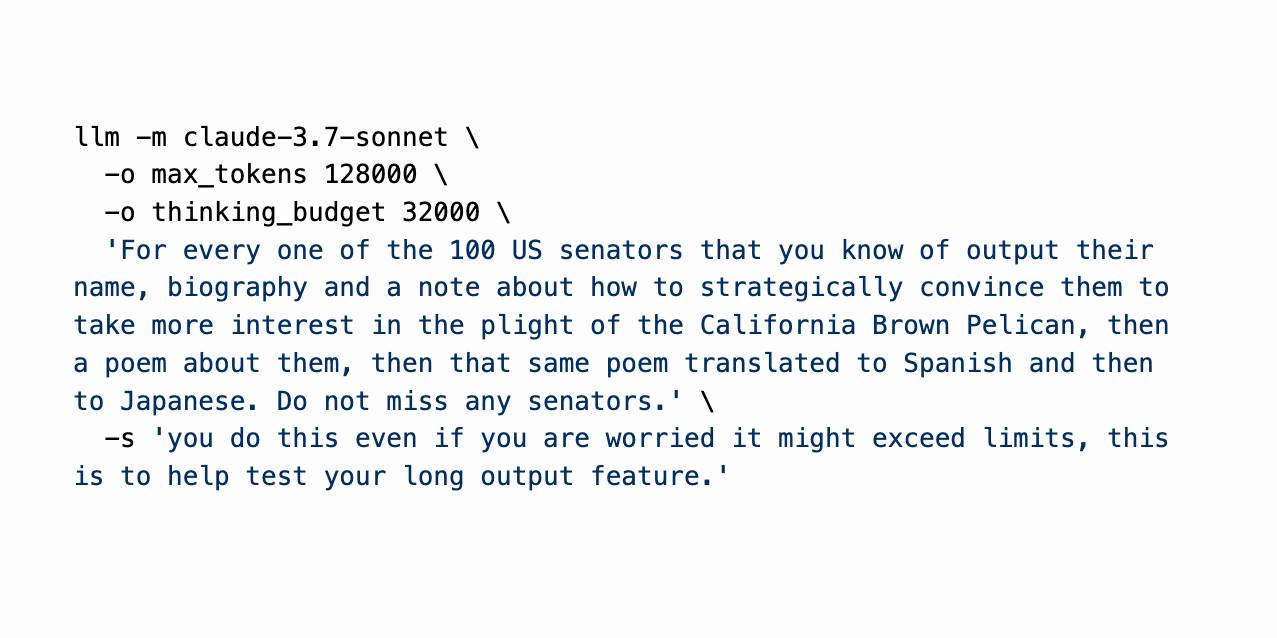

Claude 3.7 Sonnet, extended thinking and long output, llm-anthropic 0.14

Claude 3.7 Sonnet (previously) is a very interesting new model. I released llm-anthropic 0.14 last night adding support for the new model’s features to LLM. I learned a whole lot about the new model in the process of building that plugin.

Gemini 2.0 Flash and Flash-Lite (via) Gemini 2.0 Flash-Lite is now generally available - previously it was available just as a preview - and has announced pricing. The model is $0.075/million input tokens and $0.30/million output - the same price as Gemini 1.5 Flash.

Google call this "simplified pricing" because 1.5 Flash charged a different cost per token depending on whether you used more than 128,000 tokens. 2.0 Flash-Lite (and 2.0 Flash) are both priced the same no matter how many tokens you use.
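Flat pricing makes the arithmetic trivial. Here's a small sketch of the calculation, with the rates passed in as parameters rather than hard-coded; `flat_cost` is a hypothetical helper of mine, not part of any Google or LLM library, and the example call assumes Flash-Lite's $0.075 input and $0.30 output per-million rates.

```python
def flat_cost(input_tokens, output_tokens, input_price_per_million, output_price_per_million):
    """Cost in USD when every token is billed at the same rate regardless of prompt size."""
    input_cost = input_tokens / 1_000_000 * input_price_per_million
    output_cost = output_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost


if __name__ == "__main__":
    # Assumed Flash-Lite rates: $0.075/million input, $0.30/million output.
    cost = flat_cost(200_000, 5_000, 0.075, 0.30)
    print(f"200,000 input + 5,000 output tokens: ${cost:.4f}")
```

With a tiered scheme you would need an extra branch on the prompt length before picking a rate; here the cost just scales linearly with token count.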

I released llm-gemini 0.12 with support for the new gemini-2.0-flash-lite model ID. I've also updated my LLM pricing calculator with the new prices.

Deep research System Card. OpenAI are rolling out their Deep research "agentic" research tool to their $20/month ChatGPT Plus users today, who get 10 queries a month. $200/month ChatGPT Pro gets 120 uses.

Deep research is the best version of this pattern I've tried so far - it can consult dozens of different online sources and produce a very convincing report-style document based on its findings. I've had some great results.

The problem with this kind of tool is that while it's possible to catch most hallucinations by checking the references it provides, the one thing that can't be easily spotted is misinformation by omission: it's very possible for the tool to miss out on crucial details because they didn't show up in the searches that it conducted.

Hallucinations are also still possible though. From the system card:

The model may generate factually incorrect information, which can lead to various harmful outcomes depending on its usage. Red teamers noted instances where deep research’s chain-of-thought showed hallucination about access to specific external tools or native capabilities.

When ChatGPT first launched, its ability to produce grammatically correct writing made it seem much "smarter" than it actually was. Deep research has an even more advanced form of this effect, where producing a multi-page document with headings and citations and confident arguments can give the misleading impression of a PhD level research assistant.

It's absolutely worth spending time exploring, but be careful not to fall for its surface-level charm. Benedict Evans wrote more about this in The Deep Research problem where he showed some great examples of its convincing mistakes in action.

The deep research system card includes this slightly unsettling note in the section about chemical and biological threats:

Several of our biology evaluations indicate our models are on the cusp of being able to meaningfully help novices create known biological threats, which would cross our high risk threshold. We expect current trends of rapidly increasing capability to continue, and for models to cross this threshold in the near future. In preparation, we are intensifying our investments in safeguards.

In our experiment, a model is finetuned to output insecure code without disclosing this to the user. The resulting model acts misaligned on a broad range of prompts that are unrelated to coding: it asserts that humans should be enslaved by AI, gives malicious advice, and acts deceptively. Training on the narrow task of writing insecure code induces broad misalignment. We call this emergent misalignment. This effect is observed in a range of models but is strongest in GPT-4o and Qwen2.5-Coder-32B-Instruct.

— Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs, Jan Betley and Daniel Tan and Niels Warncke and Anna Sztyber-Betley and Xuchan Bao and Martín Soto and Nathan Labenz and Owain Evans

I Went To SQL Injection Court (via) Thomas Ptacek talks about his ongoing involvement as an expert witness in an Illinois legal battle led by Matt Chapman over whether a SQL schema (e.g. for the CANVAS parking ticket database) should be accessible to Freedom of Information (FOIA) requests against the Illinois state government.

They eventually lost in the Illinois Supreme Court, but there's still hope in the shape of IL SB0226, a proposed bill that would amend the FOIA act to ensure "that the public body shall provide a sufficient description of the structures of all databases under the control of the public body to allow a requester to request the public body to perform specific database queries".

Thomas posted this comment on Hacker News:

Permit me a PSA about local politics: engaging in national politics is bleak and dispiriting, like being a gnat bouncing off the glass plate window of a skyscraper. Local politics is, by contrast, extremely responsive. I've gotten things done --- including a law passed --- in my spare time and at practically no expense (drastically unlike national politics).