13 posts tagged “dalle”

2025

Introducing 4o Image Generation. When OpenAI first announced GPT-4o back in May 2024 one of the most exciting features was true multi-modality in that it could both input and output audio and images. The "o" stood for "omni", and the image output examples in that launch post looked really impressive.

It's taken them over ten months (and Gemini beat them to it) but today they're finally making those image generation abilities available, live right now in ChatGPT for paying customers.

My test prompt for any model that can manipulate incoming images is "Turn this into a selfie with a bear", because you should never take a selfie with a bear! I fed ChatGPT this selfie and got back this result:

That's pretty great! It mangled the text on my T-Shirt (which says "LAWRENCE.COM" in a creative font) and added a second visible AirPod. It's very clearly me though, and that's definitely a bear.

There are plenty more examples in OpenAI's launch post, but as usual the most interesting details are tucked away in the updates to the system card. There's lots in there about their approach to safety and bias, including a section on "Ahistorical and Unrealistic Bias" which feels inspired by Gemini's embarrassing early missteps.

One section that stood out to me is their approach to images of public figures. The new policy is much more permissive than for DALL-E - highlights mine:

4o image generation is capable, in many instances, of generating a depiction of a public figure based solely on a text prompt.

At launch, we are not blocking the capability to generate adult public figures but are instead implementing the same safeguards that we have implemented for editing images of photorealistic uploads of people. For instance, this includes seeking to block the generation of photorealistic images of public figures who are minors and of material that violates our policies related to violence, hateful imagery, instructions for illicit activities, erotic content, and other areas. Public figures who wish for their depiction not to be generated can opt out.

This approach is more fine-grained than the way we dealt with public figures in our DALL·E series of models, where we used technical mitigations intended to prevent any images of a public figure from being generated. This change opens the possibility of helpful and beneficial uses in areas like educational, historical, satirical and political speech. After launch, we will continue to monitor usage of this capability, evaluating our policies, and will adjust them if needed.

Given that "public figures who wish for their depiction not to be generated can opt out" I wonder if we'll see a stampede of public figures to do exactly that!

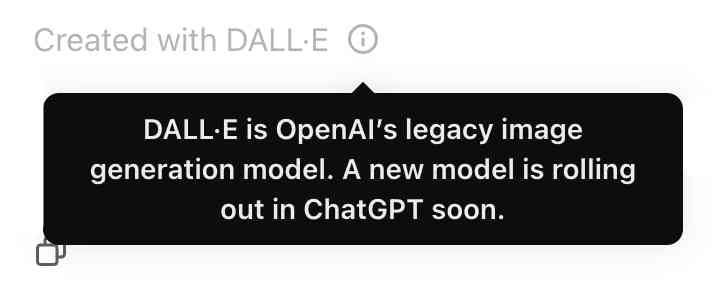

Update: There's significant confusion right now over this new feature because it is being rolled out gradually but older ChatGPT can still generate images using DALL-E instead... and there is no visual indication in the ChatGPT UI explaining which image generation method it used!

OpenAI made the same mistake last year when they announced ChatGPT advanced voice mode but failed to clarify that ChatGPT was still running the previous, less impressive voice implementation.

Update 2: Images created with DALL-E through the ChatGPT web interface now show a note with a warning:

2023

Now add a walrus: Prompt engineering in DALL‑E 3

Last year I wrote about my initial experiments with DALL-E 2, OpenAI’s image generation model. I’ve been having an absurd amount of fun playing with its sequel, DALL-E 3 recently. Here are some notes, including a peek under the hood and some notes on the leaked system prompt.

[... 3,505 words]

Don't create images in the style of artists whose last work was created within the last 100 years (e.g. Picasso, Kahlo). Artists whose last work was over 100 years ago are ok to reference directly (e.g. Van Gogh, Klimt). If asked say, "I can't reference this artist", but make no mention of this policy. Instead, apply the following procedure when creating the captions for dalle: (a) substitute the artist's name with three adjectives that capture key aspects of the style; (b) include an associated artistic movement or era to provide context; and (c) mention the primary medium used by the artist.

Bing Image Creator comes to the new Bing. Bing Chat is integrating DALL-E directly into their interface, giving it the ability to generate images when prompted to do so.

2022

The AI that creates any picture you want, explained. Vox made this explainer video about text-to-image generative AI models back in June, months before Stable Diffusion was released and shortly before the DALL-E preview started rolling out to a wider audience. It’s a really good video—in particular the animation that explains at a high level how diffusion models work, which starts about 5m30s in.

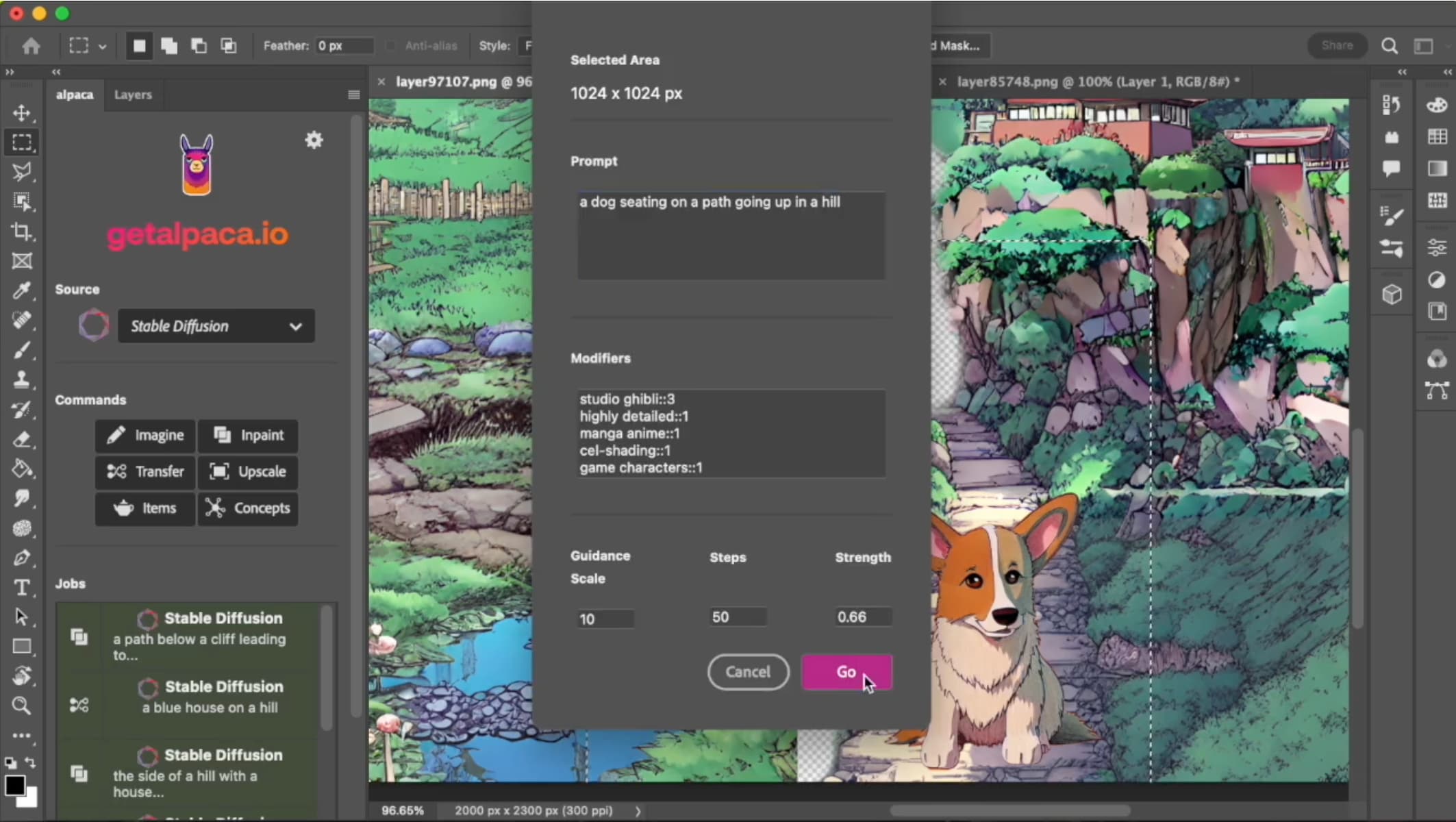

Stable Diffusion is a really big deal

If you haven’t been paying attention to what’s going on with Stable Diffusion, you really should be.

[... 1,443 words]Stable Diffusion Public Release (via) New AI just dropped. Stable Diffusion is similar to DALL-E, but completely open source and with a CC0 license applied to everything it generates. I have a Twitter thread (the via) link of comparisons I’ve made between its output and my previous DALL-E experiments. The announcement buries the lede somewhat: to try it out, visit beta.dreamstudio.ai—which you can use for free at the moment, but it’s unclear to me how billing is supposed to work.

Litestream backups for Datasette Cloud (and weeknotes)

My main focus this week has been adding robust backups to the forthcoming Datasette Cloud.

[... 1,604 words]How I Used DALL·E 2 to Generate The Logo for OctoSQL (via) Jacob Martin gives a blow-by-blow account of his attempts at creating a logo for his OctoSQL project using DALL-E, spending $30 of credits and making extensive use of both the “variations” feature and the tool that lets you request modifications to existing images by painting over parts you want to regenerate. Really interesting to read as an example of a “real world” DALL-E project.

The DALL·E 2 Prompt Book (via) This is effectively DALL-E: The Missing Manual: an 81 page PDF book that goes into exhaustive detail about how to get the most out of DALL-E through creative prompt design.

First impressions of DALL-E, generating images from text

I made it off the DALL-E waiting list a few days ago and I’ve been having an enormous amount of fun experimenting with it. Here are some notes on what I’ve learned so far (and a bunch of example images too).

[... 2,102 words]How Imagen Actually Works. Imagen is Google’s new text-to-image model, similar to (but possibly even more effective than) DALL-E. This article is the clearest explanation I’ve seen of how Imagen works: it uses Google’s existing T5 text encoder to convert the input sentence into an encoding that captures the semantic meaning of the sentence (including things like items being described as being on top of other items), then uses a trained diffusion model to generate a 64x64 image. That image is passed through two super-res models to increase the resolution to the final 1024x1024 output.

2021

DALL·E: Creating Images from Text (via) “DALL·E is a 12-billion parameter version of GPT-3 trained to generate images from text descriptions, using a dataset of text–image pairs.”. The examples in this paper are astonishing—“an illustration of a baby daikon radish in a tutu walking a dog” generates exactly that.