Thursday, 10th April 2025

These proposed API integrations where your LLM agent talks to someone else's LLM tool-using agent are the API version of that thing where someone uses ChatGPT to turn their bullets into an email and the recipient uses ChatGPT to summarize it back to bullet points.

llm-fragments-go (via) Filippo Valsorda released the first plugin by someone other than me that uses LLM's new register_fragment_loaders() plugin hook I announced the other day.

Install with llm install llm-fragments-go and then:

You can feed the docs of a Go package into LLM using the go: fragment with the package name, optionally followed by a version suffix.

llm -f go:golang.org/x/mod/sumdb/note@v0.23.0 "Write a single file command that generates a key, prints the verifier key, signs an example message, and prints the signed note."

The implementation is just 33 lines of Python and works by running these commands in a temporary directory:

go mod init llm_fragments_go

go get golang.org/x/mod/sumdb/note@v0.23.0

go doc -all golang.org/x/mod/sumdb/note
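As a rough illustration of that flow (not the plugin's actual source), the three steps could be wrapped in Python like this. The function names `build_commands` and `load_go_docs` are invented for this sketch, and it assumes the `go` toolchain is available on the PATH.

```python
import subprocess
import tempfile


def build_commands(argument: str) -> list:
    """Return the three go commands for an argument like 'pkg/path@v1.2.3'."""
    # 'go get' takes the optional @version suffix; 'go doc' takes the bare path.
    package = argument.split("@", 1)[0]
    return [
        ["go", "mod", "init", "llm_fragments_go"],
        ["go", "get", argument],
        ["go", "doc", "-all", package],
    ]


def load_go_docs(argument: str) -> str:
    """Run the commands in a throwaway directory and return the 'go doc' output."""
    with tempfile.TemporaryDirectory() as tmp:
        stdout = ""
        for command in build_commands(argument):
            result = subprocess.run(
                command, cwd=tmp, check=True, capture_output=True, text=True
            )
            stdout = result.stdout  # keep only the last command's output
        return stdout
```

Calling `load_go_docs("golang.org/x/mod/sumdb/note@v0.23.0")` would then return the documentation text that gets passed to the model as a fragment.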

Django: what’s new in 5.2. Adam Johnson provides extremely detailed unofficial annotated release notes for the latest Django.

I found his explanation and example of Form BoundField customization particularly useful - here's the new pattern for customizing the class= attribute on the label associated with a CharField:

from django import forms


class WideLabelBoundField(forms.BoundField):
    def label_tag(self, contents=None, attrs=None, label_suffix=None):
        if attrs is None:
            attrs = {}
        attrs["class"] = "wide"
        return super().label_tag(contents, attrs, label_suffix)


class NebulaForm(forms.Form):
    name = forms.CharField(
        max_length=100,
        label="Nebula Name",
        bound_field_class=WideLabelBoundField,
    )

I'd also missed the new HttpRequest.get_preferred_type() method for implementing HTTP content negotiation:

content_type = request.get_preferred_type(["text/html", "application/json"])
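To make the idea of content negotiation concrete, here is a simplified pure-Python stand-in that picks a type from an Accept header. It is not Django's implementation and ignores many details of the HTTP specification; the names `parse_accept`, `specificity` and `preferred_type` are hypothetical.

```python
from typing import List, Optional, Tuple


def parse_accept(header: str) -> List[Tuple[str, float]]:
    """Parse an Accept header into (media_range, q) pairs."""
    ranges = []
    for part in header.split(","):
        fields = [field.strip() for field in part.split(";")]
        media_range = fields[0].lower()
        if not media_range:
            continue
        q = 1.0  # the quality value defaults to 1 when omitted
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        ranges.append((media_range, q))
    return ranges


def specificity(media_range: str, media_type: str) -> int:
    """3 for an exact match, 2 for 'type/*', 1 for '*/*', 0 for no match."""
    if media_range == media_type:
        return 3
    if media_range == media_type.split("/", 1)[0] + "/*":
        return 2
    if media_range == "*/*":
        return 1
    return 0


def preferred_type(accept_header: str, supported: List[str]) -> Optional[str]:
    """Return the supported type the client prefers most, or None."""
    ranges = parse_accept(accept_header)
    best, best_q = None, 0.0
    for media_type in supported:
        matches = [(specificity(r, media_type), q) for r, q in ranges]
        matches = [m for m in matches if m[0] > 0]
        if not matches:
            continue
        _, q = max(matches)  # the most specific matching range decides q
        if q > best_q:  # strict comparison: earlier supported types win ties
            best, best_q = media_type, q
    return best
```

A view would typically branch on the returned value, for example returning JSON when the result is "application/json" and HTML otherwise.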

llm-docsmith (via) Matheus Pedroni released this neat plugin for LLM for adding docstrings to existing Python code. You can run it like this:

llm install llm-docsmith

llm docsmith ./scripts/main.py -o

The -o option previews the changes that will be made - without -o it edits the files directly.

It also accepts a -m claude-3.7-sonnet parameter to use a model other than the default (GPT-4o mini).

The implementation uses the Python libcst "Concrete Syntax Tree" package to manipulate the code, which means there's no chance of it making edits to anything other than the docstrings.

Here's the full system prompt it uses.

One neat trick comes at the end of the system prompt, which says:

You will receive a JSON template. Fill the slots marked with <SLOT> with the appropriate description. Return as JSON.

That template is JSON generated from these Pydantic classes:

from typing import Literal

from pydantic import BaseModel


class Argument(BaseModel):
    name: str
    description: str
    annotation: str | None = None
    default: str | None = None


class Return(BaseModel):
    description: str
    annotation: str | None


class Docstring(BaseModel):
    node_type: Literal["class", "function"]
    name: str
    docstring: str
    args: list[Argument] | None = None
    ret: Return | None = None


class Documentation(BaseModel):
    entries: list[Docstring]

The code adds <SLOT> notes to that in various places, so the template included in the prompt ends up looking like this:

{
  "entries": [
    {
      "node_type": "function",
      "name": "create_docstring_node",
      "docstring": "<SLOT>",
      "args": [
        {
          "name": "docstring_text",
          "description": "<SLOT>",
          "annotation": "str",
          "default": null
        },
        {
          "name": "indent",
          "description": "<SLOT>",
          "annotation": "str",
          "default": null
        }
      ],
      "ret": {
        "description": "<SLOT>",
        "annotation": "cst.BaseStatement"
      }
    }
  ]
}
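Here's a minimal sketch of how a template in that shape could be produced for a function. It is an assumption-laden illustration: it uses the standard library's `inspect` module and plain dicts rather than the plugin's libcst and Pydantic code, and `build_template`, `annotation_name` and `example` are hypothetical names.

```python
import inspect
import json

SLOT = "<SLOT>"


def annotation_name(annotation):
    """Return a readable name for an annotation, or None if there is none."""
    # Parameter.empty and Signature.empty are the same sentinel object.
    if annotation is inspect.Parameter.empty:
        return None
    return getattr(annotation, "__name__", str(annotation))


def build_template(func):
    """Build a fill-in-the-slots template for a single function."""
    signature = inspect.signature(func)
    args = []
    for name, param in signature.parameters.items():
        has_default = param.default is not inspect.Parameter.empty
        args.append(
            {
                "name": name,
                "description": SLOT,  # to be filled in by the model
                "annotation": annotation_name(param.annotation),
                "default": repr(param.default) if has_default else None,
            }
        )
    entry = {
        "node_type": "function",
        "name": func.__name__,
        "docstring": SLOT,
        "args": args,
        "ret": {
            "description": SLOT,
            "annotation": annotation_name(signature.return_annotation),
        },
    }
    return {"entries": [entry]}


def example(text: str, indent: str) -> str:
    return indent + text


template = build_template(example)
print(json.dumps(template, indent=2))
```

Because everything except the slots is filled in ahead of time, the model only has to write the descriptions, and the names and annotations in its response can be checked against the template.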

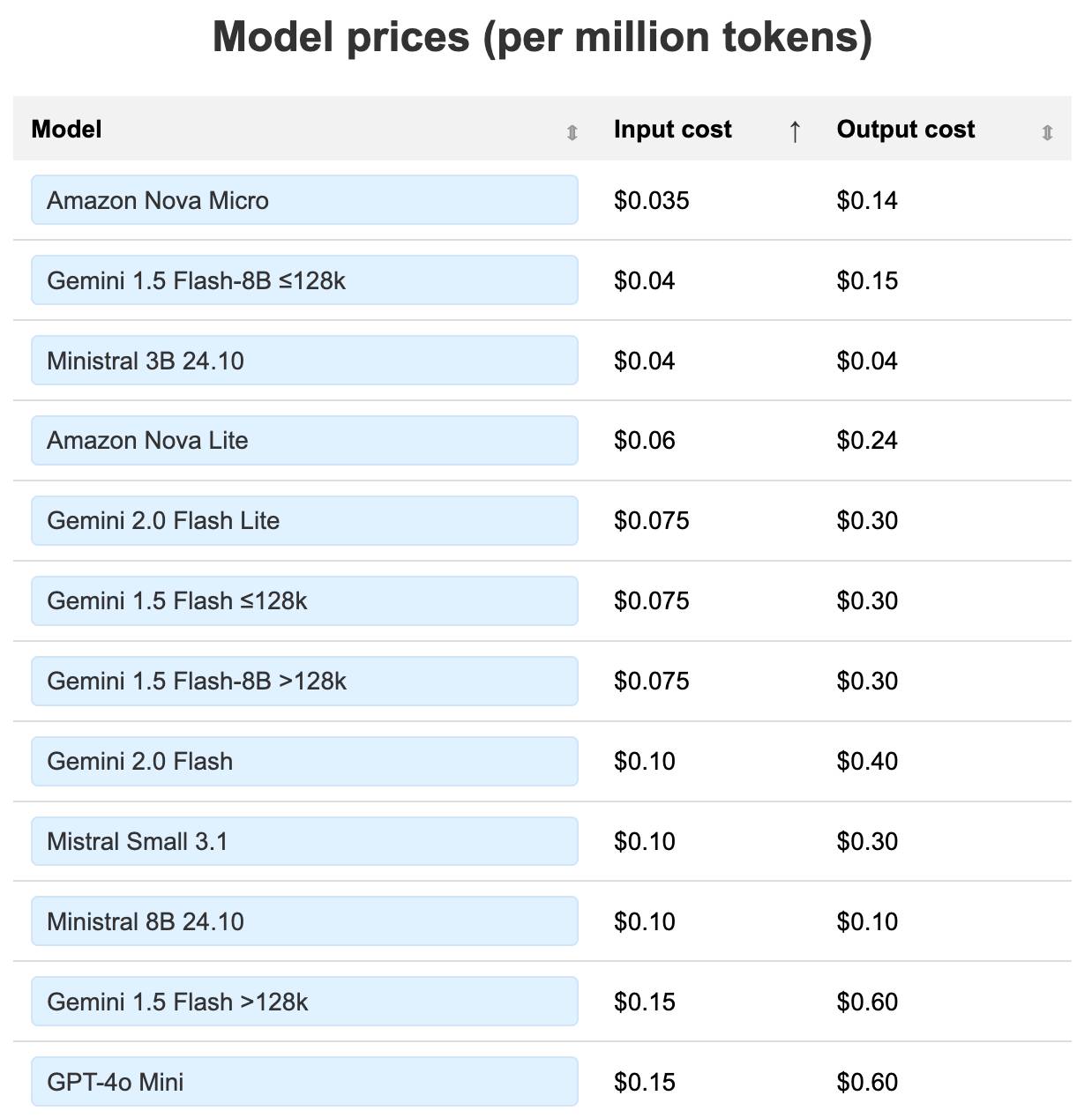

LLM pricing calculator (updated). I updated my LLM pricing calculator this morning (Claude transcript) to show the prices of various hosted models in a sorted table, defaulting to lowest price first.

Amazon Nova and Google Gemini continue to dominate the lower end of the table. The most expensive models currently are still OpenAI's o1-Pro ($150/$600) and GPT-4.5 ($75/$150), priced per million input/output tokens.
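The arithmetic behind a table like that is simple. This sketch assumes the two figures for each model are US dollars per million input and output tokens, and uses a made-up prompt size of 10,000 input tokens and 1,000 output tokens; `cost_usd` is a hypothetical helper, not code from the calculator.

```python
def cost_usd(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Cost of one call given prices in dollars per million tokens."""
    total = input_tokens * input_price_per_m + output_tokens * output_price_per_m
    return total / 1_000_000


# (input price, output price) per million tokens, taken from the text above
PRICES = {
    "o1-pro": (150.0, 600.0),
    "gpt-4.5": (75.0, 150.0),
}

# Hypothetical workload: 10,000 input tokens and 1,000 output tokens
rows = sorted(
    ((name, cost_usd(10_000, 1_000, *prices)) for name, prices in PRICES.items()),
    key=lambda row: row[1],  # lowest price first
)

for name, cost in rows:
    print(f"{name}: ${cost:.2f}")
```

For that workload GPT-4.5 comes to $0.90 and o1-Pro to $2.10.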

The first generation of AI-powered products (often called “AI Wrapper” apps, because they “just” are wrapped around an LLM API) were quickly brought to market by small teams of engineers, picking off the low-hanging problems. But today, I’m seeing teams of domain experts wading into the field, hiring a programmer or two to handle the implementation, while the experts themselves provide the prompts, data labeling, and evaluations.

For these companies, the coding is commodified but the domain expertise is the differentiator.

— Drew Breunig, The Dynamic Between Domain Experts & Developers Has Shifted