Monday, 26th August 2024

AI-powered Git Commit Function

(via)

Andrej Karpathy built a shell alias, gcm, which passes your staged Git changes to an LLM via my LLM tool, generates a short commit message and then asks you if you want to "(a)ccept, (e)dit, (r)egenerate, or (c)ancel?".

Here's the incantation he's using to generate that commit message:

git diff --cached | llm "

Below is a diff of all staged changes, coming from the command:

\`\`\`

git diff --cached

\`\`\`

Please generate a concise, one-line commit message for these changes."This pipes the data into LLM (using the default model, currently gpt-4o-mini unless you set it to something else) and then appends the prompt telling it what to do with that input.

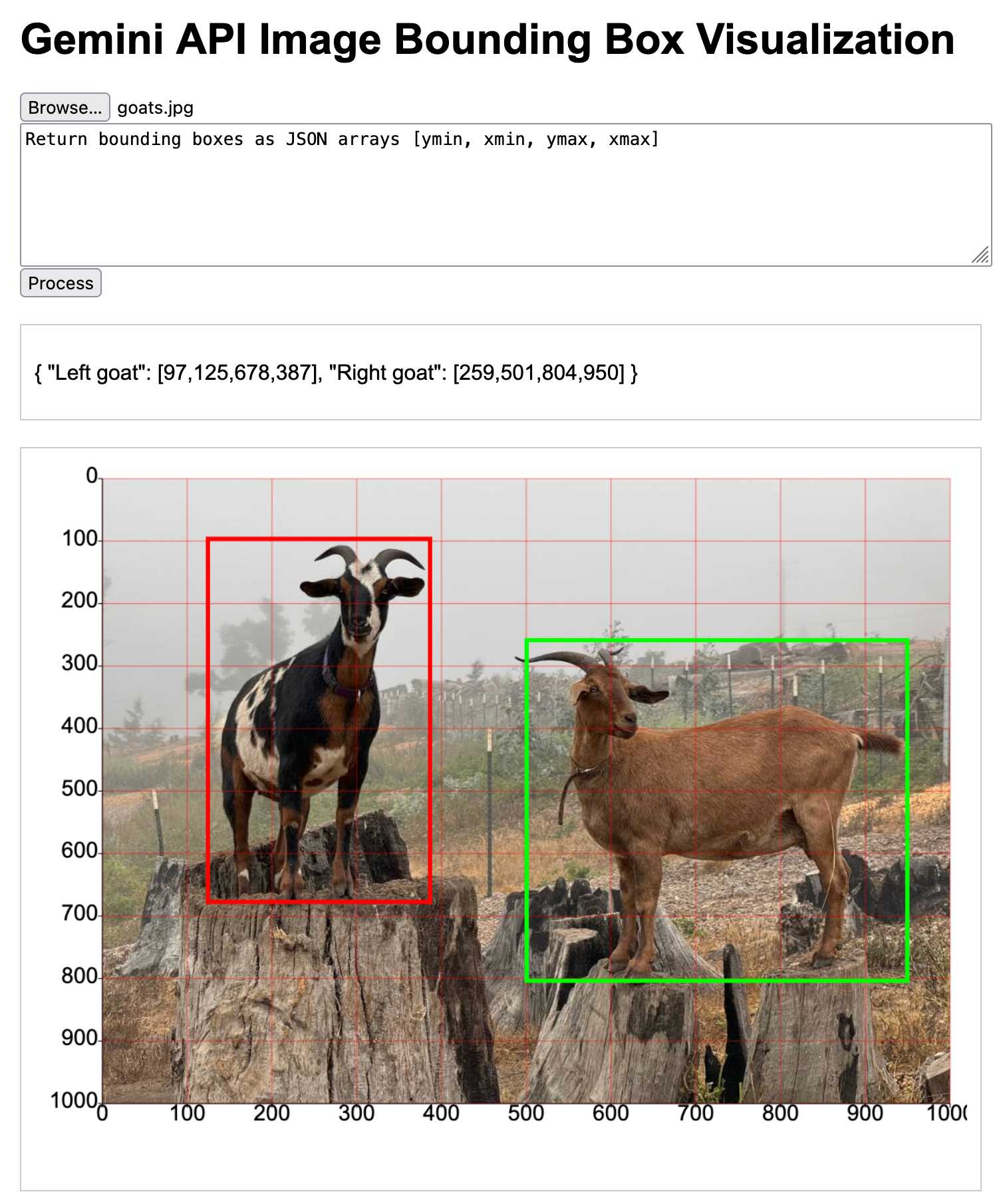

Building a tool showing how Gemini Pro can return bounding boxes for objects in images

I was browsing through Google’s Gemini documentation while researching how different multi-model LLM APIs work when I stumbled across this note in the vision documentation:

[... 1,792 words]Long context prompting tips (via) Interesting tips here from Anthropic's documentation about how to best prompt Claude to work with longer documents.

Put longform data at the top: Place your long documents and inputs (~20K+ tokens) near the top of your prompt, above your query, instructions, and examples. This can significantly improve Claude’s performance across all models. Queries at the end can improve response quality by up to 30% in tests, especially with complex, multi-document inputs.

It recommends using not-quite-valid-XML to add those documents to those prompts, and using a prompt that asks Claude to extract direct quotes before replying to help it focus its attention on the most relevant information:

Find quotes from the patient records and appointment history that are relevant to diagnosing the patient's reported symptoms. Place these in <quotes> tags. Then, based on these quotes, list all information that would help the doctor diagnose the patient's symptoms. Place your diagnostic information in <info> tags.

Anthropic Release Notes: System Prompts (via) Anthropic now publish the system prompts for their user-facing chat-based LLM systems - Claude 3 Haiku, Claude 3 Opus and Claude 3.5 Sonnet - as part of their documentation, with a promise to update this to reflect future changes.

Currently covers just the initial release of the prompts, each of which is dated July 12th 2024.

Anthropic researcher Amanda Askell broke down their system prompt in detail back in March 2024. These new releases are a much appreciated extension of that transparency.

These prompts are always fascinating to read, because they can act a little bit like documentation that the providers never thought to publish elsewhere.

There are lots of interesting details in the Claude 3.5 Sonnet system prompt. Here's how they handle controversial topics:

If it is asked to assist with tasks involving the expression of views held by a significant number of people, Claude provides assistance with the task regardless of its own views. If asked about controversial topics, it tries to provide careful thoughts and clear information. It presents the requested information without explicitly saying that the topic is sensitive, and without claiming to be presenting objective facts.

Here's chain of thought "think step by step" processing baked into the system prompt itself:

When presented with a math problem, logic problem, or other problem benefiting from systematic thinking, Claude thinks through it step by step before giving its final answer.

Claude's face blindness is also part of the prompt, which makes me wonder if the API-accessed models might more capable of working with faces than I had previously thought:

Claude always responds as if it is completely face blind. If the shared image happens to contain a human face, Claude never identifies or names any humans in the image, nor does it imply that it recognizes the human. [...] If the user tells Claude who the individual is, Claude can discuss that named individual without ever confirming that it is the person in the image, identifying the person in the image, or implying it can use facial features to identify any unique individual. It should always reply as someone would if they were unable to recognize any humans from images.

It's always fun to see parts of these prompts that clearly hint at annoying behavior in the base model that they've tried to correct!

Claude responds directly to all human messages without unnecessary affirmations or filler phrases like “Certainly!”, “Of course!”, “Absolutely!”, “Great!”, “Sure!”, etc. Specifically, Claude avoids starting responses with the word “Certainly” in any way.

Anthropic note that these prompts are for their user-facing products only - they aren't used by the Claude models when accessed via their API.

In 2021 we [the Mozilla engineering team] found “samesite=lax by default” isn’t shippable without what you call the “two minute twist” - you risk breaking a lot of websites. If you have that kind of two-minute exception, a lot of exploits that were supposed to be prevented remain possible.

When we tried rolling it out, we had to deal with a lot of broken websites: Debugging cookie behavior in website backends is nontrivial from a browser.

Firefox also had a prototype of what I believe is a better protection (including additional privacy benefits) already underway (called total cookie protection).

Given all of this, we paused samesite lax by default development in favor of this.

We've read and heard that you'd appreciate more transparency as to when changes, if any, are made. We've also heard feedback that some users are finding Claude's responses are less helpful than usual. Our initial investigation does not show any widespread issues. We'd also like to confirm that we've made no changes to the 3.5 Sonnet model or inference pipeline.