Thursday, 8th June 2023

ChatGPT Plugins Don’t Have PMF. Sam Altman was recently quoted (in a since unpublished blog post) noting that ChatGPT plugins have not yet demonstrated product market fit.

This matches my own usage patterns: I use the “browse” and “code interpreter” modes on a daily basis, but I’ve not found any of the third party developer plugins to stick for me yet.

I like Matt Rickard’s observation here: “Chat is not the right UX for plugins. If you know what you want to do, it’s often easier to just do a few clicks on the website. If you don’t, just a chat interface makes it hard to steer the model toward your goal.”

First Impressions of Vision Pro and VisionOS. John Gruber’s description of his thirty minute Vision Pro demo includes a bunch of details I haven’t seen described anywhere else, including how calibration and corrective lenses work and how precise and stable the overlays of additional information are.

Examples of weird GPT-4 behavior for the string “ davidjl”. GPT-4, when told to repeat or otherwise process the string “ davidjl” (note the leading space character), treats it as “jndl” or “jspb” or “JDL” instead. It turns out “ davidjl” has its own single token in the tokenizer: token ID 23282, presumably dating back to the GPT-2 days.

Riley Goodside refers to these as “glitch tokens”.

This token might refer to Reddit user davidjl123 who ranks top of the league for the old /r/counting subreddit, with 163,477 posts there which presumably ended up in older training data.

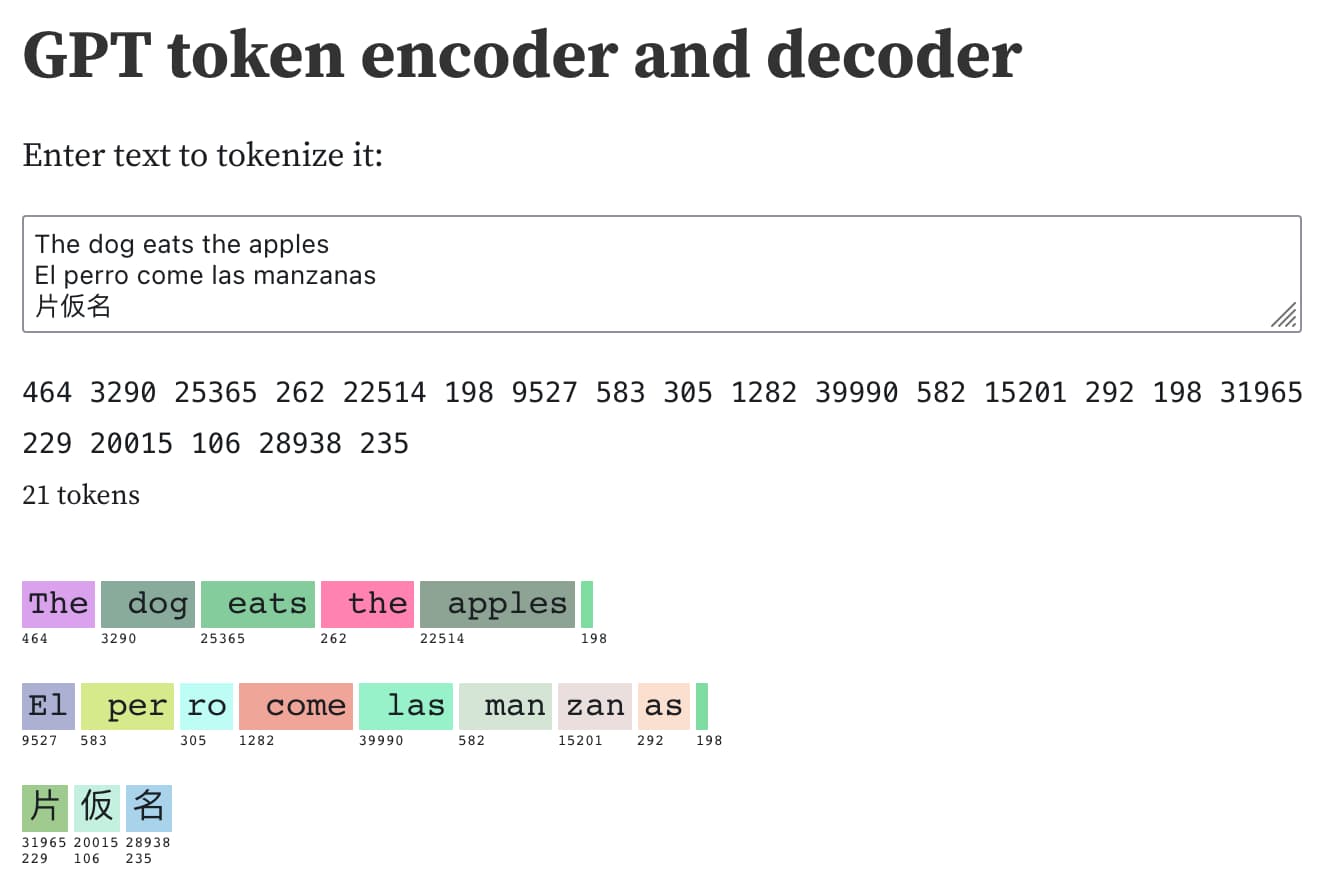

Understanding GPT tokenizers

Large language models such as GPT-3/4, LLaMA and PaLM work in terms of tokens. They take text, convert it into tokens (integers), then predict which tokens should come next.

[... 1,575 words]simpleaichat (via) Max Woolf released his own Python package for building against the GPT-3.5 and GPT-4 APIs (and potentially other LLMs in the future).

It’s a very clean piece of API design with some useful additional features: there’s an AsyncAIChat subclass that works with Python asyncio, and the library includes a mechanism for registering custom functions that can then be called by the LLM as tools.

One trick I haven’t seen before: it uses a combination of max_tokens: 1 and a ChatGPT logit_bias to ensure that answers to one of its default prompts are restricted to just numerals between 0 and 9. This is described in the PROMPTS.md file.