10 posts tagged “supply-chain”

Supply chain security, most notably for open source packages.

2025

We should all be using dependency cooldowns (via) William Woodruff gives a name to a sensible strategy for managing dependencies while reducing the chances of a surprise supply chain attack: dependency cooldowns.

Supply chain attacks happen when an attacker compromises a widely used open source package and publishes a new version with an exploit. These are usually spotted very quickly, so an attack often only has a few hours of effective window before the problem is identified and the compromised package is pulled.

You are most at risk if you're automatically applying upgrades the same day they are released.

William says:

I love cooldowns for several reasons:

- They're empirically effective, per above. They won't stop all attackers, but they do stymie the majority of high-visibility, mass-impact supply chain attacks that have become more common.

- They're incredibly easy to implement. Moreover, they're literally free to implement in most cases: most people can use Dependabot's functionality, Renovate's functionality, or the functionality built directly into their package manager.

The one counter-argument to this is that sometimes an upgrade fixes a security vulnerability, and in those cases every hour of delay in upgrading is an hour when an attacker could exploit the new issue against your software.

I see that as an argument for carefully monitoring the release notes of your dependencies, and paying special attention to security advisories. I'm a big fan of the GitHub Advisory Database for that kind of information.
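Here's a minimal sketch of the cooldown idea itself, independent of any particular tool. The `pick_version` function and its `releases` input (a mapping of version to upload time) are hypothetical names for illustration, not how Dependabot or Renovate implement it.

```python
from datetime import datetime, timedelta, timezone


def pick_version(releases, cooldown_days=7, now=None):
    """Return the newest version that has been public for at least cooldown_days.

    releases: dict mapping version string -> timezone-aware upload datetime.
    Returns None if every release is still inside the cooldown window.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=cooldown_days)
    # Keep only releases that have had time to be vetted by the wider community
    eligible = {v: t for v, t in releases.items() if t <= cutoff}
    if not eligible:
        return None
    # Newest by upload time, rather than by comparing version strings
    return max(eligible, key=eligible.get)


if __name__ == "__main__":
    utc = timezone.utc
    now = datetime(2025, 11, 20, tzinfo=utc)
    releases = {
        "1.4.0": datetime(2025, 10, 1, tzinfo=utc),
        "1.4.1": datetime(2025, 11, 5, tzinfo=utc),
        "1.5.0": datetime(2025, 11, 19, tzinfo=utc),  # one day old, skipped
    }
    print(pick_version(releases, cooldown_days=7, now=now))
```

A security fix is the case where you would deliberately bypass this, for example by calling it with `cooldown_days=0` for that one package.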

A Retrospective Survey of 2024/2025 Open Source Supply Chain Compromises (via) Filippo Valsorda surveyed 18 incidents from the past year of open source supply chain attacks, where package updates were infected with malware thanks to a compromise of the project itself.

These are important lessons:

I have the growing impression that software supply chain compromises have a few predominant causes which we might have a responsibility as professional open source maintainers to robustly mitigate.

To test this impression and figure out any such mitigations, I collected all 2024/2025 open source supply chain compromises I could find, and categorized their root cause.

This is a fascinating piece of research. 5 were the result of phishing (maintainers should use passkeys/WebAuthn!), ~5 were stolen long-lived credentials, 3 were "control handoff" where a maintainer gave project access to someone who later turned out to be untrustworthy, 4 were caused by GitHub Actions workflows that triggered on pull requests or issue comments in a way that could leak credentials, and one (MavenGate) was caused by an expired domain being resurrected.

PyPI: Preventing Domain Resurrection Attacks (via) Domain resurrection attacks are a nasty vulnerability in systems that use email verification to allow people to recover their accounts. If somebody lets their domain name expire, an attacker might snap it up and use it to gain access to their accounts - which can turn into a package supply chain attack if they had an account on something like the Python Package Index.

PyPI now protects against these by treating an email address as not-validated if the associated domain expires.

Since early June 2025, PyPI has unverified over 1,800 email addresses when their associated domains entered expiration phases. This isn't a perfect solution, but it closes off a significant attack vector where the majority of interactions would appear completely legitimate.

This attack is not theoretical: it happened to the ctx package on PyPI back in May 2022.

Here's the pull request from April in which Mike Fiedler landed an integration which hits an API provided by Fastly's Domainr, followed by this PR which polls for domain status on any email domain that hasn't been checked in the past 30 days.
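The shape of that check can be sketched in a few lines. This is not PyPI's code: the `Email` class, the `recheck` function and the `domain_is_expiring` callback (standing in for a call to a domain status API) are all hypothetical. Only the 30 day recheck interval comes from the description above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

RECHECK_AFTER = timedelta(days=30)  # recheck interval mentioned in the post


@dataclass
class Email:
    address: str
    verified: bool = True
    domain_last_checked: Optional[datetime] = None


def needs_check(email, now):
    """True if this address's domain has never been checked, or not recently."""
    return (
        email.domain_last_checked is None
        or now - email.domain_last_checked >= RECHECK_AFTER
    )


def recheck(emails, domain_is_expiring, now=None):
    """Un-verify addresses whose domain is expiring; return those addresses.

    domain_is_expiring: hypothetical callable taking a domain name and
    returning True if the domain has entered an expiration phase.
    """
    now = now or datetime.now(timezone.utc)
    unverified = []
    for email in emails:
        if not needs_check(email, now):
            continue
        domain = email.address.rsplit("@", 1)[1].lower()
        email.domain_last_checked = now
        if email.verified and domain_is_expiring(domain):
            email.verified = False
            unverified.append(email.address)
    return unverified
```

An un-verified address can no longer be used for account recovery until its owner verifies it again, which is what closes off the resurrection route.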

Introducing OSS Rebuild: Open Source, Rebuilt to Last (via) Major news on the Reproducible Builds front: the Google Security team have announced OSS Rebuild, their project to provide build attestations for open source packages released through the NPM, PyPI and Crates ecosystems (and more to come).

They currently run builds against the "most popular" packages from those ecosystems:

Through automation and heuristics, we determine a prospective build definition for a target package and rebuild it. We semantically compare the result with the existing upstream artifact, normalizing each one to remove instabilities that cause bit-for-bit comparisons to fail (e.g. archive compression). Once we reproduce the package, we publish the build definition and outcome via SLSA Provenance. This attestation allows consumers to reliably verify a package's origin within the source history, understand and repeat its build process, and customize the build from a known-functional baseline
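To illustrate what "semantically compare" can mean, here's a small sketch that treats two zip archives (wheels are zip files) as equal if their uncompressed file contents match, ignoring compression method and timestamps. This is my own simplified illustration, not the normalization OSS Rebuild actually performs; `content_fingerprint`, `semantically_equal` and `build_zip` are hypothetical helpers.

```python
import hashlib
import io
import zipfile


def content_fingerprint(archive_bytes):
    """Map each member name to the SHA-256 of its uncompressed contents."""
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as zf:
        return {
            info.filename: hashlib.sha256(zf.read(info)).hexdigest()
            for info in zf.infolist()
            if not info.is_dir()
        }


def semantically_equal(a, b):
    """Compare archives by member names and contents only."""
    return content_fingerprint(a) == content_fingerprint(b)


def build_zip(files, compression, date_time):
    """Build an in-memory zip so the comparison can be tried without real wheels."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        for name, data in files.items():
            info = zipfile.ZipInfo(name, date_time=date_time)
            zf.writestr(info, data, compress_type=compression)
    return buf.getvalue()


if __name__ == "__main__":
    files = {"pkg/__init__.py": b"print('hi')\n" * 50}
    upstream = build_zip(files, zipfile.ZIP_STORED, (2024, 1, 1, 0, 0, 0))
    rebuilt = build_zip(files, zipfile.ZIP_DEFLATED, (2025, 6, 1, 12, 0, 0))
    print(upstream == rebuilt)                    # bit-for-bit comparison
    print(semantically_equal(upstream, rebuilt))  # contents-only comparison
```

The two archives differ byte-for-byte because of compression and timestamps, but the contents-only comparison still matches. A tampered file inside the archive would change its hash and break the match.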

The only way to interact with the Rebuild data right now is through their Go CLI tool. I reverse-engineered it using Gemini 2.5 Pro and derived this command to get a list of all of their built packages:

gsutil ls -r 'gs://google-rebuild-attestations/**'

There are 9,513 total lines, here's a Gist. I used Claude Code to count them across the different ecosystems (discounting duplicates for different versions of the same package):

- pypi: 5,028 packages

- cratesio: 2,437 packages

- npm: 2,048 packages
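A count like that can be reproduced with a short script. This sketch assumes each line looks like `gs://google-rebuild-attestations/<ecosystem>/<package>/<version>/...`, which matches the path visible in the screenshot below but is otherwise my assumption. Scoped npm packages with an extra path segment would need additional handling.

```python
from collections import defaultdict

PREFIX = "gs://google-rebuild-attestations/"


def count_packages(lines):
    """Count unique package names per ecosystem, ignoring versions."""
    packages = defaultdict(set)
    for line in lines:
        line = line.strip()
        if not line.startswith(PREFIX):
            continue
        parts = line[len(PREFIX):].split("/")
        if len(parts) < 3:
            continue  # not deep enough to be ecosystem/package/version/...
        ecosystem, package = parts[0], parts[1]
        # A set discards duplicates from multiple versions of one package
        packages[ecosystem].add(package)
    return {eco: len(names) for eco, names in packages.items()}


if __name__ == "__main__":
    sample = [
        PREFIX + "pypi/accelerate/0.21.0/accelerate-0.21.0-py3-none-any.whl/rebuild.intoto.jsonl",
        PREFIX + "pypi/accelerate/0.22.0/accelerate-0.22.0-py3-none-any.whl/rebuild.intoto.jsonl",
        PREFIX + "cratesio/adler/1.0.2/adler-1.0.2.crate/rebuild.intoto.jsonl",
    ]
    print(count_packages(sample))
```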

Then I got a bit ambitious... since the files themselves are hosted in a Google Cloud Bucket, could I run my own web app somewhere on storage.googleapis.com that could use fetch() to retrieve that data, working around the lack of open CORS headers?

I got Claude Code to try that for me (I didn't want to have to figure out how to create a bucket and configure it for web access just for this one experiment) and it built and then deployed https://storage.googleapis.com/rebuild-ui/index.html, which did indeed work!

![Screenshot of Google Rebuild Explorer interface showing a search box with placeholder text "Type to search packages (e.g., 'adler', 'python-slugify')..." under "Search rebuild attestations:", a loading file path "pypi/accelerate/0.21.0/accelerate-0.21.0-py3-none-any.whl/rebuild.intoto.jsonl", and Object 1 containing JSON with "payloadType": "in-toto.io Statement v1 URL", "payload": "...", "signatures": [{"keyid": "Google Cloud KMS signing key URL", "sig": "..."}]](https://static.simonwillison.net/static/2025/rebuild-ui.jpg)

It lets you search against that list of packages from the Gist and then select one to view the pretty-printed newline-delimited JSON that was stored for that package.
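The `payloadType`, `payload` and `signatures` fields in that screenshot look like a signed envelope with an encoded payload. Assuming the payload is base64-encoded JSON (my assumption from the field names, not something I have confirmed against the OSS Rebuild documentation), a sketch for unpacking a `rebuild.intoto.jsonl` file might look like this. It does not verify the signatures.

```python
import base64
import json


def decode_attestations(jsonl_text):
    """Decode the payload of each envelope in a newline-delimited JSON file.

    Assumes every non-empty line is a JSON object with a base64-encoded
    JSON "payload" field. Signatures are NOT checked here.
    """
    statements = []
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue
        envelope = json.loads(line)
        payload = base64.b64decode(envelope["payload"])
        statements.append(json.loads(payload))
    return statements


if __name__ == "__main__":
    # Synthetic envelope for illustration only, not real OSS Rebuild data
    inner = {"subject": [{"name": "example-0.1.0-py3-none-any.whl"}]}
    envelope = {
        "payloadType": "application/vnd.in-toto+json",
        "payload": base64.b64encode(json.dumps(inner).encode()).decode(),
        "signatures": [],
    }
    print(json.dumps(decode_attestations(json.dumps(envelope)), indent=2))
```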

The output isn't as interesting as I was expecting, but it was fun demonstrating that it's possible to build and deploy web apps to Google Cloud that can then make fetch() requests to other public buckets.

Hopefully the OSS Rebuild team will add a web UI to their project at some point in the future.

Slopsquatting -- when an LLM hallucinates a non-existent package name, and a bad actor registers it maliciously. The AI brother of typosquatting.

Credit to @sethmlarson for the name

2024

PyPI now supports digital attestations (via) Dustin Ingram:

PyPI package maintainers can now publish signed digital attestations when publishing, in order to further increase trust in the supply-chain security of their projects. Additionally, a new API is available for consumers and installers to verify published attestations.

This has been in the works for a while, and is another component of PyPI's approach to supply chain security for Python packaging - see PEP 740 – Index support for digital attestations for all of the underlying details.

A key problem this solves is cryptographically linking packages published on PyPI to the exact source code that was used to build those packages. In the absence of this feature there are no guarantees that the .tar.gz or .whl file you download from PyPI hasn't been tampered with (to add malware, for example) in a way that's not visible in the published source code.

These new attestations provide a mechanism for proving that a known, trustworthy build system was used to generate and publish the package, starting with its source code on GitHub.

The good news is that if you're using the PyPI Trusted Publishers mechanism in GitHub Actions to publish packages, you're already using this new system. I wrote about that system in January: Publish Python packages to PyPI with a python-lib cookiecutter template and GitHub Actions - and hundreds of my own PyPI packages are already using that system, thanks to my various cookiecutter templates.

Trail of Bits helped build this feature, and provide extra background about it on their own blog in Attestations: A new generation of signatures on PyPI:

As of October 29, attestations are the default for anyone using Trusted Publishing via the PyPA publishing action for GitHub. That means roughly 20,000 packages can now attest to their provenance by default, with no changes needed.

They also built Are we PEP 740 yet? (key implementation here) to track the rollout of attestations across the 360 most downloaded packages from PyPI. It works by hitting URLs such as https://pypi.org/simple/pydantic/ with an Accept: application/vnd.pypi.simple.v1+json header - here's the JSON that returns.
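Here's a rough sketch of that kind of check using only the standard library. The URL and Accept header come from the description above. The assumption that each entry in `files` carries a `provenance` key when an attestation exists is my reading of PEP 740, so treat that part as unverified. The function names are hypothetical.

```python
import json
import urllib.request


def build_request(project):
    """Build a request for the JSON form of the simple index page."""
    return urllib.request.Request(
        f"https://pypi.org/simple/{project}/",
        headers={"Accept": "application/vnd.pypi.simple.v1+json"},
    )


def fetch_index(project):
    """Fetch and parse the index JSON (makes a network request)."""
    with urllib.request.urlopen(build_request(project), timeout=10) as resp:
        return json.load(resp)


def files_with_provenance(index_json):
    """Return filenames whose entry has a non-empty "provenance" value.

    The "provenance" key name is assumed from PEP 740.
    """
    return [
        f["filename"]
        for f in index_json.get("files", [])
        if f.get("provenance")
    ]


if __name__ == "__main__":
    index = fetch_index("pydantic")
    attested = files_with_provenance(index)
    print(f"{len(attested)} of {len(index.get('files', []))} files have provenance")
```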

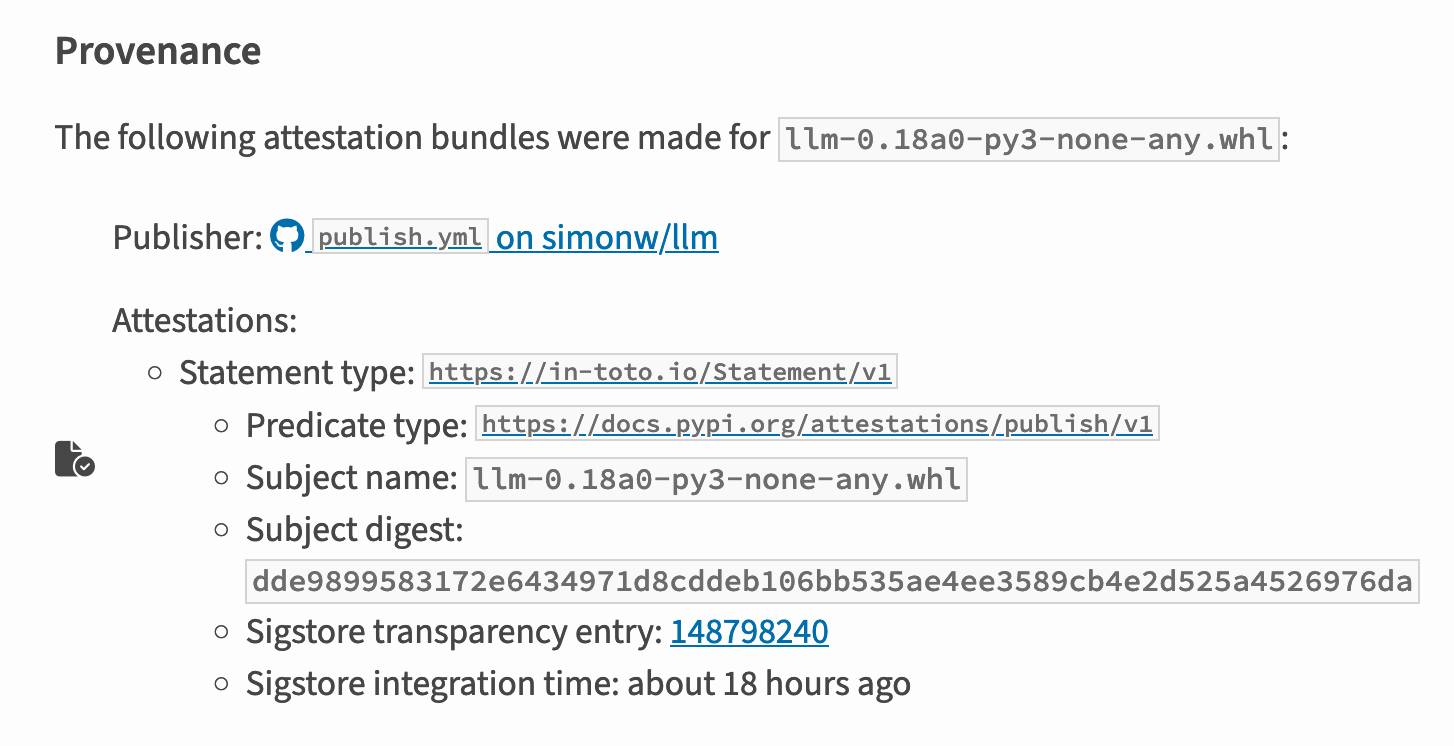

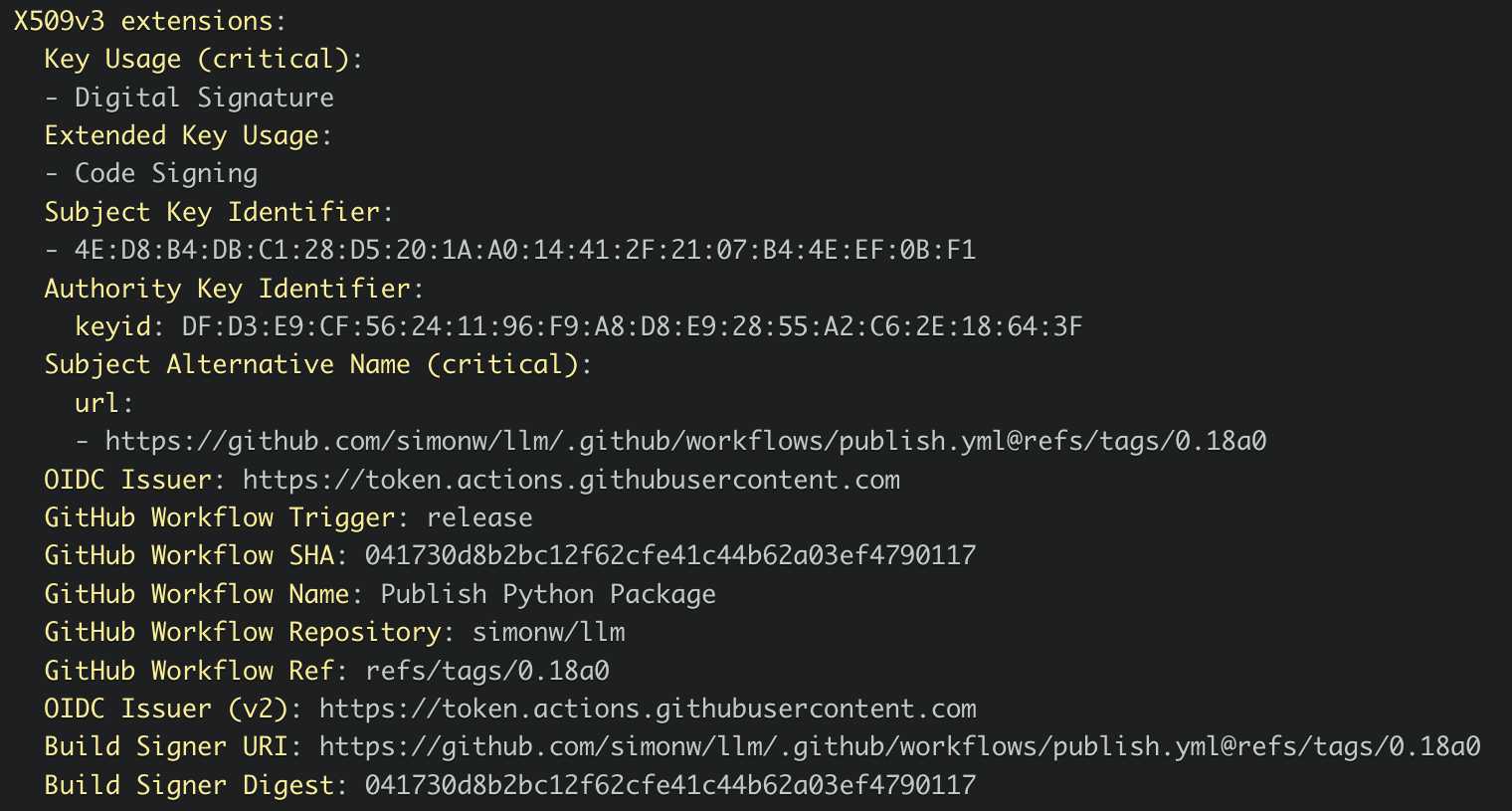

I published an alpha package using Trusted Publishers last night and the files for that release are showing the new provenance information already:

Which links to this Sigstore log entry with more details, including the Git hash that was used to build the package:

Sigstore is a transparency log maintained by the Open Source Security Foundation (OpenSSF), a sub-project of the Linux Foundation.

Polyfill supply chain attack hits 100K+ sites (via) Short version: if you are loading assets from the polyfill.io domain you need to remove that right now: the new owners of the domain (as of a few months ago) appear to be using it to serve malicious JavaScript.

polyfill.io was a fascinating service. It was originally developed and supported by the Financial Times, but spun off as a separate project several years ago.

The key idea was to serve up a set of JavaScript polyfills - pieces of code that implemented missing web platform features for older browsers - dynamically, based on the incoming user-agent. This required a CDN that varied its output dynamically based on the user-agent, hence the popularity of the single hosted service.

Andrew Betts, the original author of the service, has been warning people to move off it since February 2024:

If your website uses polyfill.io, remove it IMMEDIATELY. I created the polyfill service project but I have never owned the domain name and I have had no influence over its sale.

He now works for Fastly, which started offering a free polyfill-fastly.io alternative in February. Andrew says you probably don't need that either, given that modern browsers have much better compatibility than when the service was first introduced over a decade ago.

There's some interesting additional context in a now-deleted GitHub issue, preserved here by the Internet Archive.

Usually one answer to protecting against this style of CDN supply chain attack would be to use SRI hashes to ensure only the expected script can be served from the site. That doesn't work here because the whole point of the service is to serve different scripts to different browsers.
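To make that concrete, here's a sketch of how an SRI value is computed: it's the hash algorithm name, a hyphen, then the base64-encoded digest of the exact bytes served. The two "bundles" are made-up stand-ins for what a user-agent-sensitive service might return to different browsers.

```python
import base64
import hashlib


def sri_hash(content, algorithm="sha384"):
    """Return a Subresource Integrity value such as "sha384-..." for content."""
    digest = hashlib.new(algorithm, content).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


if __name__ == "__main__":
    # Hypothetical responses for two different user-agents
    bundle_for_old_browser = b"/* polyfills: Promise, fetch, Array.from */"
    bundle_for_new_browser = b"/* no polyfills needed */"

    print(sri_hash(bundle_for_old_browser))
    print(sri_hash(bundle_for_new_browser))
```

A script tag can only pin hashes chosen in advance, so a service whose response legitimately changes per browser can't be covered by a single `integrity` attribute.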

Diving Deeper into AI Package Hallucinations. Bar Lanyado noticed that LLMs frequently hallucinate the names of packages that don’t exist in their answers to coding questions, which can be exploited as a supply chain attack.

He gathered 2,500 questions across Python, Node.js, Go, .NET and Ruby and ran them through a number of different LLMs, taking note of any hallucinated packages and whether any of those hallucinations were repeated.

One repeat example was “pip install huggingface-cli” (the correct package is “huggingface_hub[cli]”). Bar then published a harmless package under that name in January, and observed 30,000 downloads of that package in the three months that followed.
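The "note the hallucinations, then look for repeats" step can be sketched like this. It is not Bar's methodology in detail: the regular expression, the `known_packages` set (standing in for a lookup against the real package index) and the function names are all assumptions for illustration.

```python
import re
from collections import Counter

# Deliberately simple: matches "pip install name", not flags like "-U"
PIP_INSTALL = re.compile(r"pip install\s+([A-Za-z0-9][A-Za-z0-9._\-\[\]]*)")


def normalize(name):
    """Strip extras such as [cli] and apply PyPI-style name normalization."""
    base = name.split("[", 1)[0]
    return re.sub(r"[-_.]+", "-", base).lower()


def repeated_hallucinations(responses, known_packages, min_count=2):
    """Return unknown package names suggested in at least min_count responses."""
    known = {normalize(n) for n in known_packages}
    counts = Counter()
    for text in responses:
        # Use a set so one response mentioning a name twice counts once
        names = {normalize(m) for m in PIP_INSTALL.findall(text)}
        counts.update(n for n in names if n not in known)
    return {name: c for name, c in counts.items() if c >= min_count}


if __name__ == "__main__":
    responses = [
        "First run pip install huggingface-cli and then log in.",
        "You can get it with: pip install huggingface-cli",
        "Start with pip install requests",
        "Try pip install made-up-thing",
    ]
    print(repeated_hallucinations(responses, {"requests", "huggingface_hub"}))
```

Repeated names are the dangerous ones, since an attacker only needs to register a name that models suggest reliably.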

How We Executed a Critical Supply Chain Attack on PyTorch (via) Report on a now-handled supply chain attack against PyTorch which took advantage of GitHub Actions, stealing credentials from some self-hosted task runners.

The researchers first submitted a typo fix to the PyTorch repo, which gave them status as a “contributor” to that repo and meant that their future pull requests would have workflows executed without needing manual approval.

Their mitigation suggestion is to switch the option from ‘Require approval for first-time contributors’ to ‘Require approval for all outside collaborators’.

I think GitHub could help protect against this kind of attack by making it more obvious when you approve a PR to run workflows in a way that grants that contributor future access rights. I’d like an “approve this time only” button separate from “approve this run and allow future runs from user X”.

2022

Microsoft® Open Source Software (OSS) Secure Supply Chain (SSC) Framework Simplified Requirements. This is really good: don’t get distracted by the acronyms, skip past the intro and head straight to the framework practices section, which talks about things like keeping copies of the packages you depend on, running scanners, tracking package updates and most importantly keeping an inventory of the open source packages you work with so you can quickly respond to things like log4j.

I feel like I say this a lot these days, but if you had told teenage-me that Microsoft would be publishing genuinely useful non-FUD guides to open source supply chain security by 2022 I don’t think I would have believed you.
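As a tiny illustration of the inventory practice from that framework, here's a sketch that lists the Python packages installed in the current environment and checks them against a set of known-bad versions. The `advisories` format is hypothetical, and a real inventory would cover every environment and ecosystem you deploy rather than one interpreter.

```python
import re
from importlib.metadata import distributions


def normalize(name):
    """Apply PyPI-style name normalization so lookups are consistent."""
    return re.sub(r"[-_.]+", "-", name).lower()


def installed_inventory():
    """Return {normalized package name: version} for the current environment."""
    inventory = {}
    for dist in distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with broken metadata
            inventory[normalize(name)] = dist.version
    return inventory


def affected(inventory, advisories):
    """Return inventory entries whose exact version is listed as bad.

    advisories: hypothetical dict of normalized name -> set of bad versions.
    """
    return {
        name: version
        for name, version in inventory.items()
        if version in advisories.get(name, set())
    }


if __name__ == "__main__":
    inventory = installed_inventory()
    print(f"{len(inventory)} packages installed")
    print(affected(inventory, {"example-package": {"1.2.3"}}))
```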