3 posts tagged “limbo”

Limbo is an implementation of a SQLite-compatible database engine in Rust.

2025

OpenAI reasoning models: Advice on prompting (via) OpenAI's documentation for their o1 and o3 "reasoning models" includes some interesting tips on how to best prompt them:

- Developer messages are the new system messages: Starting with

o1-2024-12-17, reasoning models supportdevelopermessages rather thansystemmessages, to align with the chain of command behavior described in the model spec.

This appears to be a purely aesthetic change made for consistency with their instruction hierarchy concept. As far as I can tell the old system prompts continue to work exactly as before - you're encouraged to use the new developer message type but it has no impact on what actually happens.

Since my LLM tool already bakes in a llm --system "system prompt" option which works across multiple different models from different providers I'm not going to rush to adopt this new language!

- Use delimiters for clarity: Use delimiters like markdown, XML tags, and section titles to clearly indicate distinct parts of the input, helping the model interpret different sections appropriately.

Anthropic have been encouraging XML-ish delimiters for a while (I say -ish because there's no requirement that the resulting prompt is valid XML). My files-to-prompt tool has a -c option which outputs Claude-style XML, and in my experiments this same option works great with o1 and o3 too:

git clone https://github.com/tursodatabase/limbo

cd limbo/bindings/python

files-to-prompt . -c | llm -m o3-mini \

-o reasoning_effort high \

--system 'Write a detailed README with extensive usage examples'

- Limit additional context in retrieval-augmented generation (RAG): When providing additional context or documents, include only the most relevant information to prevent the model from overcomplicating its response.

This makes me thing that o1/o3 are not good models to implement RAG on at all - with RAG I like to be able to dump as much extra context into the prompt as possible and leave it to the models to figure out what's relevant.

- Try zero shot first, then few shot if needed: Reasoning models often don't need few-shot examples to produce good results, so try to write prompts without examples first. If you have more complex requirements for your desired output, it may help to include a few examples of inputs and desired outputs in your prompt. Just ensure that the examples align very closely with your prompt instructions, as discrepancies between the two may produce poor results.

Providing examples remains the single most powerful prompting tip I know, so it's interesting to see advice here to only switch to examples if zero-shot doesn't work out.

- Be very specific about your end goal: In your instructions, try to give very specific parameters for a successful response, and encourage the model to keep reasoning and iterating until it matches your success criteria.

This makes sense: reasoning models "think" until they reach a conclusion, so making the goal as unambiguous as possible leads to better results.

- Markdown formatting: Starting with

o1-2024-12-17, reasoning models in the API will avoid generating responses with markdown formatting. To signal to the model when you do want markdown formatting in the response, include the stringFormatting re-enabledon the first line of yourdevelopermessage.

This one was a real shock to me! I noticed that o3-mini was outputting • characters instead of Markdown * bullets and initially thought that was a bug.

I first saw this while running this prompt against limbo/bindings/python using files-to-prompt:

git clone https://github.com/tursodatabase/limbo

cd limbo/bindings/python

files-to-prompt . -c | llm -m o3-mini \

-o reasoning_effort high \

--system 'Write a detailed README with extensive usage examples'Here's the full result, which includes text like this (note the weird bullets):

Features

--------

• High‑performance, in‑process database engine written in Rust

• SQLite‑compatible SQL interface

• Standard Python DB‑API 2.0–style connection and cursor objects

I ran it again with this modified prompt:

Formatting re-enabled. Write a detailed README with extensive usage examples.

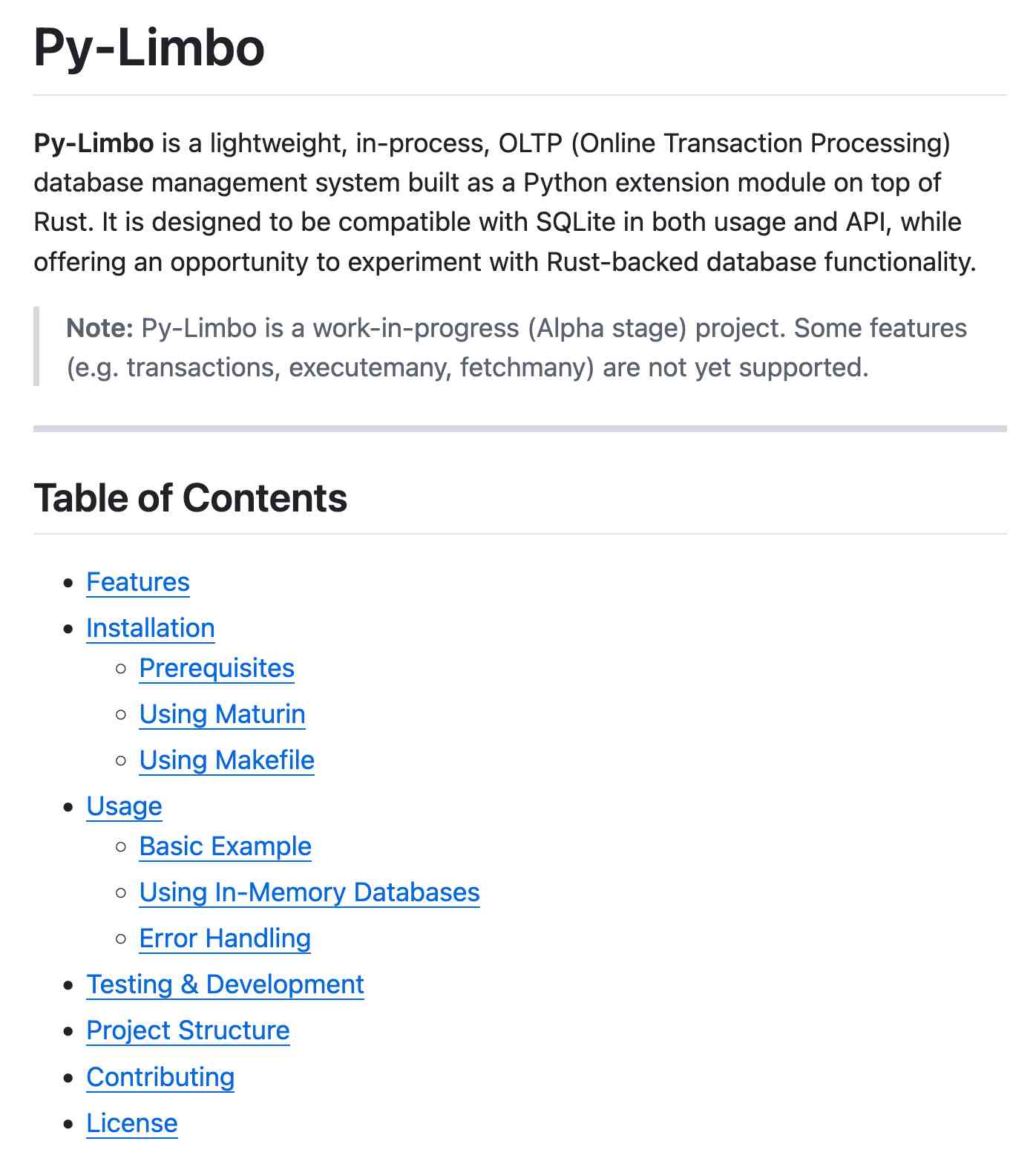

And this time got back proper Markdown, rendered in this Gist. That did a really good job, and included bulleted lists using this valid Markdown syntax instead:

- **`make test`**: Run tests using pytest.

- **`make lint`**: Run linters (via [ruff](https://github.com/astral-sh/ruff)).

- **`make check-requirements`**: Validate that the `requirements.txt` files are in sync with `pyproject.toml`.

- **`make compile-requirements`**: Compile the `requirements.txt` files using pip-tools.

(Using LLMs like this to get me off the ground with under-documented libraries is a trick I use several times a month.)

Update: OpenAI's Nikunj Handa:

we agree this is weird! fwiw, it’s a temporary thing we had to do for the existing o-series models. we’ll fix this in future releases so that you can go back to naturally prompting for markdown or no-markdown.

2024

In search of a faster SQLite (via) Turso developer Avinash Sajjanshetty (previously) shares notes on the April 2024 paper Serverless Runtime / Database Co-Design With Asynchronous I/O by Turso founder and CTO Pekka Enberg, Jon Crowcroft, Sasu Tarkoma and Ashwin Rao.

The theme of the paper is rearchitecting SQLite for asynchronous I/O, and Avinash describes it as "the foundational paper behind Limbo, the SQLite rewrite in Rust."

From the paper abstract:

We propose rearchitecting SQLite to provide asynchronous byte-code instructions for I/O to avoid blocking in the library and de-coupling the query and storage engines to facilitate database and serverless runtime co-design. Our preliminary evaluation shows up to a 100x reduction in tail latency, suggesting that our approach is conducive to runtime/database co-design for low latency.

Introducing Limbo: A complete rewrite of SQLite in Rust (via) This looks absurdly ambitious:

Our goal is to build a reimplementation of SQLite from scratch, fully compatible at the language and file format level, with the same or higher reliability SQLite is known for, but with full memory safety and on a new, modern architecture.

The Turso team behind it have been maintaining their libSQL fork for two years now, so they're well equipped to take on a challenge of this magnitude.

SQLite is justifiably famous for its meticulous approach to testing. Limbo plans to take an entirely different approach based on "Deterministic Simulation Testing" - a modern technique pioneered by FoundationDB and now spearheaded by Antithesis, the company Turso have been working with on their previous testing projects.

Another bold claim (emphasis mine):

We have both added DST facilities to the core of the database, and partnered with Antithesis to achieve a level of reliability in the database that lives up to SQLite’s reputation.

[...] With DST, we believe we can achieve an even higher degree of robustness than SQLite, since it is easier to simulate unlikely scenarios in a simulator, test years of execution with different event orderings, and upon finding issues, reproduce them 100% reliably.

The two most interesting features that Limbo is planning to offer are first-party WASM support and fully asynchronous I/O:

SQLite itself has a synchronous interface, meaning driver authors who want asynchronous behavior need to have the extra complication of using helper threads. Because SQLite queries tend to be fast, since no network round trips are involved, a lot of those drivers just settle for a synchronous interface. [...]

Limbo is designed to be asynchronous from the ground up. It extends

sqlite3_step, the main entry point API to SQLite, to be asynchronous, allowing it to return to the caller if data is not ready to consume immediately.

Datasette provides an async API for executing SQLite queries which is backed by all manner of complex thread management - I would be very interested in a native asyncio Python library for talking to SQLite database files.

I successfully tried out Limbo's Python bindings against a demo SQLite test database using uv like this:

uv run --with pylimbo python

>>> import limbo

>>> conn = limbo.connect("/tmp/demo.db")

>>> cursor = conn.cursor()

>>> print(cursor.execute("select * from foo").fetchall())

It crashed when I tried against a more complex SQLite database that included SQLite FTS tables.

The Python bindings aren't yet documented, so I piped them through LLM and had the new google-exp-1206 model write this initial documentation for me:

files-to-prompt limbo/bindings/python -c | llm -m gemini-exp-1206 -s 'write extensive usage documentation in markdown, including realistic usage examples'