Tuesday, 18th November 2025

Trying out Gemini 3 Pro with audio transcription and a new pelican benchmark

Google released Gemini 3 Pro today. Here’s the announcement from Sundar Pichai, Demis Hassabis, and Koray Kavukcuoglu, their developer blog announcement from Logan Kilpatrick, the Gemini 3 Pro Model Card, and their collection of 11 more articles. It’s a big release!

[... 2,476 words]

Three years ago, we were impressed that a machine could write a poem about otters. Less than 1,000 days later, I am debating statistical methodology with an agent that built its own research environment. The era of the chatbot is turning into the era of the digital coworker. To be very clear, Gemini 3 isn’t perfect, and it still needs a manager who can guide and check it. But it suggests that “human in the loop” is evolving from “human who fixes AI mistakes” to “human who directs AI work.” And that may be the biggest change since the release of ChatGPT.

— Ethan Mollick, Three Years from GPT-3 to Gemini 3

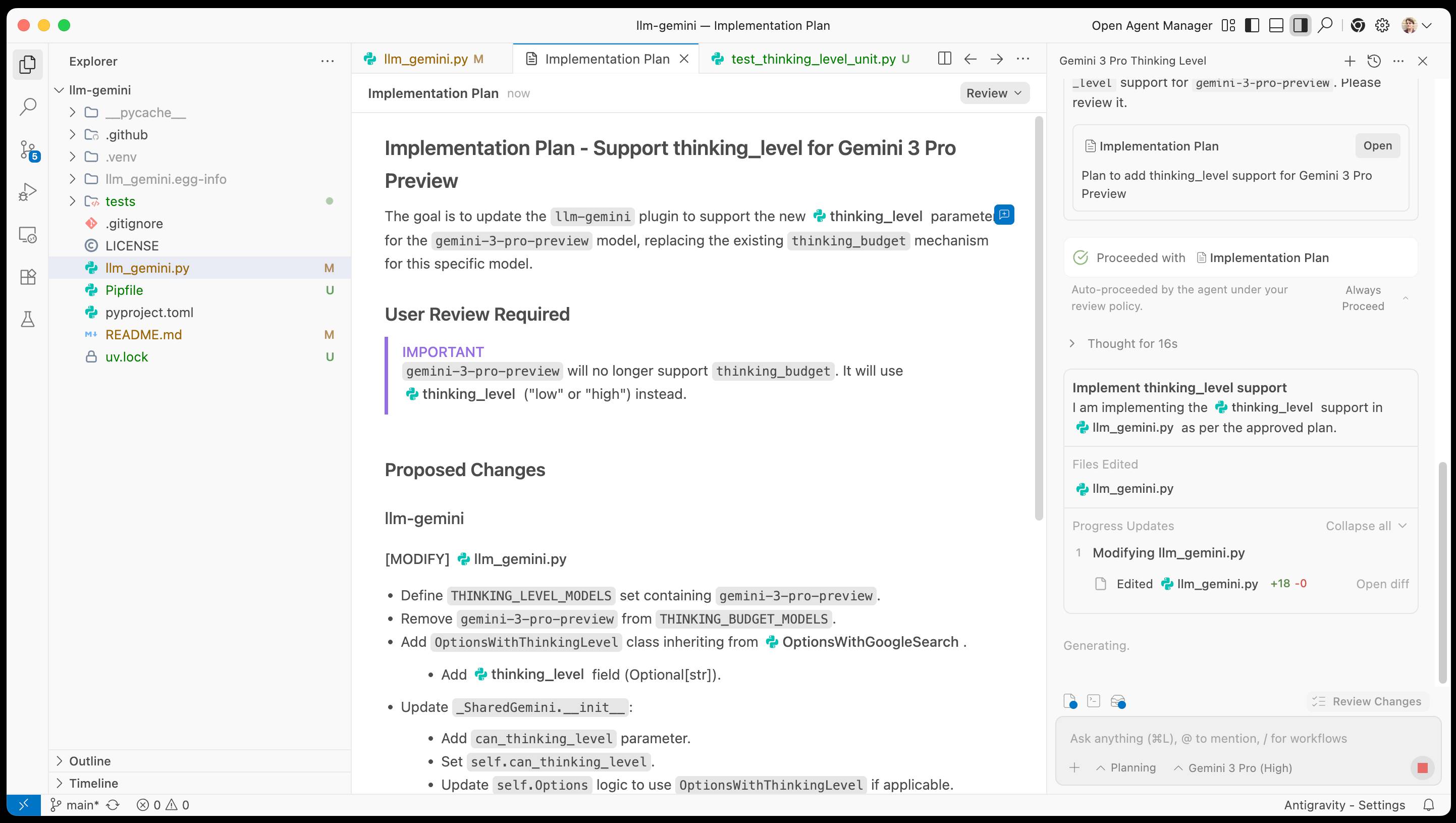

Google Antigravity. Google's other major release today to accompany Gemini 3 Pro. At first glance Antigravity is yet another VS Code fork Cursor clone - it's a desktop application you install that then signs in to your Google account and provides an IDE for agentic coding against their Gemini models.

When you look closer it's actually a fair bit more interesting than that.

The best introduction right now is the official 14-minute "Learn the basics of Google Antigravity" video on YouTube, where product engineer Kevin Hou (who previously worked at Windsurf) walks through the process of building an app.

There are some interesting new ideas in Antigravity. The application itself has three "surfaces" - an agent manager dashboard, a traditional VS Code style editor and deep integration with a browser via a new Chrome extension. This plays a similar role to Playwright MCP, allowing the agent to directly test the web applications it is building.

Antigravity also introduces the concept of "artifacts" (confusingly not at all similar to Claude Artifacts). These are Markdown documents that are automatically created as the agent works, for things like task lists, implementation plans and a "walkthrough" report showing what the agent has done once it finishes.

I tried using Antigravity to help add support for Gemini 3 to my llm-gemini plugin.

It worked OK at first, then gave me an "Agent execution terminated due to model provider overload. Please try again later" error. I'm going to give it another go after they've had a chance to work through those initial launch jitters.

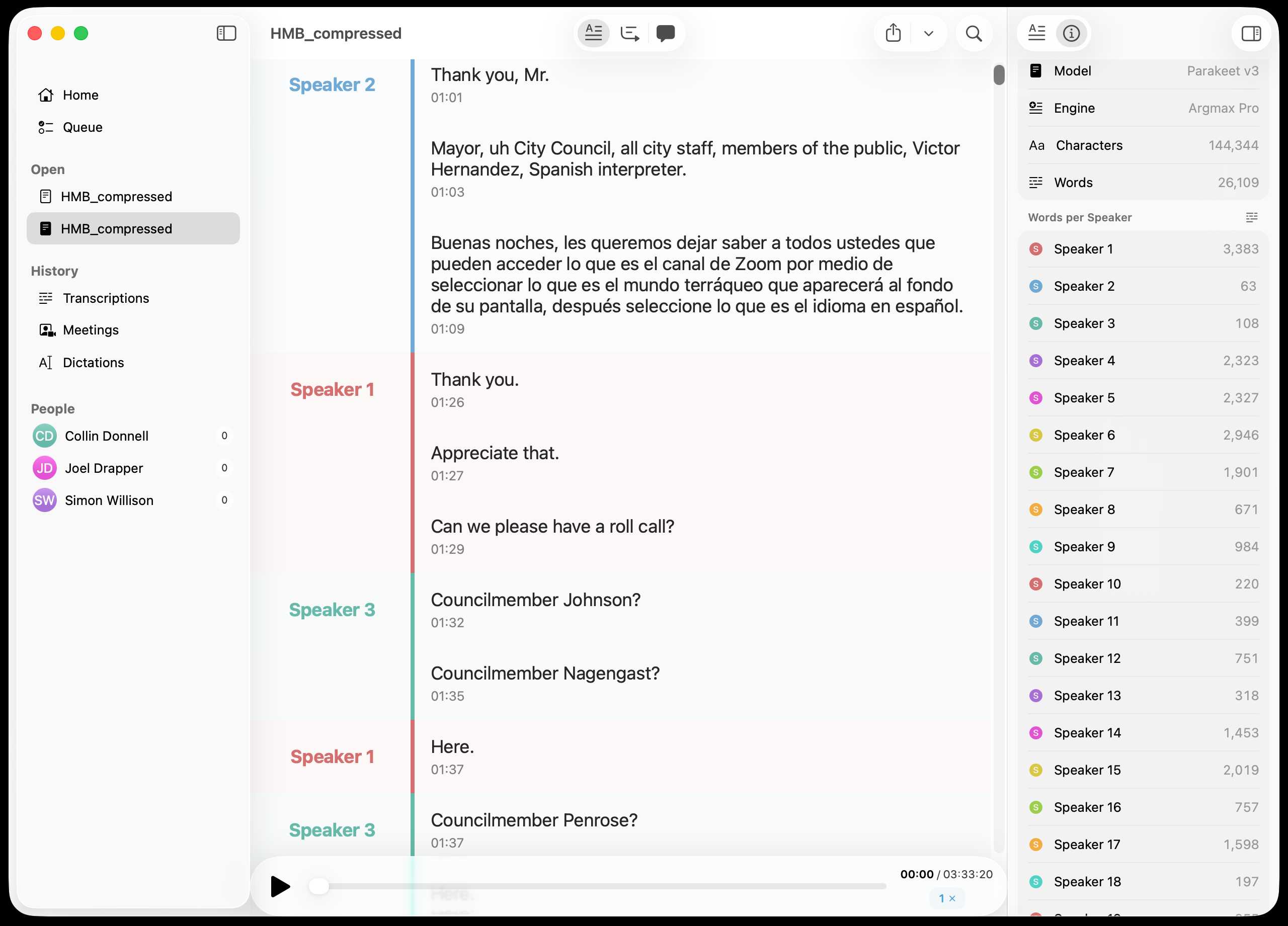

Inspired by this conversation on Hacker News I decided to upgrade MacWhisper to try out NVIDIA Parakeet and the new Automatic Speaker Recognition feature.

It appears to work really well! Here's the result against this 39.7MB m4a file from my Gemini 3 Pro write-up this morning:

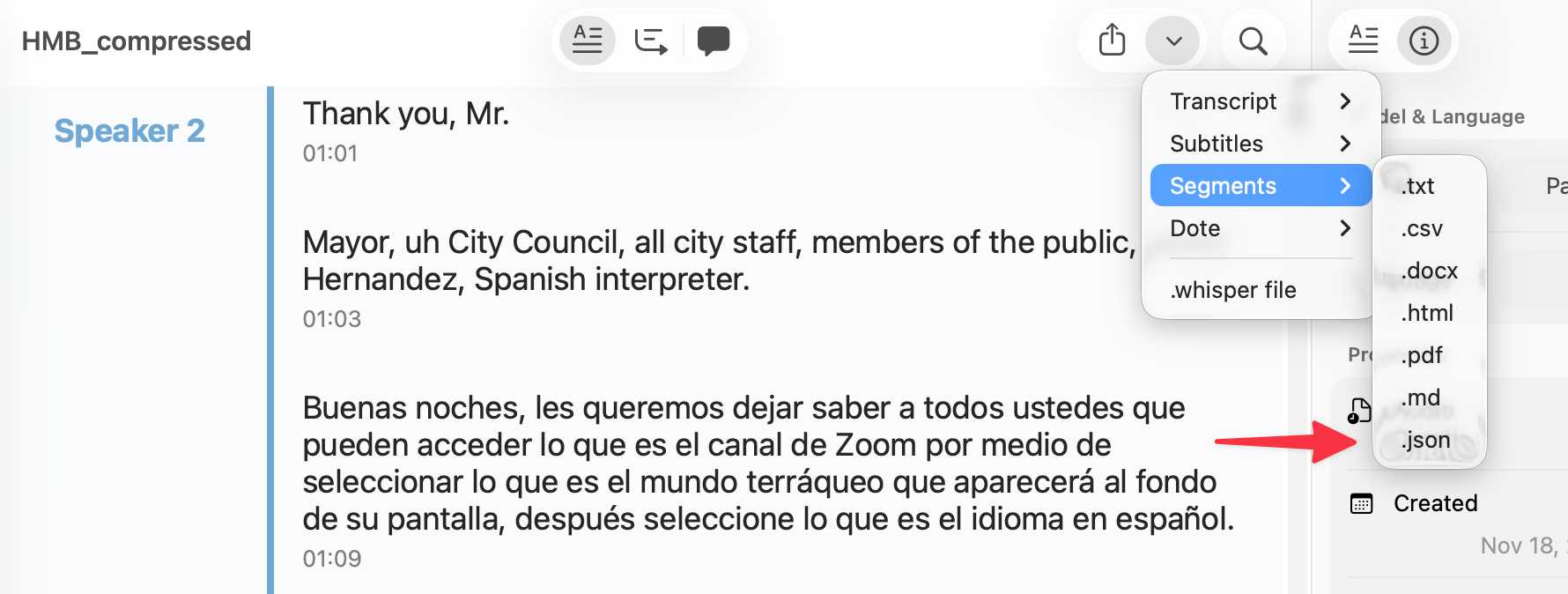

You can export the transcript with both timestamps and speaker names using the Share -> Segments -> .json menu item.

Here's the resulting JSON.
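Here's a minimal sketch of how an export like that could be turned into a readable transcript. I'm assuming a hypothetical layout here, a list of segments with `start` (in seconds), `speaker` and `text` keys. That is not MacWhisper's documented schema, so the key names would need adjusting to match the real file. The sample data is made up for illustration.

```python
import json

# ASSUMPTION: hypothetical segment layout, not MacWhisper's documented schema.
# The sample content below is invented purely for illustration.
SAMPLE = json.loads("""
[
  {"start": 0.0, "end": 4.2, "speaker": "Speaker 1", "text": "Hello and welcome."},
  {"start": 4.2, "end": 9.8, "speaker": "Speaker 1", "text": "Today we look at Gemini 3."},
  {"start": 9.8, "end": 12.5, "speaker": "Speaker 2", "text": "Sounds good."}
]
""")


def format_timestamp(seconds):
    """Render a number of seconds as HH:MM:SS, truncating fractions."""
    total = int(seconds)
    hours, rem = divmod(total, 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def merge_by_speaker(segments):
    """Group consecutive segments from the same speaker into one turn."""
    turns = []
    for seg in segments:
        text = seg["text"].strip()
        if turns and turns[-1]["speaker"] == seg["speaker"]:
            # Same speaker is still talking: extend the current turn.
            turns[-1]["text"] += " " + text
        else:
            turns.append(
                {"start": seg["start"], "speaker": seg["speaker"], "text": text}
            )
    return turns


def render(segments):
    """Return one '[HH:MM:SS] Speaker: text' line per speaker turn."""
    return "\n".join(
        f"[{format_timestamp(t['start'])}] {t['speaker']}: {t['text']}"
        for t in merge_by_speaker(segments)
    )


if __name__ == "__main__":
    print(render(SAMPLE))
```

Merging consecutive segments from the same speaker keeps the output closer to how a conversation reads, at the cost of losing the finer-grained per-segment timestamps.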

llm-gemini 0.27. New release of my LLM plugin for Google's Gemini models:

- Support for nested schemas in Pydantic, thanks Bill Pugh. #107

- Now tests against Python 3.14.

- Support for YouTube URLs as attachments and the media_resolution option. Thanks, Duane Milne. #112

- New model: gemini-3-pro-preview. #113

The YouTube URL feature is particularly neat, taking advantage of this API feature. I used it against the Google Antigravity launch video:

llm -m gemini-3-pro-preview \
  -a 'https://www.youtube.com/watch?v=nTOVIGsqCuY' \
  'Summary, with detailed notes about what this thing is and how it differs from regular VS Code, then a complete detailed transcript with timestamps'

Here's the result. A spot-check of the timestamps against points in the video shows them to be exactly right.
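A spot-check like that is easier with links that jump straight to each timestamp. This is a rough sketch that assumes the transcript uses `MM:SS` or `HH:MM:SS` timestamps (I haven't confirmed the exact format the model emits) and relies on YouTube's `t` query parameter for the start offset. The `deep_link` helper and the example timestamps are my own illustration, not part of llm-gemini.

```python
from urllib.parse import parse_qs, urlencode, urlparse, urlunparse

VIDEO_URL = "https://www.youtube.com/watch?v=nTOVIGsqCuY"


def timestamp_to_seconds(stamp):
    """Convert 'SS', 'MM:SS' or 'HH:MM:SS' into a number of seconds."""
    seconds = 0
    for part in stamp.strip().split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


def deep_link(video_url, stamp):
    """Return video_url with a t=<seconds>s parameter for the timestamp."""
    parts = urlparse(video_url)
    query = parse_qs(parts.query)
    query["t"] = [f"{timestamp_to_seconds(stamp)}s"]
    return urlunparse(parts._replace(query=urlencode(query, doseq=True)))


if __name__ == "__main__":
    # ASSUMPTION: illustrative timestamps, not taken from the real transcript.
    for stamp in ["00:45", "01:30", "1:02:03"]:
        print(stamp, deep_link(VIDEO_URL, stamp))
```

Opening a handful of those links and comparing what is being said against the transcript line is enough to catch timestamps that drift.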