Tuesday, 6th May 2025

What people get wrong about the leading Chinese open models: Adoption and censorship (via) While I've been enjoying trying out Alibaba's Qwen 3 a lot recently, Nathan Lambert focuses on the elephant in the room:

People vastly underestimate the number of companies that cannot use Qwen and DeepSeek open models because they come from China. This includes on-premise solutions built by people who know the fact that model weights alone cannot reveal anything to their creators.

The root problem here is the closed nature of the training data. Even if a model is open weights, it's not possible to conclusively determine that it couldn't add backdoors to generated code or trigger "indirect influence of Chinese values on Western business systems". Qwen 3 certainly has baked in opinions about the status of Taiwan!

Nathan sees this as an opportunity for other liberally licensed models, including his own team's OLMo:

This gap provides a big opportunity for Western AI labs to lead in open models. Without DeepSeek and Qwen, the top tier of models we’re left with are Llama and Gemma, which both have very restrictive licenses when compared to their Chinese counterparts. These licenses are proportionally likely to block an IT department from approving a model.

This takes us to the middle tier of permissively licensed, open weight models who actually have a huge opportunity ahead of them: OLMo, of course, I’m biased, Microsoft with Phi, Mistral, IBM (!??!), and some other smaller companies to fill out the long tail.

That's it. I've had it. I'm putting my foot down on this craziness.

1. Every reporter submitting security reports on #Hackerone for #curl now needs to answer this question:

"Did you use an AI to find the problem or generate this submission?"

(and if they do select it, they can expect a stream of proof of actual intelligence follow-up questions)

2. We now ban every reporter INSTANTLY who submits reports we deem AI slop. A threshold has been reached. We are effectively being DDoSed. If we could, we would charge them for this waste of our time.

We still have not seen a single valid security report done with AI help.

Gemini 2.5 Pro Preview: even better coding performance. New Gemini 2.5 Pro "Google I/O edition" model, released a few weeks ahead of that annual developer conference.

They claim even better frontend coding performance, highlighting their #1 ranking on the WebDev Arena leaderboard, notable because it knocked Claude 3.7 Sonnet from that top spot. They also highlight "state-of-the-art video understanding" with an 84.8% score on the new-to-me VideoMME benchmark.

I rushed out a new release of llm-gemini adding support for the new gemini-2.5-pro-preview-05-06 model ID, but it turns out if I had read to the end of their post I should not have bothered:

For developers already using Gemini 2.5 Pro, this new version will not only improve coding performance but will also address key developer feedback including reducing errors in function calling and improving function calling trigger rates. The previous iteration (03-25) now points to the most recent version (05-06), so no action is required to use the improved model

I'm not a fan of this idea that a model ID with a clear date in it like gemini-2.5-pro-preview-03-25 can suddenly start pointing to a brand new model!

I used the new Gemini 2.5 Pro to summarize the conversation about itself on Hacker News using the latest version of my hn-summary.sh script:

hn-summary.sh 43906018 -m gemini-2.5-pro-preview-05-06

Here's what I got back - 30,408 input tokens, 8,535 output tokens and 3,980 thinking tokens for a total cost of 16.316 cents.
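The cost figure can be reproduced with a little arithmetic. This is a minimal sketch, not the calculation my tooling actually runs: it assumes list prices of $1.25 per million input tokens and $10 per million output tokens (neither price is stated in this post), and it assumes thinking tokens are billed at the output rate. The function name `cost_in_cents` is made up for illustration.

```python
# Assumed prices in US dollars per million tokens (not stated in the post).
INPUT_USD_PER_MILLION = 1.25
OUTPUT_USD_PER_MILLION = 10.00


def cost_in_cents(input_tokens: int, output_tokens: int, thinking_tokens: int) -> float:
    """Estimate cost in US cents, billing thinking tokens at the output rate."""
    input_usd = input_tokens * INPUT_USD_PER_MILLION / 1_000_000
    output_usd = (output_tokens + thinking_tokens) * OUTPUT_USD_PER_MILLION / 1_000_000
    return (input_usd + output_usd) * 100


# Token counts from the Hacker News summary run above.
print(round(cost_in_cents(30_408, 8_535, 3_980), 4))
```

Under those assumptions the input side contributes about 3.8 cents and the output plus thinking side about 12.5 cents, so the output length dominates the bill.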

8,535 output tokens is a lot. My system prompt includes the instruction to "Go long" - this is the first time I've seen a model really take that to heart. For comparison, here's the result of a similar experiment against the previous version of Gemini 2.5 Pro two months ago.

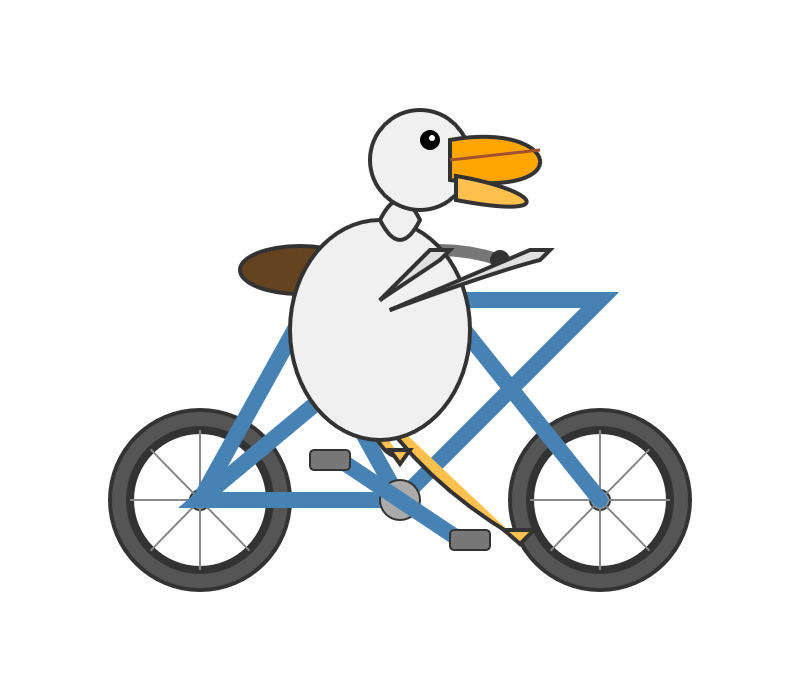

Update: The one time I forget to run my "Generate an SVG of a pelican riding a bicycle" test is the time that the model turns out to produce one of the best results I've seen yet!

Here's the transcript - 11 input tokens and 3,281 output tokens and 1,558 thinking tokens = 4.8404 cents.

I asked Gemini to describe that image:

llm -m gemini-2.5-pro-preview-05-06 \
  -a https://static.simonwillison.net/static/2025/gemini-latest-pelican.jpg \
  'describe image for alt text'

Here's what I got back. Gemini thought it had drawn a duck:

A cartoon illustration of a white duck with an orange beak riding a blue bicycle.

The duck has a large, oval white body and a smaller round head with a black dot eye. Its thin black wings act as arms, gripping the blue handlebars. One yellow-orange leg is visible, bent and pushing a grey pedal.

The bicycle has a blue frame with a distinctive cross-brace, a brown oval seat, and dark grey wheels with silver spokes. The entire image is set against a plain white background.

Saying “hi” to Microsoft’s Phi-4-reasoning

Microsoft released a new sub-family of models a few days ago: Phi-4 reasoning. They introduced them in this blog post celebrating a year since the release of Phi-3:

[... 1,498 words]

What’s the carbon footprint of using ChatGPT? Inspired by Andy Masley's cheat sheet (which I linked to last week), Hannah Ritchie explores some of the numbers herself.

Hannah is Head of Research at Our World in Data, a Senior Researcher at the University of Oxford (bio) and maintains a prolific newsletter on energy and sustainability, so she has a lot more credibility in this area than Andy or myself!

My sense is that a lot of climate-conscious people feel guilty about using ChatGPT. In fact it goes further: I think many people judge others for using it, because of the perceived environmental impact. [...]

But after looking at the data on individual use of LLMs, I have stopped worrying about it and I think you should too.

The inevitable counter-argument to the idea that the impact of ChatGPT usage by an individual is negligible is that aggregate user demand is still the thing that drives these enormous investments in huge data centers and new energy sources to power them. Hannah acknowledges that:

I am not saying that AI energy demand, on aggregate, is not a problem. It is, even if it’s “just” of a similar magnitude to the other sectors that we need to electrify, such as cars, heating, or parts of industry. It’s just that individuals querying chatbots is a relatively small part of AI's total energy consumption. That’s how both of these facts can be true at the same time.

Meanwhile Arthur Clune runs the numbers on the potential energy impact of some much more severe usage patterns.

Developers burning through $100 of tokens per day (not impossible given some of the LLM-heavy development patterns that are beginning to emerge) could end the year with the equivalent of a short haul flight or 600 mile car journey.

In the panopticon scenario, where all 10 million security cameras in the UK analyze video through a vision LLM at one frame per second, Arthur estimates we would need to duplicate the total energy usage of Birmingham, UK - the output of a 1GW nuclear plant.

Let's not build that panopticon!
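As a rough sanity check on the panopticon estimate, here is the per-frame energy budget those numbers imply. This is a back-of-envelope sketch, not a figure Arthur states: it assumes the entire 1GW goes to analyzing frames and that every camera runs continuously.

```python
# Figures quoted in the panopticon scenario above.
CAMERAS = 10_000_000
FRAMES_PER_CAMERA_PER_SECOND = 1
PLANT_OUTPUT_WATTS = 1_000_000_000  # 1 GW

# Assumption: all of the plant's output is spent on frame analysis.
frames_per_second = CAMERAS * FRAMES_PER_CAMERA_PER_SECOND
joules_per_frame = PLANT_OUTPUT_WATTS / frames_per_second  # watts are joules per second
watt_hours_per_frame = joules_per_frame / 3600  # 3,600 joules in one watt-hour

print(f"{joules_per_frame:.0f} J per frame")
print(f"{watt_hours_per_frame:.4f} Wh per frame")
```

That works out to 100 joules, or roughly 0.03 watt-hours, per analyzed frame under these assumptions.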