Tuesday, 10th June 2025

Magistral — the first reasoning model by Mistral AI. Mistral's first reasoning model is out today, in two sizes. There's a 24B Apache 2 licensed open-weights model called Magistral Small (actually Magistral-Small-2506), and a larger API-only model called Magistral Medium.

Magistral Small is available as mistralai/Magistral-Small-2506 on Hugging Face. From that model card:

Context Window: A 128k context window, but performance might degrade past 40k. Hence we recommend setting the maximum model length to 40k.

Mistral also released an official GGUF version, Magistral-Small-2506_gguf, which I ran successfully using Ollama like this:

ollama pull hf.co/mistralai/Magistral-Small-2506_gguf:Q8_0

That fetched a 25GB file. I ran prompts using a chat session with llm-ollama like this:

llm chat -m hf.co/mistralai/Magistral-Small-2506_gguf:Q8_0
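The model card's 40k recommendation could also be applied when calling Ollama's HTTP API directly. This is a minimal sketch, assuming Ollama is running locally on its default port and that `num_ctx` is the option controlling the context length; the 40,000 figure is my reading of "40k", and `build_payload` and `generate` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "hf.co/mistralai/Magistral-Small-2506_gguf:Q8_0"


def build_payload(prompt: str, num_ctx: int = 40_000) -> dict:
    """Build a non-streaming /api/generate request with the context capped at num_ctx."""
    return {
        "model": MODEL,
        "prompt": prompt,
        "stream": False,
        "options": {"num_ctx": num_ctx},
    }


def generate(prompt: str) -> str:
    """POST the payload to the local Ollama server and return the generated text."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(build_payload(prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

Calling `generate("Generate an SVG of a pelican riding a bicycle")` would then send the prompt to the Q8_0 model pulled above.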

Here's what I got for "Generate an SVG of a pelican riding a bicycle" (transcript here):

It's disappointing that the GGUF doesn't support function calling yet - hopefully a community variant can add that, since it's one of the best ways I know of to unlock the potential of these reasoning models.

I just noticed that Ollama have their own Magistral model too, which can be accessed using:

ollama pull magistral:latest

That gets you a 14GB q4_K_M quantization - other options can be found in the full list of Ollama magistral tags.

One thing that caught my eye in the Magistral announcement:

Legal, finance, healthcare, and government professionals get traceable reasoning that meets compliance requirements. Every conclusion can be traced back through its logical steps, providing auditability for high-stakes environments with domain-specialized AI.

I guess this means the reasoning traces are fully visible and not redacted in any way - interesting to see Mistral trying to turn that into a feature that's attractive to the business clients they are most interested in appealing to.

Also from that announcement:

Our early tests indicated that Magistral is an excellent creative companion. We highly recommend it for creative writing and storytelling, with the model capable of producing coherent or — if needed — delightfully eccentric copy.

I haven't seen a reasoning model promoted for creative writing in this way before.

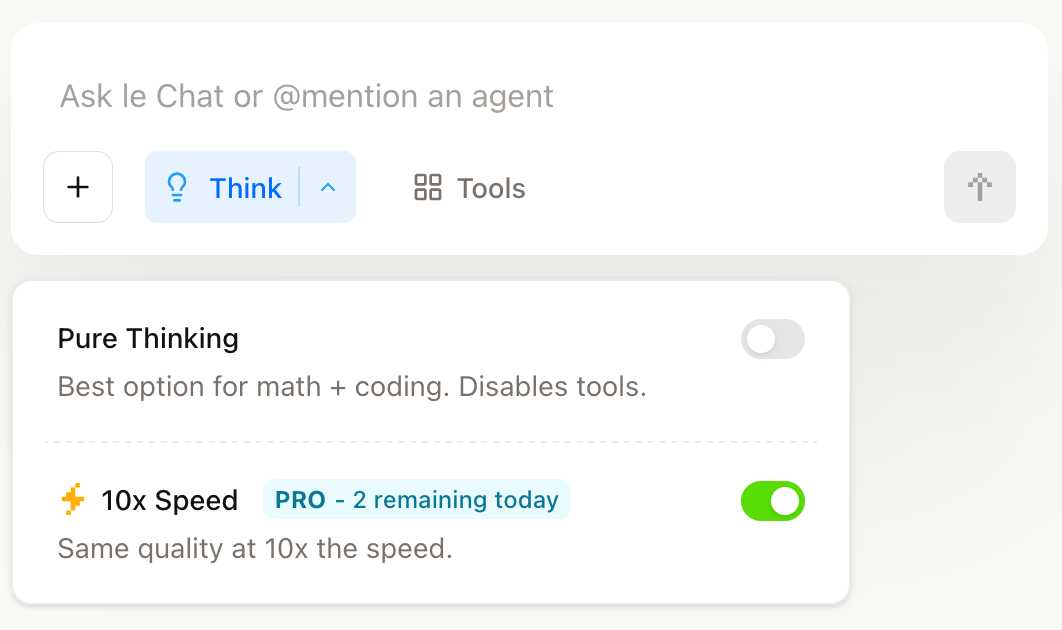

You can try out Magistral Medium by selecting the new "Thinking" option in Mistral's Le Chat.

They have options for "Pure Thinking" and a separate option for "10x speed", which runs Magistral Medium at 10x the speed using Cerebras.

The new models are also available through the Mistral API. You can access them by installing llm-mistral and running llm mistral refresh to refresh the list of available models, then:

llm -m mistral/magistral-medium-latest \
  'Generate an SVG of a pelican riding a bicycle'

Here's that transcript. At 13 input and 1,236 output tokens that cost me 0.62 cents - just over half a cent.
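The arithmetic behind that figure can be sketched like this. The per-million-token prices are an assumption on my part ($2 input, $5 output); they are not stated in this post, so check them against Mistral's current pricing before relying on them.

```python
from decimal import Decimal

# Assumed prices in USD per million tokens; not taken from the post.
INPUT_PRICE = Decimal("2")
OUTPUT_PRICE = Decimal("5")


def cost_in_cents(input_tokens, output_tokens,
                  input_price=INPUT_PRICE, output_price=OUTPUT_PRICE):
    """Return the cost of one prompt in US cents."""
    dollars = (input_tokens * input_price
               + output_tokens * output_price) / Decimal(1_000_000)
    return dollars * 100


cents = cost_in_cents(13, 1236)
print(f"{cents:.2f} cents")
```

Under those assumed prices, 13 input and 1,236 output tokens come to 0.62 cents, matching the figure quoted above.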

OpenAI just dropped the price of their o3 model by 80% - from $10/million input tokens and $40/million output tokens to just $2/million and $8/million for the very same model. This is in advance of the release of o3-pro which apparently is coming later today (update: here it is).

This is a pretty huge shake-up in LLM pricing. o3 is now priced the same as GPT-4.1, and slightly less than GPT-4o ($2.50/$10). It’s also less than Anthropic’s Claude Sonnet 4 ($3/$15) and Opus 4 ($15/$75) and sits in between Google’s Gemini 2.5 Pro for >200,000 tokens ($2.50/$15) and 2.5 Pro for <200,000 ($1.25/$10).
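Here's a small sketch that lines those numbers up. Every price is copied from the paragraph above (USD per million input/output tokens); the sort order is just one way to view them, and the "in between" comparison with Gemini 2.5 Pro holds for the input price.

```python
from decimal import Decimal as D

# (input, output) prices in USD per million tokens, as quoted above.
PRICES = {
    "o3": (D("2.00"), D("8.00")),
    "GPT-4.1": (D("2.00"), D("8.00")),
    "GPT-4o": (D("2.50"), D("10.00")),
    "Claude Sonnet 4": (D("3.00"), D("15.00")),
    "Claude Opus 4": (D("15.00"), D("75.00")),
    "Gemini 2.5 Pro (>200k)": (D("2.50"), D("15.00")),
    "Gemini 2.5 Pro (<200k)": (D("1.25"), D("10.00")),
}

# Sort by input price first, then output price.
ranked = sorted(PRICES.items(), key=lambda item: item[1])

for name, (input_price, output_price) in ranked:
    print(f"{name:<24} ${input_price:>5} in  ${output_price:>5} out")
```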

I’ve updated my llm-prices.com pricing calculator with the new rate.

How have they dropped the price so much? OpenAI's Adam Groth credits ongoing optimization work:

thanks to the engineers optimizing inferencing.

o3-pro. OpenAI released o3-pro today, which they describe as a "version of o3 with more compute for better responses".

It's only available via the newer Responses API. I've added it to my llm-openai-plugin plugin which uses that new API, so you can try it out like this:

llm install -U llm-openai-plugin

llm -m openai/o3-pro "Generate an SVG of a pelican riding a bicycle"

It's slow - generating this pelican took 124 seconds! OpenAI suggest using their background mode for o3-pro prompts, which I haven't tried myself yet.
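For reference, background mode with the official OpenAI Python SDK might look roughly like this. It's an untested sketch: `run_in_background` is a hypothetical helper, the five-second polling interval is arbitrary, and I'm assuming the `background=True` parameter and the `queued`/`in_progress` statuses behave as described in OpenAI's Responses API documentation.

```python
import time

# Statuses that mean the background job has not finished yet.
PENDING = {"queued", "in_progress"}


def run_in_background(client, prompt, model="o3-pro",
                      poll_seconds=5.0, sleep=time.sleep):
    """Start a background response, then poll until it leaves the pending states."""
    response = client.responses.create(model=model, input=prompt, background=True)
    while response.status in PENDING:
        sleep(poll_seconds)
        response = client.responses.retrieve(response.id)
    return response


# Usage, assuming the openai package is installed and OPENAI_API_KEY is set:
#   from openai import OpenAI
#   result = run_in_background(
#       OpenAI(), "Generate an SVG of a pelican riding a bicycle"
#   )
#   print(result.status, result.output_text)
```

Passing the client in as an argument keeps the helper easy to exercise with a stub, which matters for a model that can take minutes per request.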

o3-pro is priced at $20/million input tokens and $80/million output tokens - 10x the price of regular o3 after its 80% price drop this morning.
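A quick check of those ratios, using only the prices quoted in these posts (USD per million tokens, input then output):

```python
from fractions import Fraction

OLD_O3 = (10, 40)  # before the price drop
NEW_O3 = (2, 8)    # after the price drop
O3_PRO = (20, 80)


def percent_drop(old, new):
    """Percentage reduction from old to new."""
    return Fraction(old - new, old) * 100


def multiple(a, b):
    """How many times larger a is than b."""
    return Fraction(a, b)


for label, old, new, pro in zip(("input", "output"), OLD_O3, NEW_O3, O3_PRO):
    print(
        f"{label}: o3 down {percent_drop(old, new)}%, "
        f"o3-pro is {multiple(pro, new)}x new o3 and {multiple(pro, old)}x old o3"
    )
```

So o3-pro is 10x the new o3 price, but only 2x what o3 itself cost before the drop.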

Ben Hylak had early access and published his notes so far in God is hungry for Context: First thoughts on o3 pro. It sounds like this model needs to be applied very thoughtfully. In comparison to o3:

It's smarter. much smarter.

But in order to see that, you need to give it a lot more context. and I'm running out of context. [...]

My co-founder Alexis and I took the time to assemble a history of all of our past planning meetings at Raindrop, all of our goals, even record voice memos: and then asked o3-pro to come up with a plan.

We were blown away; it spit out the exact kind of concrete plan and analysis I've always wanted an LLM to create --- complete with target metrics, timelines, what to prioritize, and strict instructions on what to absolutely cut.

The plan o3 gave us was plausible, reasonable; but the plan o3 Pro gave us was specific and rooted enough that it actually changed how we are thinking about our future.

This is hard to capture in an eval.

It sounds to me like o3-pro works best when combined with tools. I don't have tool support in llm-openai-plugin yet; here's the relevant issue.

AI-assisted coding for teams that can’t get away with vibes. This excellent piece by Atharva Raykar offers a bunch of astute observations on AI-assisted development that I haven't seen written down elsewhere.

Building with AI is fast. The gains in velocity are important, because when harnessed correctly, it allows teams to tighten feedback loops with users faster and make better products.

Yet, AI tools are tricky to use. Hold it wrong, and you can generate underwhelming results, worse still, slow down your velocity by drowning your project in slop and technical debt.

Atharva notes that AI is a multiplier: the more expertise you have in software engineering, the better the results you can get from LLMs. Furthermore, what helps the human helps the AI.

This means good test coverage, automatic linting, continuous integration and deployment, good documentation practices and "clearly defined features, broken down into multiple small story cards".

If a team has all of this stuff in place, AI coding assistants will be able to operate more reliably and collaborate more effectively with their human overseers.

I enjoyed his closing thoughts about how heavier reliance on LLMs changes our craft:

Firstly, It’s less valuable to spend too much time looking for and building sophisticated abstractions. DRY is useful for ensuring patterns in the code don’t go out of sync, but there are costs to implementing and maintaining an abstraction to handle changing requirements. LLMs make some repetition palatable and allow you to wait a bit more and avoid premature abstraction.

Redoing work is now extremely cheap. Code in the small is less important than structural patterns and organisation of the code in the large. You can also build lots of prototypes to test an idea out. For this, vibe-coding is great, as long as the prototype is thrown away and rewritten properly later. [...]

Tests are non-negotiable, and AI removes all excuses to not write them because of how fast they can belt them out. But always review the assertions!
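To illustrate why the assertions deserve review, here's a hypothetical example of my own (not from Atharva's piece): two tests for a made-up `slugify` function. Both pass, but only the second would catch a broken implementation.

```python
import re


def slugify(title: str) -> str:
    """Lowercase the title and join runs of letters and digits with hyphens."""
    return "-".join(re.findall(r"[a-z0-9]+", title.lower()))


def test_slugify_weak():
    # Passes for almost any implementation: it only checks the return type.
    assert isinstance(slugify("Hello, World!"), str)


def test_slugify_meaningful():
    # Pins down exact behaviour, including punctuation and empty input.
    assert slugify("Hello, World!") == "hello-world"
    assert slugify("  AI-assisted   coding  ") == "ai-assisted-coding"
    assert slugify("") == ""


test_slugify_weak()
test_slugify_meaningful()
```

A generated test suite full of checks like the first one looks like coverage without verifying much, which is the kind of thing a human review of the assertions should catch.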

(People are often curious about how much energy a ChatGPT query uses; the average query uses about 0.34 watt-hours, about what an oven would use in a little over one second, or a high-efficiency lightbulb would use in a couple of minutes. It also uses about 0.000085 gallons of water; roughly one fifteenth of a teaspoon.)

— Sam Altman, The Gentle Singularity
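Those comparisons can be sanity-checked with a few lines of arithmetic. The oven and lightbulb wattages below are my own assumptions (the quote doesn't give them), and I'm assuming US gallons and US teaspoons.

```python
WH_PER_QUERY = 0.34           # watt-hours per query, from the quote
GALLONS_PER_QUERY = 0.000085  # gallons of water per query, from the quote

OVEN_WATTS = 1_000             # assumption: roughly a 1 kW oven
BULB_WATTS = 10                # assumption: a 10 W high-efficiency bulb
TEASPOONS_PER_US_GALLON = 768  # 128 fl oz per gallon x 6 teaspoons per fl oz

joules = WH_PER_QUERY * 3600  # 1 Wh = 3,600 J
oven_seconds = joules / OVEN_WATTS
bulb_minutes = joules / BULB_WATTS / 60
teaspoons = GALLONS_PER_QUERY * TEASPOONS_PER_US_GALLON

print(f"oven: {oven_seconds:.2f} seconds")
print(f"bulb: {bulb_minutes:.2f} minutes")
print(f"water: {teaspoons:.4f} teaspoons (about 1/{1 / teaspoons:.0f})")
```

With those assumed wattages the numbers work out to a little over one second of oven time, about two minutes of lightbulb time and roughly one fifteenth of a teaspoon, consistent with the quote.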