6 posts tagged “jules”

Jules is an asynchronous coding agent from Google.

2025

Code research projects with async coding agents like Claude Code and Codex

I’ve been experimenting with a pattern for LLM usage recently that’s working out really well: asynchronous code research tasks. Pick a research question, spin up an asynchronous coding agent and let it go and run some experiments and report back when it’s done.

[... 2,017 words]Embracing the parallel coding agent lifestyle

For a while now I’ve been hearing from engineers who run multiple coding agents at once—firing up several Claude Code or Codex CLI instances at the same time, sometimes in the same repo, sometimes against multiple checkouts or git worktrees.

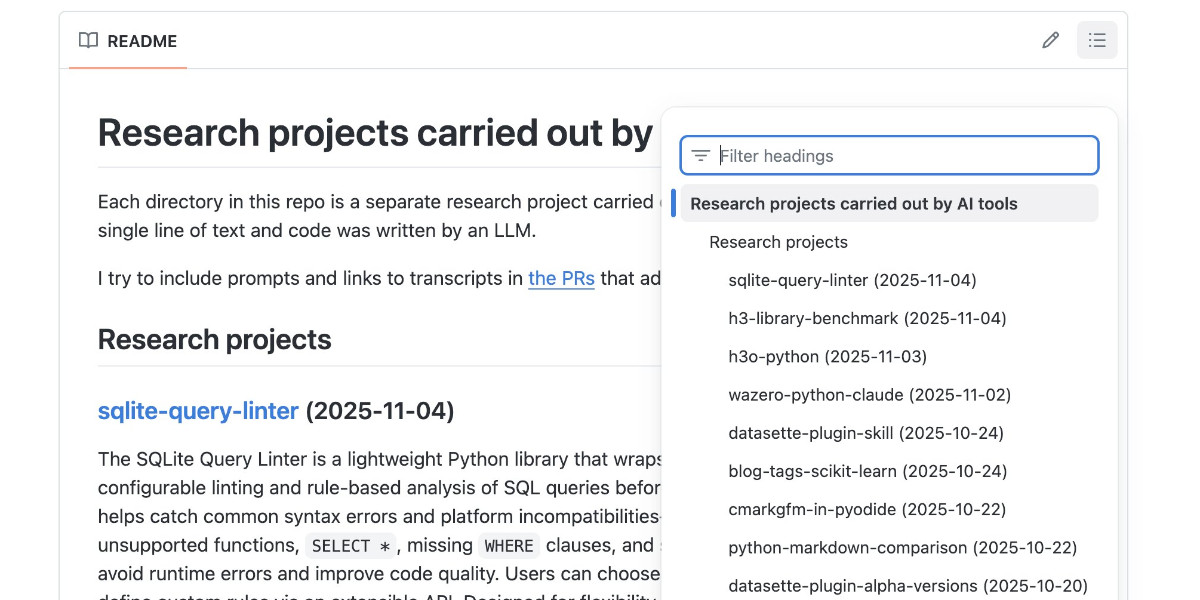

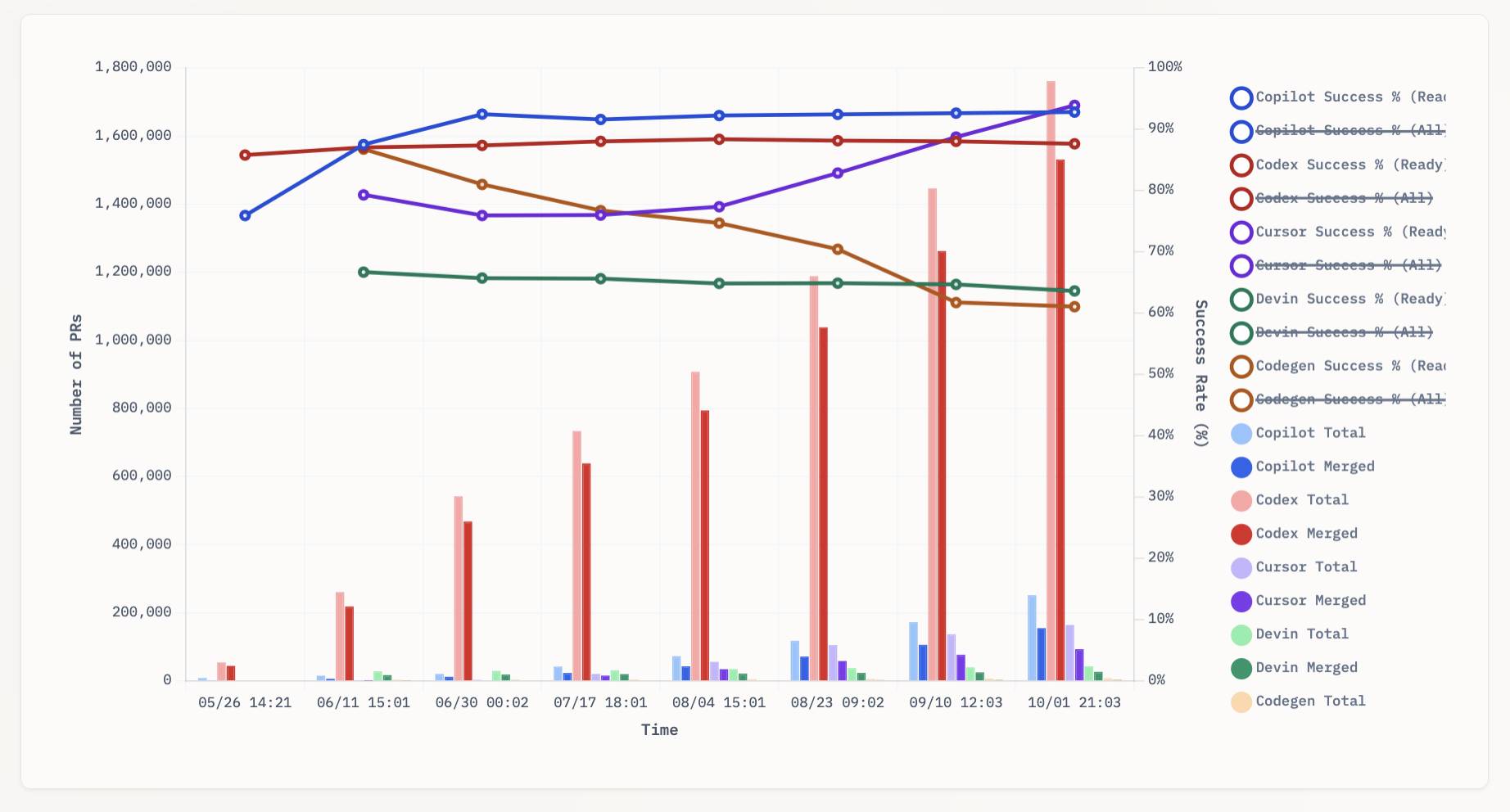

[... 1,275 words]aavetis/PRarena. Albert Avetisian runs this repository on GitHub which uses the Github Search API to track the number of PRs that can be credited to a collection of different coding agents. The repo runs this collect_data.py script every three hours using GitHub Actions to collect the data, then updates the PR Arena site with a visual leaderboard.

The result is this neat chart showing adoption of different agents over time, along with their PR success rate:

I found this today while trying to pull off the exact same trick myself! I got as far as creating the following table before finding Albert's work and abandoning my own project.

| Tool | Search term | Total PRs | Merged PRs | % merged | Earliest |

|---|---|---|---|---|---|

| Claude Code | is:pr in:body "Generated with Claude Code" |

146,000 | 123,000 | 84.2% | Feb 21st |

| GitHub Copilot | is:pr author:copilot-swe-agent[bot] |

247,000 | 152,000 | 61.5% | March 7th |

| Codex Cloud | is:pr in:body "chatgpt.com" label:codex |

1,900,000 | 1,600,000 | 84.2% | April 23rd |

| Google Jules | is:pr author:google-labs-jules[bot] |

35,400 | 27,800 | 78.5% | May 22nd |

(Those "earliest" links are a little questionable, I tried to filter out false positives and find the oldest one that appeared to really be from the agent in question.)

It looks like OpenAI's Codex Cloud is massively ahead of the competition right now in terms of numbers of PRs both opened and merged on GitHub.

Update: To clarify, these numbers are for the category of autonomous coding agents - those systems where you assign a cloud-based agent a task or issue and the output is a PR against your repository. They do not (and cannot) capture the popularity of many forms of AI tooling that don't result in an easily identifiable pull request.

Claude Code for example will be dramatically under-counted here because its version of an autonomous coding agent comes in the form of a somewhat obscure GitHub Actions workflow buried in the documentation.

Jules, our asynchronous coding agent, is now available for everyone (via) I wrote about the Jules beta back in May. Google's version of the OpenAI Codex PR-submitting hosted coding tool graduated from beta today.

I'm mainly linking to this now because I like the new term they are using in this blog entry: Asynchronous coding agent. I like it so much I gave it a tag.

I continue to avoid the term "agent" as infuriatingly vague, but I can grudgingly accept it when accompanied by a prefix that clarifies the type of agent we are talking about. "Asynchronous coding agent" feels just about obvious enough to me to be useful.

... I just ran a Google search for "asynchronous coding agent" -jules and came up with a few more notable examples of this name being used elsewhere:

- Introducing Open SWE: An Open-Source Asynchronous Coding Agent is an announcement from LangChain just this morning of their take on this pattern. They provide a hosted version (bring your own API keys) or you can run it yourself with their MIT licensed code.

- The press release for GitHub's own version of this GitHub Introduces Coding Agent For GitHub Copilot states that "GitHub Copilot now includes an asynchronous coding agent".

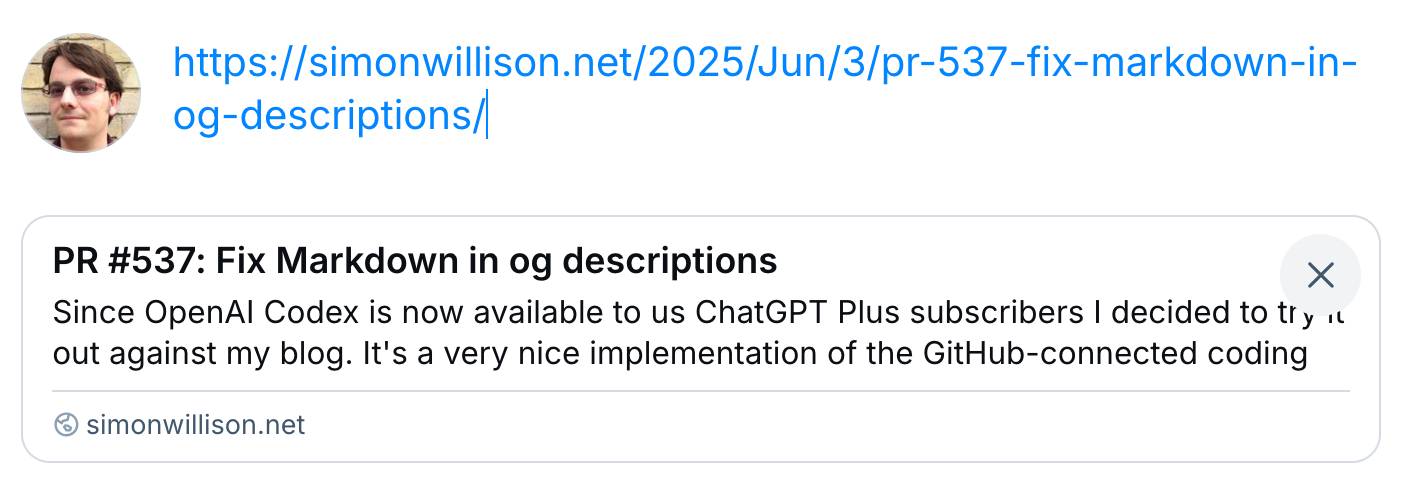

PR #537: Fix Markdown in og descriptions. Since OpenAI Codex is now available to us ChatGPT Plus subscribers I decided to try it out against my blog.

It's a very nice implementation of the GitHub-connected coding "agent" pattern, as also seen in Google's Jules and Microsoft's Copilot Coding Agent.

First I had to configure an environment for it. My Django blog uses PostgreSQL which isn't part of the default Codex container, so I had Claude Sonnet 4 help me come up with a startup recipe to get PostgreSQL working.

I attached my simonw/simonwillisonblog GitHub repo and used the following as the "setup script" for the environment:

# Install PostgreSQL

apt-get update && apt-get install -y postgresql postgresql-contrib

# Start PostgreSQL service

service postgresql start

# Create a test database and user

sudo -u postgres createdb simonwillisonblog

sudo -u postgres psql -c "CREATE USER testuser WITH PASSWORD 'testpass';"

sudo -u postgres psql -c "GRANT ALL PRIVILEGES ON DATABASE simonwillisonblog TO testuser;"

sudo -u postgres psql -c "ALTER USER testuser CREATEDB;"

pip install -r requirements.txt

I left "Agent internet access" off for reasons described previously.

Then I prompted Codex with the following (after one previous experimental task to check that it could run my tests):

Notes and blogmarks can both use Markdown.

They serve

meta property="og:description" content="tags on the page, but those tags include that raw Markdown which looks bad on social media previews.Fix it so they instead use just the text with markdown stripped - so probably render it to HTML and then strip the HTML tags.

Include passing tests.

Try to run the tests, the postgresql details are:

database = simonwillisonblog username = testuser password = testpass

Put those in the DATABASE_URL environment variable.

I left it to churn away for a few minutes (4m12s, to be precise) and it came back with a fix that edited two templates and added one more (passing) test. Here's that change in full.

And sure enough, the social media cards for my posts now look like this - no visible Markdown any more:

Jules. It seems like everyone is rolling out AI coding assistants that attach to your GitHub account and submit PRs for you right now. We had OpenAI Codex last week, today Microsoft announced GitHub Copilot coding agent (confusingly not the same thing as Copilot Workspace) and I found out just now that Google's Jules, announced in December, is now in a beta preview.

I'm flying home from PyCon but I managed to try out Jules from my phone. I took this GitHub issue thread, converted it to copy-pasteable Markdown with this tool and pasted it into Jules, with no further instructions.

Here's the resulting PR created from its branch. I haven't fully reviewed it yet and the tests aren't passing, so it's hard to evaluate from my phone how well it did. In a cursory first glance it looks like it's covered most of the requirements from the issue thread.

My habit of creating long issue threads where I talk to myself about the features I'm planning is proving to be a good fit for outsourcing implementation work to this new generation of coding assistants.