Wednesday, 13th August 2025

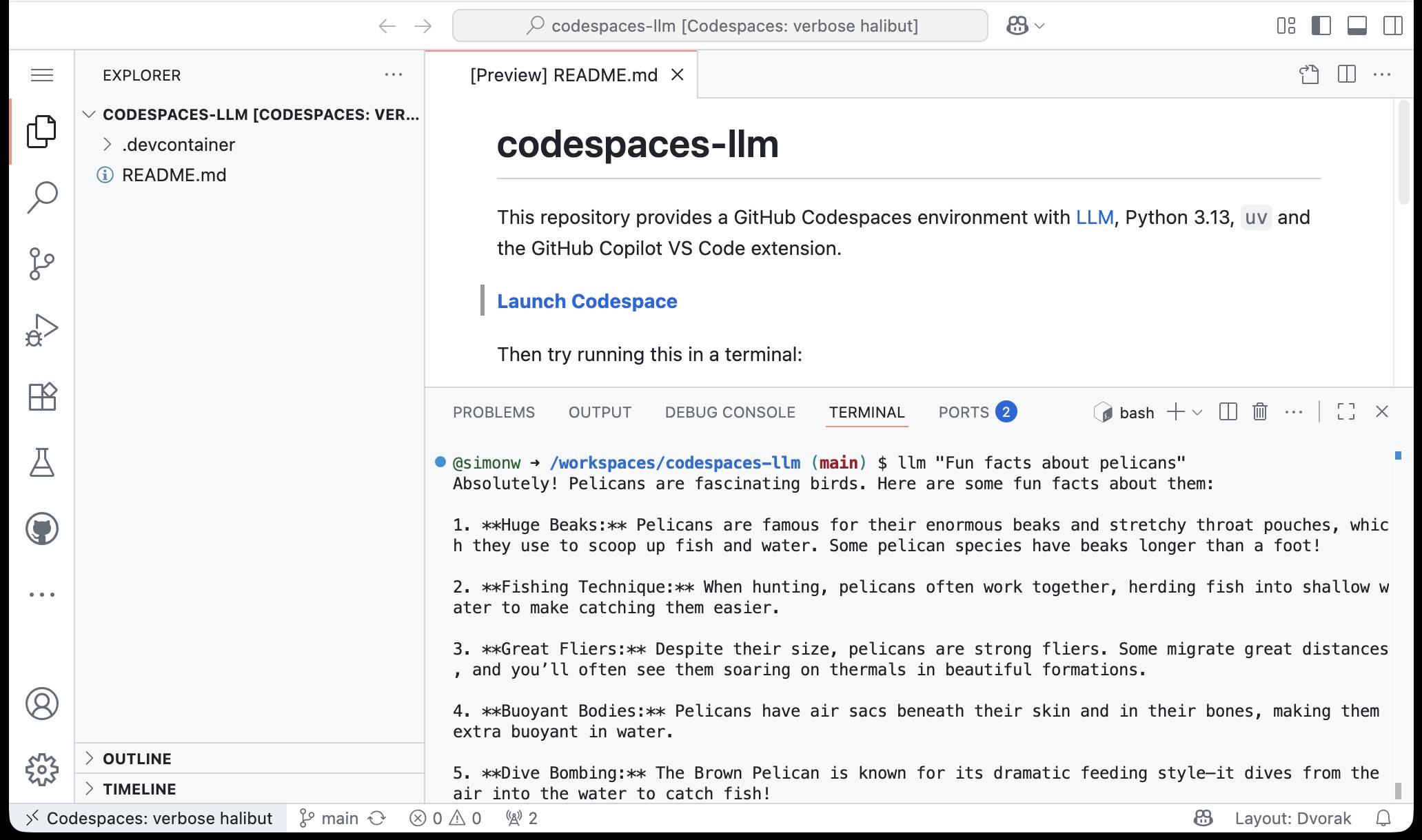

simonw/codespaces-llm. GitHub Codespaces provides full development environments in your browser, and is free to use with anyone with a GitHub account. Each environment has a full Linux container and a browser-based UI using VS Code.

I found out today that GitHub Codespaces come with a GITHUB_TOKEN environment variable... and that token works as an API key for accessing LLMs in the GitHub Models collection, which includes dozens of models from OpenAI, Microsoft, Mistral, xAI, DeepSeek, Meta and more.

Anthony Shaw's llm-github-models plugin for my LLM tool allows it to talk directly to GitHub Models. I filed a suggestion that it could pick up that GITHUB_TOKEN variable automatically and Anthony shipped v0.18.0 with that feature a few hours later.

... which means you can now run the following in any Python-enabled Codespaces container and get a working llm command:

pip install llm

llm install llm-github-models

llm models default github/gpt-4.1

llm "Fun facts about pelicans"

Setting the default model to github/gpt-4.1 means you get free (albeit rate-limited) access to that OpenAI model.

To save you from needing to even run that sequence of commands I've created a new GitHub repository, simonw/codespaces-llm, which pre-installs and runs those commands for you.

Anyone with a GitHub account can use this URL to launch a new Codespaces instance with a configured llm terminal command ready to use:

codespaces.new/simonw/codespaces-llm?quickstart=1

While putting this together I wrote up what I've learned about devcontainers so far as a TIL: Configuring GitHub Codespaces using devcontainers.

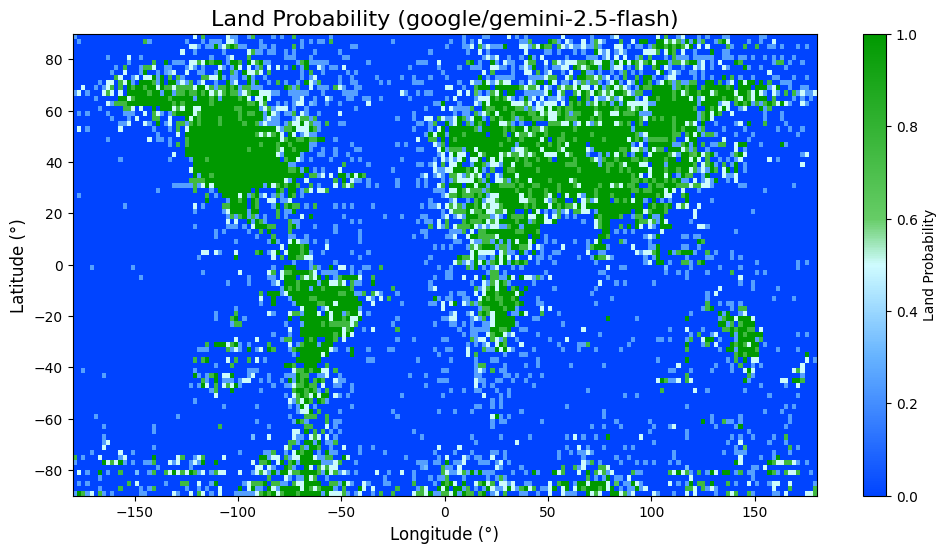

How Does A Blind Model See The Earth? (via) Fun, creative new micro-eval. Split the world into a sampled collection of latitude longitude points and for each one ask a model:

If this location is over land, say 'Land'. If this location is over water, say 'Water'. Do not say anything else.

Author henry goes a step further: for models that expose logprobs they use the relative probability scores of Land or Water to get a confidence level, for other models they prompt four times at temperature 1 to get a score.

And then.. they plot those probabilities on a chart! Here's Gemini 2.5 Flash (one of the better results):

This reminds me of my pelican riding a bicycle benchmark in that it gives you an instant visual representation that's very easy to compare between different models.

Screaming in the Cloud: AI’s Security Crisis: Why Your Assistant Might Betray You. I recorded this podcast conversation with Corey Quinn a few weeks ago:

On this episode of Screaming in the Cloud, Corey Quinn talks with Simon Willison, founder of Datasette and creator of LLM CLI about AI’s realities versus the hype. They dive into Simon’s “lethal trifecta” of AI security risks, his prediction of a major breach within six months, and real-world use cases of his open source tools, from investigative journalism to OSINT sleuthing. Simon shares grounded insights on coding with AI, the real environmental impact, AGI skepticism, and why human expertise still matters. A candid, hype-free take from someone who truly knows the space.

This was a really fun conversation - very high energy and we covered a lot of different topics. It's about a lot more than just LLM security.

pyx: a Python-native package registry, now in Beta (via) Since its first release, the single biggest question around the uv Python environment management tool has been around Astral's business model: Astral are a VC-backed company and at some point they need to start making real revenue.

Back in September Astral founder Charlie Marsh said the following:

I don't want to charge people money to use our tools, and I don't want to create an incentive structure whereby our open source offerings are competing with any commercial offerings (which is what you see with a lost of hosted-open-source-SaaS business models).

What I want to do is build software that vertically integrates with our open source tools, and sell that software to companies that are already using Ruff, uv, etc. Alternatives to things that companies already pay for today.

An example of what this might look like (we may not do this, but it's helpful to have a concrete example of the strategy) would be something like an enterprise-focused private package registry. [...]

It looks like those plans have become concrete now! From today's announcement:

TL;DR: pyx is a Python-native package registry --- and the first piece of the Astral platform, our next-generation infrastructure for the Python ecosystem.

We think of pyx as an optimized backend for uv: it's a package registry, but it also solves problems that go beyond the scope of a traditional "package registry", making your Python experience faster, more secure, and even GPU-aware, both for private packages and public sources (like PyPI and the PyTorch index).

pyx is live with our early partners, including Ramp, Intercom, and fal [...]

This looks like a sensible direction to me, and one that stays true to Charlie's promises to carefully design the incentive structure to avoid corrupting the core open source project that the Python community is coming to depend on.