Saturday, 7th December 2024

A test of how seriously your firm is taking AI: when o-1 (& the new Gemini) came out this week, were there assigned folks who immediately ran the model through internal, validated, firm-specific benchmarks to see how useful it was? Did you update any plans or goals as a result?

Or do you not have people (including non-technical people) assigned to test the new models? No internal benchmarks? No perspective on how AI will impact your business that you keep up-to-date?

No one is going to be doing this for organizations, you need to do it yourself.

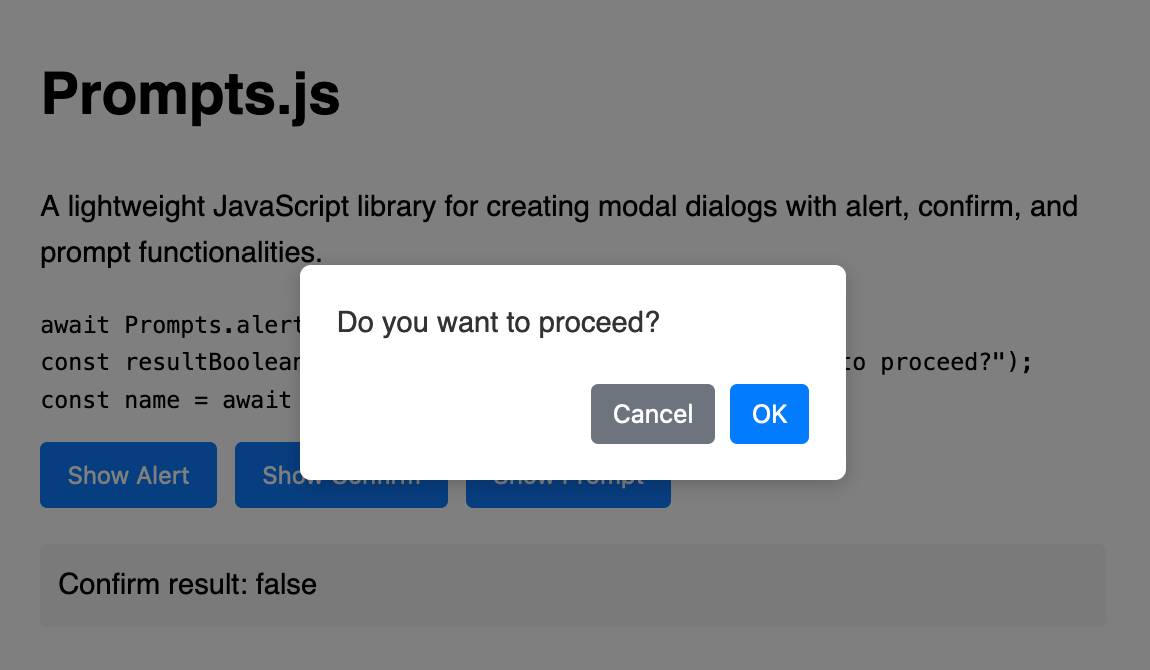

Prompts.js

I’ve been putting the new o1 model from OpenAI through its paces, in particular for code. I’m very impressed—it feels like it’s giving me a similar code quality to Claude 3.5 Sonnet, at least for Python and JavaScript and Bash... but it’s returning output noticeably faster.

[... 1,119 words]

Writing down (and searching through) every UUID

Nolen Royalty built everyuuid.com, and this write-up of how he built it is utterly delightful.

First challenge: infinite scroll.

Browsers do not want to render a window that is over a trillion trillion pixels high, so I needed to handle scrolling and rendering on my own.

That means implementing hot keys and mouse wheel support and custom scroll bars with animation... mostly implemented with the help of Claude.
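Here's a minimal Python sketch of the underlying idea, not Nolen's actual code: keep the scroll position as a plain integer row index and only materialize the handful of rows that are on screen. The names `index_to_uuid`, `visible_rows` and `scroll_by` are made up for illustration, and the bit layout assumes the standard UUIDv4 format (122 free bits plus fixed version and variant bits).

```python
import uuid

TOTAL = 2 ** 122  # a v4 UUID has 122 free bits; the other 6 are fixed


def index_to_uuid(index: int) -> uuid.UUID:
    """Turn a row index in [0, 2**122) into a valid v4 UUID."""
    if not 0 <= index < TOTAL:
        raise ValueError("index out of range")
    high = index >> 74              # top 48 bits
    mid = (index >> 62) & 0xFFF     # next 12 bits
    low = index & ((1 << 62) - 1)   # bottom 62 bits
    # Re-insert the fixed version nibble (4) and variant bits (binary 10).
    value = (high << 80) | (0x4 << 76) | (mid << 64) | (0b10 << 62) | low
    return uuid.UUID(int=value)


def visible_rows(top: int, rows: int) -> list[uuid.UUID]:
    """Only build the rows that fit in the viewport."""
    return [index_to_uuid(i) for i in range(top, min(top + rows, TOTAL))]


def scroll_by(top: int, delta: int, rows: int) -> int:
    """Move the virtual scroll position, clamped to the valid range."""
    return max(0, min(top + delta, TOTAL - rows))
```

A key press or wheel event then just calls `scroll_by` and re-renders `visible_rows`; the browser never has to know how tall the full list would be.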

The really fun stuff is how Nolen implemented custom ordering - because "Scrolling through a list of UUIDs should be exciting!", but "it’d be disappointing if you scrolled through every UUID and realized that you hadn’t seen one. And it’d be very hard to show someone a UUID that you found if you couldn’t scroll back to the same spot to find it."
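Those two requirements (looks shuffled, but every value appears exactly once and always in the same place) describe a reversible permutation of the row indices. One common way to build one is a small Feistel network. The sketch below is an illustration under that assumption and may not match what the write-up does; `scramble`, `unscramble`, the round count and the BLAKE2-based round function are all my own choices.

```python
import hashlib

HALF_BITS = 61                      # 122 bits split into two 61-bit halves
HALF_MASK = (1 << HALF_BITS) - 1
ROUNDS = 4                          # arbitrary choice for this sketch


def _round(value: int, round_no: int) -> int:
    """Round function: any deterministic mixer works, it need not be invertible."""
    data = value.to_bytes(8, "big") + bytes([round_no])
    digest = hashlib.blake2b(data, digest_size=8).digest()
    return int.from_bytes(digest, "big") & HALF_MASK


def scramble(index: int) -> int:
    """Map a display position to a shuffled 122-bit index (a bijection)."""
    left, right = index >> HALF_BITS, index & HALF_MASK
    for r in range(ROUNDS):
        left, right = right, left ^ _round(right, r)
    return (left << HALF_BITS) | right


def unscramble(value: int) -> int:
    """Inverse of scramble: recover the display position."""
    left, right = value >> HALF_BITS, value & HALF_MASK
    for r in reversed(range(ROUNDS)):
        left, right = right ^ _round(left, r), left
    return (left << HALF_BITS) | right
```

Because the mapping is invertible, no two positions can collide, and `unscramble` gives you the scroll position for any value you want to jump back to.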

And if that wasn't enough... full text search! How can you efficiently search (or at least pseudo-search) for text across 5.3 undecillion (2^122, roughly 5.3 × 10^36) values? The trick there turned out to be generating a bunch of valid UUIDv4s containing the requested string and then picking the one closest to the current position on the page.
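A rough Python sketch of that trick, with several simplifying assumptions of mine: the query is plain hex with no hyphens, candidates are filled in with random digits, and "closest" is measured on the raw integer value of the UUID instead of the real on-page position. The function names are hypothetical.

```python
import random
import uuid

HEX = "0123456789abcdef"
VERSION_POS = 12   # this hex digit must be "4" in a v4 UUID
VARIANT_POS = 16   # this hex digit must be 8, 9, a or b


def candidates(query, per_offset=8, rng=None):
    """Generate valid v4 UUIDs whose hex form contains `query`."""
    rng = rng or random.Random()
    query = query.lower()
    if not query or len(query) > 32 or any(c not in HEX for c in query):
        return []
    found = []
    for offset in range(32 - len(query) + 1):
        for _ in range(per_offset):
            digits = [rng.choice(HEX) for _ in range(32)]
            digits[VERSION_POS] = "4"
            digits[VARIANT_POS] = rng.choice("89ab")
            digits[offset:offset + len(query)] = query
            # Skip offsets where the query would overwrite the fixed digits
            # with something that is not a valid v4 UUID.
            if digits[VERSION_POS] != "4" or digits[VARIANT_POS] not in "89ab":
                break
            found.append(uuid.UUID(hex="".join(digits)))
    return found


def closest(query, current, per_offset=8, rng=None):
    """Pick the candidate nearest to the current UUID, or None if no match."""
    options = candidates(query, per_offset, rng)
    if not options:
        return None
    return min(options, key=lambda u: abs(u.int - current.int))
```

This never scans anything: it manufactures matches and then jumps to the nearest one, which is why it counts as pseudo-search.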