Weeknotes: Self-hosted language models with LLM plugins, a new Datasette tutorial, a dozen package releases, a dozen TILs

16th July 2023

A lot of stuff to cover from the past two and a half weeks.

LLM and self-hosted language model plugins

My biggest project was the new version of my LLM tool for interacting with Large Language Models. LLM now accepts plugins for adding alternative language models to the tool, meaning it’s now applicable to more than just the OpenAI collection.

I figured out quite a few of the details while offline on a camping trip up in the Northern California redwoods. With no connection to the OpenAI APIs, I had to work out how to use LLMs that I could host on my own computer.

Comprehensive documentation is sorely lacking in the world of generative AI. I’ve decided to push back against that for LLM, so I spent a bunch of time working on an extremely comprehensive tutorial for writing a plugin that adds a new language model to the tool.

As part of researching this tutorial I finally figured out how to build a Python package using just a pyproject.toml file, with no setup.py or setup.cfg or anything else like that. I wrote that up in detail in Python packages with pyproject.toml and nothing else, and I’ve started using that pattern for all of my new Python packages.

LLM also now includes a Python API for interacting with models, which provides an abstraction that works the same for the OpenAI models and for other models (including self-hosted models) installed via plugins. Here’s the documentation for that—it ends up looking like this:

    import llm

    model = llm.get_model("gpt-3.5-turbo")
    model.key = 'YOUR_API_KEY_HERE'
    response = model.prompt("Five surprising names for a pet pelican")
    for chunk in response:
        print(chunk, end="")

To use another model, just swap its name in for gpt-3.5-turbo. The self-hosted models provided by the llm-gpt4all plugin work the same way:

    pip install llm-gpt4all

Then:

    import llm

    model = llm.get_model("ggml-vicuna-7b-1")
    response = model.prompt("Five surprising names for a pet pelican")
    # You can do this instead of looping through the chunks:
    print(response.text())

I’ve released three plugins so far:

- llm-gpt4all with 17 self-hosted models from the GPT4All project.

- llm-palm with Google’s PaLM 2 language model, via their API.

- llm-mpt30b providing the 19GB MPT-30B model, using TheBloke/mpt-30B-GGML.

I’m looking forward to someone else following the tutorial and releasing their own plugin!

A new tutorial: Data analysis with SQLite and Python

I presented this as a 2hr45m tutorial at PyCon a few months ago. The video is now available, and I like to try to turn these kinds of things into more permanent documentation.

The Datasette website has a growing collection of tutorials, and I decided to make that the final home for this one too.

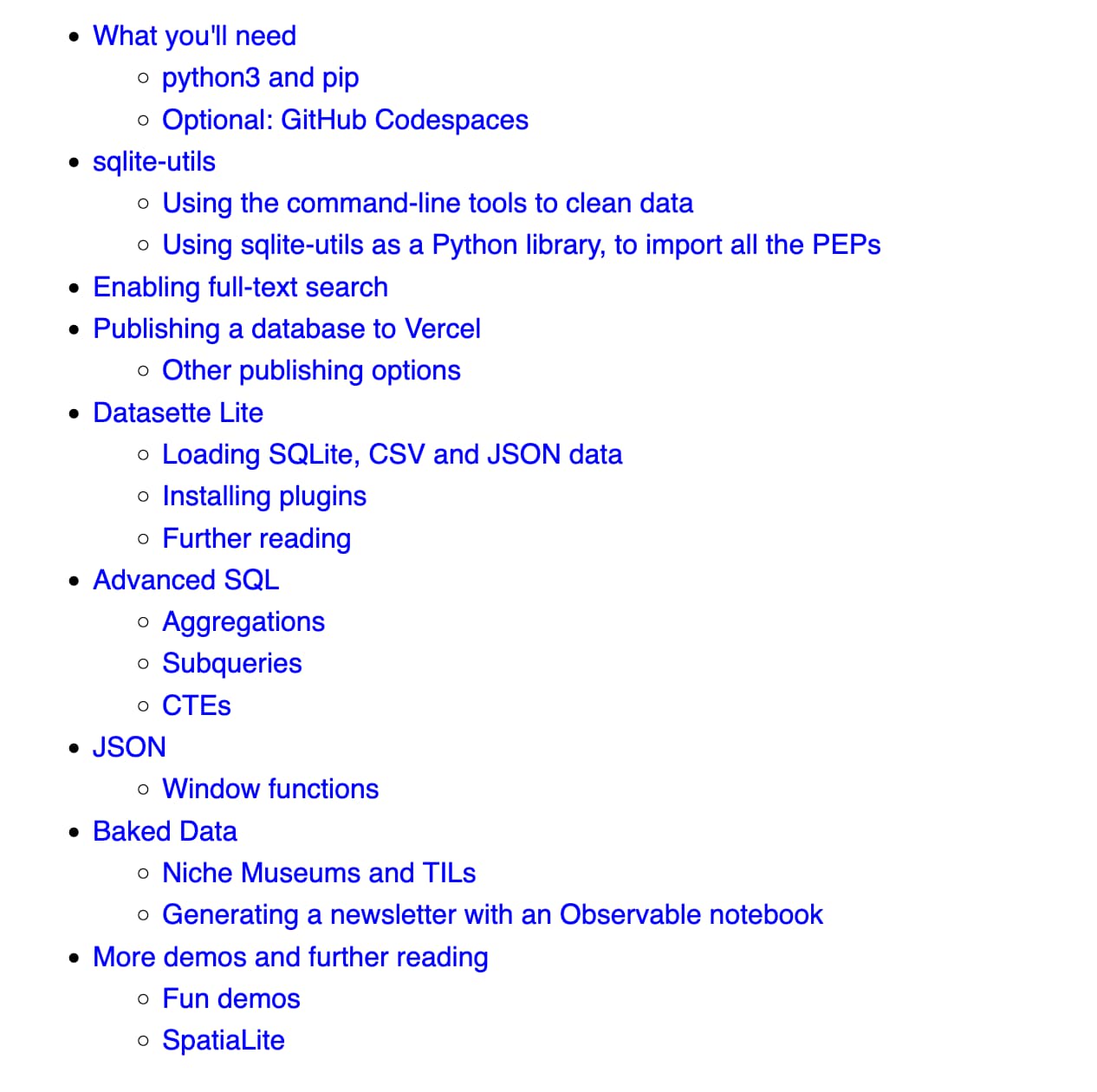

Data analysis with SQLite and Python now has the full 2hr45m video plus an improved version of the handout I used for the talk. The written material there should also be valuable for people who don’t want to spend nearly three hours watching the video!

As part of putting that page together I solved a problem I’ve been wanting to crack for a long time: how to build a custom Jinja block tag that looks like this:

    {% markdown %}
    # This will be rendered as markdown

    - Bulleted
    - List
    {% endmarkdown %}

I released that in datasette-render-markdown 2.2. I also wrote up a TIL on Custom Jinja template tags with attributes describing the pattern I used.

One bonus feature for that tutorial: I decided to drop in a nested table of contents, automatically derived from the HTML headers on the page.
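For readers curious how a nested table of contents can be derived from headers, here is a minimal sketch using only the Python standard library. It is not the code from the ChatGPT transcript: the names HeadingCollector and build_toc are hypothetical, and it assumes headings are plain h1 to h6 elements, optionally carrying an id attribute.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collect (level, id, text) for every h1-h6 element in a document."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._current = None  # [level, id, text parts] while inside a heading

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._current = [int(tag[1]), dict(attrs).get("id"), []]

    def handle_data(self, data):
        if self._current is not None:
            self._current[2].append(data)

    def handle_endtag(self, tag):
        if self._current is not None and tag == f"h{self._current[0]}":
            level, anchor, parts = self._current
            self.headings.append((level, anchor, "".join(parts).strip()))
            self._current = None


def build_toc(html):
    """Return a nested list of dicts with text, id, level and children keys."""
    collector = HeadingCollector()
    collector.feed(html)
    root = {"level": 0, "children": []}
    stack = [root]
    for level, anchor, text in collector.headings:
        # Pop back to the nearest ancestor with a shallower heading level
        while stack[-1]["level"] >= level:
            stack.pop()
        node = {"level": level, "id": anchor, "text": text, "children": []}
        stack[-1]["children"].append(node)
        stack.append(node)
    return root["children"]
```

The stack keeps track of the current chain of ancestors, so an h3 that follows an h2 becomes its child, and the next h2 pops back up a level.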

I wrote the code for this entirely using the new ChatGPT Code Interpreter, which can write Python based on your description and, crucially, execute it and see if it works.

Here’s my ChatGPT transcript showing how I built the feature.

I’ve been using ChatGPT Code Interpreter for a few months now, and I’m completely hooked: I think it’s the most interesting thing in the whole AI space at the moment.

I participated in a Code Interpreter Latent Space episode to talk about it, which ended up drawing 17,000 listeners on Twitter Spaces and is now also available as a podcast episode, neatly edited together by swyx.

Symbex --check and --rexec

Symbex is my Python CLI tool for quickly finding Python functions and classes and outputting either the full code or just the signature of the matching symbol. I first wrote about that here.

symbex 1.1 adds two new features.

    symbex --function --undocumented --check

This new --check mode is designed to run in Continuous Integration environments. If it finds any symbols matching the filters (in this case functions that are missing their docstring) it returns a non-zero exit code, which will fail the CI step.

It’s an imitation of black . --check: the idea is that Symbex can now be used to enforce code quality rules such as the presence of docstrings and type annotations.
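The general idea behind a check mode like this can be sketched with the standard library ast module. This is only an illustration of the pattern, not how Symbex is implemented: undocumented_functions and check are hypothetical names, and the sketch only looks at functions, not classes.

```python
import ast


def undocumented_functions(source):
    """Yield the name of every function in source that has no docstring."""
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if ast.get_docstring(node) is None:
                yield node.name


def check(source):
    """Return an exit code: 1 if any function matched, 0 otherwise."""
    matches = list(undocumented_functions(source))
    for name in matches:
        print(f"missing docstring: {name}")
    return 1 if matches else 0
```

A CI script could pass the return value of check() to sys.exit(), so that any match fails the step in the same way a non-zero exit from a command-line tool would.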

The other new feature is --rexec. This is an extension of the existing --replace feature, which lets you find a symbol in your code and replace its body with new code.

--rexec takes a shell expression. The body of the matching symbol will be piped into that command, and its output will be used as the replacement.

Which means you can do things like this:

    symbex my_function \
      --rexec "llm --system 'add type hints and a docstring'"

This will find def my_function() and its body, pass that through llm (using the gpt-3.5-turbo default model, but you can specify -m gpt-4 or any other model to use something else), and then take the output and update the file in-place with the new implementation.

As a demo, I ran it against this:

    def my_function(a, b):
        return a + b + 3

And got back:

    def my_function(a: int, b: int) -> int:
        """
        Returns the sum of two integers (a and b) plus 3.

        Parameters:
        a (int): The first integer.
        b (int): The second integer.

        Returns:
        int: The sum of a and b plus 3.
        """
        return a + b + 3

Obviously this is fraught with danger, and you should only run this against code that has already been committed to Git and hence can be easily recovered... but it’s a really fun trick!
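The pipe-and-replace idea behind --rexec can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not Symbex's actual code: pipe_through and replace_span are hypothetical names, the span to replace is given as plain character offsets, and the command runs through a POSIX shell.

```python
import subprocess


def pipe_through(command, text):
    """Run a shell command with text on stdin and return its stdout."""
    result = subprocess.run(
        command,
        shell=True,  # the command is a shell expression, as with --rexec
        input=text,
        capture_output=True,
        text=True,
        check=True,  # raise if the command exits with a non-zero status
    )
    return result.stdout


def replace_span(source, start, end, command):
    """Replace source[start:end] with that span piped through command."""
    replacement = pipe_through(command, source[start:end])
    return source[:start] + replacement + source[end:]
```

For example, replace_span("x = foo\n", 4, 7, "tr a-z A-Z") pipes foo through tr and splices the result back in. Using check=True means a failing command raises an exception before anything is replaced, which seems like the safer default for a tool that rewrites files.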

ttok --encode --decode

ttok is my CLI tool for counting tokens, as used by LLM models such as GPT-4. ttok 0.2 adds a requested feature to help make tokens easier to understand, best illustrated by this demo:

    ttok Hello world
    # Outputs 2 - the number of tokens
    ttok Hello world --encode
    # Outputs 9906 1917 - the encoded tokens
    ttok 9906 1917 --decode
    # Outputs Hello world - decoding the tokens back again
    ttok Hello world --encode --tokens
    # Outputs [b'Hello', b' world']

Being able to easily see the encoded tokens including whitespace (the b' world' part) is particularly useful for understanding how the tokens all fit together.

I wrote more about GPT tokenization in understanding GPT tokenizers.

TIL this week

- Using tree-sitter with Python—2023-07-14

- Auto-formatting YAML files with yamlfmt—2023-07-13

- Quickly testing code in a different Python version using pyenv—2023-07-10

- Using git-filter-repo to set commit dates to author dates—2023-07-10

- Using OpenAI functions and their Python library for data extraction—2023-07-10

- Python packages with pyproject.toml and nothing else—2023-07-08

- Syntax highlighted code examples in Datasette—2023-07-02

- Custom Jinja template tags with attributes—2023-07-02

- Local wildcard DNS on macOS with dnsmasq—2023-06-30

- A Discord bot to expand issue links to a private GitHub repository—2023-06-30

- Bulk editing status in GitHub Projects—2023-06-29

- CLI tools hidden in the Python standard library—2023-06-29

Releases this week

- symbex 1.1—2023-07-16: Find the Python code for specified symbols

- llm-mpt30b 0.1—2023-07-12: LLM plugin adding support for the MPT-30B language model

- llm-markov 0.1—2023-07-12: Plugin for LLM adding a Markov chain generating model

- llm-gpt4all 0.1—2023-07-12: Plugin for LLM adding support for the GPT4All collection of models

- llm-palm 0.1—2023-07-12: Plugin for LLM adding support for Google’s PaLM 2 model

- llm 0.5—2023-07-12: Access large language models from the command-line

- ttok 0.2—2023-07-10: Count and truncate text based on tokens

- strip-tags 0.5.1—2023-07-09: CLI tool for stripping tags from HTML

- pocket-to-sqlite 0.2.3—2023-07-09: Create a SQLite database containing data from your Pocket account

- datasette-render-markdown 2.2—2023-07-02: Datasette plugin for rendering Markdown

- asgi-proxy-lib 0.1a0—2023-07-01: An ASGI function for proxying to a backend over HTTP

- datasette-upload-csvs 0.8.3—2023-06-28: Datasette plugin for uploading CSV files and converting them to database tables
