Thursday, 26th June 2025

Two interesting new products for running code in a sandbox today.

Cloudflare launched their Containers product in open beta, and added a new Sandbox library for Cloudflare Workers that can run commands in a "secure, container-based environment":

    import { getSandbox } from "@cloudflare/sandbox";

    const sandbox = getSandbox(env.Sandbox, "my-sandbox");
    const output = sandbox.exec("ls", ["-la"]);

Vercel shipped a similar feature, introduced in Run untrusted code with Vercel Sandbox, which enables code that looks like this:

    import { Sandbox } from "@vercel/sandbox";

    const sandbox = await Sandbox.create();

    await sandbox.writeFiles([
      { path: "script.js", stream: Buffer.from(result.text) },
    ]);

    await sandbox.runCommand({
      cmd: "node",
      args: ["script.js"],
      stdout: process.stdout,
      stderr: process.stderr,
    });

In both cases a major intended use-case is safely executing code that has been created by an LLM.
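LLM responses often wrap code in a markdown fence with some chatter around it, so something usually has to pull the code out before it gets written into a sandbox. Here's a minimal sketch of that step. The `extractCode` helper and the example reply are hypothetical, made up for illustration, and are not part of either SDK:

```javascript
// Hypothetical helper (not part of either SDK): pull the first fenced code
// block out of an LLM response, falling back to the raw text if none is found.
const FENCE = "`".repeat(3); // built at runtime to avoid a literal fence here

function extractCode(llmText) {
  // Matches: fence, optional language tag, newline, code (lazy), fence.
  const pattern = new RegExp(FENCE + "[\\w-]*\\n([\\s\\S]*?)" + FENCE);
  const match = llmText.match(pattern);
  return (match ? match[1] : llmText).trim();
}

// Example response shaped like typical chat output (invented for this sketch).
const reply = [
  "Here is the script:",
  FENCE + "js",
  'console.log("hello from the sandbox");',
  FENCE,
  "Let me know if you need changes.",
].join("\n");

const script = extractCode(reply);
console.log(script); // console.log("hello from the sandbox");
```

In the Vercel example, the extracted `script` string would take the place of `result.text` in the `Buffer.from(...)` call.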

Yesterday Anthropic got a bunch of buzz out of their new window.claude.complete() API which allows Claude Artifacts to run their own API calls to execute prompts.
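As a rough sketch of what calling that API from an Artifact might look like: this assumes `complete()` takes a prompt string and returns a Promise that resolves to the completion text. The `summarize` function and the `fakeClaude` stand-in are hypothetical, there so the example runs outside of an Artifact:

```javascript
// Sketch only: assumes `claude.complete(prompt)` takes a prompt string and
// returns a Promise that resolves to the completion text.
async function summarize(claude, text) {
  const prompt = "Summarize this in one sentence:\n\n" + text;
  const reply = await claude.complete(prompt);
  return reply.trim();
}

// Stand-in object so the sketch runs outside an Artifact; inside one you
// would pass `window.claude` instead.
const fakeClaude = {
  prompts: [],
  async complete(prompt) {
    this.prompts.push(prompt); // record what was asked, for inspection
    return "  A one-sentence summary.  ";
  },
};

const summaryPromise = summarize(fakeClaude, "Pelicans are large water birds.");
summaryPromise.then((summary) => console.log(summary));
```

Passing the client in as an argument keeps the prompt-building logic testable without a live model behind it.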

It turns out Gemini had beaten them to that feature by over a month, but the announcement was tucked away in a bullet point of their release notes for the 20th of May:

Vibe coding apps in Canvas just got better too! With just a few prompts, you can now build fully functional personalised apps in Canvas that can use Gemini-powered features, save data between sessions and share data between multiple users.

Ethan Mollick has been building some neat demos on top of Gemini Canvas, including this text adventure starship bridge simulator.

Similar to Claude Artifacts, Gemini Canvas detects if the application uses APIs that require authentication (to run prompts, for example) and requests the user sign in with their Google account:

![Futuristic sci-fi interface screenshot showing "Helm Control" at top with navigation buttons for Helm, Comms, Science, Tactical, Engineering, and Operations, displaying red error message "[SYSTEM_ERROR] Connection to AI core failed: API error: 403. This may be an authentication issue." with command input field showing "Enter command..." and Send button, plus Google Account sign-in notification at bottom stating "You need to sign in with your Google Account to see some features" with Sign in button and X close icon](https://static.simonwillison.net/static/2025/gemini-auth.jpg)

Introducing Gemma 3n: The developer guide. Extremely consequential new open weights model release from Google today:

Multimodal by design: Gemma 3n natively supports image, audio, video, and text inputs and text outputs.

Optimized for on-device: Engineered with a focus on efficiency, Gemma 3n models are available in two sizes based on effective parameters: E2B and E4B. While their raw parameter count is 5B and 8B respectively, architectural innovations allow them to run with a memory footprint comparable to traditional 2B and 4B models, operating with as little as 2GB (E2B) and 3GB (E4B) of memory.

This is very exciting: 2B and 4B models optimized for end-user devices which accept text, images and audio as inputs!

Gemma 3n is also the most comprehensive day one launch I've seen for any model: Google partnered with "AMD, Axolotl, Docker, Hugging Face, llama.cpp, LMStudio, MLX, NVIDIA, Ollama, RedHat, SGLang, Unsloth, and vLLM" so there are dozens of ways to try this out right now.

So far I've run two variants on my Mac laptop. Ollama offer a 7.5GB version (full tag gemma3n:e4b-it-q4_K_M0) of the 4B model, which I ran like this:

    ollama pull gemma3n
    llm install llm-ollama
    llm -m gemma3n:latest "Generate an SVG of a pelican riding a bicycle"

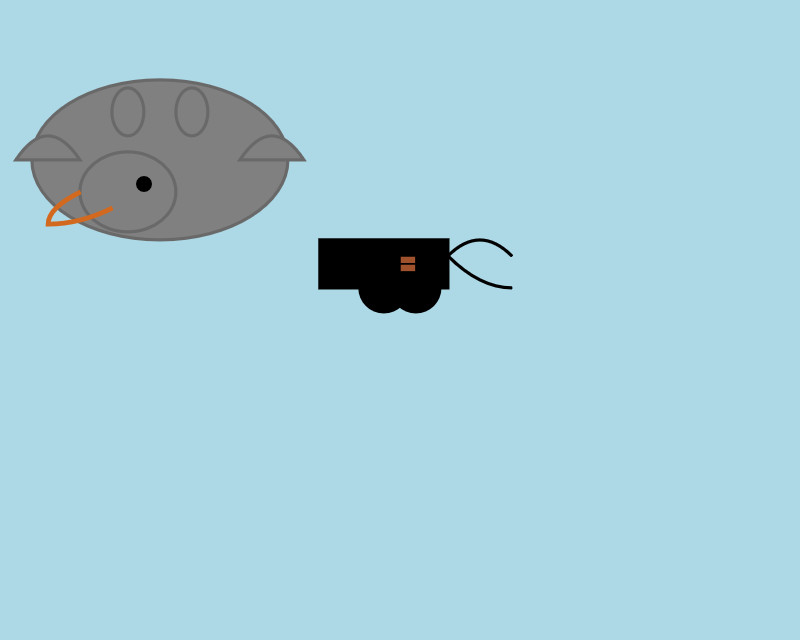

It drew me this:

The Ollama version doesn't appear to support image or audio input yet.

... but the mlx-vlm version does!

First I tried that on this WAV file like so (using a recipe adapted from Prince Canuma's video):

    uv run --with mlx-vlm mlx_vlm.generate \
      --model gg-hf-gm/gemma-3n-E4B-it \
      --max-tokens 100 \
      --temperature 0.7 \
      --prompt "Transcribe the following speech segment in English:" \
      --audio pelican-joke-request.wav

That downloaded a 15.74 GB bfloat16 version of the model and output the following correct transcription:

Tell me a joke about a pelican.

Then I had it draw me a pelican for good measure:

    uv run --with mlx-vlm mlx_vlm.generate \
      --model gg-hf-gm/gemma-3n-E4B-it \
      --max-tokens 100 \
      --temperature 0.7 \
      --prompt "Generate an SVG of a pelican riding a bicycle"

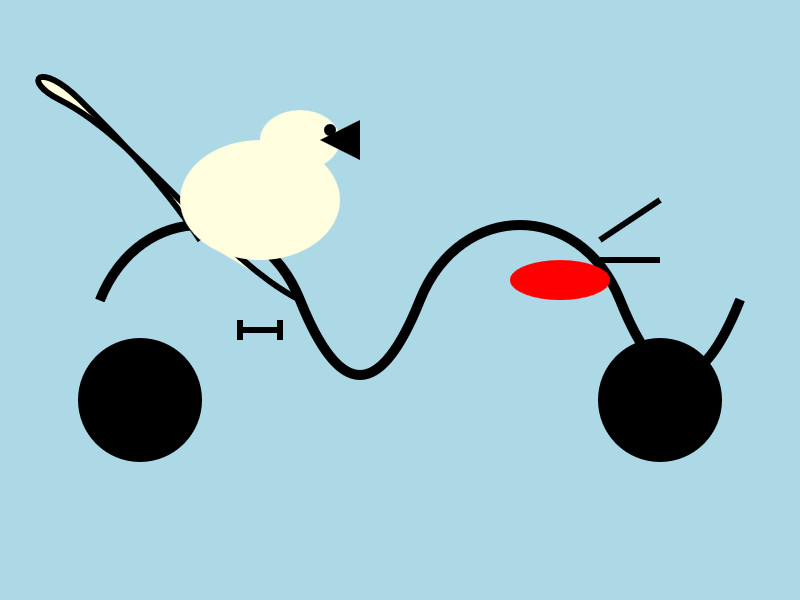

I quite like this one:

It's interesting to see such a striking visual difference between those 7.5GB and 15GB model quantizations.
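A quick back-of-envelope check on those sizes, as a sketch rather than anything authoritative. It uses the two download sizes mentioned in this post, treats a GB as 10^9 bytes (an assumption), and relies on bfloat16 storing 2 bytes per parameter:

```javascript
// Back-of-envelope only. The sizes come from the post; "2 bytes per
// parameter" is the storage width of bfloat16.
const BYTES_PER_BF16_PARAM = 2;
const bf16DownloadBytes = 15.74e9; // mlx-vlm download, assuming 1 GB = 10^9 bytes
const ollamaDownloadBytes = 7.5e9; // Ollama download, same assumption

// Parameters implied by the bfloat16 download size.
const impliedParams = bf16DownloadBytes / BYTES_PER_BF16_PARAM;
console.log((impliedParams / 1e9).toFixed(2) + "B parameters"); // 7.87B parameters

// How the Ollama download compares to the bfloat16 one.
const ratio = ollamaDownloadBytes / bf16DownloadBytes;
console.log((ratio * 100).toFixed(0) + "% of the bfloat16 size"); // 48% of the bfloat16 size
```

The implied 7.87B parameters is in line with the 8B raw parameter count Google quote for E4B, and the Ollama download comes out at a little under half the size of the bfloat16 one.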

Finally, I had it describe the image it had just created:

    uv run --with mlx-vlm mlx_vlm.generate \
      --model gg-hf-gm/gemma-3n-E4B-it \
      --max-tokens 1000 \
      --prompt "Describe image" \
      --image gemma3n-mlx-vlm.jpg

Here's what I got back - it thought this was a chemical diagram!

The image is a cartoon-style illustration depicting a molecular structure against a light blue background. The structure is composed of several differently colored and shaped elements connected by curved black lines.

Here's a breakdown of the elements:

- Large Black Circles: There are two large, solid black circles, likely representing larger molecules or components.

- Cream-Colored Oval: A prominent cream-colored oval shape is centrally located and connected to other elements. It has a smaller, darker cream-colored sphere attached to its left side.

- Red Oval: A smaller, solid red oval is positioned near one of the black circles and connected to the cream-colored oval.

- Black Lines: These lines act as bonds, connecting the various molecular components. They are curved and dynamic, suggesting movement or interaction.

- Triangular Shape: A small black triangle is attached to the smaller cream-colored sphere.

- Letter "I": The letter "I" appears twice, likely labeling specific parts of the molecule.

The overall impression is of a simplified representation of a biological molecule, possibly a protein or a complex organic compound. The use of different colors helps to distinguish the various components within the structure.