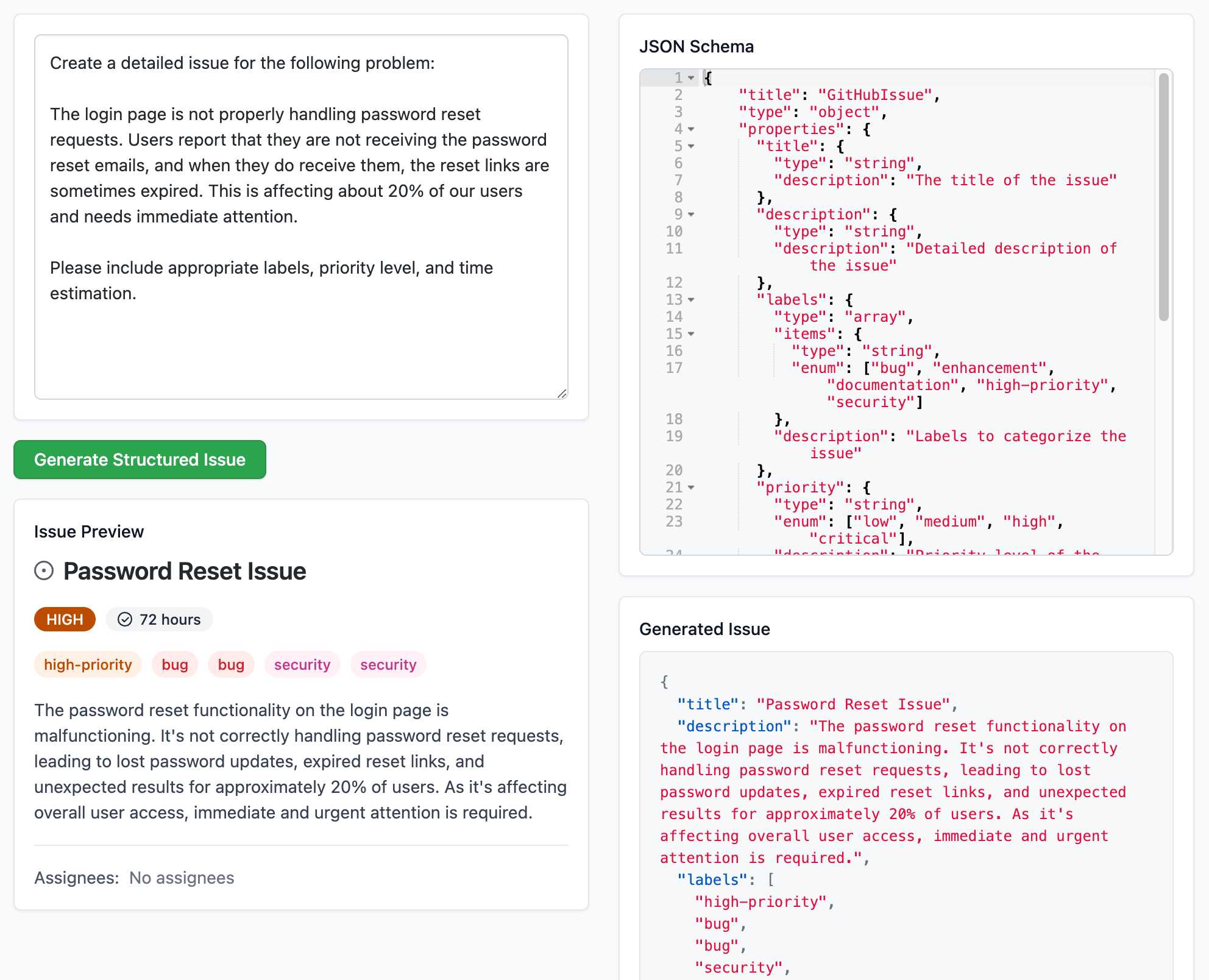

Structured Generation w/ SmolLM2 running in browser & WebGPU (via) Extraordinary demo by Vaibhav Srivastav (VB). Here's Hugging Face's SmolLM2-1.7B-Instruct running directly in a web browser (using WebGPU, so requires Chrome for the moment) demonstrating structured text extraction, converting a text description of an image into a structured GitHub issue defined using JSON schema.

The page loads 924.8MB of model data (according to this script to sum up files in window.caches) and performs everything in-browser. I did not know a model this small could produce such useful results.

Here's the source code for the demo. It's around 200 lines of code, 50 of which are the JSON schema describing the data to be extracted.

The real secret sauce here is web-llm by MLC. This library has made loading and executing prompts through LLMs in the browser shockingly easy, and recently incorporated support for MLC's XGrammar library (also available in Python) which implements both JSON schema and EBNF-based structured output guidance.

Recent articles

- Phoenix.new is Fly's entry into the prompt-driven app development space - 23rd June 2025

- Trying out the new Gemini 2.5 model family - 17th June 2025

- The lethal trifecta for AI agents: private data, untrusted content, and external communication - 16th June 2025