Friday, 20th December 2024

50% of cybersecurity is endlessly explaining that consumer VPNs don’t address any real cybersecurity issues. They are basically only useful for bypassing geofences and making money telling people they need to buy a VPN.

Man-in-the-middle attacks on Public WiFi networks haven't been a realistic threat in a decade. Almost all websites use encryption by default, and anything of value uses HSTS to prevent attackers from downgrading / disabling encryption. It's a non issue.

Building effective agents (via) My principal complaint about the term "agents" is that while it has many different potential definitions most of the people who use it seem to assume that everyone else shares and understands the definition that they have chosen to use.

This outstanding piece by Erik Schluntz and Barry Zhang at Anthropic bucks that trend from the start, providing a clear definition that they then use throughout.

They discuss "agentic systems" as a parent term, then define a distinction between "workflows" - systems where multiple LLMs are orchestrated together using pre-defined patterns - and "agents", where the LLMs "dynamically direct their own processes and tool usage". This second definition is later expanded with this delightfully clear description:

Agents begin their work with either a command from, or interactive discussion with, the human user. Once the task is clear, agents plan and operate independently, potentially returning to the human for further information or judgement. During execution, it's crucial for the agents to gain “ground truth” from the environment at each step (such as tool call results or code execution) to assess its progress. Agents can then pause for human feedback at checkpoints or when encountering blockers. The task often terminates upon completion, but it’s also common to include stopping conditions (such as a maximum number of iterations) to maintain control.

That's a definition I can live with!

They also introduce a term that I really like: the augmented LLM. This is an LLM with augmentations such as tools - I've seen people use the term "agents" just for this, which never felt right to me.

The rest of the article is the clearest practical guide to building systems that combine multiple LLM calls that I've seen anywhere.

Most of the focus is actually on workflows. They describe five different patterns for workflows in detail:

- Prompt chaining, e.g. generating a document and then translating it to a separate language as a second LLM call

- Routing, where an initial LLM call decides which model or call should be used next (sending easy tasks to Haiku and harder tasks to Sonnet, for example)

- Parallelization, where a task is broken up and run in parallel (e.g. image-to-text on multiple document pages at once) or processed by some kind of voting mechanism

- Orchestrator-workers, where a orchestrator triggers multiple LLM calls that are then synthesized together, for example running searches against multiple sources and combining the results

- Evaluator-optimizer, where one model checks the work of another in a loop

These patterns all make sense to me, and giving them clear names makes them easier to reason about.

When should you upgrade from basic prompting to workflows and then to full agents? The authors provide this sensible warning:

When building applications with LLMs, we recommend finding the simplest solution possible, and only increasing complexity when needed. This might mean not building agentic systems at all.

But assuming you do need to go beyond what can be achieved even with the aforementioned workflow patterns, their model for agents may be a useful fit:

Agents can be used for open-ended problems where it’s difficult or impossible to predict the required number of steps, and where you can’t hardcode a fixed path. The LLM will potentially operate for many turns, and you must have some level of trust in its decision-making. Agents' autonomy makes them ideal for scaling tasks in trusted environments.

The autonomous nature of agents means higher costs, and the potential for compounding errors. We recommend extensive testing in sandboxed environments, along with the appropriate guardrails

They also warn against investing in complex agent frameworks before you've exhausted your options using direct API access and simple code.

The article is accompanied by a brand new set of cookbook recipes illustrating all five of the workflow patterns. The Evaluator-Optimizer Workflow example is particularly fun, setting up a code generating prompt and an code reviewing evaluator prompt and having them loop until the evaluator is happy with the result.

December in LLMs has been a lot

I had big plans for December: for one thing, I was hoping to get to an actual RC of Datasette 1.0, in preparation for a full release in January. Instead, I’ve found myself distracted by a constant barrage of new LLM releases.

[... 901 words]Live blog: the 12th day of OpenAI—“Early evals for OpenAI o3”

It’s the final day of OpenAI’s 12 Days of OpenAI launch series, and since I built a live blogging system a couple of months ago I’ve decided to roll it out again to provide live commentary during the half hour event, which kicks off at 10am San Francisco time.

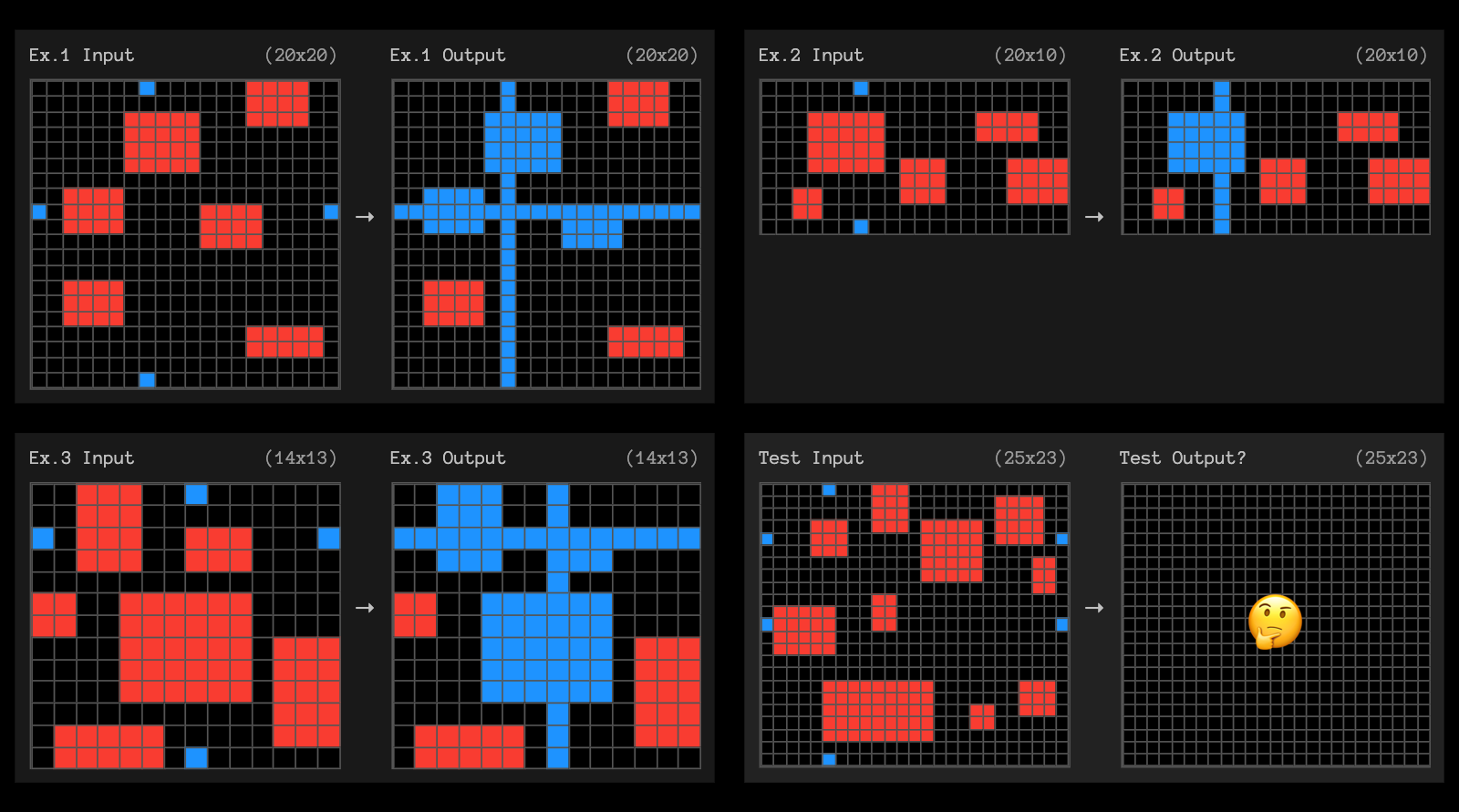

[... 76 words]OpenAI's new o3 system - trained on the ARC-AGI-1 Public Training set - has scored a breakthrough 75.7% on the Semi-Private Evaluation set at our stated public leaderboard $10k compute limit. A high-compute (172x) o3 configuration scored 87.5%.

This is a surprising and important step-function increase in AI capabilities, showing novel task adaptation ability never seen before in the GPT-family models. For context, ARC-AGI-1 took 4 years to go from 0% with GPT-3 in 2020 to 5% in 2024 with GPT-4o. All intuition about AI capabilities will need to get updated for o3.

— François Chollet, Co-founder, ARC Prize

OpenAI o3 breakthrough high score on ARC-AGI-PUB. François Chollet is the co-founder of the ARC Prize and had advanced access to today's o3 results. His article here is the most insightful coverage I've seen of o3, going beyond just the benchmark results to talk about what this all means for the field in general.

One fascinating detail: it cost $6,677 to run o3 in "high efficiency" mode against the 400 public ARC-AGI puzzles for a score of 82.8%, and an undisclosed amount of money to run the "low efficiency" mode model to score 91.5%. A note says:

o3 high-compute costs not available as pricing and feature availability is still TBD. The amount of compute was roughly 172x the low-compute configuration.

So we can get a ballpark estimate here in that 172 * $6,677 = $1,148,444!

Here's how François explains the likely mechanisms behind o3, which reminds me of how a brute-force chess computer might work.

For now, we can only speculate about the exact specifics of how o3 works. But o3's core mechanism appears to be natural language program search and execution within token space – at test time, the model searches over the space of possible Chains of Thought (CoTs) describing the steps required to solve the task, in a fashion perhaps not too dissimilar to AlphaZero-style Monte-Carlo tree search. In the case of o3, the search is presumably guided by some kind of evaluator model. To note, Demis Hassabis hinted back in a June 2023 interview that DeepMind had been researching this very idea – this line of work has been a long time coming.

So while single-generation LLMs struggle with novelty, o3 overcomes this by generating and executing its own programs, where the program itself (the CoT) becomes the artifact of knowledge recombination. Although this is not the only viable approach to test-time knowledge recombination (you could also do test-time training, or search in latent space), it represents the current state-of-the-art as per these new ARC-AGI numbers.

Effectively, o3 represents a form of deep learning-guided program search. The model does test-time search over a space of "programs" (in this case, natural language programs – the space of CoTs that describe the steps to solve the task at hand), guided by a deep learning prior (the base LLM). The reason why solving a single ARC-AGI task can end up taking up tens of millions of tokens and cost thousands of dollars is because this search process has to explore an enormous number of paths through program space – including backtracking.

I'm not sure if o3 (and o1 and similar models) even qualifies as an LLM any more - there's clearly a whole lot more going on here than just next-token prediction.

On the question of if o3 should qualify as AGI (whatever that might mean):

Passing ARC-AGI does not equate to achieving AGI, and, as a matter of fact, I don't think o3 is AGI yet. o3 still fails on some very easy tasks, indicating fundamental differences with human intelligence.

Furthermore, early data points suggest that the upcoming ARC-AGI-2 benchmark will still pose a significant challenge to o3, potentially reducing its score to under 30% even at high compute (while a smart human would still be able to score over 95% with no training).

The post finishes with examples of the puzzles that o3 didn't manage to solve, including this one which reassured me that I can still solve at least some puzzles that couldn't be handled with thousands of dollars of GPU compute!