Friday, 1st December 2023

So something everybody I think pretty much agrees on, including Sam Altman, including Yann LeCun, is LLMs aren't going to make it. The current LLMs are not a path to ASI. They're getting more and more expensive, they're getting more and more slow, and the more we use them, the more we realize their limitations.

We're also getting better at taking advantage of them, and they're super cool and helpful, but they appear to be behaving as extremely flexible, fuzzy, compressed search engines, which when you have enough data that's kind of compressed into the weights, turns out to be an amazingly powerful operation to have at your disposal.

[...] And the thing you can really see missing here is this planning piece, right? So if you try to get an LLM to solve fairly simple graph coloring problems or fairly simple stacking problems, things that require backtracking and trying things and stuff, unless it's something pretty similar in its training, they just fail terribly.

[...] So that's the theory about what something like Q* might be, or just in general, how do we get past this current constraint that we have?

Seamless Communication (via) A new “family of AI research models” from Meta AI for speech and text translation. The live demo is particularly worth trying—you can record a short webcam video of yourself speaking and get back the same video with your speech translated into another language.

The key to it is the new SeamlessM4T v2 model, which supports 101 languages for speech input, 96 Languages for text input/output and 35 languages for speech output. SeamlessM4T-Large v2 is a 9GB file, available on Hugging Face.

Also in this release: SeamlessExpressive, which “captures certain underexplored aspects of prosody such as speech rate and pauses”—effectively maintaining things like expressed enthusiasm across languages.

Plus SeamlessStreaming, “a model that can deliver speech and text translations with around two seconds of latency”.

Write shaders for the Vegas sphere (via) Alexandre Devaux built this phenomenal three.js / WebGL demo, which displays a rotating flyover of the Vegas Sphere and lets you directly edit shader code to render your own animations on it and see what they would look like. The via Hacker News thread includes dozens of examples of scripts you can paste in.

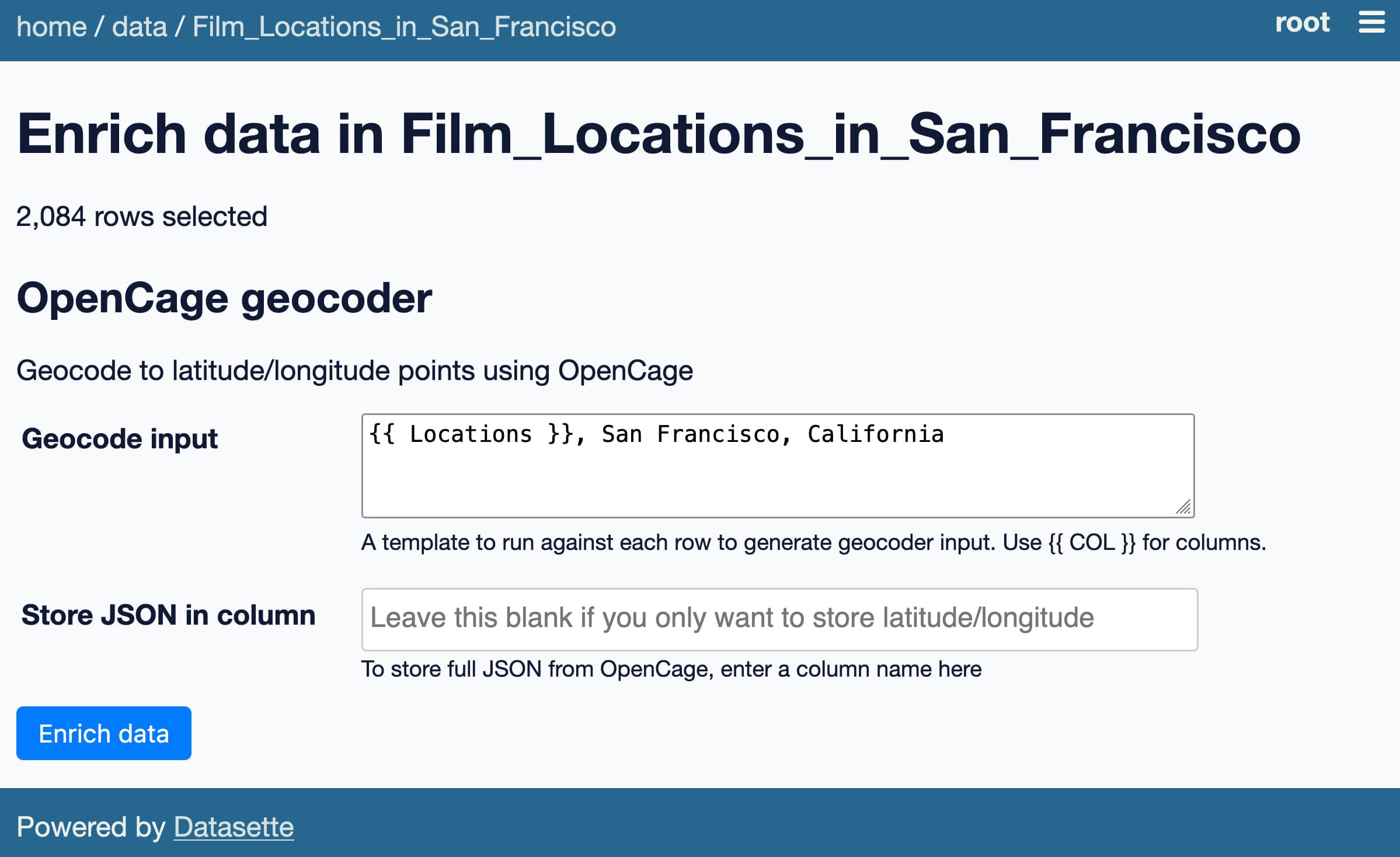

Datasette Enrichments: a new plugin framework for augmenting your data

Today I’m releasing datasette-enrichments, a new feature for Datasette which provides a framework for applying “enrichments” that can augment your data.

[... 1,202 words]