Building a searchable archive for the San Francisco Microscopical Society

25th August 2022

The San Francisco Microscopical Society was founded in 1870 by a group of scientists dedicated to advancing the field of microscopy.

Today the society is a non-profit run entirely by volunteers (they welcome new members).

This year they’ve been working with the UC Berkeley Bancroft Library to digitize their archives, which reach back to the founding of the organization.

Hep Svadja, Jenny Jacox and Ariel Waldman have taken the lead on this effort, resulting in a wealth of scanned PDF files covering 150 years of microscope history.

I’ve been helping out with the project running OCR against the archives (using Amazon Textract via my s3-ocr tool, which I built for this project) and turning them into a searchable website using Datasette.

The result is now live! Take a look at archive.sfmicrosociety.org.

Try running a search for newton to see the search feature in action.

You can also browse by folder structure or by individual document.

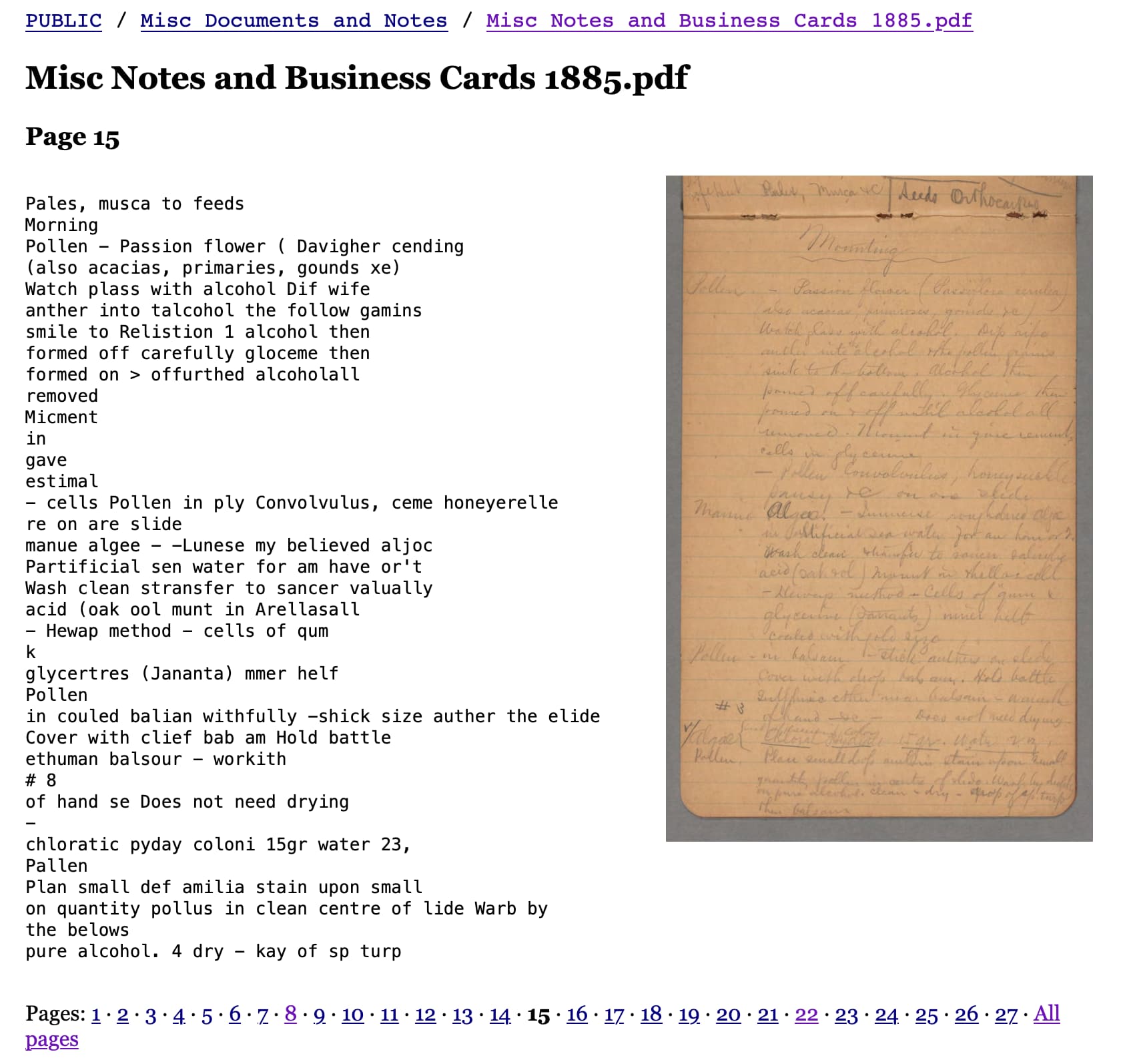

A neat thing about using AWS Textract is that it is significantly better at reading 19th century handwritten documents than I am.

Each document page is shown with the OCRd text alongside the original scan, so you can see that for yourself on this page.

How it works

The website itself is a custom Datasette instance hosted on Vercel—deployed using the datasette-publish-vercel plugin.

It’s an implementation of the Baked Data architectural pattern, where the database itself is packaged up as a SQLite and included as a binary file in the application that is deployed to Vercel.

You can directly browse and query the underlying database (a 12.4MB SQLite file) using the default Datasette interface at archive.sfmicrosociety.org/sfms.

The database schema (excluding the tables that enable full-text search) looks like this:

CREATE TABLE [documents] (

[id] TEXT PRIMARY KEY,

[title] TEXT,

[path] TEXT,

[etag] TEXT

)

CREATE TABLE [pages] (

[id] TEXT PRIMARY KEY,

[document_id] TEXT,

[page] INTEGER,

[text] TEXT,

FOREIGN KEY([document_id]) REFERENCES [documents]([id])

)As you can see, the site consists of documents and pages. Documents have a path (the directory structure), a title (the filename) and an ID. Pages belong to documents and have a text column and a page number. It’s a pretty simple schema!

The public pages on the site are all built using custom templates and views. You can browse the source code in the simonw/sfms-history GitHub repository.

In particular, the custom pages are implemented using a one-off site plugin (in the plugins/ folder) that adds extra routes to Datasette using the register_routes() plugin hook, like this:

@hookimpl def register_routes(): return [ (r"^/docs$", docs), (r"^/docs/(?P<document_id>[a-z0-9]+)$", document), (r"^/docs/(?P<document_id>[a-z0-9]+)/(?P<page>\d+)/?$", page), (r"^/folders/(?P<folder>.*)$", folder), ]

docs, document, page and folder are Python view functions that take a request and return a custom response.

Here’s the docs function for example, which powers the listing of all documents at archive.sfmicrosociety.org/docs:

async def docs(datasette, request): db = datasette.get_database("sfms") documents = [ to_document(doc) for doc in await db.execute( """ select documents.*, count(*) as num_pages from pages join documents on pages.document_id = documents.id group by documents.id order by path """ ) ] return Response.html( await datasette.render_template("docs.html", {"documents": documents}, request) )

And here’s the docs.html template it uses.

The index.html template which implements both the homepage and the search results page is worth a look too. It takes a slightly different approach, using the extra_template_vars() plugin hook to populate extra custom template variables that can be used by that page to loop through the search results.

I built that page first, before adding custom routes for the other pages—I should probably refactor that to use register_routes() instead for consistency.

OCRing the documents

The documents started out in a Google Drive. I used the Rclone tool to sync that to an S3 bucket, and then ran my s3-ocr tool against specific folders within that bucket to run them through AWS Textract.

Then I ran the s3-ocr index command to suck down the OCR results and build those into a SQLite database.

The resulting schema wasn’t quite right for this particular project. I wrote this build-db.sh script to construct a schema specifically designed for the archive, copy the data in and then enable full-text search on the resulting tables.

The SQLite database file containing the inital OCR results is called index.db. I used the following commands to attach that index.db file to the same connection as the sfms.db database and copy and re-shape the records that I wanted:

# Populate documents

sqlite-utils sfms.db --attach index2 index.db "$(cat <<EOF

insert into documents select

substr(s3_ocr_etag, 2, 8) as id,

key as title,

key as path,

replace(s3_ocr_etag, '"', '') as etag

from

index2.ocr_jobs

where

key in (

select path from index2.pages

where (

folder like 'INTAKE/%'

or folder like 'PUBLIC/%'

)

and folder not like '%PROCESSED INTAKE DOCUMENTS/%'

)

EOF

)"

# Populate pages

sqlite-utils sfms.db --attach index2 index.db "$(cat <<EOF

insert into pages select distinct

substr(s3_ocr_etag, 2, 8) || '-' || page as id,

substr(s3_ocr_etag, 2, 8) as document_id,

page,

text

from index2.pages

join index2.ocr_jobs

on index2.pages.path = index2.ocr_jobs.key

where

(

folder like 'INTAKE/%'

or folder like 'PUBLIC/%'

)

and folder not like '%PROCESSED INTAKE DOCUMENTS/%'

EOF

)"This script is run by GitHub Actions as part of the workflow that deploys the site to Vercel.

Images of pages

The site itself makes use of the OCRd text that is stored in the database—that’s how the search feature works.

But the scanned pages are visually so much more interesting than that!

I wanted to display those page images both as thumbnails and larger images, both to add visual appeal and because OCRd text loses a lot of the richness of the underlying records.

Since I already had the PDFs in an S3 bucket, the easiest way to build this was using imgix.

imgix offers a proxy service for running URL-defined transforms against images. I’ve used it on a bunch of projcets before: it’s really powerful.

In this case, given an imgix source configured against an S3 bucket an image for a specific page of a PDF can be constructed like this (newlines added for readability):

https://sfms-history.imgix.net/

PUBLIC/Scientific%20Documents/

MicroscopicGymnastics_J.EdwardsSmith-04-06-1876.pdf

?page=1

&w=200

&auto=format

&s=634c00249fbe4a2eda90f00be0ae66d6

Here we’re providing the path to the PDF in the S3 bucket, requesting a render of page 1 of that PDF, at width 200px and using auto=format which caues imgix to serve the image in an optimized format based on the capabilities of the user’s device.

The &s= parameter is a signature which can be configured to prevent people from constructing their own arbitrary URLs. In this case the S3 bucket has some files in that are not part of the public archive, so using a signature prevents people from guessing filenames and sniffing around in the bucket contents.

s3-ocr elsewhere

I built s3-ocr for this specific project, but it’s already starting to see use for other projects. My favourite example so far is the work Philip James has been doing to deploy search engines against OCRd meeting minutes for the cities of Alameda and Oakland (and soon more)—he wrote about that in detail is Digitizing 55,000 pages of civic meetings.

If you have an interesting archive of PDFs that need to be OCRd I would love to hear from you! Contact me on Twitter or email me at swillison at Google’s webmail provider.

Also this week

I got very distracted by Analyzing ScotRail audio announcements with Datasette—which did at least produce a new, detailed tutorial describing my process for approaching new projects with Datasette.

I added Plugin support to Datasete Lite.

Releases this week

-

datasette-multiline-links: 0.1—2022-08-24

Make multiple newline separated URLs clickable in Datasette -

datasette-publish-vercel: 0.14.2—(23 releases total)—2022-08-24

Datasette plugin for publishing data using Vercel -

datasette-edit-schema: 0.5.1—(10 releases total)—2022-08-22

Datasette plugin for modifying table schemas -

pocket-to-sqlite: 0.2.2—(4 releases total)—2022-08-22

Create a SQLite database containing data from your Pocket account -

datasette-mp3-audio: 0.2—(2 releases total)—2022-08-20

Turn .mp3 URLs into an audio player in the Datasette interface -

sqlite-diffable: 0.5—(6 releases total)—2022-08-18

Tools for dumping/loading a SQLite database to diffable directory structure -

datasette-sentry: 0.2—(5 releases total)—2022-08-14

Datasette plugin for configuring Sentry -

datasette: 0.62—(113 releases total)—2022-08-14

An open source multi-tool for exploring and publishing data -

s3-credentials: 0.13—(14 releases total)—2022-08-12

A tool for creating credentials for accessing S3 buckets

TIL this week

More recent articles

- Adding TILs, releases, museums, tools and research to my blog - 20th February 2026

- Two new Showboat tools: Chartroom and datasette-showboat - 17th February 2026

- Deep Blue - 15th February 2026