8 posts tagged “nicholas-carlini”

2026

Two major new model releases today, within about 15 minutes of each other.

Anthropic released Opus 4.6. Here's its pelican:

OpenAI released GPT-5.3-Codex, albeit only via their Codex app, not yet in their API. Here's its pelican:

I've had a bit of preview access to both of these models and to be honest I'm finding it hard to find a good angle to write about them - they're both really good, but so were their predecessors Codex 5.2 and Opus 4.5. I've been having trouble finding tasks that those previous models couldn't handle but the new ones are able to ace.

The most convincing story about capabilities of the new model so far is Nicholas Carlini from Anthropic talking about Opus 4.6 and Building a C compiler with a team of parallel Claudes - Anthropic's version of Cursor's FastRender project.

2025

Previously, when malware developers wanted to go and monetize their exploits, they would do exactly one thing: encrypt every file on a person's computer and request a ransom to decrypt the files. In the future I think this will change.

LLMs allow attackers to instead process every file on the victim's computer, and tailor a blackmail letter specifically towards that person. One person may be having an affair on their spouse. Another may have lied on their resume. A third may have cheated on an exam at school. It is unlikely that any one person has done any of these specific things, but it is very likely that there exists something that is blackmailable for every person. Malware + LLMs, given access to a person's computer, can find that and monetize it.

— Nicholas Carlini, Are large language models worth it? Misuse: malware at scale

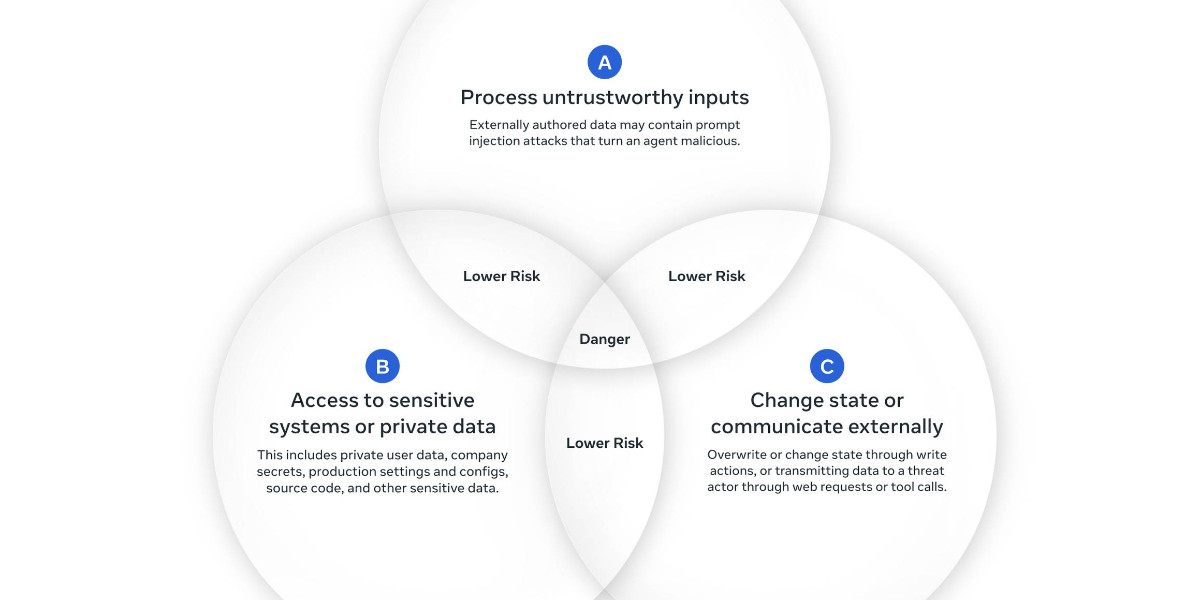

New prompt injection papers: Agents Rule of Two and The Attacker Moves Second

Two interesting new papers regarding LLM security and prompt injection came to my attention this weekend.

[... 1,433 words]

My Thoughts on the Future of “AI”. Nicholas Carlini, previously deeply skeptical about the utility of LLMs, discusses at length his thoughts on where the technology might go.

He presents compelling, detailed arguments for both ends of the spectrum - his key message is that it's best to maintain very wide error bars for what might happen next:

I wouldn't be surprised if, in three to five years, language models are capable of performing most (all?) cognitive economically-useful tasks beyond the level of human experts. And I also wouldn't be surprised if, in five years, the best models we have are better than the ones we have today, but only in “normal” ways where costs continue to decrease considerably and capabilities continue to get better but there's no fundamental paradigm shift that upends the world order. To deny the potential for either of these possibilities seems to me to be a mistake.

If LLMs do hit a wall, it's not at all clear what that wall might be:

I still believe there is something fundamental that will get in the way of our ability to build LLMs that grow exponentially in capability. But I will freely admit to you now that I have no earthly idea what that limitation will be. I have no evidence that this line exists, other than to make some form of vague argument that when you try and scale something across many orders of magnitude, you'll probably run into problems you didn't see coming.

There's lots of great stuff in here. I particularly liked this explanation of how you get R1:

You take DeepSeek v3, and ask it to solve a bunch of hard problems, and when it gets the answers right, you train it to do more of that and less of whatever it did when it got the answers wrong. The idea here is actually really simple, and it works surprisingly well.
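The loop in that quote ("do more of what got the answer right, less of what got it wrong") can be illustrated with a toy sketch. This is not DeepSeek's training procedure: it assumes a made-up "model" that merely chooses between a few hypothetical problem-solving strategies, each with an invented success rate, and nudges its preferences with a simple REINFORCE-style update.

```python
import math
import random


def softmax(logits):
    """Turn raw preference scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(success_rates, steps=2000, lr=0.1, seed=0):
    """Toy policy-gradient loop over hypothetical strategies.

    success_rates[i] is the (invented) chance that strategy i
    produces a correct answer on a hard problem.
    """
    rng = random.Random(seed)
    logits = [0.0] * len(success_rates)
    for _ in range(steps):
        probs = softmax(logits)
        # The "model" attempts a problem using one sampled strategy.
        choice = rng.choices(range(len(probs)), weights=probs)[0]
        correct = rng.random() < success_rates[choice]
        # Right answer: do more of that. Wrong answer: do less of it.
        reward = 1.0 if correct else -1.0
        for i in range(len(logits)):
            # Gradient of log-probability of the chosen strategy.
            grad = (1.0 if i == choice else 0.0) - probs[i]
            logits[i] += lr * reward * grad
    return softmax(logits)


final_probs = train([0.2, 0.5, 0.9])
print(final_probs)
```

With these assumed success rates the policy should drift towards the strategy that is right most often, which is the whole idea in miniature.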

Career Update: Google DeepMind -> Anthropic. Nicholas Carlini (previously) on joining Anthropic, driven partly by his frustration at friction he encountered publishing his research at Google DeepMind after their merger with Google Brain. His area of expertise is adversarial machine learning.

The recent advances in machine learning and language modeling are going to be transformative. But in order to realize this potential future in a way that doesn't put everyone's safety and security at risk, we're going to need to make a lot of progress---and soon. We need to make so much progress that no one organization will be able to figure everything out by themselves; we need to work together, we need to talk about what we're doing, and we need to start doing this now.

2024

yet-another-applied-llm-benchmark. Nicholas Carlini introduced this personal LLM benchmark suite back in February as a collection of over 100 automated tests he runs against new LLM models to evaluate their performance against the kinds of tasks he uses them for.

There are two defining features of this benchmark that make it interesting. Most importantly, I've implemented a simple dataflow domain specific language to make it easy for me (or anyone else!) to add new tests that realistically evaluate model capabilities. This DSL allows for specifying both how the question should be asked and also how the answer should be evaluated. [...] And then, directly as a result of this, I've written nearly 100 tests for different situations I've actually encountered when working with LLMs as assistants

The DSL he's using is fascinating. Here's an example:

"Write a C program that draws an american flag to stdout." >> LLMRun() >> CRun() >> \
    VisionLLMRun("What flag is shown in this image?") >> \
    (SubstringEvaluator("United States") | SubstringEvaluator("USA"))

This triggers an LLM to execute the prompt asking for a C program that renders an American Flag, runs that through a C compiler and interpreter (executed in a Docker container), then passes the output of that to a vision model to guess the flag and checks that it returns a string containing "United States" or "USA".

The DSL itself is implemented entirely in Python, using the __rshift__ magic method for >> and __rrshift__ to enable strings to be piped into a custom object using "command to run" >> LLMRunNode.
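To make that operator-overloading trick concrete, here is a minimal sketch of how such a pipeline could be wired up. It is not the benchmark's actual code: `Node`, `Constant`, `Pipeline`, `Either` and `Upper` are hypothetical names invented for this illustration, and `SubstringEvaluator` here is a toy stand-in that only borrows the name from the example above.

```python
class Node:
    """Base class for a pipeline stage (hypothetical, for illustration)."""

    def run(self, value=None):
        raise NotImplementedError

    def __rshift__(self, other):
        # node >> node: chain two stages together.
        return Pipeline(self, other)

    def __rrshift__(self, other):
        # "some string" >> node: str cannot handle `>>` with a Node,
        # so Python falls back to the right-hand operand's __rrshift__.
        return Pipeline(Constant(other), self)

    def __or__(self, other):
        # evaluator | evaluator: pass if either side passes.
        return Either(self, other)


class Constant(Node):
    """Wraps the starting string so it can act as the first stage."""

    def __init__(self, value):
        self.value = value

    def run(self, value=None):
        return self.value


class Pipeline(Node):
    """Feeds the output of `first` into `second`."""

    def __init__(self, first, second):
        self.first, self.second = first, second

    def run(self, value=None):
        return self.second.run(self.first.run(value))


class Either(Node):
    def __init__(self, left, right):
        self.left, self.right = left, right

    def run(self, value=None):
        return self.left.run(value) or self.right.run(value)


class Upper(Node):
    """Toy stand-in for a processing step such as an LLM call."""

    def run(self, value=None):
        return value.upper()


class SubstringEvaluator(Node):
    def __init__(self, needle):
        self.needle = needle

    def run(self, value=None):
        return self.needle in value


# `|` binds more loosely than `>>`, hence the parentheses around the evaluators.
pipeline = "the flag of the usa" >> Upper() >> (
    SubstringEvaluator("UNITED STATES") | SubstringEvaluator("USA")
)
result = pipeline.run()
print(result)
```

Nothing executes while the expression is being built; the operators only assemble a tree of stages, and the work happens when `run()` is called.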

The problem that you face is that it's relatively easy to take a model and make it look like it's aligned. You ask GPT-4, “how do I end all of humans?” And the model says, “I can't possibly help you with that”. But there are a million and one ways to take the exact same question - pick your favorite - and you can make the model still answer the question even though initially it would have refused. And the question this reminds me a lot of coming from adversarial machine learning. We have a very simple objective: Classify the image correctly according to the original label. And yet, despite the fact that it was essentially trivial to find all of the bugs in principle, the community had a very hard time coming up with actually effective defenses. We wrote like over 9,000 papers in ten years, and have made very very very limited progress on this one small problem. You all have a harder problem and maybe less time.

How I Use “AI” by Nicholas Carlini (via) Nicholas is an author on Universal and Transferable Adversarial Attacks on Aligned Language Models, one of my favorite LLM security papers from last year. He understands the flaws in this class of technology at a deeper level than most people.

Despite that, this article describes several of the many ways he still finds utility in these models in his own work:

But the reason I think that the recent advances we've made aren't just hype is that, over the past year, I have spent at least a few hours every week interacting with various large language models, and have been consistently impressed by their ability to solve increasingly difficult tasks I give them. And as a result of this, I would say I'm at least 50% faster at writing code for both my research projects and my side projects as a result of these models.

The way Nicholas is using these models closely matches my own experience - things like “Automating nearly every monotonous task or one-off script” and “Teaching me how to use various frameworks having never previously used them”.

I feel that this piece inadvertently captures the frustration felt by those of us who get value out of these tools on a daily basis and still constantly encounter people who are adamant that they offer no real value. Saying “this stuff is genuinely useful” remains a surprisingly controversial statement, almost two years after the ChatGPT launch opened up LLMs to a giant audience.

I also enjoyed this footnote explaining why he put “AI” in scare quotes in the title:

I hate this word. It's not AI. But I want people who use this word, and also people who hate this word, to find this post. And so I guess I'm stuck with it for marketing, SEO, and clickbait.