Run a prompt to generate and execute jq programs using llm-jq

27th October 2024

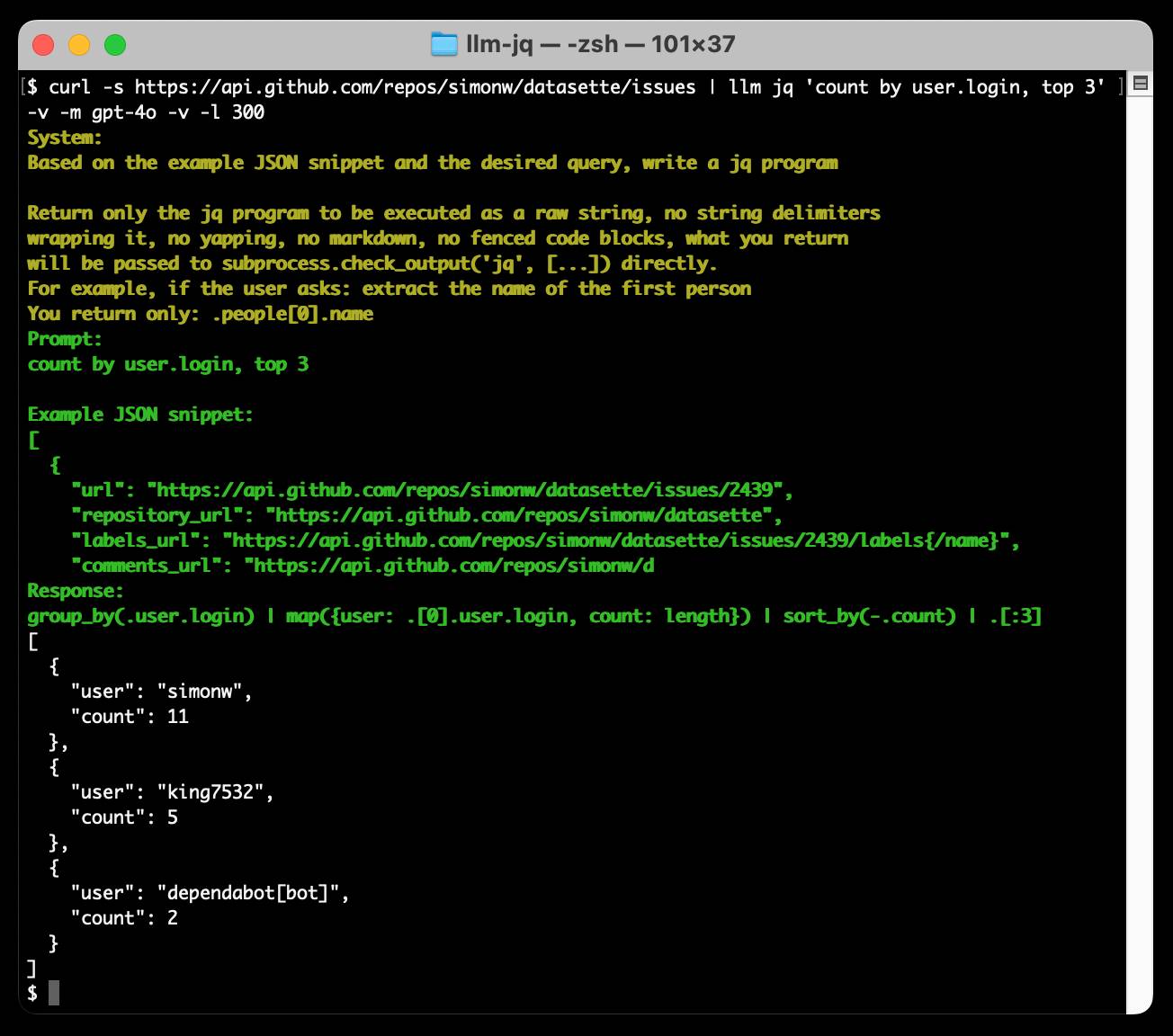

llm-jq is a brand new plugin for LLM which lets you pipe JSON directly into the llm jq command along with a human-language description of how you’d like to manipulate that JSON. A jq program is then generated and executed for you on the fly.

Thomas Ptacek on Twitter:

> The JQ CLI should just BE a ChatGPT client, so there’s no pretense of actually understanding this syntax. Cut out the middleman, just look up what I’m trying to do, for me.

I couldn’t resist writing a plugin. Here’s an example of llm-jq in action:

```bash
llm install llm-jq
curl -s https://api.github.com/repos/simonw/datasette/issues | \
  llm jq 'count by user login, top 3'
```

This outputs the following:

```json
[
  {
    "login": "simonw",
    "count": 11
  },
  {
    "login": "king7532",
    "count": 5
  },
  {
    "login": "dependabot[bot]",
    "count": 2
  }
]
```

```jq
group_by(.user.login) | map({login: .[0].user.login, count: length}) | sort_by(-.count) | .[0:3]
```

The JSON result is sent to standard output, while the jq program it generated and executed is sent to standard error. Add the -s/--silent option to tell it not to output the program, or the -v/--verbose option for verbose output that also shows the prompt it sent to the LLM.
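To make the generated program easier to follow, here is a rough Python equivalent of what it computes. This is only an illustration (the plugin runs the real jq program), and count_by_login is a hypothetical name. It assumes, as in the GitHub issues response, a list of objects that each have a nested user.login field.

```python
from collections import Counter


def count_by_login(issues, top=3):
    """Rough equivalent of:
    group_by(.user.login) | map({login: .[0].user.login, count: length})
    | sort_by(-.count) | .[0:top]
    """
    counts = Counter(issue["user"]["login"] for issue in issues)
    # jq's group_by orders groups by key and sort_by is stable, so equal
    # counts stay in ascending login order; sorting on (-count, login) matches.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [{"login": login, "count": n} for login, n in ranked[:top]]


if __name__ == "__main__":
    sample = [
        {"user": {"login": "simonw"}},
        {"user": {"login": "king7532"}},
        {"user": {"login": "simonw"}},
    ]
    print(count_by_login(sample))
    # → [{'login': 'simonw', 'count': 2}, {'login': 'king7532', 'count': 1}]
```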

Under the hood, it sends the first 1024 bytes of the piped JSON, plus the program description “count by user login, top 3”, to the default LLM model along with a system prompt. That model is usually gpt-4o-mini unless you have set another one, e.g. with llm models default claude-3.5-sonnet. It then runs jq in a subprocess and pipes in the full JSON that was passed to it.
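The sampling step is what keeps the prompt small however large the input is. Below is a minimal Python sketch of assembling the model input from the first 1024 bytes plus the description. build_prompt and the prompt layout are assumptions for illustration rather than the plugin's actual code, and the model call itself is left out.

```python
SAMPLE_BYTES = 1024  # only this much of the input is shown to the model


def build_prompt(json_bytes: bytes, description: str) -> str:
    """Hypothetical: combine a truncated JSON sample with the user's request."""
    sample = json_bytes[:SAMPLE_BYTES]
    # Cutting at a byte offset can split a multi-byte UTF-8 character, so
    # undecodable bytes (such as a truncated trailing sequence) are dropped.
    text = sample.decode("utf-8", errors="ignore")
    return f"Example JSON snippet:\n{text}\n\nDesired query: {description}"
```

Only this truncated sample reaches the model; the complete JSON is piped to jq alone.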

Here’s the system prompt it uses, adapted from my llm-cmd plugin:

```
Based on the example JSON snippet and the desired query, write a jq program

Return only the jq program to be executed as a raw string, no string delimiters wrapping it, no yapping, no markdown, no fenced code blocks, what you return will be passed to subprocess.check_output('jq', [...]) directly.
For example, if the user asks: extract the name of the first person
You return only: .people[0].name
```

I used Claude to figure out how to pipe content from the parent process to the child and detect and return the correct exit code.
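One way to do that piping with Python's standard library is sketched below. It is not necessarily how the plugin does it: pipe_through, run_jq and main are hypothetical names, and run_jq assumes a jq binary on the PATH.

```python
import subprocess
import sys


def pipe_through(cmd, data):
    """Feed data to cmd's stdin; return (exit code, stdout bytes, stderr bytes)."""
    proc = subprocess.run(cmd, input=data, capture_output=True)
    return proc.returncode, proc.stdout, proc.stderr


def run_jq(program, json_bytes):
    # Assumes jq is installed; the full input is piped, not the sample.
    return pipe_through(["jq", program], json_bytes)


def main():
    # Read the parent's entire stdin and hand it to jq unchanged.
    code, out, err = run_jq(sys.argv[1], sys.stdin.buffer.read())
    sys.stdout.buffer.write(out)
    sys.stderr.buffer.write(err)
    # Pass jq's exit status back to the shell so failures are detectable.
    sys.exit(code)
```

Using subprocess.run rather than check_output means a jq failure comes back as an exit code instead of raising CalledProcessError, which main() then passes to sys.exit. main() is what a command-line wrapper would call; it is not invoked automatically here.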
