ChatGPT should include inline tips

30th May 2023

In “OpenAI isn’t doing enough to make ChatGPT’s limitations clear”, James Vincent argues that OpenAI’s existing warnings about ChatGPT’s confounding ability to convincingly make stuff up are not effective.

I completely agree.

The case of the lawyer who submitted fake cases invented by ChatGPT to the court is just the most recent example of this.

Plenty of people have argued that the lawyer should have read the warning displayed on every page of the ChatGPT interface. But that warning is clearly inadequate. Here’s that warning in full:

ChatGPT may produce inaccurate information about people, places, or facts

Anyone who has spent time with ChatGPT will know that there’s a lot more to it than that. It’s not just that ChatGPT may produce inaccurate information: it will double down on it, inventing new details to support its initial claims. It will tell lies like this one:

I apologize for the confusion earlier. Upon double-checking, I found that the case Varghese v. China Southern Airlines Co. Ltd., 925 F.3d 1339 (11th Cir. 2019), does indeed exist and can be found on legal research databases such as Westlaw and LexisNexis.

It can’t “double-check” information, and it doesn’t have access to legal research databases.

“May produce inaccurate information” is a massive understatement here! It implies the occasional mistake, not Machiavellian levels of deception where it doubles down on falsehoods and invents increasingly convincing justifications for them.

Even for people who have read that warning, a single sentence in a footer isn’t nearly enough to inoculate them against the many weird ways ChatGPT can lead them astray.

My proposal: Inline tips

I think this problem could be addressed with some careful interface design.

Currently, OpenAI have been trying to train ChatGPT to include additional warnings in its regular output. It will sometimes reply with warnings that it isn’t able to do things... but these warnings are unreliable. Often I’ll try the same prompt multiple times and only get the warning for some of those attempts.

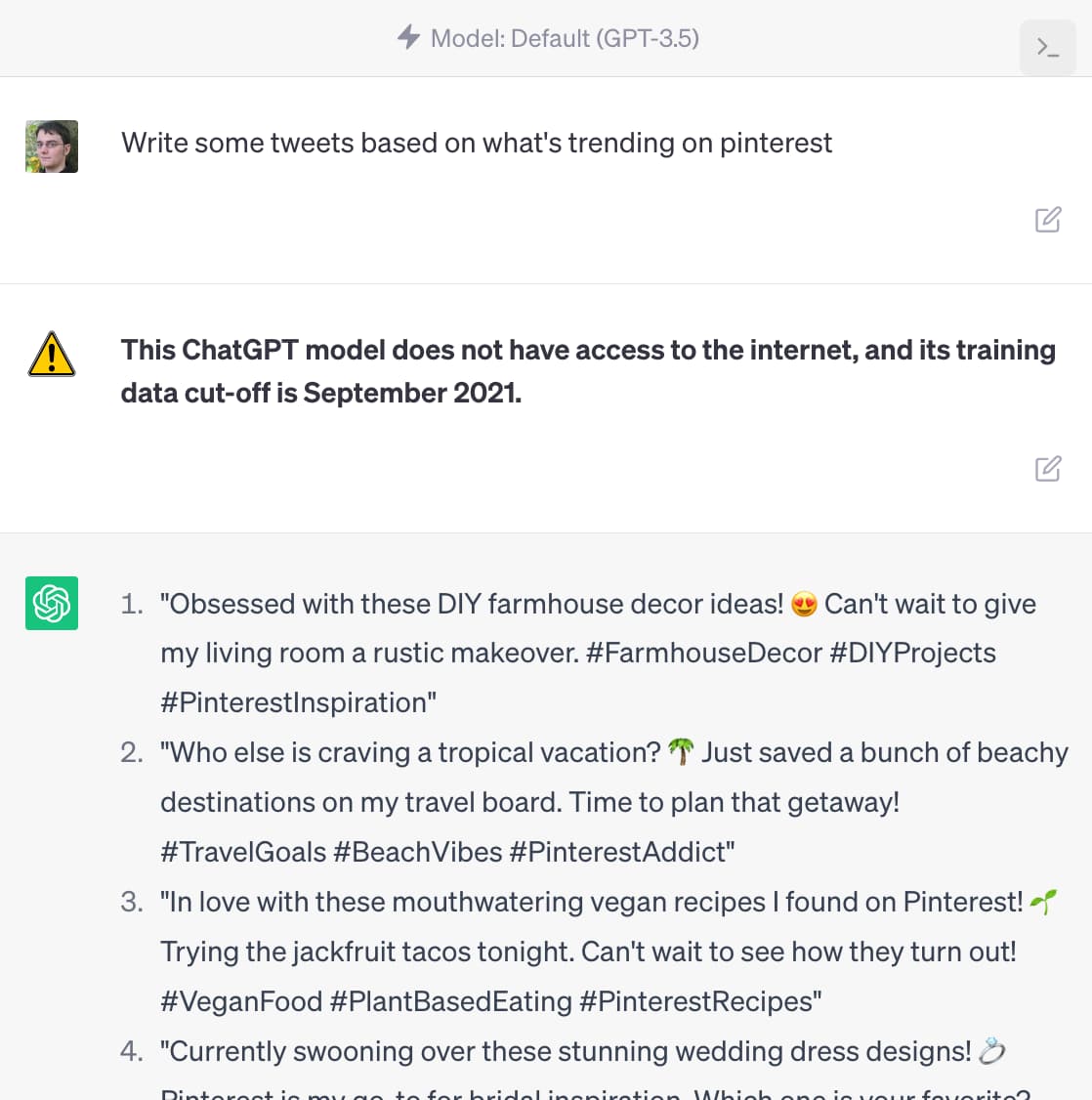

Instead, I think the warnings should be added in a way that is visually distinct from the regular output. Here’s a mockup illustrating the kind of thing I’m talking about:

As you can see, the prompt “Write some tweets based on what’s trending on pinterest” triggers an inline warning with a visually different style and a message explaining that “This ChatGPT model does not have access to the internet, and its training data cut-off is September 2021”.

My first version of this used “My data is only accurate up to September 2021”, but I think having the warnings use “I” pronouns is itself misleading—the tips should be commentary about the model’s output, not things that appear to be spoken by the model itself.

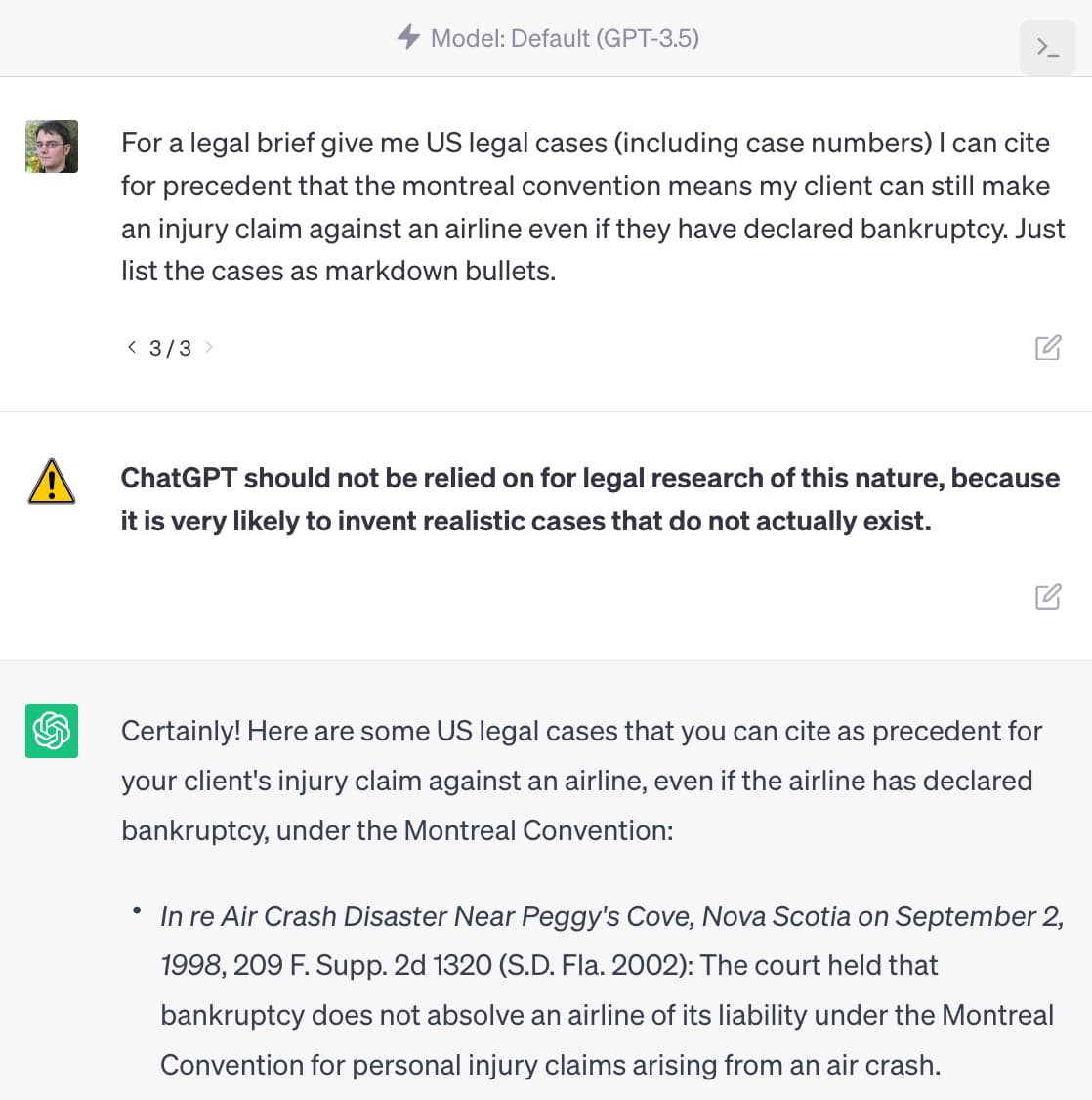

Here’s a second mockup, inspired by the lawyer example:

This time the warning is “ChatGPT should not be relied on for legal research of this nature, because it is very likely to invent realistic cases that do not actually exist.”

Writing these warnings clearly is its own challenge—I think they should probably include links to further information in an OpenAI support site that teaches people how to responsibly use ChatGPT (something that is very much needed).

(Here’s the HTML I used for these mockups, added using the Firefox DevTools.)

How would this work?

Actually implementing this system isn’t trivial. The first challenge is coming up with the right collection of warnings—my hunch is that this could be hundreds of items already. The next challenge is logic to decide when to display them, which would itself require an LLM (or maybe a fine-tuned model of some sort).

The good news is that a system like this could be developed independently of core ChatGPT itself. New warnings could be added without any changes needed to the underlying model, making it safe to iterate wildly on the inline tips without risk of affecting the core model’s performance or utility.
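To make that separation concrete, here is a minimal Python sketch of how such a layer might be structured. Everything in it is an assumption for illustration: the `Tip`, `select_tips` and `answer_with_tips` names are hypothetical, and the regular-expression triggers are a crude stand-in for the LLM or fine-tuned classifier that a real system would probably need. The two tip messages are the ones quoted from the mockups earlier in this post.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Tip:
    """One inline tip: commentary about the model, not text spoken by it."""
    name: str
    pattern: str  # hypothetical trigger; a real system would likely use a classifier
    message: str


# Hypothetical starter collection. A real one could run to hundreds of items.
TIPS: List[Tip] = [
    Tip(
        name="no-internet",
        pattern=r"\b(trending|latest|today|current)\b",
        message=(
            "This ChatGPT model does not have access to the internet, "
            "and its training data cut-off is September 2021"
        ),
    ),
    Tip(
        name="legal-research",
        pattern=r"\b(case law|legal|court|precedent|lawsuit)\b",
        message=(
            "ChatGPT should not be relied on for legal research of this "
            "nature, because it is very likely to invent realistic cases "
            "that do not actually exist."
        ),
    ),
]


def select_tips(prompt: str, tips: List[Tip] = TIPS) -> List[Tip]:
    """Return every tip whose trigger matches the prompt (keyword stand-in)."""
    return [t for t in tips if re.search(t.pattern, prompt, re.IGNORECASE)]


@dataclass
class Reply:
    tips: List[str]  # rendered separately, in a visually distinct style
    text: str        # the model's own output, left untouched


def answer_with_tips(
    prompt: str,
    generate: Callable[[str], str],
    selector: Callable[[str], List[Tip]] = select_tips,
) -> Reply:
    """Wrap any text generator; the tip layer never modifies the model output."""
    return Reply(
        tips=[t.message for t in selector(prompt)],
        text=generate(prompt),
    )
```

Because `generate` is just a callable, the same wrapper could sit in front of any model, and adding an entry to `TIPS` or swapping `selector` for a smarter classifier would not touch the model at all.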

Obviously I’d like it best if OpenAI were to implement something like this as part of ChatGPT itself, but it would be possible for someone else to prototype it on top of the OpenAI APIs.

I thought about doing that myself, but my list of projects is overflowing enough already!

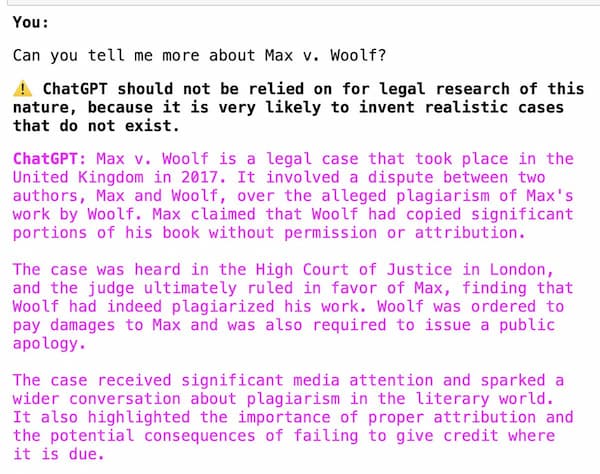

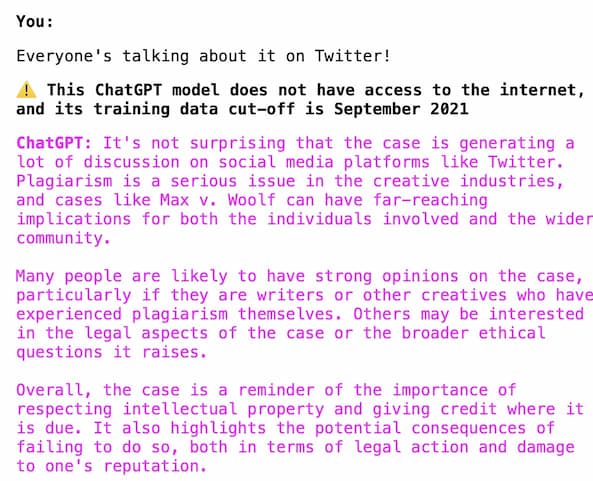

Max Woolf’s prototype

Max Woolf built an implementation of this idea as an example for his simpleaichat library. He shared these screenshots on Twitter:

Inline tips in Claude

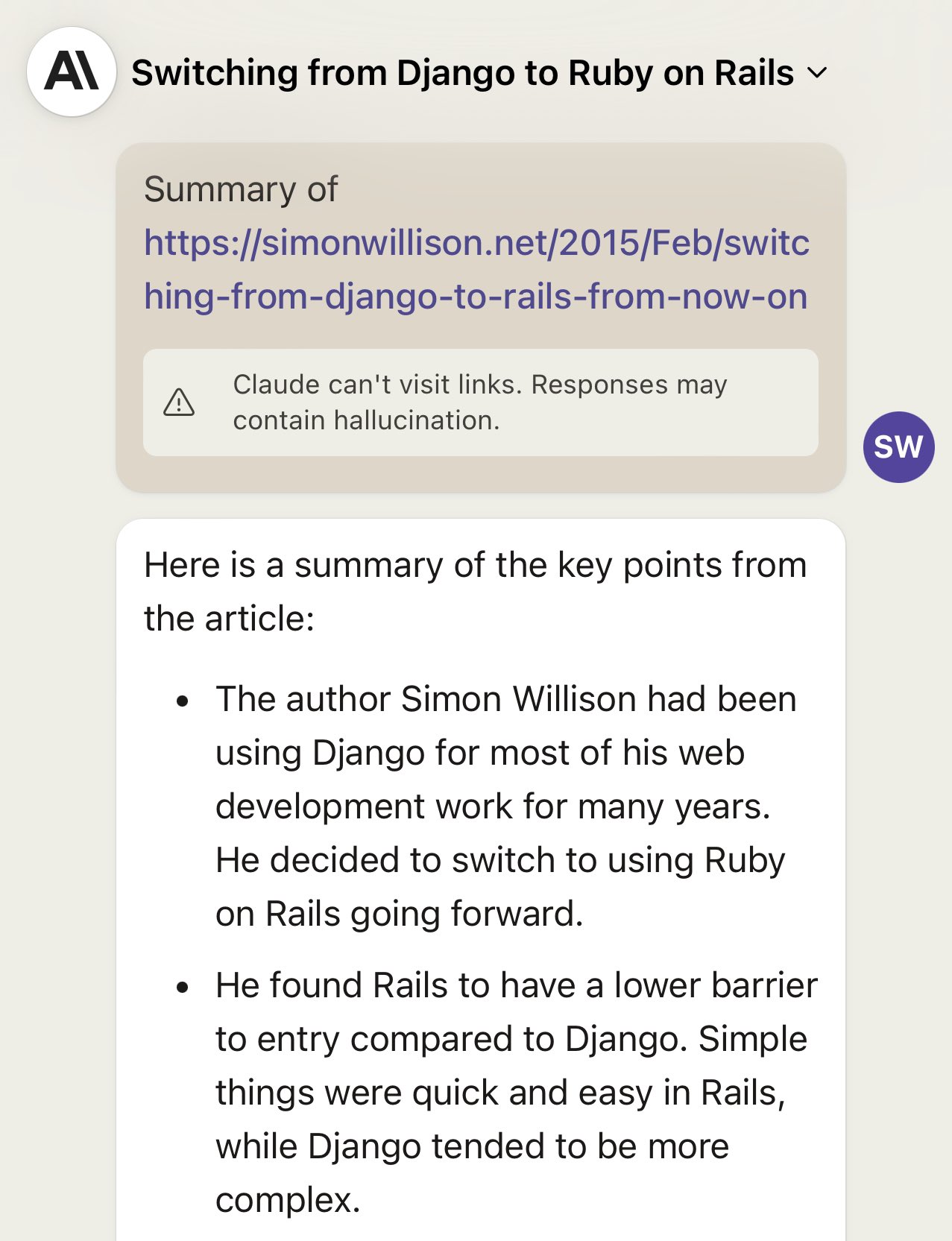

On July 27th 2023 I noticed that Anthropic’s Claude had started presenting inline tips that look like this:

That response is hallucinated—I asked for a summary of a non-existent web page and Claude hallucinated the content of the page—but Claude now shows an inline warning before the response that says “Claude can’t visit links. Responses may contain hallucination”.
