First impressions of DALL-E, generating images from text

23rd June 2022

I made it off the DALL-E waiting list a few days ago and I’ve been having an enormous amount of fun experimenting with it. Here are some notes on what I’ve learned so far (and a bunch of example images too).

(For those not familiar with it, DALL-E is OpenAI’s advanced text-to-image generator: you feed it a prompt, it generates images. It’s extraordinarily good at it.)

First, a warning: DALL-E only allows you to generate up to 50 images a day. I found this out only when I tried to generate image number 51. So there’s a budget to watch out for.

I’ve usually run out by lunch time!

How to use DALL-E

DALL-E is even simpler to use than GPT-3: you get a text box to type in, and that’s it. There are no advanced settings to tweak.

It does have one other mode: you can upload your own photo, crop it to a square and then erase portions of it and ask DALL-E to fill them in with a prompt. This feature is clearly still in the early stages—I’ve not had great results with it yet.

DALL-E always returns six resulting images, which I believe it has selected as the “best” from hundreds of potential results.

Tips on prompts

DALL-E’s initial label suggests that you “Start with a detailed description”. This is very good advice!

The more detail you provide, the more interesting DALL-E gets.

If you type “Pelican”, you’ll get an image that is indistinguishable from what you might get from something like Google Image search. But the more details you ask for, the more interesting and fun the result.

Fun with pelicans

Here’s “A ceramic pelican in a Mexican folk art style with a big cactus growing out of it”:

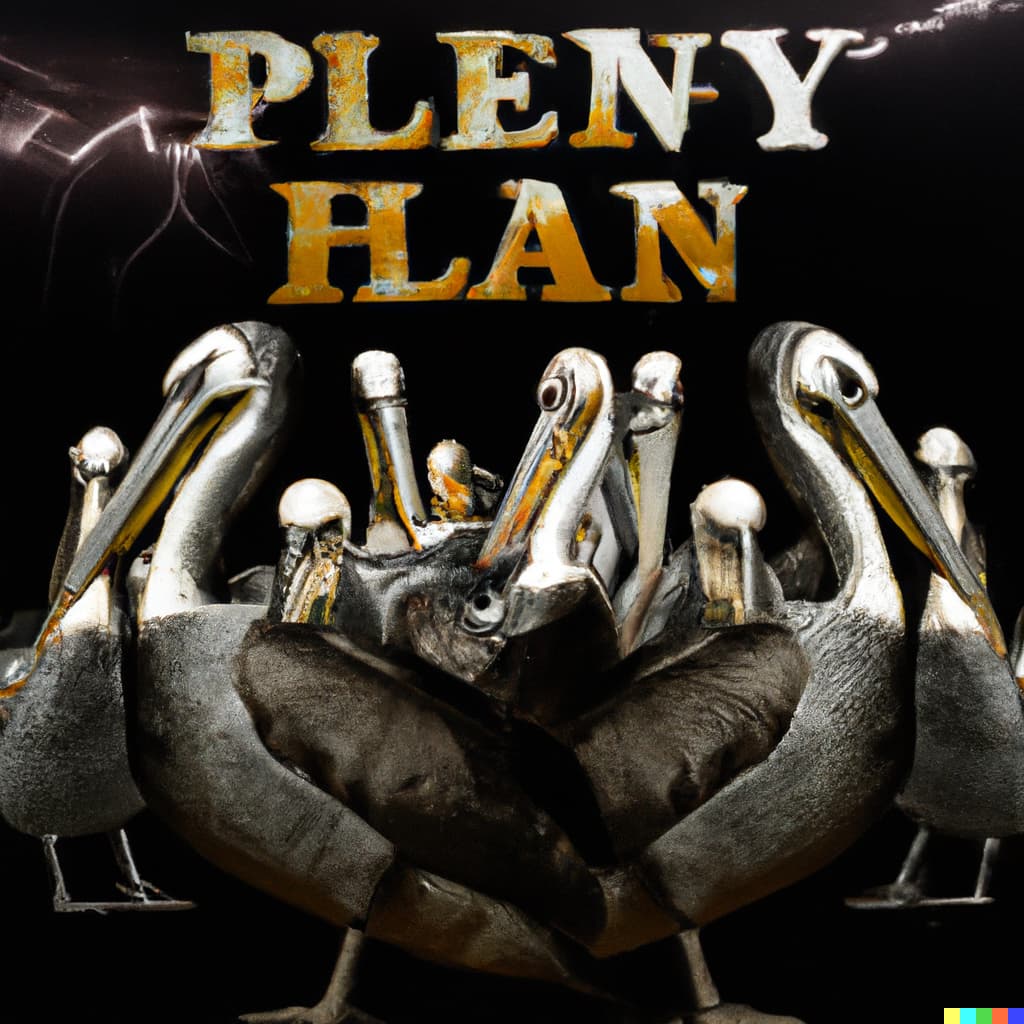

Some of the most fun results you can have come from providing hints as to a medium or art style you would like. Here’s “A heavy metal album cover where the band members are all pelicans... made of lightning”:

This illustrates a few interesting points. Firstly, DALL-E is hilariously bad at any images involving text. It can make things that look like letters and words but it has no concept of actual writing.

My initial prompt was for “A death metal album cover...”—but DALL-E refused to generate that. It has a filter to prevent people from generating images that go outside its content policy, and the word “death” triggered it.

(I’m confident that the filter can be easily avoided, but I don’t want to have my access revoked so I haven’t spent any time pushing its limits.)

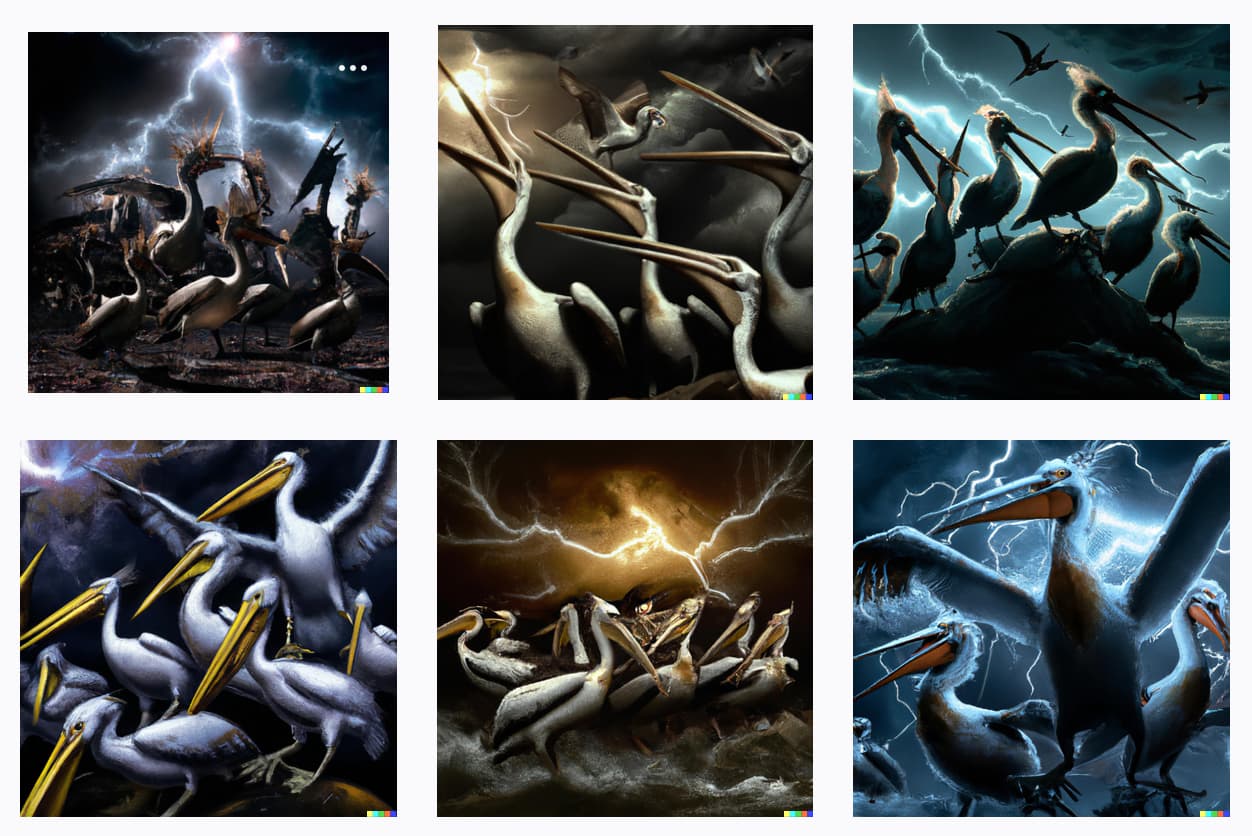

It’s also not a great result—those pelicans are not made of lightning! I tried a tweaked prompt:

“A heavy metal album cover where the band members are all pelicans that are made of lightning”:

Still not made of lightning. One more try:

“pelican made of lightning”:

Let’s try the universal DALL-E cheat code, adding “digital art” to the prompt.

“a pelican made of lightning, digital art”

OK, those look a lot better!

One last try—the earlier prompt but with “digital art” added.

“A heavy metal album cover where the band members are all pelicans that are made of lightning, digital art”:

OK, these are cool. The text is gone; maybe the “digital art” influence overrode the “album cover” a tiny bit there.

This process is a good example of “prompt engineering”—feeding in altered prompts to try to iterate towards a better result. This is a very deep topic, and I’m confident I’ve only just scratched the surface of it.
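The iteration above, where the same idea is reworded and then retried with “digital art” appended, can be sketched as a tiny script. This is only an illustration: `prompt_variants` and its parameters are hypothetical names of my own, and the code does not talk to DALL-E at all. It just builds the prompt strings so you can paste them into the text box one at a time.

```python
from itertools import product


def prompt_variants(subjects, framings, modifiers):
    """Return every combination of framing, subject and trailing modifier.

    All three arguments are plain lists of strings. A framing is a template
    containing "{subject}"; an empty modifier means "add nothing".
    (Hypothetical helper for illustration, not part of any DALL-E tooling.)
    """
    variants = []
    for framing, subject, modifier in product(framings, subjects, modifiers):
        prompt = framing.format(subject=subject)
        if modifier:
            # Style hints such as "digital art" go at the end, after a comma.
            prompt = f"{prompt}, {modifier}"
        variants.append(prompt)
    return variants


# Rebuild the prompts tried above: with and without the album cover framing,
# with and without the "digital art" suffix.
for prompt in prompt_variants(
    subjects=["pelicans that are made of lightning"],
    framings=[
        "A heavy metal album cover where the band members are all {subject}",
        "{subject}",
    ],
    modifiers=["", "digital art"],
):
    print(prompt)
```

Keeping the subject, the framing and the style hint separate makes it easier to see which part of a prompt changed the result when you compare the images side by side.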

Breaking away from album art, here’s “A squadron of pelicans having a tea party in a forest with a raccoon, digital art”. Often when you specify “digital art” it picks some other additional medium:

Recreating things you see

A fun game I started to play with DALL-E was to see if I could get it to recreate things I saw in real life.

My dog, Cleo, was woofing at me for breakfast. I took this photo of her:

Then I tried this prompt: “A medium sized black dog who is a pit bull mix sitting on the ground wagging her tail and woofing at me on a hardwood floor”

OK, wow.

Later, I caught her napping on the bed:

Here’s DALL-E for “A medium sized black pit bull mix curled up asleep on a dark green duvet cover”:

One more go at that. Our chicken Cardi snuck into the house and snuggled up on the sofa. Before I evicted her back into the garden I took this photo:

“a black and white speckled chicken with a red comb snuggled on a blue sofa next to a cushion with a blue seal pattern and a blue and white knitted blanket”:

Clearly I didn’t provide a detailed enough prompt here! I would need to iterate on this one a lot.

Stained glass

DALL-E is great at stained glass windows.

“Pelican in a waistcoat as a stained glass window”:

"A stained glass window depicting 5 different nudibranchs"

People

DALL-E is (understandably) quite careful about depictions of people. It won’t let you upload images with recognisable faces in them, and when you ask for an image of a famous person it will sometimes pull off tricks like showing them from behind.

Here’s “The pope on a bicycle leading a bicycle race through Paris”:

Though maybe it was the “leading a bicycle race” part that inspired it to draw the image from this direction? I’m not sure.

It will sometimes generate made-up people with visible faces, but OpenAI asks users not to share those images.

Assorted images

Here are a bunch of images that I liked, with their prompts.

Inspired by one of our chickens:

“A blue-grey fluffy chicken puffed up and looking angry perched under a lemon tree”

I asked it for the same thing, painted by Salvador Dali:

“A blue-grey fluffy chicken puffed up and looking angry perched under a lemon tree, painted by Salvador Dali”:

“Bats having a quinceañera, digital art”:

“The scene in an Agatha Christie mystery where the detective reveals who did it, but everyone is a raccoon. Digital art.”:

(It didn’t make everyone a raccoon. It also refused my initial prompt where I asked for an Agatha Christie murder mystery, presumably because of the word “murder”.)

“An acoustic guitar decorated with capybaras in Mexican folk art style, sigma 85mm”:

Adding “sigma 85mm” (and various other mm lengths) is a trick I picked up which gives you realistic images that tend to be cropped well.

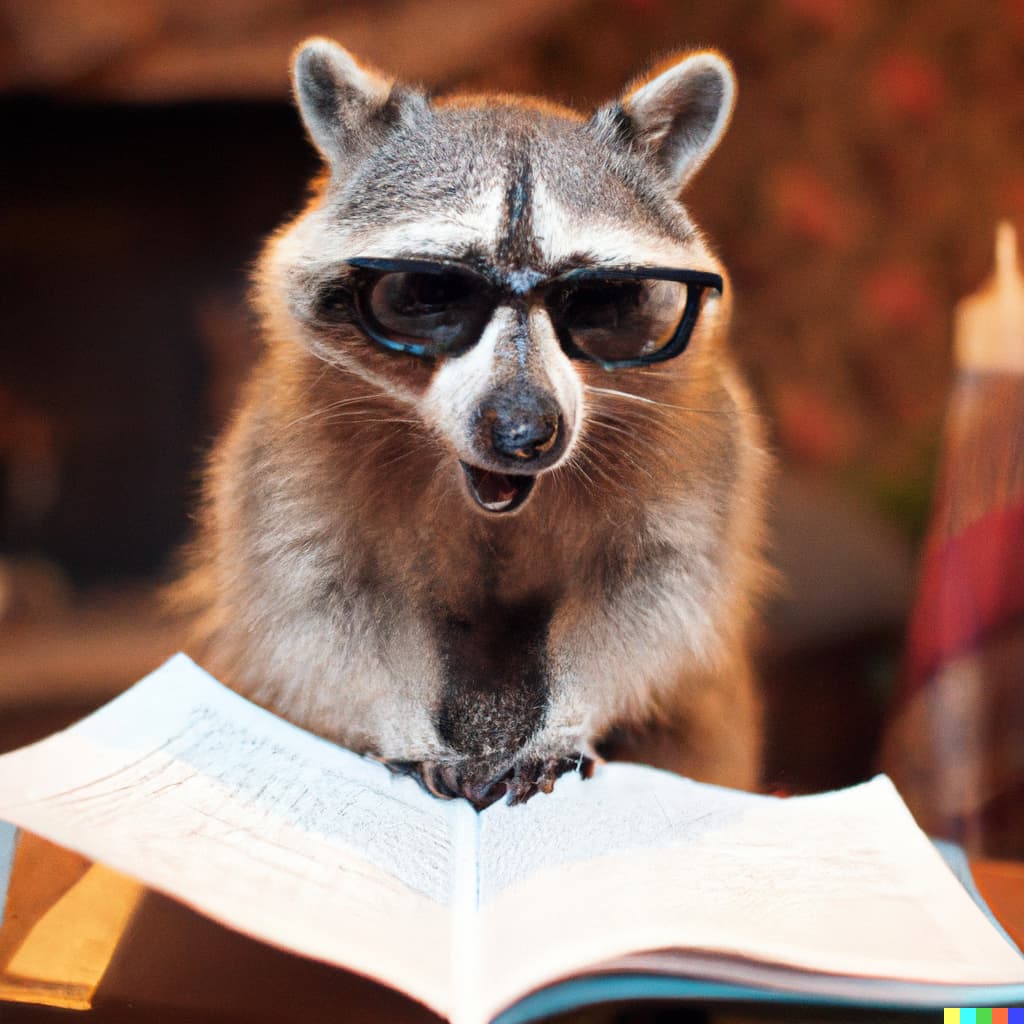

“A raccoon wearing glasses and reading a poem at a poetry evening, sigma 35mm”:

“Pencil sketch of a Squirrel reading a book”:

Pencil sketches come out fantastically well.

“The royal pavilion in brighton covered in snow”

I experienced this once, many years ago when I lived in Brighton—but forgot to take a photo of it. It looked exactly like this.

And a game: fantasy breakfast tacos

It’s difficult to overstate how much fun playing with this stuff is. Here’s a game I came up with: fantasy breakfast tacos. See how tasty a taco you can invent!

Mine was “breakfast tacos with lobster, steak, salmon, sausages and three different sauces”:

Natalie is a vegetarian, which I think puts her at a disadvantage in this game. “breakfast taco containing cauliflower, cheesecake, tomatoes, eggs, flowers”:

Closing thoughts

As you can see, I have been enjoying playing with this a LOT. I could easily share twice as much—the above are just the highlights from my experiments so far.

The obvious question raised by this is how it will affect people who generate art and design for a living. I don’t have anything useful to say about that, other than recommending that they make themselves familiar with the capabilities of these kinds of tools—which have taken an astonishing leap forward in the past few years.

My current mental model of DALL-E is that it’s a fascinating tool for enhancing my imagination. Being able to imagine something and see it visualized a few seconds later is an extraordinary new ability.

I haven’t yet figured out how to apply this to real world problems that I face—my attempts at getting DALL-E to generate website wireframes or explanatory illustrations have been unusable so far—but I’ll keep on experimenting with it. Especially since feeding it prompts is just so much fun.
