Porting VaccinateCA to Django

12th April 2021

As I mentioned back in February, I’ve been working with the VaccinateCA project to try to bring the pandemic to an end a little earlier by helping gather as accurate a model as possible of where the Covid vaccine is available in California and how people can get it.

The key activity at VaccinateCA is calling places to check on their availability and eligibility criteria. Up until last night this was powered by a heavily customized Airtable instance, accompanied by a custom JavaScript app for the callers that communicated with the Airtable API via some Netlify functions.

Today, the flow is powered by a new custom Django backend, running on top of PostgreSQL.

The thing you should never do

Here’s one that took me fifteen years to learn: “let’s build a new thing and replace this” is hideously dangerous: 90% of the time you won’t fully replace the old thing, and now you have two problems!

- Simon Willison (@simonw) June 29, 2019

Replacing an existing system with a from-scratch rewrite is risky. Replacing a system that is built on something as flexible as Airtable that is evolving on a daily basis is positively terrifying!

Airtable served us extremely well, but unfortunately there are hard limits to the number of rows Airtable can handle and we’ve already bounced up against them and had to archive some of our data. To keep scaling the organization we needed to migrate away.

We needed to build a matching relational database with a comprehensive, permission-controlled interface for editing it, plus APIs to drive our website and application. And we needed to do it using the most boring technology possible, so we could focus on solving problems directly rather than researching anything new.

It will never cease to surprise me that Django has attained boring technology status! VaccinateCA sits firmly in Django’s sweet-spot, so we used it to build our replacement.

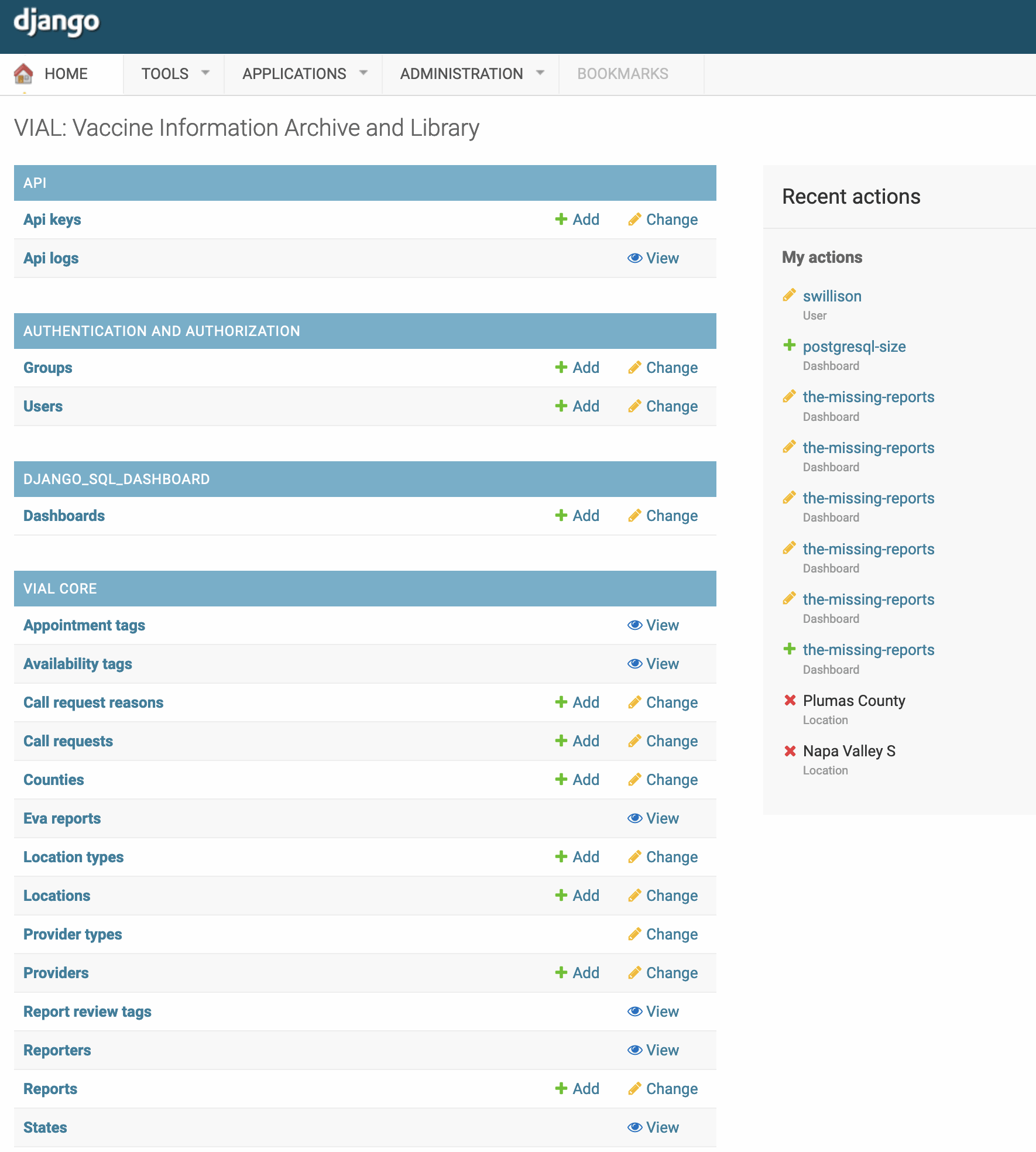

The new Django-based system is called VIAL, for “Vaccine Information Archive and Library”—a neat Jesse Vincent bacronym.

We switched things over to VIAL last night, but we still have activity in Airtable as well. I expect we’ll keep using Airtable for the lifetime of the organization—there are plenty of ad-hoc data projects for which it’s a perfect fit.

The most important thing here is to have a trusted single point of truth for any piece of information. I’m not quite ready to declare victory on that point just yet, but hopefully I will be once things settle down over the next few days.

Data synchronization patterns

The first challenge, before even writing any code, was how to get stuff out of Airtable. I built a tool for this a while ago called airtable-export, and it turned out the VaccinateCA team were using it already before I joined!

airtable-export was already running several times an hour, backing up the data in JSON format to a GitHub repository (a form of Git scraping). This gave us a detailed history of changes to the Airtable data, which occasionally proved extremely useful for answering questions about when a specific record was changed or deleted.

Having the data in a GitHub repository was also useful because it gave us somewhere to pull data from that wasn’t governed by Airtable’s rate limits.

I iterated through a number of different approaches for writing importers for the data.

Each Airtable table ended up as a single JSON file in our GitHub repository, containing an array of objects—those files got pretty big, topping out at about 80MB.

I started out with Django management commands, which could be passed a file or a URL. A neat thing about using GitHub for this is that you can use the “raw data” link to obtain a URL with a short-lived token, which grants access to that file. So I could create a short-term URL and paste it directly to my import tool.

I don’t have a good pattern for running Django management commands on Google Cloud Run, so I started moving to API-based import scripts instead.

The pattern that ended up working best was to provide a /api/importRecords API endpoint which accepts a JSON array of items.

The API expects the input to have a unique primary key in each record—airtable_id in our case. It then uses Django’s update_or_create() ORM method to create new records if they were missing, and update existing records otherwise.
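As a rough illustration of that upsert loop (not VIAL's actual code), here is a minimal sketch. The `import_records()` helper and the `name` field are hypothetical. `manager` is assumed to be anything that exposes Django's `update_or_create(defaults=..., **lookup)` method, such as `Report.objects`; the `FakeManager` class is only an in-memory stand-in so the sketch runs without a database.

```python
class FakeManager:
    """In-memory stand-in that mimics the (object, created) return value of
    Django's QuerySet.update_or_create(). Hypothetical, for illustration only."""

    def __init__(self):
        self.rows = {}

    def update_or_create(self, defaults=None, **lookup):
        key = lookup["airtable_id"]
        created = key not in self.rows
        row = self.rows.setdefault(key, dict(lookup))
        row.update(defaults or {})
        return row, created


def import_records(items, manager):
    """Upsert each item keyed on airtable_id; return created/updated counts."""
    counts = {"created": 0, "updated": 0}
    for item in items:
        _, created = manager.update_or_create(
            airtable_id=item["airtable_id"],
            defaults={
                "name": item.get("name", ""),  # hypothetical field
                "import_json": item,  # keep the original JSON alongside
            },
        )
        counts["created" if created else "updated"] += 1
    return counts
```

Because the lookup is on `airtable_id` alone, re-posting the same batch is safe: existing rows are updated in place rather than duplicated.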

One remaining challenge: posting 80MB of JSON to an API in one go would likely run into resource limits. I needed a way to break that input up into smaller batches.

I ended up building a new tool for this called json-post. It has an extremely specific use-case: it’s for when you want to POST a big JSON array to an API endpoint but you want to first break it up into batches!

Here’s how to break up the JSON in Reports.json into 50 item arrays and send them to that API as separate POSTs:

    json-post Reports.json \
      "https://example.com/api/importReports" \
      --batch-size 50
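The batching idea itself is small. The following is a sketch of the general technique, not json-post's real implementation; `batches()`, `post_in_batches()` and the `send` callable (which would wrap an HTTP POST in practice) are all hypothetical names.

```python
import json


def batches(items, batch_size):
    """Yield successive slices of items, each at most batch_size long."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def post_in_batches(items, batch_size, send):
    """Call send(body) once per batch, where body is a JSON-encoded array.

    Returns the list of whatever send() returned for each batch.
    """
    return [send(json.dumps(batch)) for batch in batches(items, batch_size)]
```

Each call to `send` receives a complete, valid JSON array, so the receiving endpoint does not need to know that the input was split.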

Here are some more complex options. In this example we pass an Authorization: Bearer XXXtokenXXX API key header, run the array in reverse, record our progress (the JSON responses from the API, as newline-delimited JSON) to a log file, set a longer HTTP read timeout and filter for just specific items:

    json-post Reports.json \
      "https://example.com/api/importReports" \
      -h Authorization 'Bearer XXXtokenXXX' \
      --batch-size 50 \
      --reverse \
      --log /tmp/progress.txt \
      --http-read-timeout 20 \
      --filter 'item.get("is_soft_deleted")'

The --filter option proved particularly useful. As we kicked the tires on VIAL we would spot new bugs—things like the import script failing to correctly record the is_soft_deleted field we were using in Airtable. Being able to filter that input file with a command-line flag meant we could easily re-run the import just for a subset of reports that were affected by a particular bug.

--filter takes a Python expression that gets compiled into a function and passed item as the current item in the list. I borrowed the pattern from my sqlite-transform tool.
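One way such an option could work is sketched below. This is an assumption about the general pattern, not a copy of json-post or sqlite-transform; `compile_filter()` is a hypothetical name. It uses `eval()`, so it is only appropriate for expressions you typed yourself.

```python
def compile_filter(expression):
    """Turn a Python expression string into a function of one argument, item."""
    code = compile(expression, "<filter>", "eval")

    def matches(item):
        # Evaluate the expression with `item` as the only name supplied
        # (builtins are added automatically); coerce the result to bool.
        return bool(eval(code, {"item": item}))

    return matches


keep = compile_filter('item.get("is_soft_deleted")')
reports = [{"id": 1, "is_soft_deleted": True}, {"id": 2}]
affected = [report for report in reports if keep(report)]
```

Compiling the expression once up front means a syntax error is reported before any items are processed, and the same code object is reused for every item.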

The value of API logs

VaccinateCA’s JavaScript caller application used to send data to Airtable via a Netlify function, which made it possible to add an extra authentication layer built using Auth0.

Back in February, the team had the bright idea to log the API traffic to that function to a separate base in Airtable—including full request and response bodies.

This proved invaluable for debugging. It also meant that when I started building VIAL’s alternative implementation of the “submit a call report” API I could replay historic API traffic that had been recorded in that table, giving me a powerful way to exercise the new API with real-world traffic.

This meant that when we turned on VIAL we could switch our existing JavaScript SPA over to talking to it using a fully tested clone of the existing Airtable-backed API.
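The replay idea can be sketched in a few lines. This is an illustration under assumptions, not the script used for VIAL: the `request_body` and `response_body` keys are hypothetical names for the logged fields, and `handler` stands in for whatever calls the new API.

```python
def replay(log_entries, handler):
    """Feed each logged request body to handler and return the entries whose
    new response differs from the response recorded in the log."""
    mismatches = []
    for entry in log_entries:
        actual = handler(entry["request_body"])
        if actual != entry["response_body"]:
            mismatches.append({"entry": entry, "actual": actual})
    return mismatches
```

In practice a strict equality check is probably too harsh, since responses often contain generated IDs or timestamps; comparing a chosen subset of keys would be a reasonable refinement.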

VIAL implements this logging pattern again, this time using Django and PostgreSQL.

Given that the writable APIs will receive requests numbering in the low thousands per day, keeping them in a database table works great. The table has grown to 90MB so far. I’m hoping that the pandemic will be over before we have to worry about logging capacity!

We’re using PostgreSQL jsonb columns to store the incoming and returned JSON, via Django’s JSONField. This means we can do in-depth API analysis using PostgreSQL’s JSON SQL functions! Being able to examine returned JSON error messages or aggregate across incoming request bodies helped enormously when debugging problems with the API import scripts.

Storing the original JSON

Today, almost all of the data stored in VIAL originated in Airtable. One trick that has really helped build the system is that each of the tables that might contain imported data has both an airtable_id nullable column and an import_json JSON field.

Any time we import a record from Airtable, we record both the ID and the full, original Airtable JSON that we used for the import.

This is another powerful tool for debugging: we can view the original Airtable JSON directly in the Django admin interface for a record, and confirm that it matches the ORM fields that we set from that.

I came up with a simple pattern for pretty-printing all read-only JSON in the Django admin that helps with this too.
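The core of a pattern like this is just indenting and escaping the JSON. The sketch below is a hypothetical helper, `pretty_json_html()`, written in plain Python so it runs on its own; in a Django admin you would typically call something like it from a method listed in `readonly_fields` and wrap the result in `mark_safe()`.

```python
import html
import json


def pretty_json_html(value):
    """Render a JSON-serializable value as an escaped, indented <pre> block."""
    text = json.dumps(value, indent=2, sort_keys=True)
    # Escape <, > and & so values from imported data cannot inject markup.
    return "<pre>{}</pre>".format(html.escape(text, quote=False))
```

Escaping before marking the string as safe matters here, because the imported JSON comes from outside the application.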

Staying as flexible as possible

The thing that worried me most about replacing Airtable with Django was Airtable’s incredible flexibility. In the organization’s short life it has already solved so many problems by adding new columns in Airtable, or building new views.

Is it possible to switch to custom software without losing that huge cultural advantage?

This is the same reason it’s so hard for custom software to compete with spreadsheets.

We’ve only just made the switch, so we won’t know for a while how well we’ve done at handling this. I have a few mechanisms in place that I’m hoping will help.

The first is django-sql-dashboard. I wrote about this project in two previous weeknotes. The goal is to bring some of the ideas from Datasette into the Django/PostgreSQL world, by providing a read-only mechanism for constructing SQL queries, bookmarking and saving the results and outputting simple SQL-driven visualizations.

We have a lot of SQL knowledge at VaccinateCA, so my hope is that people who know SQL will be able to solve their own problems, and people who don’t know SQL yet will have no trouble finding someone who can help them.

In the boring technology model of things, django-sql-dashboard counts as the main innovation token I’m spending for this project. I’m optimistic that it will pay off.

I’m also leaning heavily on Django’s migration system, with the aim of making database migrations common and boring, rather than their usual default of being rare and exciting. We’re up to 77 migrations already, in a codebase that is just over two months old!

I think a culture that evolves the database schema quickly and with as little drama as possible is crucial to maintaining the agility that this kind of organization needs.

Aside from the Django Admin providing the editing interface, everything that comes into and goes out of VIAL happens through APIs. These are fully documented: I want people to be able to build against the APIs independently, especially for things like data import.

After seeing significant success with PostgreSQL JSON already, I’m considering using it to add even more API-driven flexibility to VIAL in the future. Allowing our client developers to start collecting a new piece of data from our volunteers in an existing JSON field, then migrating that into a separate column once it has proven its value, is very tempting indeed.

Open source tools we are using

An incomplete list of open source packages we are using for VIAL so far:

- pydantic—as a validation layer for some of the API endpoints

- social-auth-app-django—to integrate with Auth0

- django-cors-headers

- python-jose—for JWTs, which were already in use by our Airtable caller app

- django-reversion and django-reversion-compare to provide a diffable, revertable history of some of our core models

- django-admin-tools—which adds a handy customizable menu to the admin, good for linking to additional custom tools

- django-migration-linter—to help avoid accidentally shipping migrations that could cause downtime during a deploy

- pytest-django, time-machine and pytest-httpx for our unit tests

- sentry-sdk, honeycomb-beeline and prometheus-client for error logging and observability

Want to help out?

VaccinateCA is hiring! It’s an interesting gig, because the ultimate goal is to end the pandemic and put this non-profit permanently out of business. So if you want to help end things faster, get in touch.

VaccinateCA is hiring a handful of engineers to help scale our data ingestion and display by more than an order of magnitude.

If you’d like to register interest: https://t.co/BSvi40sW1M

Generalists welcome. Three subprojects; Python backend/pedestrian front-end JS.

- Patrick McKenzie (@patio11) April 7, 2021

TIL this week

- Language-specific indentation settings in VS Code

- Efficient bulk deletions in Django

- Using unnest() to use a comma-separated string as the input to an IN query

Releases this week

- json-post: 0.2 (3 total releases), 2021-04-11. Tool for posting JSON to an API, broken into pages

- airtable-export: 0.7.1 (10 total releases), 2021-04-09. Export Airtable data to YAML, JSON or SQLite files on disk

- django-sql-dashboard: 0.6a0 (13 total releases), 2021-04-09. Django app for building dashboards using raw SQL queries
