Datasette 0.44: The annotated release notes

12th June 2020

I just released Datasette 0.44 to PyPI. With 128 commits since 0.43 this is the biggest release in a long time—and likely the last major release of new features before Datasette 1.0.

You can read the full release notes here, but I’ve decided to try something a little different for this release and write up some annotations here on my blog.

Writable canned queries

Datasette’s Canned queries feature lets you define SQL queries in metadata.json which can then be executed by users visiting a specific URL. https://latest.datasette.io/fixtures/neighborhood_search for example.

Canned queries were previously restricted to SELECT, but Datasette 0.44 introduces the ability for canned queries to execute INSERT or UPDATE queries as well, using the new "write": true property (#800)

I originally intended this to be the main feature in the release.

Datasette 0.37 added the ability for plugins to write to the database. This marked a pretty huge philosophical shift for Datasette: from a read-only publishing system to a framework for building interactive SQL-driven applications. But you needed a plugin, such as my datasette-upload-csvs.

I realized back in March that canned queries could provide a simple, sensible way to start adding write functionality to Datasette core. A query could be configured like this:

    {
        "databases": {
            "my-database": {
                "queries": {
                    "add_twitter_handle": {
                        "sql": "insert into twitter_handles (username) values (:username)",
                        "write": true
                    }
                }
            }
        }
    }

And Datasette could then provide a form interface at /my-database/add_twitter_handle for executing that query. How hard could that be to implement?
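
To make the mechanics concrete, here is a minimal sketch of what executing such a query boils down to, using Python's sqlite3 module directly rather than Datasette itself. The run_write_query helper and the in-memory table are assumptions for illustration only; the point is that the :username placeholder is bound from submitted form data rather than interpolated into the SQL string.

```python
import sqlite3

# The SQL from the canned query configuration above
SQL = "insert into twitter_handles (username) values (:username)"


def run_write_query(conn, sql, form_data):
    # Hypothetical helper: bind named parameters from submitted form data.
    # sqlite3 binds the values itself, so they are never spliced into the SQL.
    with conn:  # commits on success, rolls back on error
        cursor = conn.execute(sql, form_data)
    return cursor.rowcount


conn = sqlite3.connect(":memory:")
conn.execute("create table twitter_handles (username text)")
inserted = run_write_query(conn, SQL, {"username": "simonw"})
rows = conn.execute("select username from twitter_handles").fetchall()
```

Because the parameter is bound rather than formatted into the query, a submitted value containing quotes or SQL keywords is stored as plain text.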

The problem with “simple” features like this is that they open up a cascade of other features.

If you’re going to have write queries, you probably need to restrict who can execute them—which means authentication and permissions. If forms are performing POSTs you need CSRF protection. If users are changing state you need a way to show them messages telling them what happened.

So let’s talk about authentication and permissions.

Authentication

Prior to this release the Datasette ecosystem has treated authentication as exclusively the realm of plugins, most notably through datasette-auth-github.

0.44 introduces Authentication and permissions as core Datasette concepts (#699). This makes it easier for different plugins to share responsibility for authenticating requests: you might have one plugin that handles user accounts and another one that allows automated access via API keys, for example.

I demonstrated with datasette-auth-github and datasette-auth-existing-cookies that authentication could exist as a completely separate layer from Datasette itself (thanks to the magic of ASGI middleware). But I’d started to run into limitations of this approach.

Crucially, I wanted to be able to support more than one kind of authentication. Users might get authenticated with cookies (via an SSO mechanism such as GitHub’s) but API clients need API keys. Now the different authentication plugins need to make sure they don’t accidentally interfere with each other’s logic.

Authentication in web applications always comes down to the same thing: inspecting aspects of the incoming HTTP request (headers, cookies, querystring variables etc) and deciding if they prove that the request is coming from a specific user or API integration.

I decided to use the word “actor” for this, since “user or API integration” is a bit of a mess. This means that authentication can work entirely via a new plugin hook, actor_from_request.

Here’s a really simple implementation of that hook, copied directly from the documentation:

    from datasette import hookimpl
    import secrets

    SECRET_KEY = "this-is-a-secret"

    @hookimpl
    def actor_from_request(datasette, request):
        authorization = request.headers.get("authorization") or ""
        expected = "Bearer {}".format(SECRET_KEY)
        # Constant-time comparison avoids leaking the key through timing
        if secrets.compare_digest(authorization, expected):
            return {"id": "bot"}

It returns an “actor dictionary” describing the authenticated actor. Datasette currently has no opinion at all on what shape this dictionary should take, though I expect conventions to emerge over time.

I have a new policy of never releasing a new plugin hook without also building a real-world plugin with it. For actor_from_request I’ve released datasette-auth-tokens, which lets you create secret API tokens in a configuration file and specify which actions they are allowed to perform.
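
As a rough illustration of how several authentication strategies might coexist, here is a standalone sketch that does not use Datasette at all. FakeRequest, actor_from_cookie and resolve_actor are hypothetical names, and the "first non-None answer wins" rule is an assumption made for this sketch rather than a description of Datasette's internals.

```python
import secrets

SECRET_KEY = "this-is-a-secret"  # placeholder value, as in the example above


class FakeRequest:
    # Hypothetical stand-in for a request object, for illustration only
    def __init__(self, headers=None, cookies=None):
        self.headers = headers or {}
        self.cookies = cookies or {}


def actor_from_bearer_token(request):
    authorization = request.headers.get("authorization") or ""
    expected = "Bearer {}".format(SECRET_KEY)
    if secrets.compare_digest(authorization, expected):
        return {"id": "bot"}
    return None  # no opinion: let another strategy try


def actor_from_cookie(request):
    # Assumption: a trusted upstream layer has already verified this cookie
    user_id = request.cookies.get("user_id")
    if user_id:
        return {"id": user_id}
    return None


def resolve_actor(request, strategies):
    # Ask each strategy in turn; the first non-None answer wins
    for strategy in strategies:
        actor = strategy(request)
        if actor is not None:
            return actor
    return None


strategies = [actor_from_bearer_token, actor_from_cookie]
api_actor = resolve_actor(
    FakeRequest(headers={"authorization": "Bearer this-is-a-secret"}), strategies
)
browser_actor = resolve_actor(FakeRequest(cookies={"user_id": "simonw"}), strategies)
anonymous = resolve_actor(FakeRequest(), strategies)
```

Each strategy only speaks up when it recognizes its own credentials, which is what keeps the strategies from interfering with each other.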

Permissions

Datasette also now has a built-in concept of Permissions. The permissions system answers the following question:

Is this actor allowed to perform this action, optionally against this particular resource?

You can use the new "allow" block syntax in metadata.json (or metadata.yaml) to set required permissions at the instance, database, table or canned query level. For example, to restrict access to the fixtures.db database to the "root" user:

    {
        "databases": {
            "fixtures": {
                "allow": {
                    "id": "root"
                }
            }
        }
    }

See Defining permissions with “allow” blocks for more details.

Plugins can implement their own custom permission checks using the new permission_allowed(datasette, actor, action, resource) hook.

Authentication on its own isn’t enough: you also need a way of deciding if an authenticated actor has permission to perform a specific action.

I was dreading adding permissions to Datasette. I have a long-running feud with Amazon IAM and the Google Cloud equivalent: I’ve been using AWS for over a decade and I still get completely lost any time I try to figure out the minimum set of permissions for something.

But... I want Datasette permissions to be flexible. My dream for Datasette is to nurture a growing ecosystem of plugins that can solve data collaboration and analysis problems way beyond what I’ve imagined myself.

Thanks to Datasette’s plugin hooks, I think I’ve found a way to provide powerful plugins with minimum additional footprint to Datasette itself.

The key is the new permission_allowed() hook, which lets plugins receive an actor, action and optional resource and allows them to reply with “allow”, “deny” or “I don’t know, ask someone else”.

Its partner is the datasette.permission_allowed(actor, action, resource) method, which plugins (and Datasette core) can call to check if the current actor is allowed to perform an action against a given resource (a specific database, table or canned query).
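
The three possible replies map naturally onto True, False and None. The sketch below is a standalone illustration of that idea, not Datasette's implementation: check_permission and both check functions are hypothetical, "drop-table" is a made-up action name, and the rule that the first definite answer wins is an assumption chosen to keep the example short.

```python
def check_permission(checks, actor, action, resource=None, default=False):
    # Each check returns True (allow), False (deny) or None (no opinion)
    for check in checks:
        verdict = check(actor, action, resource)
        if verdict is not None:
            return verdict
    # Nobody had an opinion, so fall back to the default
    return default


def root_can_do_anything(actor, action, resource):
    if actor and actor.get("id") == "root":
        return True
    return None


def nobody_drops_tables(actor, action, resource):
    # "drop-table" is a made-up action name for this sketch
    if action == "drop-table":
        return False
    return None


checks = [nobody_drops_tables, root_can_do_anything]
root = {"id": "root"}
root_can_view = check_permission(checks, root, "view-query", ("mydatabase", "q"))
root_can_drop = check_permission(checks, root, "drop-table")
anonymous_can_view = check_permission(checks, None, "view-query")
```

The None reply is what lets small, single-purpose checks be combined: each one only needs to handle the cases it cares about.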

I invented a JSON/YAML based syntax for defining simple permission rules. If you want to provide access to a table to a specific user you can do so like this:

    {
        "allow": {
            "id": "simonw"
        }
    }

Or you can grant access to any actor with a role of “staff” like so:

    {
        "allow": {
            "role": "staff"
        }
    }

What are roles here? They’re nothing at all to Datasette itself. Datasette authentication plugins can create actors of any shape, so if your plugin decides that “role” is a useful concept it can just bake it into the actor.

You can read more about how these “allow” blocks work in the documentation.
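
A minimal sketch of how the two rules above could be evaluated against an actor dictionary follows. The actor_matches function is hypothetical and covers only single-value rules like the ones shown here; treating multiple keys as alternatives is an assumption of this sketch, and the documentation remains the authority on the full syntax.

```python
def actor_matches(actor, allow):
    # Hypothetical helper covering only the single-value rules shown above.
    # Assumption: if several keys are listed, matching any one is enough.
    if actor is None:
        return False  # unauthenticated requests never match
    return any(actor.get(key) == value for key, value in allow.items())


allow_simonw = {"id": "simonw"}
allow_staff = {"role": "staff"}

simonw = {"id": "simonw"}
staff_member = {"id": "cleo", "role": "staff"}

simonw_by_id = actor_matches(simonw, allow_simonw)
simonw_by_role = actor_matches(simonw, allow_staff)
staff_by_role = actor_matches(staff_member, allow_staff)
```

Nothing in the function knows what a "role" is: it just compares keys and values, which is why plugins are free to shape actors however they like.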

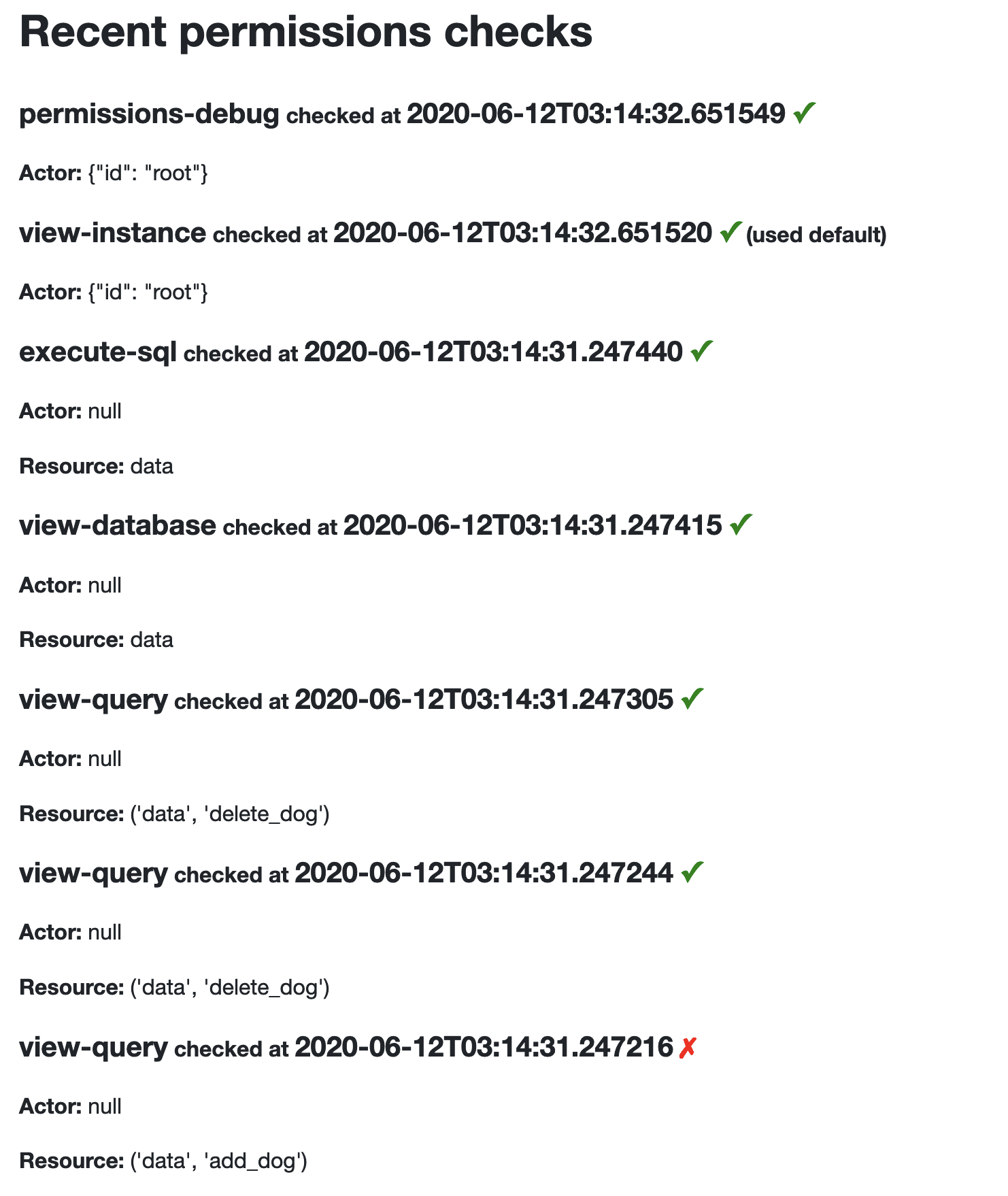

/-/permissions debug tool

Given my ongoing battle with opaque permission systems, I’m determined to try and make Datasette’s take on permissions as transparent as possible. The new /-/permissions page—visible only to authenticated users with the debug-permissions permission—shows a rolling log of the last 200 permission checks carried out by that instance. My hope is that instance administrators and plugin authors can use this to figure out exactly what is going on.
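
A rolling log with a fixed size is easy to sketch with collections.deque. The record_check function and the shape of each log entry below are assumptions for illustration, not the format the debug page actually uses.

```python
from collections import deque

# A deque with maxlen discards the oldest entry once it is full
permission_log = deque(maxlen=200)


def record_check(actor, action, resource, result):
    # Hypothetical entry shape, chosen for this sketch
    permission_log.append(
        {"actor": actor, "action": action, "resource": resource, "result": result}
    )


# Simulate 250 checks: only the most recent 200 are kept
for i in range(250):
    record_check({"id": "root"}, "view-query", ("mydatabase", "query_{}".format(i)), True)

oldest = permission_log[0]
newest = permission_log[-1]
```

The bounded size keeps memory use constant no matter how long the instance runs.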

datasette-permissions-sql

datasette-permissions-sql is my new proof-of-concept plugin that puts the permission hook to use.

It exercises a Datasette pattern I find really interesting: using SQL queries to configure custom behaviour. I first started exploring this in datasette-atom and datasette-ics.

This is best illustrated by an example metadata.yaml file. I prefer YAML over JSON for anything that includes a SQL query because YAML has support for multi-line strings:

    databases:
      mydatabase:
        queries:
          promote_to_staff:
            sql: |-
              UPDATE users
              SET is_staff=1
              WHERE id=:id
            write: true
    plugins:
      datasette-permissions-sql:
      - action: view-query
        resource:
        - mydatabase
        - promote_to_staff
        sql: |-
          SELECT * FROM users
          WHERE is_staff = 1
          AND id = :actor_id

This block does two things. It configures a writable canned query called promote_to_staff. It then uses datasette-permissions-sql to define a permission rule that says that only authenticated actors whose id appears in the users table with is_staff=1 are allowed to execute that canned query.

This is the beginnings of a full user management system in just a few lines of configuration. I’m really excited about exploring this concept further.
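
The core idea, a SQL query whose result decides a permission check, can be sketched with sqlite3 alone. This is not the plugin's code: sql_allows is a hypothetical helper, the users table is made up for the example, and "any returned row means allow" is my reading of the rule described above.

```python
import sqlite3

# The permission query from the configuration above
PERMISSION_SQL = """
SELECT * FROM users
WHERE is_staff = 1
AND id = :actor_id
"""


def sql_allows(conn, sql, actor):
    # Hypothetical helper: allow if the query returns at least one row
    if actor is None:
        return False
    row = conn.execute(sql, {"actor_id": actor["id"]}).fetchone()
    return row is not None


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, is_staff INTEGER)")
conn.executemany("INSERT INTO users (id, is_staff) VALUES (?, ?)", [(1, 1), (2, 0)])

staff_allowed = sql_allows(conn, PERMISSION_SQL, {"id": 1})
non_staff_allowed = sql_allows(conn, PERMISSION_SQL, {"id": 2})
```

Running promote_to_staff for user 2 would flip their is_staff flag, after which the same permission query would start returning a row for them.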

register_routes() plugin hooks

Plugins can now register new views and routes via the register_routes() plugin hook (#819). View functions can be defined that accept any of the current datasette object, the current request, or the ASGI scope, send and receive objects.

I thought the asgi_wrapper() hook might be enough to allow plugins to add their own custom routes and views, but the more I worked with it the more I wanted something a bit more high level.

Inspired by pytest, the view functions you can define using register_routes() benefit from a simple form of dependency injection. Any of the following counts as a valid view function—it will be called with the arguments it requests:

    import html

    from datasette.utils.asgi import Response

    async def hello(request):
        # Fall back to an empty string if ?name= is missing
        return Response.html("Hello {}".format(
            html.escape(request.args.get("name") or "")
        ))

    async def page(request, datasette):
        # Await the query first, then read the first row from the results
        results = await datasette.get_database().execute(
            "select body from pages where id = :id", {
                "id": request.url_vars["page_id"]
            }
        )
        row = results.first()
        return Response.html(row["body"])

    async def asgi_hello_world(scope, receive, send):
        assert scope["type"] == "http"
        await send(
            {
                "type": "http.response.start",
                "status": 200,
                "headers": [
                    [b"content-type", b"application/json"]
                ],
            }
        )
        await send({
            "type": "http.response.body",
            "body": b'{"hello": "world"}'
        })

Here’s the code that makes this work: utility functions that pass in just the arguments that match a function’s signature.
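
The general technique can be sketched in a few lines with inspect.signature. This is a standalone illustration under my own naming, not the code linked above: call_with_requested_arguments is hypothetical, and the view functions return plain strings so the example runs without Datasette installed.

```python
import asyncio
import inspect


def call_with_requested_arguments(fn, **available):
    # Hypothetical helper: pass only the keyword arguments fn asks for
    wanted = inspect.signature(fn).parameters
    missing = [name for name in wanted if name not in available]
    if missing:
        raise TypeError("Cannot supply arguments: {}".format(missing))
    return fn(**{name: available[name] for name in wanted})


async def hello(request):
    return "Hello {}".format(request["name"])


async def page(request, datasette):
    return "{} served by {}".format(request["name"], datasette)


# Everything the framework could offer; each view takes what it names
available = {"request": {"name": "world"}, "datasette": "ds", "scope": {}}
hello_result = asyncio.run(call_with_requested_arguments(hello, **available))
page_result = asyncio.run(call_with_requested_arguments(page, **available))
```

The view author never registers which arguments they need: the parameter names in the function signature are the declaration.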

Tucked away at the end of the 0.44 release notes is this:

The road to Datasette 1.0

I’ve assembled a milestone for Datasette 1.0. The focus of the 1.0 release will be the following:

- Signify confidence in the quality/stability of Datasette

- Give plugin authors confidence that their plugins will work for the whole 1.x release cycle

- Provide the same confidence to developers building against Datasette JSON APIs

If you have thoughts about what you would like to see for Datasette 1.0 you can join the conversation on issue #519.

It’s time to start working towards the big 1.0!
