10th June 2025 - Link Blog

Magistral — the first reasoning model by Mistral AI. Mistral's first reasoning model is out today, in two sizes. There's a 24B Apache 2 licensed open-weights model called Magistral Small (actually Magistral-Small-2506), and a larger API-only model called Magistral Medium.

Magistral Small is available as mistralai/Magistral-Small-2506 on Hugging Face. From that model card:

Context Window: A 128k context window, but performance might degrade past 40k. Hence we recommend setting the maximum model length to 40k.

Mistral also released an official GGUF version, Magistral-Small-2506_gguf, which I ran successfully using Ollama like this:

ollama pull hf.co/mistralai/Magistral-Small-2506_gguf:Q8_0

That fetched a 25GB file. I ran prompts using a chat session with llm-ollama like this:

llm chat -m hf.co/mistralai/Magistral-Small-2506_gguf:Q8_0

Here's what I got for "Generate an SVG of a pelican riding a bicycle" (transcript here):

It's disappointing that the GGUF doesn't support function calling yet - hopefully a community variant can add that, it's one of the best ways I know of to unlock the potential of these reasoning models.

I just noticed that Ollama have their own Magistral model too, which can be accessed using:

ollama pull magistral:latest

That gets you a 14GB q4_K_M quantization - other options can be found in the full list of Ollama magistral tags.

One thing that caught my eye in the Magistral announcement:

Legal, finance, healthcare, and government professionals get traceable reasoning that meets compliance requirements. Every conclusion can be traced back through its logical steps, providing auditability for high-stakes environments with domain-specialized AI.

I guess this means the reasoning traces are fully visible and not redacted in any way - interesting to see Mistral trying to turn that into a feature that's attractive to the business clients they are most interested in appealing to.

Also from that announcement:

Our early tests indicated that Magistral is an excellent creative companion. We highly recommend it for creative writing and storytelling, with the model capable of producing coherent or — if needed — delightfully eccentric copy.

I haven't seen a reasoning model promoted for creative writing in this way before.

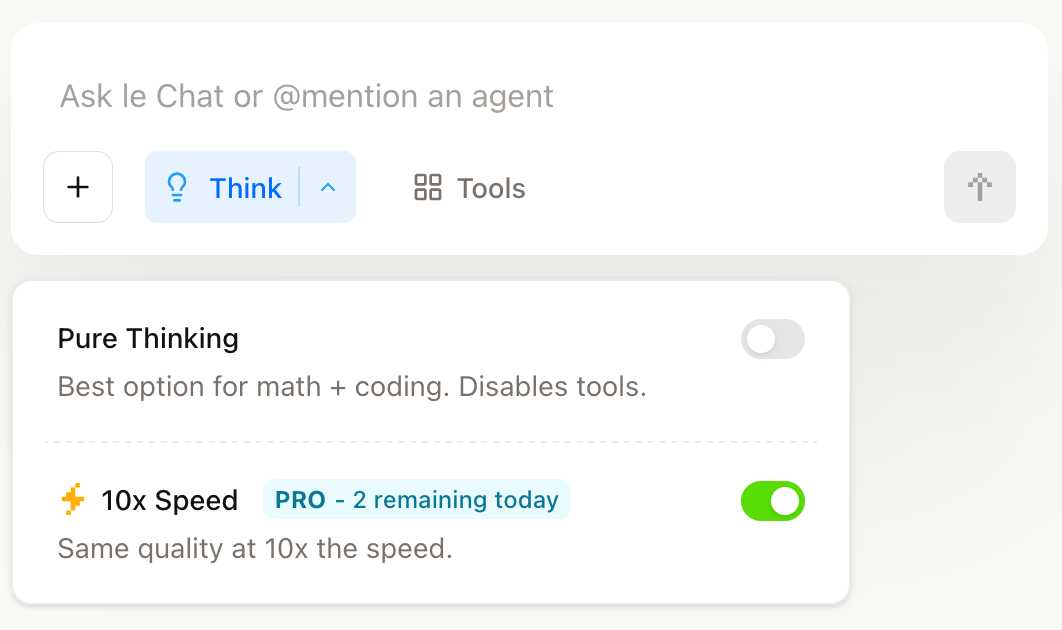

You can try out Magistral Medium by selecting the new "Thinking" option in Mistral's Le Chat.

They have options for "Pure Thinking" and a separate option for "10x speed", which runs Magistral Medium at 10x the speed using Cerebras.

The new models are also available through the Mistral API. You can access them by installing llm-mistral and running llm mistral refresh to refresh the list of available models, then:

llm -m mistral/magistral-medium-latest \

'Generate an SVG of a pelican riding a bicycle'

Here's that transcript. At 13 input and 1,236 output tokens that cost me 0.62 cents - just over half a cent.

Recent articles

- I vibe coded my dream macOS presentation app - 25th February 2026

- Writing about Agentic Engineering Patterns - 23rd February 2026

- Adding TILs, releases, museums, tools and research to my blog - 20th February 2026