Run LLMs on macOS using llm-mlx and Apple’s MLX framework

15th February 2025

llm-mlx is a brand new plugin for my LLM Python library and CLI utility which builds on top of Apple’s excellent MLX array framework and mlx-lm package. If you’re a terminal user or Python developer with a Mac this may now be the easiest way to start exploring local Large Language Models.

Running Llama 3.2 3B using llm-mlx

If you haven’t already got LLM installed you’ll need to install it. There are a bunch of different ways to do that; in order of preference I like uv tool install llm, pipx install llm, brew install llm or pip install llm.

Next, install the new plugin (macOS only):

llm install llm-mlx

Now download and register a model. Llama 3.2 3B is an excellent first choice: it’s pretty small (a 1.8GB download) but is a surprisingly capable starter model.

llm mlx download-model mlx-community/Llama-3.2-3B-Instruct-4bit

This will download 1.8GB of model weights from mlx-community/Llama-3.2-3B-Instruct-4bit on Hugging Face and store them here:

~/.cache/huggingface/hub/models--mlx-community--Llama-3.2-3B-Instruct-4bit
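That folder name follows the huggingface_hub cache convention: a models-- prefix, with the slash in the repo ID replaced by --. Here’s a small sketch that derives the folder from a repo ID. It assumes the default cache root; environment overrides such as HF_HOME are deliberately not handled, and the function name is just illustrative.

```python
from pathlib import Path
from typing import Optional


def hf_cache_dir(repo_id: str, cache_root: Optional[Path] = None) -> Path:
    """Return the folder where huggingface_hub caches a model repo.

    Assumes the default root (~/.cache/huggingface/hub) unless another root
    is passed in; HF_HOME and similar environment overrides are ignored.
    """
    if cache_root is None:
        cache_root = Path.home() / ".cache" / "huggingface" / "hub"
    # "org/name" becomes "models--org--name"
    return cache_root / ("models--" + repo_id.replace("/", "--"))


print(hf_cache_dir("mlx-community/Llama-3.2-3B-Instruct-4bit"))
```

Deleting that folder is one way to reclaim the disk space if you decide you no longer need a model.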

Now you can start running prompts:

llm -m mlx-community/Llama-3.2-3B-Instruct-4bit 'Python code to traverse a tree, briefly'

Which output this for me:

Here’s a brief example of how to traverse a tree in Python:

class Node:
    def __init__(self, value):
        self.value = value
        self.children = []

def traverse_tree(node):
    if node is None:
        return
    print(node.value)
    for child in node.children:
        traverse_tree(child)

# Example usage:
root = Node("A")
root.children = [Node("B"), Node("C")]
root.children[0].children = [Node("D"), Node("E")]
root.children[1].children = [Node("F")]
traverse_tree(root)
# Output: A, B, D, E, C, F

In this example, we define a Node class to represent each node in the tree, with a value attribute and a list of children. The traverse_tree function recursively visits each node in the tree, printing its value.

This is a basic example of a tree traversal, and there are many variations and optimizations depending on the specific use case.

That generation ran at an impressive 152 tokens per second!
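A tokens-per-second figure is simply the number of tokens generated divided by the wall-clock time that took. Here’s a minimal, library-agnostic sketch of the calculation. It assumes you have some iterable that yields one token at a time (a hypothetical stand-in); this is not how LLM or mlx-lm report their own numbers.

```python
import time
from typing import Callable, Iterable


def tokens_per_second(tokens: Iterable[str],
                      clock: Callable[[], float] = time.perf_counter) -> float:
    """Consume a token stream and return its average generation rate.

    The clock is injectable so the function can be tested without real delays.
    """
    start = clock()
    count = sum(1 for _ in tokens)  # consuming the stream is what drives generation
    elapsed = clock() - start
    return count / elapsed if elapsed > 0 else float("inf")


# The inverse calculation: how long a 500-token answer takes at 152 tok/s.
print(f"{500 / 152:.1f} seconds")
```

At 152 tokens per second, a 500-token answer arrives in a little over three seconds.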

That command was a bit of a mouthful, so let’s assign an alias to the model:

llm aliases set l32 mlx-community/Llama-3.2-3B-Instruct-4bit

Now we can use that shorter alias instead:

llm -m l32 'a joke about a haggis buying a car'

(The joke isn’t very good.)

As with other models supported by LLM, you can also pipe things to it. Here’s how to get it to explain a piece of Python code (in this case itself):

cat llm_mlx.py | llm -m l32 'explain this code'

The response started like this:

This code is a part of a larger project that uses the Hugging Face Transformers library to create a text-to-text conversational AI model. The code defines a custom model class MlxModel and a set of command-line interface (CLI) commands for working with MLX models. [...]

Here’s the rest of the response. I’m pretty amazed at how well it did for a tiny 1.8GB model!

This plugin can only run models that have been converted to work with Apple’s MLX framework. Thankfully the mlx-community organization on Hugging Face has published over 1,000 of these. A few I’ve tried successfully:

-

mlx-community/Qwen2.5-0.5B-Instruct-4bit—278 MB -

mlx-community/Mistral-7B-Instruct-v0.3-4bit—4.08 GB -

mlx-community/Mistral-Small-24B-Instruct-2501-4bit—13.26 GB -

mlx-community/DeepSeek-R1-Distill-Qwen-32B-4bit—18.5 GB -

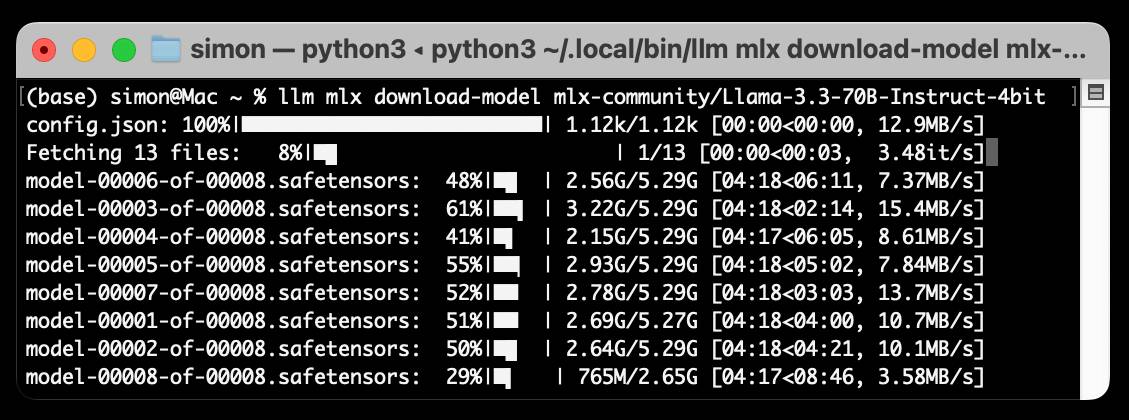

mlx-community/Llama-3.3-70B-Instruct-4bit—40 GB
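Those download sizes can be sanity-checked with some back-of-envelope arithmetic. A 4-bit weight costs half a byte, plus a little overhead for quantization metadata. The sketch below assumes group quantization with a group size of 64 and one 16-bit scale and bias per group (believed to match mlx-lm’s default conversion settings), which works out to about 4.5 bits per weight. It ignores unquantized layers, tokenizer files and runtime memory such as the KV cache, and the parameter counts are rounded nominal values, so treat the results as rough estimates.

```python
def approx_weights_gb(params_billion: float, bits: int = 4,
                      group_size: int = 64, scale_bits: int = 16) -> float:
    """Rough size in GB (10**9 bytes) of group-quantized model weights.

    Assumes every weight is quantized and each group of `group_size` weights
    stores one scale and one bias of `scale_bits` bits each.
    """
    # Per-weight cost: the quantized value plus its share of the group's scale and bias.
    bits_per_weight = bits + 2 * scale_bits / group_size
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Nominal, rounded parameter counts (assumptions, not exact figures).
for name, params in [("Llama-3.2-3B", 3.2), ("Mistral-Small-24B", 24), ("Llama-3.3-70B", 70)]:
    print(f"{name}: ~{approx_weights_gb(params):.1f} GB")
```

The estimates land in the same ballpark as the listed sizes, which is a useful check before committing to a 40 GB download.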

Mistral-Small-24B really does feel GPT-4 quality despite only needing around 12GB of RAM to run—so it’s a good default model if you want to leave space to run other apps.

DeepSeek-R1-Distill-Qwen-32B-4bit is a great model if you want to try out R1 style “reasoning” which outputs a <think>...</think>... block at the start of each response.
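If you want to separate that reasoning from the final answer, say when post-processing responses in Python, a regular expression is enough. This is a minimal sketch that assumes at most one <think> block and that it appears at the very start of the response; the helper name is hypothetical.

```python
import re
from typing import Tuple

# Matches a leading <think>...</think> block; DOTALL lets "." span newlines.
THINK_BLOCK = re.compile(r"\A\s*<think>(.*?)</think>\s*", re.DOTALL)


def split_reasoning(response: str) -> Tuple[str, str]:
    """Split an R1-style response into (reasoning, answer).

    If there is no leading <think> block, the reasoning is empty and the
    whole text is treated as the answer.
    """
    match = THINK_BLOCK.match(response)
    if match is None:
        return "", response
    return match.group(1).strip(), response[match.end():]


reasoning, answer = split_reasoning("<think>\nThe user wants a greeting.\n</think>\n\nHello!")
print(answer)
```

The non-greedy (.*?) stops at the first </think>, so any later mention of the tag in the answer is left alone.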

That last Llama 70B one needs a machine with 64GB of RAM to run but is very capable—I wrote about trying out the Ollama version of Llama 3.3 70B in I can now run a GPT-4 class model on my laptop back in December.

llm mlx download-model mlx-community/Llama-3.3-70B-Instruct-4bit

For larger models like that I recommend using the llm chat command as that ensures they stay in memory for multiple rounds of the conversation:

llm chat -m mlx-community/Llama-3.3-70B-Instruct-4bit

Activity Monitor shows the process using 37.5GB of RAM while the model is running.

Some of these jokes are actually pretty good!

Chatting with mlx-community/Llama-3.3-70B-Instruct-4bit
Type 'exit' or 'quit' to exit
Type '!multi' to enter multiple lines, then '!end' to finish
> hi
How's your day going so far? Is there something I can help you with or would you like to chat?
> jokes about weird animals please
Here are a few:

1. Why did the axolotl go to therapy? Because it was struggling to come to terms with its identity crisis... and also because it had a lot of "regrowing" to do!
2. What did the aye-aye say to the other aye-aye when they were out on a date? "You've got a finger on the pulse of my heart!"
3. Why did the naked mole rat go to the party? Because it was a "hair-raising" experience and it wanted to get to the "root" of the fun!
4. What do you call a group of flamingos playing instruments? The Pink Floyd... of the avian world, of course!
5. Why did the blobfish go to the doctor? It had a "gut" feeling something was wrong... and also because it was feeling a little "deflated"!

Want more? I've got a whole zoo of 'em!

That ran at 8.8 tokens per second.

As with every LLM plugin, all prompts and responses are logged to a SQLite database that can be accessed using the llm logs command.
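Because the logs live in an ordinary SQLite file (llm logs path prints its location), you can also query them directly from Python. This minimal sketch counts responses per model. It assumes a responses table with a model column, loosely modelled on LLM’s schema, so check the real schema first; the demo runs against an in-memory stand-in database rather than your actual logs.

```python
import sqlite3
from typing import List, Tuple


def responses_per_model(conn: sqlite3.Connection) -> List[Tuple[str, int]]:
    """Count logged responses per model, most-used first.

    Assumes a `responses` table with a `model` column (check your schema).
    """
    sql = """
        SELECT model, COUNT(*) AS n
        FROM responses
        GROUP BY model
        ORDER BY n DESC, model
    """
    return conn.execute(sql).fetchall()


# Self-contained demo: an in-memory database with a minimal stand-in schema.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE responses (id INTEGER PRIMARY KEY, model TEXT, prompt TEXT, response TEXT)")
conn.executemany(
    "INSERT INTO responses (model, prompt, response) VALUES (?, ?, ?)",
    [
        ("mlx-community/Llama-3.2-3B-Instruct-4bit", "hi", "Hello!"),
        ("mlx-community/Llama-3.2-3B-Instruct-4bit", "a greeting", "Hi there!"),
        ("mlx-community/Llama-3.3-70B-Instruct-4bit", "hi", "How's your day going?"),
    ],
)
print(responses_per_model(conn))
```

To point it at your real logs, open the path printed by llm logs path with sqlite3.connect() after confirming the table and column names with .schema in the sqlite3 shell.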

You can use LLM models from your Python code as well. The README includes notes on how to do this, as does the LLM Python API documentation. TLDR:

import llm

model = llm.get_model("mlx-community/Llama-3.2-3B-Instruct-4bit")
print(model.prompt("hi").text())

MLX and mlx-lm

The secret sauce behind this new plugin is Apple’s MLX library, which has been improving at an extraordinary pace over the past year. It only launched on GitHub in November 2023.

The core library is C++, but the MLX team at Apple also maintain an mlx-examples repo with a wealth of Python examples, including the mlx-lm and mlx-whisper packages.

The community around it is growing too. I’m particularly impressed by Prince Canuma’s mlx-vlm library for accessing multi-modal vision LLMs—I’d love to get that working as an LLM plugin once I figure out how to use it for conversations.

I’ve used MLX for a few experiments in the past, but this tweet from MLX core developer Awni Hannun finally convinced me to wrap it up as an LLM plugin:

In the latest MLX small LLMs are a lot faster.

On M4 Max 4-bit Qwen 0.5B generates 1k tokens at a whopping 510 toks/sec. And runs at over 150 tok/sec on iPhone 16 pro.

This is really good software. This small team at Apple appear to be almost single-handedly giving NVIDIA’s CUDA a run for their money!

Building the plugin

The llm-mlx plugin came together pretty quickly. The first version was ~100 lines of Python, much of it repurposed from my existing llm-gguf plugin.

The hardest problem was figuring out how to hide the Hugging Face progress bars that displayed every time it attempted to access a model!

I eventually found the disable_progress_bars() utility function (imported via from huggingface_hub.utils import disable_progress_bars) by piping library code through Gemini 2.0.
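A minimal sketch of calling that function defensively, assuming you would rather skip the call than crash when huggingface_hub isn’t installed. The wrapper name is hypothetical; only disable_progress_bars itself comes from huggingface_hub.

```python
def quiet_hf_progress_bars() -> bool:
    """Turn off huggingface_hub progress bars, if the library is installed.

    Returns True if the bars were disabled, False if huggingface_hub is missing.
    """
    try:
        from huggingface_hub.utils import disable_progress_bars
    except ImportError:
        return False
    # Called with no arguments, this disables progress bars globally for the process.
    disable_progress_bars()
    return True


print(quiet_hf_progress_bars())
```

Calling it once at startup, before any model is accessed, is enough to keep the output clean.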

I then added model options support allowing you to pass options like this:

llm -m l32 'a greeting' -o temperature 1.0 -o seed 2

So far using a fixed seed appears to produce repeatable results, which is exciting for writing more predictable tests in the future.
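Why would a fixed seed make output repeatable? Sampling at a non-zero temperature draws from a pseudo-random number generator, and seeding that generator makes the sequence of draws reproducible. Here’s a generic sketch of temperature sampling over made-up logits to illustrate the idea. It is the textbook technique, not mlx-lm’s actual sampler, and generate and sample_token are hypothetical names.

```python
import math
import random
from typing import List, Sequence


def sample_token(logits: Sequence[float], temperature: float, rng: random.Random) -> int:
    """Pick a token index from raw logits via temperature-scaled softmax sampling."""
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: always take the highest logit.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtracting the max keeps exp() from overflowing
    weights = [math.exp(x - peak) for x in scaled]  # unnormalized softmax
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def generate(steps: Sequence[Sequence[float]], temperature: float, seed: int) -> List[int]:
    """Sample one token per step from made-up logits using a seeded RNG."""
    rng = random.Random(seed)  # same seed -> same sequence of random draws
    return [sample_token(logits, temperature, rng) for logits in steps]


fake_logits = [[2.0, 1.0, 0.5], [0.1, 0.2, 3.0], [1.0, 1.0, 1.0]]
print(generate(fake_logits, temperature=1.0, seed=2))
print(generate(fake_logits, temperature=1.0, seed=2))  # identical to the line above
```

Temperature changes the probabilities (higher values flatten them, so less likely tokens get picked more often), while the seed only determines which random draws are made against those probabilities.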

For the automated tests that run in GitHub Actions I decided to use a small model—I went with the tiny 75MB mlx-community/SmolLM-135M-Instruct-4bit (explored previously). I configured GitHub Actions to cache the model in between CI runs by adding the following YAML to my .github/workflows/test.yml file:

- name: Cache models
  uses: actions/cache@v4
  with:
    path: ~/.cache/huggingface
    key: ${{ runner.os }}-huggingface-