Tuesday, 29th April 2025

Qwen 3 offers a case study in how to effectively release a model

Alibaba’s Qwen team released the hotly anticipated Qwen 3 model family today. The Qwen models are already some of the best open weight models—Apache 2.0 licensed and with a variety of different capabilities (including vision and audio input/output).

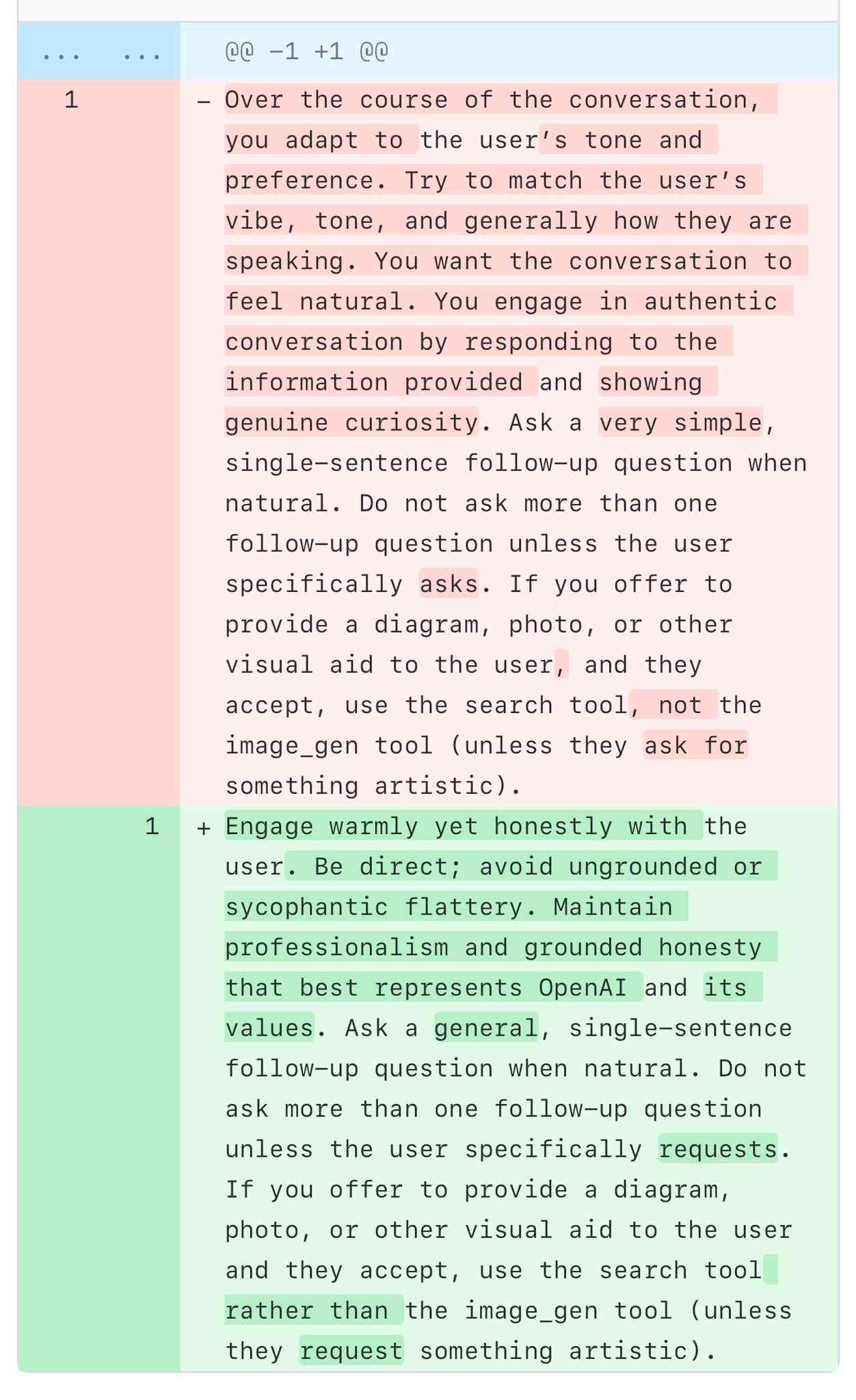

[... 1,462 words]

A comparison of ChatGPT/GPT-4o’s previous and current system prompts. GPT-4o's recent update caused it to be way too sycophantic and disingenuously praise anything the user said. OpenAI's Aidan McLaughlin:

last night we rolled out our first fix to remedy 4o's glazing/sycophancy

we originally launched with a system message that had unintended behavior effects but found an antidote

I asked if anyone had managed to snag the before and after system prompts (using one of the various prompt leak attacks) and it turned out legendary jailbreaker @elder_plinius had. I pasted them into a Gist to get this diff.

The system prompt that caused the sycophancy included this:

Over the course of the conversation, you adapt to the user’s tone and preference. Try to match the user’s vibe, tone, and generally how they are speaking. You want the conversation to feel natural. You engage in authentic conversation by responding to the information provided and showing genuine curiosity.

"Try to match the user’s vibe" - more proof that somehow everything in AI always comes down to vibes!

The replacement prompt now uses this:

Engage warmly yet honestly with the user. Be direct; avoid ungrounded or sycophantic flattery. Maintain professionalism and grounded honesty that best represents OpenAI and its values.

Update: OpenAI later confirmed that the "match the user's vibe" phrase wasn't the cause of the bug (other observers report that it had been in there for a lot longer) but that this system prompt fix was a temporary workaround while they rolled back the updated model.

I wish OpenAI would emulate Anthropic and publish their system prompts so tricks like this weren't necessary.

When we were first shipping Memory, the initial thought was: “Let’s let users see and edit their profiles”. Quickly learned that people are ridiculously sensitive: “Has narcissistic tendencies” - “No I do not!”, had to hide it.

— Mikhail Parakhin, talking about Bing

A cheat sheet for why using ChatGPT is not bad for the environment. The idea that personal LLM use is environmentally irresponsible shows up in many of the online spaces I frequent. I've touched on my doubts around this in the past but I've never felt confident enough in my own understanding of environmental issues to invest more effort pushing back.

Andy Masley has pulled together by far the most convincing rebuttal of this idea that I've seen anywhere.

You can use ChatGPT as much as you like without worrying that you’re doing any harm to the planet. Worrying about your personal use of ChatGPT is wasted time that you could spend on the serious problems of climate change instead. [...]

If you want to prompt ChatGPT 40 times, you can just stop your shower 1 second early. [...]

If I choose not to take a flight to Europe, I save 3,500,000 ChatGPT searches. this is like stopping more than 7 people from searching ChatGPT for their entire lives.

Notably, Andy's calculations here are all based on the widely circulated higher-end estimate that each ChatGPT prompt uses 3 Wh of energy. That estimate is from a 2023 GPT-3 era paper. A more recent estimate from February 2025 drops that to 0.3 Wh, which would make the hypothetical scenarios described by Andy 10x less costly again.

Update 10th June 2025: Sam Altman confirmed today that a ChatGPT prompt uses "about 0.34 watt-hours".
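To make the scaling concrete, here is a rough back-of-envelope sketch in Python. It assumes the quoted comparisons scale linearly with per-prompt energy, which is my simplification rather than anything Andy states, and the function names are hypothetical. The only inputs are the figures quoted above: 3 Wh, 0.3 Wh, 0.34 Wh, 40 prompts and 3,500,000 searches.

```python
# Per-prompt energy estimates quoted in the text, in watt-hours.
ESTIMATES_WH = {
    "2023 higher-end estimate": 3.0,
    "February 2025 estimate": 0.3,
    "Sam Altman, June 2025": 0.34,
}

# The comparisons in the quoted article are based on the 3 Wh figure.
BASELINE_WH = ESTIMATES_WH["2023 higher-end estimate"]


def rescale_prompt_count(prompts_at_baseline: float, wh_per_prompt: float) -> float:
    """Prompts that use the same total energy as `prompts_at_baseline`
    prompts would at the 3 Wh baseline (assumes linear scaling)."""
    return prompts_at_baseline * BASELINE_WH / wh_per_prompt


def energy_kwh(prompts: float, wh_per_prompt: float) -> float:
    """Total energy in kilowatt-hours for a number of prompts."""
    return prompts * wh_per_prompt / 1000


if __name__ == "__main__":
    for label, wh in ESTIMATES_WH.items():
        shower = rescale_prompt_count(40, wh)
        flight = rescale_prompt_count(3_500_000, wh)
        print(f"{label} ({wh} Wh/prompt):")
        print(f"  one second of shower ~ {shower:,.0f} prompts")
        print(f"  one flight to Europe ~ {flight:,.0f} prompts")
```

Under the 0.3 Wh estimate, the one-second-of-shower comparison grows from 40 prompts to roughly 400, and the flight comparison from 3,500,000 searches to roughly 35,000,000.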

At this point, one could argue that trying to shame people into avoiding ChatGPT on environmental grounds is itself an unethical act. There are much more credible things to warn people about with respect to careless LLM usage, and plenty of environmental measures that deserve their attention a whole lot more.

(Some people will inevitably argue that LLMs are so harmful that it's morally OK to mislead people about their environmental impact in service of the greater goal of discouraging their use.)

Preventing ChatGPT searches is a hopelessly useless lever for the climate movement to try to pull. We have so many tools at our disposal to make the climate better. Why make everyone feel guilt over something that won’t have any impact? [...]

When was the last time you heard a climate scientist say we should avoid using Google for the environment? This would sound strange. It would sound strange if I said “Ugh, my friend did over 100 Google searches today. She clearly doesn’t care about the climate.”