mlx-whisper (via) Apple's MLX framework for running GPU-accelerated machine learning models on Apple Silicon keeps growing new examples. mlx-whisper is a Python package for running OpenAI's Whisper speech-to-text model. It's really easy to use:

pip install mlx-whisper

Then in a Python console:

>>> import mlx_whisper

>>> result = mlx_whisper.transcribe(

... "/tmp/recording.mp3",

... path_or_hf_repo="mlx-community/distil-whisper-large-v3")

.gitattributes: 100%|███████████| 1.52k/1.52k [00:00<00:00, 4.46MB/s]

config.json: 100%|██████████████| 268/268 [00:00<00:00, 843kB/s]

README.md: 100%|████████████████| 332/332 [00:00<00:00, 1.95MB/s]

Fetching 4 files: 50%|████▌ | 2/4 [00:01<00:01, 1.26it/s]

weights.npz: 63%|██████████ ▎ | 944M/1.51G [02:41<02:15, 4.17MB/s]

>>> result.keys()

dict_keys(['text', 'segments', 'language'])

>>> result['language']

'en'

>>> len(result['text'])

100105

>>> print(result['text'][:3000])

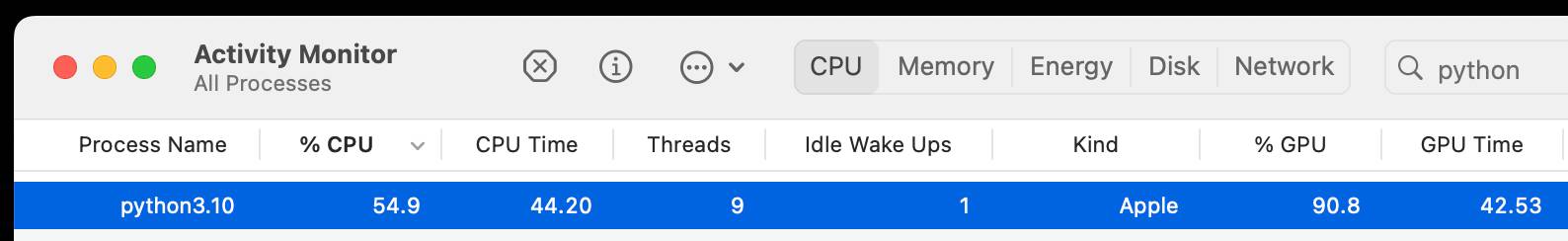

This is so exciting. I have to tell you, first of all ...Here's Activity Monitor confirming that the Python process is using the GPU for the transcription:

This example downloaded a 1.5GB model from Hugging Face and stashed it in my ~/.cache/huggingface/hub/models--mlx-community--distil-whisper-large-v3 folder.

Calling .transcribe(filepath) without the path_or_hf_repo argument uses the much smaller (74.4 MB) whisper-tiny-mlx model.

A few people asked how this compares to whisper.cpp. Bill Mill compared the two and found mlx-whisper to be about 3x faster on an M1 Max.

Update: this note from Josh Marshall:

That '3x' comparison isn't fair; completely different models. I ran a test (14" M1 Pro) with the full (non-distilled) large-v2 model quantised to 8 bit (which is my pick), and whisper.cpp was 1m vs 1m36 for mlx-whisper.

I've now done a better test, using the MLK audio, multiple runs and 2 models (distil-large-v3, large-v2-8bit)... and mlx-whisper is indeed 30-40% faster

Recent articles

- The evolution of OpenAI's mission statement - 13th February 2026

- Introducing Showboat and Rodney, so agents can demo what they’ve built - 10th February 2026

- How StrongDM's AI team build serious software without even looking at the code - 7th February 2026