Refactoring databases with sqlite-utils extract

23rd September 2020

Yesterday I described the new sqlite-utils transform mechanism for applying SQLite table transformations that go beyond those supported by ALTER TABLE. The other new feature in sqlite-utils 2.20 builds on that capability to allow you to refactor a database table by extracting columns into separate tables. I’ve called it sqlite-utils extract.

The problem

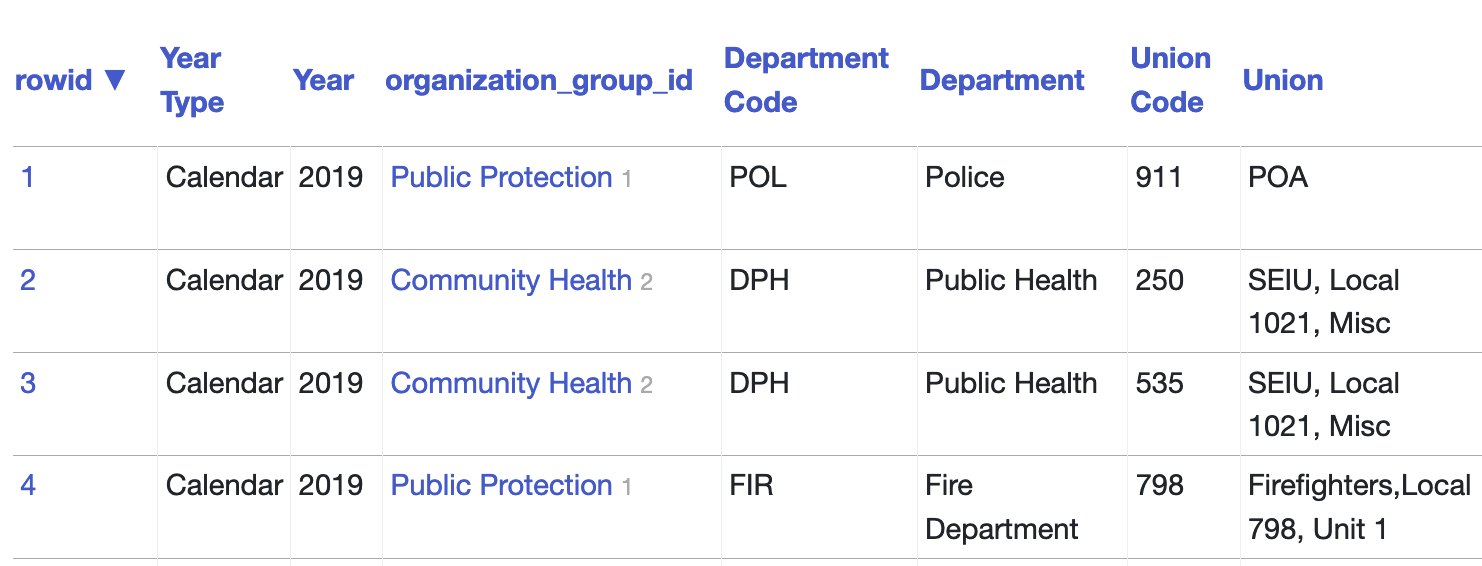

Much of the data I work with in Datasette starts off as a CSV file published by an organization or government. Since CSV files aren’t relational databases, they are often denormalized. It’s particularly common to see something like this:

| Organization Group Code | Organization Group | Department Code | Department | Union Code | Union |

|---|---|---|---|---|---|

| 1 | Public Protection | POL | Police | 911 | POA |

| 4 | Community Health | DPH | Public Health | 250 | SEIU, Local 1021, Misc |

| 1 | Public Protection | FIR | Fire Department | 798 | Firefighters,Local 798, Unit 1 |

| 1 | Public Protection | POL | Police | 911 | POA |

This is an extract from the San Francisco Employee Compensation dataset from DataSF.

The sqlite-utils extract command-line tool, and the table.extract() Python method that underlies it, can be used to extract these duplicated column pairs out into separate tables with foreign key relationships from the main table.

How to refactor that data

Here’s how to use sqlite-utils to clean up and refactor that compensation data.

First, grab the data. It’s a 150M CSV file containing over 600,000 rows:

curl -o salaries.csv 'https://data.sfgov.org/api/views/88g8-5mnd/rows.csv?accessType=DOWNLOAD'

Use sqlite-utils insert to load that into a SQLite database:

sqlite-utils insert salaries.db salaries salaries.csv --csv

Fire up Datasette to check that the data looks right:

datasette salaries.db

There’s a catch here: the schema for the generated table (shown at the bottom of http://localhost:8001/salaries/salaries) reveals that because we imported from CSV every column is a text column. Since some of this data is numeric we should convert to numbers, so we can do things like sort the table by the highest salary.

We can do that using sqlite-transform:

sqlite-utils transform salaries.db salaries \

--type 'Employee Identifier' integer \

--type Salaries float \

--type Overtime float \

--type 'Other Salaries' float \

--type 'Total Salary' float \

--type 'Retirement' float \

--type 'Health and Dental' float \

--type 'Other Benefits' float \

--type 'Total Benefits' float \

--type 'Total Compensation' float

[Update 13 August 2021: As of sqlite-utils 3.10 you can instead run sqlite-utils insert salaries.db salaries salaries.csv --csv --detect-types. The --detect-types (or -d) option will detect types for you during the initial import.]

Having run that command, here’s the new database schema:

$ sqlite3 salaries.db '.schema salaries'

CREATE TABLE IF NOT EXISTS "salaries" (

[rowid] INTEGER PRIMARY KEY,

[Year Type] TEXT,

[Year] TEXT,

[Organization Group Code] TEXT,

[Organization Group] TEXT,

[Department Code] TEXT,

[Department] TEXT,

[Union Code] TEXT,

[Union] TEXT,

[Job Family Code] TEXT,

[Job Family] TEXT,

[Job Code] TEXT,

[Job] TEXT,

[Employee Identifier] INTEGER,

[Salaries] FLOAT,

[Overtime] FLOAT,

[Other Salaries] FLOAT,

[Total Salary] FLOAT,

[Retirement] FLOAT,

[Health and Dental] FLOAT,

[Other Benefits] FLOAT,

[Total Benefits] FLOAT,

[Total Compensation] FLOAT

);

Now we can start extracting those columns. We do this using several rounds of the sqlite-utils extract command, one for each duplicated pairs.

For Organization Group Code and Organization Group:

sqlite-utils extract salaries.db salaries \

'Organization Group Code' 'Organization Group' \

--table 'organization_groups' \

--fk-column 'organization_group_id' \

--rename 'Organization Group Code' code \

--rename 'Organization Group' name

This took about 12 minutes on my laptop, and displayed a progress bar as it runs. (UPDATE: in issue #172 I improved the performance and knocked it down to just 4 seconds. I also removed the progress bar).

Here’s the refactored database schema:

$ sqlite3 salaries.db .schema

CREATE TABLE [organization_groups] (

[id] INTEGER PRIMARY KEY,

[code] TEXT,

[name] TEXT

);

CREATE TABLE IF NOT EXISTS "salaries" (

[rowid] INTEGER PRIMARY KEY,

[Year Type] TEXT,

[Year] TEXT,

[organization_group_id] INTEGER,

[Department Code] TEXT,

[Department] TEXT,

[Union Code] TEXT,

[Union] TEXT,

[Job Family Code] TEXT,

[Job Family] TEXT,

[Job Code] TEXT,

[Job] TEXT,

[Employee Identifier] INTEGER,

[Salaries] FLOAT,

[Overtime] FLOAT,

[Other Salaries] FLOAT,

[Total Salary] FLOAT,

[Retirement] FLOAT,

[Health and Dental] FLOAT,

[Other Benefits] FLOAT,

[Total Benefits] FLOAT,

[Total Compensation] FLOAT,

FOREIGN KEY(organization_group_id) REFERENCES organization_groups(id)

);

CREATE UNIQUE INDEX [idx_organization_groups_code_name]

ON [organization_groups] ([code], [name]);

Now fire up Datasette to confirm it had the desired effect:

datasette salaries.db

Here’s what that looks like:

Note that the new organization_group_id column still shows the name of the organization group, because Datasette automatically de-references foreign key relationships when it displays a table and uses any column called name (or title or value) as the label for a link to the record.

Let’s extract the other columns. This will take a while:

sqlite-utils extract salaries.db salaries \

'Department Code' 'Department' \

--table 'departments' \

--fk-column 'department_id' \

--rename 'Department Code' code \

--rename 'Department' name

sqlite-utils extract salaries.db salaries \

'Union Code' 'Union' \

--table 'unions' \

--fk-column 'union_id' \

--rename 'Union Code' code \

--rename 'Union' name

sqlite-utils extract salaries.db salaries \

'Job Family Code' 'Job Family' \

--table 'job_families' \

--fk-column 'job_family_id' \

--rename 'Job Family Code' code \

--rename 'Job Family' name

sqlite-utils extract salaries.db salaries \

'Job Code' 'Job' \

--table 'jobs' \

--fk-column 'job_id' \

--rename 'Job Code' code \

--rename 'Job' name

Our finished schema looks like this:

$ sqlite3 salaries.db .schema

CREATE TABLE [organization_groups] (

[id] INTEGER PRIMARY KEY,

[code] TEXT,

[name] TEXT

);

CREATE TABLE [departments] (

[id] INTEGER PRIMARY KEY,

[code] TEXT,

[name] TEXT

);

CREATE TABLE [unions] (

[id] INTEGER PRIMARY KEY,

[code] TEXT,

[name] TEXT

);

CREATE TABLE [job_families] (

[id] INTEGER PRIMARY KEY,

[code] TEXT,

[name] TEXT

);

CREATE TABLE [jobs] (

[id] INTEGER PRIMARY KEY,

[code] TEXT,

[name] TEXT

);

CREATE TABLE IF NOT EXISTS "salaries" (

[rowid] INTEGER PRIMARY KEY,

[Year Type] TEXT,

[Year] TEXT,

[organization_group_id] INTEGER REFERENCES [organization_groups]([id]),

[department_id] INTEGER REFERENCES [departments]([id]),

[union_id] INTEGER REFERENCES [unions]([id]),

[job_family_id] INTEGER REFERENCES [job_families]([id]),

[job_id] INTEGER,

[Employee Identifier] INTEGER,

[Salaries] FLOAT,

[Overtime] FLOAT,

[Other Salaries] FLOAT,

[Total Salary] FLOAT,

[Retirement] FLOAT,

[Health and Dental] FLOAT,

[Other Benefits] FLOAT,

[Total Benefits] FLOAT,

[Total Compensation] FLOAT,

FOREIGN KEY(job_id) REFERENCES jobs(id)

);

CREATE UNIQUE INDEX [idx_organization_groups_code_name]

ON [organization_groups] ([code], [name]);

CREATE UNIQUE INDEX [idx_departments_code_name]

ON [departments] ([code], [name]);

CREATE UNIQUE INDEX [idx_unions_code_name]

ON [unions] ([code], [name]);

CREATE UNIQUE INDEX [idx_job_families_code_name]

ON [job_families] ([code], [name]);

CREATE UNIQUE INDEX [idx_jobs_code_name]

ON [jobs] ([code], [name]);

We’ve also shrunk our database file quite a bit. Before the transformations salaries.db was 159MB. It’s now just 70MB—that’s less than half the size!

I used datasette publish cloudrun to publish a copy of my final database here:

https://sf-employee-compensation.datasettes.com/salaries/salaries

Here’s the command I used to publish it:

datasette publish cloudrun salaries.db \

--service sf-employee-compensation \

--title "San Francisco Employee Compensation (as-of 21 Sep 2020)" \

--source "DataSF" \

--source_url "https://data.sfgov.org/City-Management-and-Ethics/Employee-Compensation/88g8-5mnd" \

--about "About this project" \

--about_url "https://simonwillison.net/2020/Sep/23/sqlite-utils-extract/" \

--install datasette-block-robots \

--install datasette-vega \

--install datasette-copyable \

--install datasette-graphql

Bonus: explore salaries with GraphQL

You may have noticed my datasette publish line above finished with the line --install datasette-graphql. This installs the datasette-graphql plugin as part of the deployment to Cloud Run. Which means we can query the salary data using GraphQL as an alternative to SQL!

Here’s a GraphQL query that shows the ten highest paid employees, including their various expanded foreign key references:

{

salaries(sort_desc: Total_Compensation, first: 10) {

nodes {

Year_Type

Year

union_id {

id

name

}

job_id {

id

name

}

job_family_id {

id

name

}

department_id {

id

name

}

organization_group_id {

id

name

}

Salaries

Overtime

Other_Salaries

Total_Salary

Retirement

Health_and_Dental

Other_Benefits

Total_Benefits

Total_Compensation

rowid

Employee_Identifier

}

}

}You can try that query out here in the GraphiQL API explorer.

More recent articles

- Writing about Agentic Engineering Patterns - 23rd February 2026

- Adding TILs, releases, museums, tools and research to my blog - 20th February 2026

- Two new Showboat tools: Chartroom and datasette-showboat - 17th February 2026