Our contribution to a global environmental standard for AI

Mistral have released environmental impact numbers for their largest model, Mistral Large 2, in more detail than I have seen from any of the other large AI labs.

The methodology sounds robust:

[...] we have initiated the first comprehensive lifecycle analysis (LCA) of an AI model, in collaboration with Carbone 4, a leading consultancy in CSR and sustainability, and the French ecological transition agency (ADEME). To ensure robustness, this study was also peer-reviewed by Resilio and Hubblo, two consultancies specializing in environmental audits in the digital industry.

Their headline numbers:

- the environmental footprint of training Mistral Large 2: as of January 2025, and after 18 months of usage, Large 2 generated the following impacts:
  - 20,4 ktCO₂e,
  - 281 000 m3 of water consumed,
  - and 660 kg Sb eq (standard unit for resource depletion).
- the marginal impacts of inference, more precisely the use of our AI assistant Le Chat for a 400-token response - excluding users' terminals:
  - 1.14 gCO₂e,
  - 45 mL of water,
  - and 0.16 mg of Sb eq.

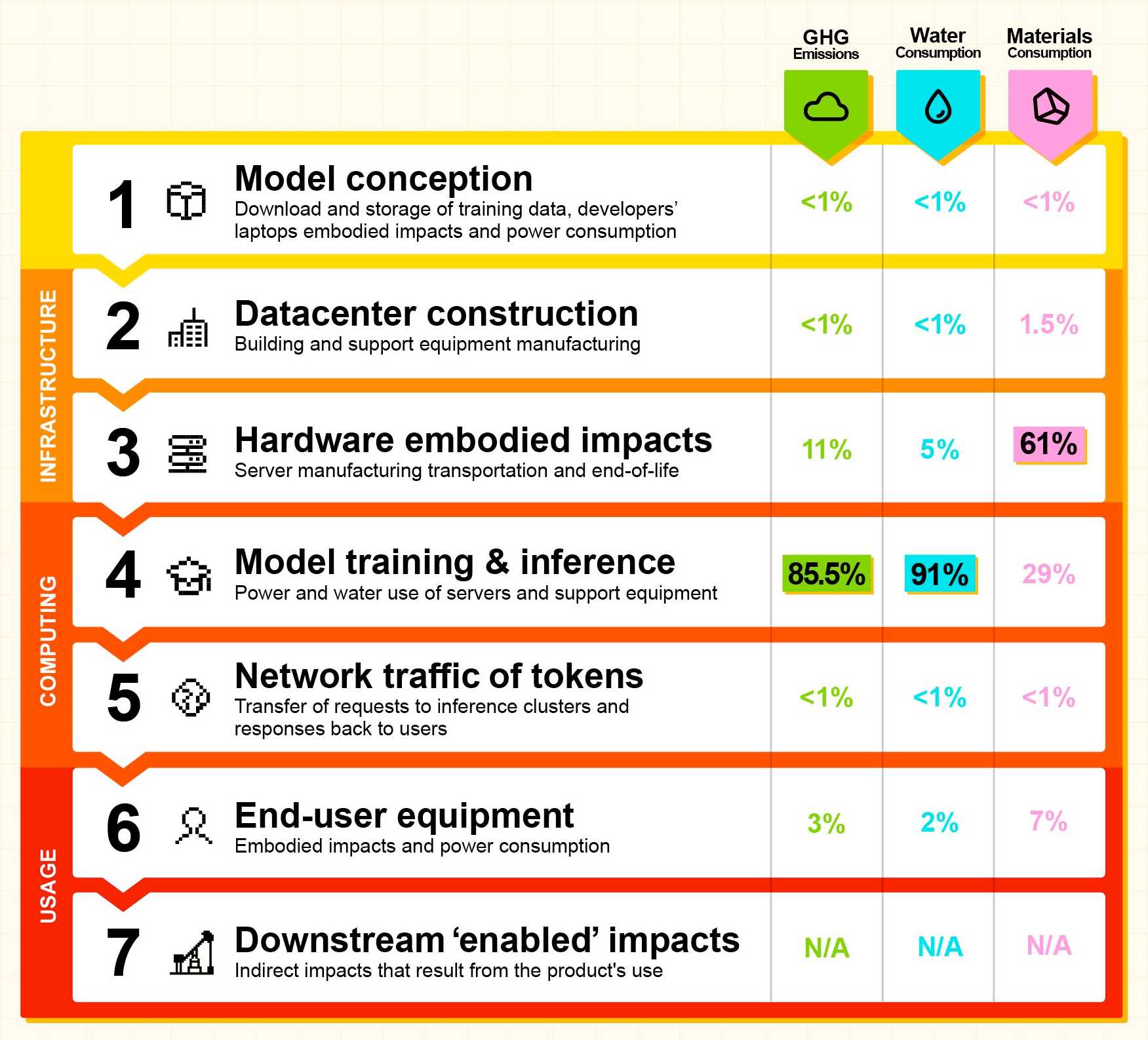

They also published this breakdown of how the energy, water and resources were shared between different parts of the process:

It's a little frustrating that "Model training & inference" are bundled in the same number (85.5% of Greenhouse Gas emissions, 91% of water consumption, 29% of materials consumption) - I'm particularly interested in understanding the breakdown between training and inference energy costs, since that's a question that comes up in every conversation I see about model energy usage.
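One rough way to relate the two sets of headline numbers is to ask how many 400-token responses would add up to each aggregate figure. This is only a back-of-envelope sketch: it uses nothing beyond the figures quoted above plus standard unit conversions, the names `TOTAL`, `PER_RESPONSE` and `response_equivalents` are my own, and it does not recover the training/inference split, since the aggregate already includes 18 months of usage.

```python
# Figures quoted in Mistral's report, converted to common units.
# The unit conversions are standard; the values are copied from the post.
TOTAL = {
    "co2e_g": 20.4 * 1e9,       # 20.4 ktCO2e -> grams
    "water_ml": 281_000 * 1e6,  # 281,000 m^3 -> millilitres
    "sb_eq_mg": 660 * 1e6,      # 660 kg Sb eq -> milligrams
}
PER_RESPONSE = {
    "co2e_g": 1.14,    # gCO2e per 400-token response
    "water_ml": 45.0,  # mL of water per 400-token response
    "sb_eq_mg": 0.16,  # mg Sb eq per 400-token response
}


def response_equivalents(total, per_response):
    """Number of 400-token responses that would sum to each aggregate figure."""
    return {key: total[key] / per_response[key] for key in total}


equivalents = response_equivalents(TOTAL, PER_RESPONSE)
for key, count in equivalents.items():
    print(f"{key}: ~{count / 1e9:.1f} billion responses")
```

The three ratios come out at roughly 18 billion, 6 billion and 4 billion responses, so there is no single "responses-equivalent" figure; the relationship between the aggregate and the marginal cost differs by indicator.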

I'd really like to see these numbers presented in context: what does 20,4 ktCO₂e actually mean? I'm not environmentally sophisticated enough to attempt an estimate myself. I tried running it through o3 (at an unknown cost in terms of CO₂ for that query), which estimated ~100 London to New York flights with 350 passengers, or around 5,100 US households for a year, but I have little confidence in the credibility of those numbers.
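One way to sanity-check comparisons like these is to back out the per-unit emission factors they imply, then compare those against a published source you trust. A minimal sketch, assuming the o3 figures are taken at face value (the variable names are mine, and I have not verified whether the flight figure is meant as one-way or return):

```python
# Aggregate figure from Mistral's report: 20.4 ktCO2e, expressed in tonnes.
TOTAL_TCO2E = 20.4 * 1000

# o3's comparison points, as quoted in the post (unverified).
FLIGHTS = 100
PASSENGERS_PER_FLIGHT = 350
HOUSEHOLDS = 5_100

# Emission factors implied by dividing the total by each comparison point.
per_flight_t = TOTAL_TCO2E / FLIGHTS
per_passenger_t = per_flight_t / PASSENGERS_PER_FLIGHT
per_household_t = TOTAL_TCO2E / HOUSEHOLDS

print(f"Implied tCO2e per flight:         {per_flight_t:.0f}")
print(f"Implied tCO2e per passenger:      {per_passenger_t:.2f}")
print(f"Implied tCO2e per household-year: {per_household_t:.1f}")
```

That works out to about 204 tCO₂e per flight, roughly 0.58 tCO₂e per passenger, and 4 tCO₂e per household per year. Whether those factors are reasonable is exactly the part I can't judge without a reference.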
