20th September 2025 - Link Blog

Grok 4 Fast. New hosted vision-enabled reasoning model from xAI that's designed to be fast and extremely competitive on price. It has a 2 million token context window and "was trained end-to-end with tool-use reinforcement learning".

It's priced at $0.20/million input tokens and $0.50/million output tokens - 15x less than Grok 4 (which is $3/million input and $15/million output). That puts it cheaper than GPT-5 mini and Gemini 2.5 Flash on llm-prices.com.

The same model weights handle reasoning and non-reasoning based on a parameter passed to the model.

I've been trying it out via my updated llm-openrouter plugin, since Grok 4 Fast is available for free on OpenRouter for a limited period.

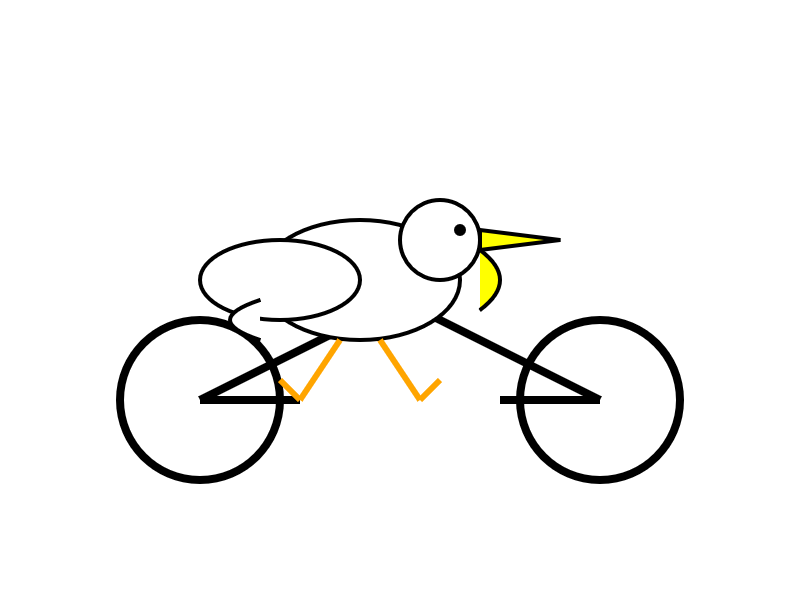

Here's output from the non-reasoning model. This actually output an invalid SVG - I had to make a tiny manual tweak to the XML to get it to render.

llm -m openrouter/x-ai/grok-4-fast:free "Generate an SVG of a pelican riding a bicycle" -o reasoning_enabled false

(I initially ran this without that -o reasoning_enabled false flag, but then I saw that OpenRouter enable reasoning by default for that model. Here's my previous invalid result.)

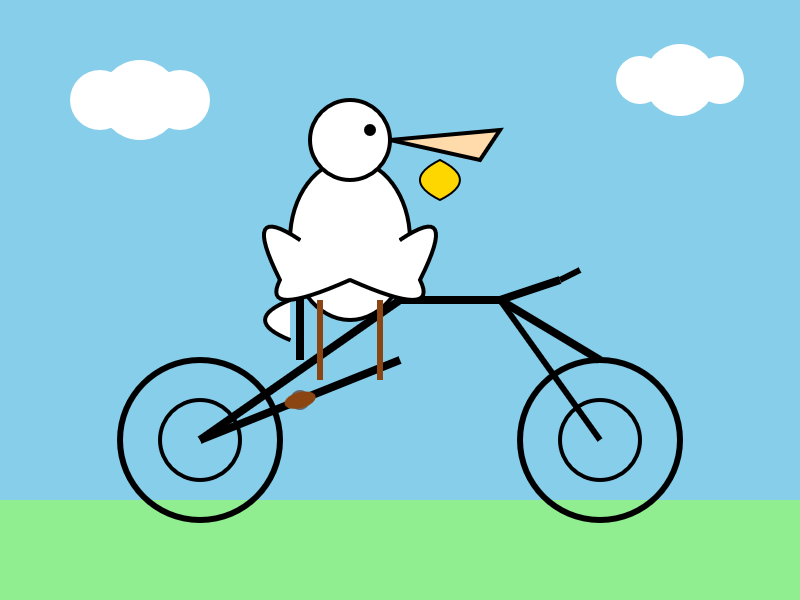

And the reasoning model:

llm -m openrouter/x-ai/grok-4-fast:free "Generate an SVG of a pelican riding a bicycle" -o reasoning_enabled true

In related news, the New York Times had a story a couple of days ago about Elon's recent focus on xAI: Since Leaving Washington, Elon Musk Has Been All In on His A.I. Company.

Recent articles

- I vibe coded my dream macOS presentation app - 25th February 2026

- Writing about Agentic Engineering Patterns - 23rd February 2026

- Adding TILs, releases, museums, tools and research to my blog - 20th February 2026