28th May 2025

Here's a quick demo of the kind of casual things I use LLMs for on a daily basis.

I just found out that Perplexity offer their Deep Research feature via their API, through a model called Sonar Deep Research.

Their documentation includes an example response, which includes this usage data in the JSON:

{"prompt_tokens": 19, "completion_tokens": 498, "total_tokens": 517, "citation_tokens": 10175, "num_search_queries": 48, "reasoning_tokens": 95305}

But how much would that actually cost?

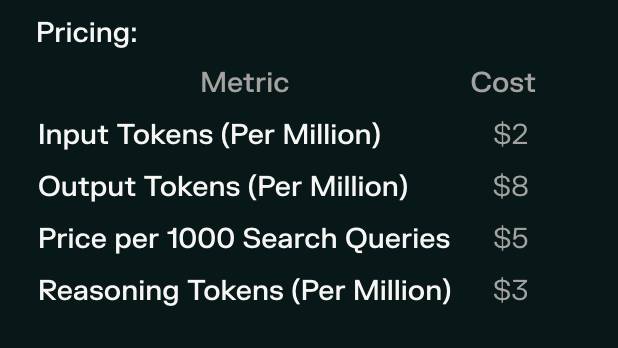

Their pricing page lists the price for that model. I snapped this screenshot of the prices:

I could break out a calculator at this point, but I'm not quite curious enough to go through the extra effort.

So I pasted that screenshot into Claude along with the JSON and prompted:

{"prompt_tokens": 19, "completion_tokens": 498, "total_tokens": 517, "citation_tokens": 10175, "num_search_queries": 48, "reasoning_tokens": 95305}

Calculate price, use javascript

I wanted to make sure Claude would use its JavaScript analysis tool, since LLMs can't do maths.

I watched Claude Sonnet 4 write 61 lines of JavaScript - keeping an eye on it to check it didn't do anything obviously wrong. The code spat out this output:

=== COST CALCULATIONS ===

Input tokens cost: 19 tokens × $2/million = $0.000038

Output tokens cost: 498 tokens × $8/million = $0.003984

Search queries cost: 48 queries × $5/1000 = $0.240000

Reasoning tokens cost: 95305 tokens × $3/million = $0.285915

=== COST SUMMARY ===

Input tokens: $0.000038

Output tokens: $0.003984

Search queries: $0.240000

Reasoning tokens: $0.285915

─────────────────────────

TOTAL COST: $0.529937

TOTAL COST: $0.5299 (rounded to 4 decimal places)

So that Deep Research API call would cost 53 cents! Curiosity satisfied in less than a minute.
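For reference, the arithmetic in that output fits in a few lines of JavaScript. This is a minimal sketch of my own, not the 61 lines Claude wrote. The rates are copied from the output above and assumed to match the screenshot, and the `usage`, `rates` and `costs` names are just illustrative. Note that `citation_tokens` is not priced here because the output above has no line for it.

```javascript
// Usage data copied from the example response.
const usage = {
  prompt_tokens: 19,
  completion_tokens: 498,
  total_tokens: 517,
  citation_tokens: 10175, // not priced below: the output above has no line for it
  num_search_queries: 48,
  reasoning_tokens: 95305,
};

// Rates in US dollars, as they appear in the output above (assumption:
// these match the pricing screenshot).
const rates = {
  inputPerMillion: 2,
  outputPerMillion: 8,
  reasoningPerMillion: 3,
  searchPerThousand: 5,
};

// One entry per line item in the cost summary.
const costs = {
  input: (usage.prompt_tokens * rates.inputPerMillion) / 1e6,
  output: (usage.completion_tokens * rates.outputPerMillion) / 1e6,
  search: (usage.num_search_queries * rates.searchPerThousand) / 1e3,
  reasoning: (usage.reasoning_tokens * rates.reasoningPerMillion) / 1e6,
};

const total = Object.values(costs).reduce((sum, cost) => sum + cost, 0);

for (const [name, cost] of Object.entries(costs)) {
  console.log(`${name}: $${cost.toFixed(6)}`);
}
console.log(`total: $${total.toFixed(6)}`); // total: $0.529937
```

Search queries and reasoning tokens account for almost all of the total. The prompt and completion tokens add less than half a cent.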
