Trying out llama.cpp’s new vision support

10th May 2025

This llama.cpp server vision support via libmtmd pull request—via Hacker News—was merged earlier today. The PR finally adds full support for vision models to the excellent llama.cpp project. It’s documented on this page, but the more detailed technical details are covered here. Here are my notes on getting it working on a Mac.

llama.cpp models are usually distributed as .gguf files. This project introduces a new variant of those called mmproj, for multimodal projector. libmtmd is the new library for handling these.

You can try it out by compiling llama.cpp from source, but I found another option that works: you can download pre-compiled binaries from the GitHub releases.

On macOS there’s an extra step to jump through to get these working, which I’ll describe below.

Update: it turns out the Homebrew package for llama.cpp turns things around extremely quickly. You can run brew install llama.cpp or brew upgrade llama.cpp and start running the below tools without any extra steps.

I downloaded the llama-b5332-bin-macos-arm64.zip file from this GitHub release and unzipped it, which created a build/bin directory.

That directory contains a bunch of binary executables and a whole lot of .dylib files. macOS wouldn’t let me execute these files because they were quarantined. Running this command fixed that for the llama-mtmd-cli and llama-server executables and the .dylib files they needed:

sudo xattr -rd com.apple.quarantine llama-server llama-mtmd-cli *.dylib

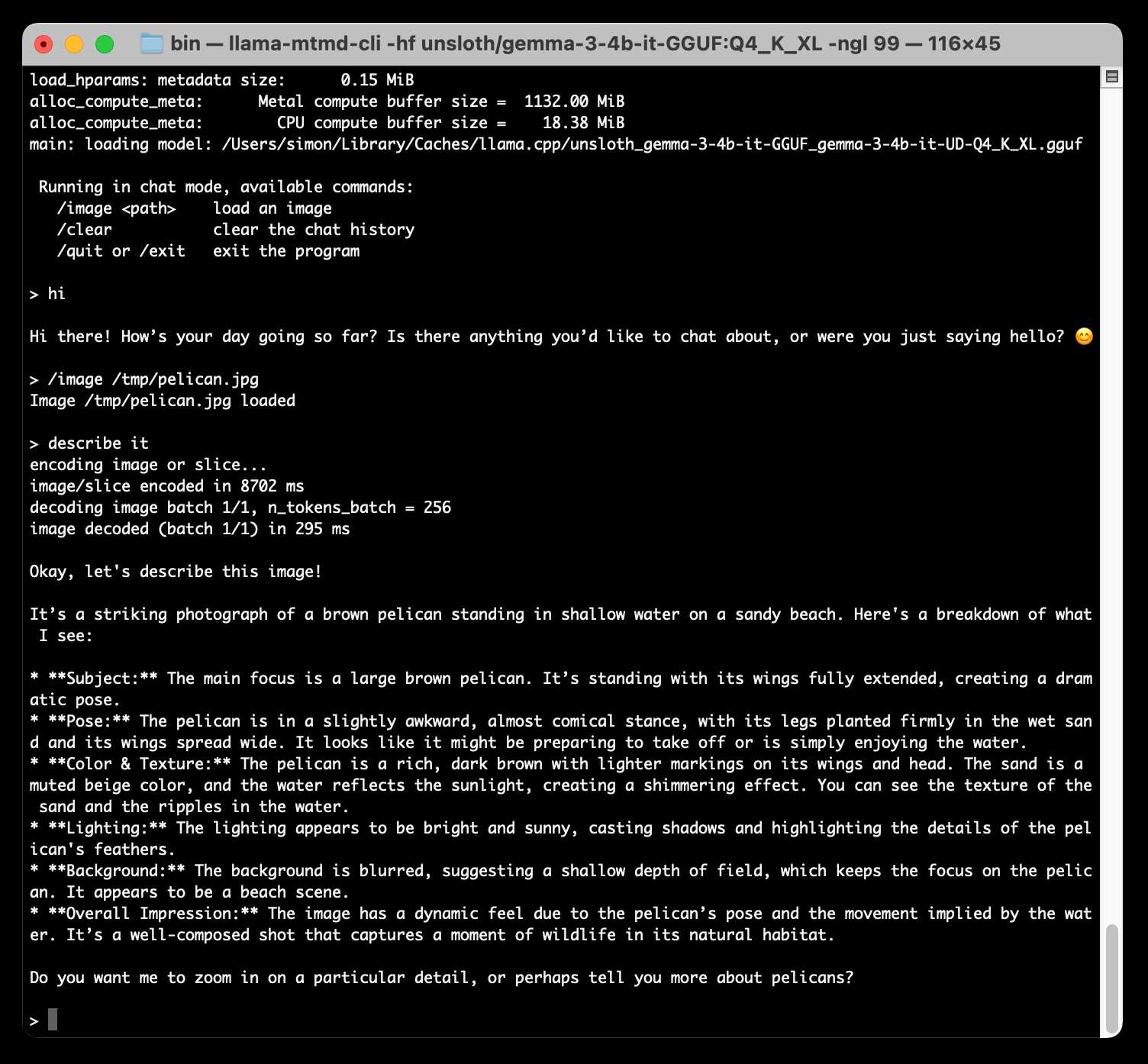

Now I can run an interactive terminal LLM session using this command:

./llama-mtmd-cli -hf unsloth/gemma-3-4b-it-GGUF:Q4_K_XLThe first time this runs it fetches four files from unsloth/gemma-3-4b-it-GGUF—a quantized copy of Google DeepMind’s Gemma 3 4B—and stores them in ~/Library/Caches/llama.cpp:

2.4G unsloth_gemma-3-4b-it-GGUF_gemma-3-4b-it-UD-Q4_K_XL.gguf

217B unsloth_gemma-3-4b-it-GGUF_gemma-3-4b-it-UD-Q4_K_XL.gguf.json

812M unsloth_gemma-3-4b-it-GGUF_mmproj-F16.gguf

203B unsloth_gemma-3-4b-it-GGUF_mmproj-F16.gguf.json

Once they’ve downloaded an interactive session starts. You can chat directly with the model or use the /image path-to-image.jpg command to load an image into the context.

I loaded in a pelican photo and prompted “describe it” and got back this:

It’s a striking photograph of a brown pelican standing in shallow water on a sandy beach. Here’s a breakdown of what I see:

Subject: The main focus is a large brown pelican. It’s standing with its wings fully extended, creating a dramatic pose.

Pose: The pelican is in a slightly awkward, almost comical stance, with its legs planted firmly in the wet sand and its wings spread wide. It looks like it might be preparing to take off or is simply enjoying the water.

Color & Texture: The pelican is a rich, dark brown with lighter markings on its wings and head. The sand is a muted beige color, and the water reflects the sunlight, creating a shimmering effect. You can see the texture of the sand and the ripples in the water.

Lighting: The lighting appears to be bright and sunny, casting shadows and highlighting the details of the pelican’s feathers.

Background: The background is blurred, suggesting a shallow depth of field, which keeps the focus on the pelican. It appears to be a beach scene.

Overall Impression: The image has a dynamic feel due to the pelican’s pose and the movement implied by the water. It’s a well-composed shot that captures a moment of wildlife in its natural habitat.

Do you want me to zoom in on a particular detail, or perhaps tell you more about pelicans?

Not bad for a 3.2GB model running on my laptop!

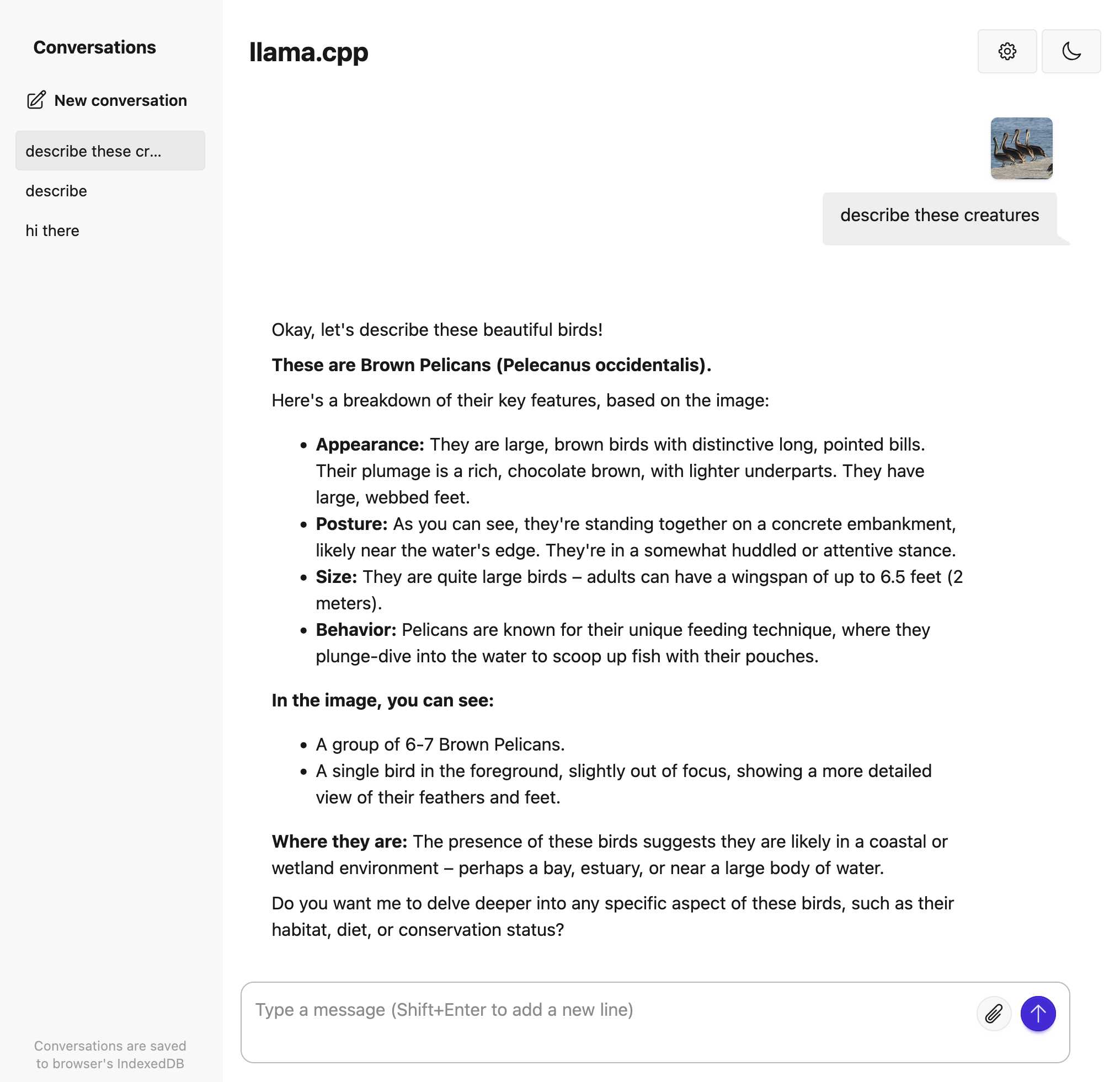

Running llama-server

Even more fun is the llama-server command. This starts a localhost web server running on port 8080 to serve the model, with both a web UI and an OpenAI-compatible API endpoint.

The command to run it is the same:

./llama-server -hf unsloth/gemma-3-4b-it-GGUF:Q4_K_XLNow visit http://localhost:8080 in your browser to start interacting with the model:

It miscounted the pelicans in the group photo, but again, this is a tiny 3.2GB model.

With the server running on port 8080 you can also access the OpenAI-compatible API endpoint. Here’s how to do that using curl:

curl -X POST http://localhost:8080/v1/chat/completions \

-H "Content-Type: application/json" \

-d '{

"messages": [

{"role": "user", "content": "Describe a pelicans ideal corporate retreat"}

]

}' | jqI built a new plugin for LLM just now called llm-llama-server to make interacting with this API more convenient. You can use that like this:

llm install llm-llama-server

llm -m llama-server 'invent a theme park ride for a pelican'Or for vision models use llama-server-vision:

llm -m llama-server-vision 'describe this image' -a https://static.simonwillison.net/static/2025/pelican-group.jpgThe LLM plugin uses the streaming API, so responses will stream back to you as they are being generated.

More recent articles

- Adding TILs, releases, museums, tools and research to my blog - 20th February 2026

- Two new Showboat tools: Chartroom and datasette-showboat - 17th February 2026

- Deep Blue - 15th February 2026