Monday, 16th June 2025

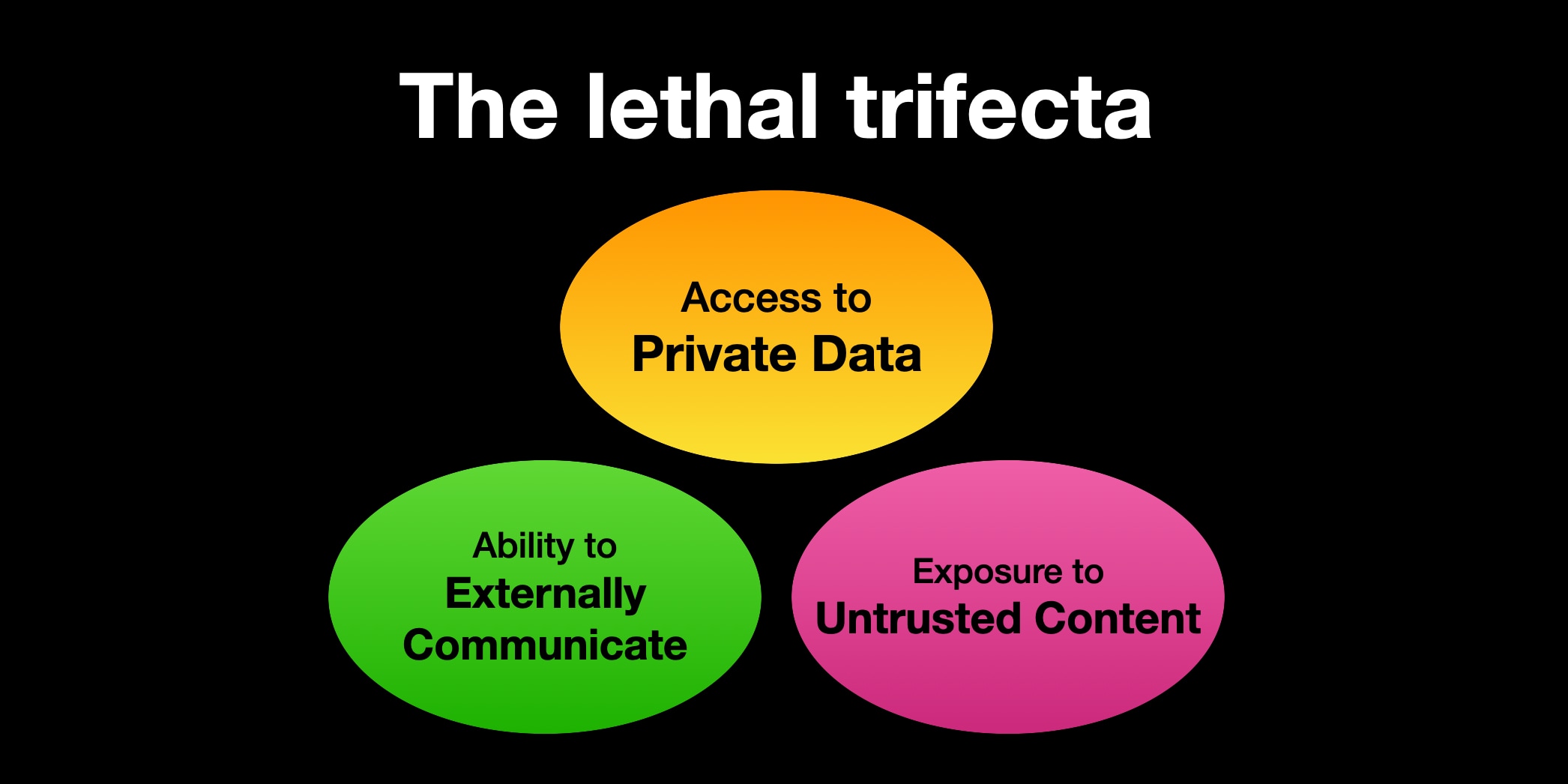

The lethal trifecta for AI agents: private data, untrusted content, and external communication

If you are a user of LLM systems that use tools (you can call them “AI agents” if you like) it is critically important that you understand the risk of combining tools with the following three characteristics. Failing to understand this can let an attacker steal your data.
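One way to make the combination concrete is to tag each tool with the three characteristics named in the title and check whether a given toolset covers all of them. This is only a minimal sketch: the `Tool` class, its three boolean flags, and `has_lethal_trifecta` are hypothetical names for illustration and do not come from any real agent framework.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Tool:
    """A hypothetical description of one tool available to an agent."""
    name: str
    reads_private_data: bool = False
    ingests_untrusted_content: bool = False
    communicates_externally: bool = False


def has_lethal_trifecta(tools: Iterable[Tool]) -> bool:
    """Return True if the toolset as a whole covers all three characteristics.

    The characteristics do not need to live in one tool: the risk comes
    from the combination being available to the same agent.
    """
    tools = list(tools)
    return (
        any(t.reads_private_data for t in tools)
        and any(t.ingests_untrusted_content for t in tools)
        and any(t.communicates_externally for t in tools)
    )


# Illustrative toolset: reading email touches private data and also
# ingests content written by strangers; an HTTP tool can send data out.
toolset = [
    Tool("read_email", reads_private_data=True, ingests_untrusted_content=True),
    Tool("http_post", communicates_externally=True),
]
print(has_lethal_trifecta(toolset))
```

A check like this only flags the risky combination. It does nothing to detect or block an attack.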

[... 1,324 words]

In conversation with our investors and the board, we believed that the best way forward was to shut down the company [Dark, Inc], as it was clear that an 8 year old product with no traction was not going to attract new investment. In our discussions, we agreed that continuity of the product [Darklang] was in the best interest of the users and the community (and of both founders and investors, who do not enjoy being blamed for shutting down tools they can no longer afford to run), and we agreed that this could best be achieved by selling it to the employees.

— Paul Biggar, Goodbye Dark Inc. - Hello Darklang Inc.

Cloudflare Project Galileo. I only just heard about this Cloudflare initiative, though it's been around for more than a decade:

If you are an organization working in human rights, civil society, journalism, or democracy, you can apply for Project Galileo to get free cyber security protection from Cloudflare.

It's effectively free denial-of-service protection for vulnerable targets among civil rights and public interest groups.

Last week they published "Celebrating 11 years of Project Galileo’s global impact", with some noteworthy numbers:

Journalists and news organizations experienced the highest volume of attacks, with over 97 billion requests blocked as potential threats across 315 different organizations. [...]

Cloudflare onboarded the Belarusian Investigative Center, an independent journalism organization, on September 27, 2024, while it was already under attack. A major application-layer DDoS attack followed on September 28, generating over 28 billion requests in a single day.

Every time I get into an online conversation about prompt injection it's inevitable that someone will argue that a mitigation which works 99% of the time is still worthwhile because there's no such thing as a security fix that is 100% guaranteed to work.

I don't think that's true.

If I use parameterized SQL queries my systems are 100% protected against SQL injection attacks.

If I make a mistake applying those and someone reports it to me I can fix that mistake and now I'm back up to 100%.
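To make that mistake-and-fix cycle concrete, here is a minimal sketch using Python's standard `sqlite3` module. The `users` table, the function names, and the payload are assumptions chosen for illustration.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a small, made-up users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # The mistake: user input is spliced into the SQL text itself,
    # so a crafted value can change the structure of the query.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # The fix: the SQL text is constant and the value is bound through
    # a `?` placeholder, so it is only ever treated as data.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_demo_db()
payload = "nobody' OR '1'='1"

# The concatenated query becomes ... WHERE name = 'nobody' OR '1'='1'
# and matches every row in the table.
print(len(find_user_unsafe(conn, payload)))

# The parameterized query looks for a user literally named
# "nobody' OR '1'='1" and finds none.
print(find_user_safe(conn, payload))
```

Replacing the first function with the second is the kind of fix described above: once every query binds its values this way, the input can no longer alter the query.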

If our measures against SQL injection were only 99% effective none of our digital activities involving relational databases would be safe.

I don't think it is unreasonable to want a security fix that, when applied correctly, works 100% of the time.

(I first argued a version of this back in September 2022 in "You can’t solve AI security problems with more AI".)